
Speed Up Insights: Leveraging Motorsport Telemetry Data

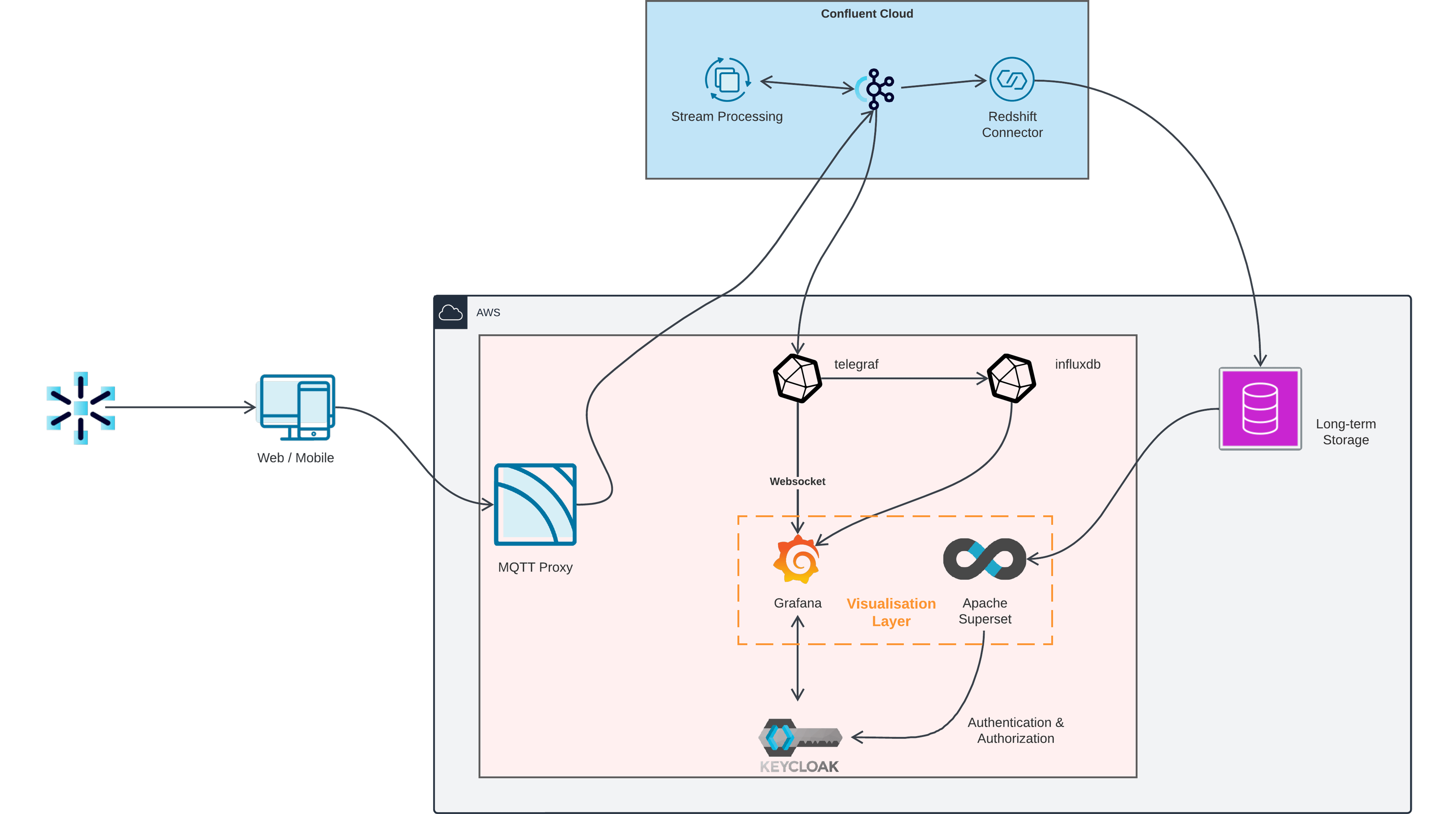

Data Reply GmbH developed an accelerator that efficiently ingests, processes, and stores real-time telemetry data from the F1 2022 game. The solution visualizes the data in real time through dashboards and stores it permanently for historical queries and analysis. Built on modern streaming technologies, the accelerator operates at near-zero latency. It also shows how a versatile accelerator can be built, and it serves as a well-tested template that speeds up the development and deployment of commercial projects.

Unlock Real-Time Insights with Cutting-Edge Telemetry Technology

The "Speed Up Insights: Leveraging Motorsport Telemetry Data" project uses Confluent Cloud to process, analyze, and visualize real-time telemetry from the F1 2022 game on the PS4. The telemetry platform supports data-driven decision-making and performance optimization.

The project delivers a scalable, versatile telemetry platform that turns real-time data into strategic assets, driving performance improvements and providing a competitive advantage.

Because data is ingested into Kafka and processed as a stream, the platform delivers near-instant insights into driver performance and vehicle telemetry, enabling immediate feedback and adjustments.

The integration with Telegraf and Grafana provides dynamic, real-time dashboards with clear, actionable visualizations of ongoing race conditions and metrics. At the same time, long-term storage in Redshift and data exploration in Apache Superset let users perform deep historical analysis, uncovering trends and patterns that inform strategic decisions.

Confluent Cloud's cloud-native architecture lets the platform scale to varying data volumes and velocities while maintaining low latency and high reliability, which is crucial given the demanding nature of real-time telemetry.

By combining real-time processing with robust long-term storage, the project creates a holistic data ecosystem. It supports both immediate operational insights and extensive historical analysis, serving short-term tactical adjustments as well as long-term strategic planning.

Rapidly converting raw telemetry into valuable insights gives users a competitive edge in the high-stakes world of motorsport, where every millisecond counts.

Build with Confluent

This use case leverages the following building blocks in Confluent Cloud:

Reference Architecture

- Telemetry data is generated by the F1 2022 game running on a PS4 and sent over the local network (UDP) to a specific port.

- A simple TypeScript application running on a laptop listens on that port, converts the data from binary to JSON, and sends it to our MQTT proxy.

- The MQTT Proxy (a Confluent Platform component) receives the data and forwards it to the Kafka cluster running on Confluent Cloud.

- A stream processing query converts the data to Avro and extracts nested fields.
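The binary-to-JSON step performed by the TypeScript application can be sketched roughly as follows. This is a minimal illustration, not Data Reply's code: it assumes the 24-byte, little-endian packet header layout as we understand it from the F1 2022 UDP telemetry specification (verify the offsets against the official spec), and the names `PacketHeader`, `decodeHeader`, and `headerToJson` are hypothetical. It decodes only the header; the packet bodies, the UDP socket, and the MQTT publishing are left out.

```typescript
// Hypothetical sketch: decode the fixed-size header that (as we understand
// the F1 2022 UDP spec) prefixes every telemetry packet. Offsets are assumptions.
interface PacketHeader {
  packetFormat: number;            // e.g. 2022
  gameMajorVersion: number;
  gameMinorVersion: number;
  packetVersion: number;
  packetId: number;                // identifies which packet body follows
  sessionUID: string;              // uint64 rendered as hex to avoid BigInt
  sessionTime: number;             // float32 session timestamp
  frameIdentifier: number;
  playerCarIndex: number;
  secondaryPlayerCarIndex: number;
}

const HEADER_SIZE = 24; // 2 + 1 + 1 + 1 + 1 + 8 + 4 + 4 + 1 + 1 bytes

// Zero-pad a uint32 to 8 hex digits without relying on String.padStart.
function toHex32(n: number): string {
  return ("00000000" + n.toString(16)).slice(-8);
}

function decodeHeader(bytes: Uint8Array): PacketHeader {
  if (bytes.byteLength < HEADER_SIZE) {
    throw new Error(`packet too short: ${bytes.byteLength} bytes`);
  }
  // Respect byteOffset so views into larger buffers decode correctly.
  const view = new DataView(bytes.buffer, bytes.byteOffset, bytes.byteLength);
  const le = true; // values are assumed to be little-endian
  // Read the uint64 session UID as two uint32 halves (low word first).
  const uidLow = view.getUint32(6, le);
  const uidHigh = view.getUint32(10, le);
  return {
    packetFormat: view.getUint16(0, le),
    gameMajorVersion: view.getUint8(2),
    gameMinorVersion: view.getUint8(3),
    packetVersion: view.getUint8(4),
    packetId: view.getUint8(5),
    sessionUID: toHex32(uidHigh) + toHex32(uidLow),
    sessionTime: view.getFloat32(14, le),
    frameIdentifier: view.getUint32(18, le),
    playerCarIndex: view.getUint8(22),
    secondaryPlayerCarIndex: view.getUint8(23),
  };
}

// The JSON string that would then be published to the MQTT proxy.
function headerToJson(bytes: Uint8Array): string {
  return JSON.stringify(decodeHeader(bytes));
}
```

In Node.js, a `dgram` socket's `message` handler receives a `Buffer`, which is a `Uint8Array` subclass, so it can be passed to `decodeHeader` directly; the decoded `packetId` then tells the application which body layout to parse next.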

Telegraf consumes the data from the raw JSON topics and sends it to two outputs:

- Directly to Grafana (via WebSocket): we send only the small subset of data that we want to show in real time.

- InfluxDB: we send all the data. Here we accept near-real-time latency in exchange for being able to perform further processing and aggregation. InfluxDB is configured as a data source in Grafana.
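Telegraf performs this serialization itself, so the following is only an illustration of what the two outputs carry. It assumes both are written as InfluxDB line protocol (`measurement,tag=value field=value timestamp`) and that the real-time output is a whitelist of fields; the helpers `toLineProtocol` and `pickFields`, and the example field names, are hypothetical.

```typescript
// Hypothetical sketch: serialize a flat telemetry record as InfluxDB line
// protocol, once with all fields (InfluxDB) and once with a subset (live view).
type FieldValue = number | boolean | string;

// Tag keys, tag values and field keys escape commas, equals signs and spaces.
function escapeKey(s: string): string {
  return s.replace(/[,= ]/g, (c) => "\\" + c);
}

// Measurement names escape commas and spaces.
function escapeMeasurement(s: string): string {
  return s.replace(/[, ]/g, (c) => "\\" + c);
}

function formatValue(v: FieldValue): string {
  if (typeof v === "string") {
    // String fields are double-quoted; escape backslashes and quotes.
    return '"' + v.replace(/[\\"]/g, (c) => "\\" + c) + '"';
  }
  if (typeof v === "number" && !isFinite(v)) {
    throw new Error("line protocol cannot represent NaN or Infinity");
  }
  // Numbers without an "i" suffix are stored as floats; booleans as true/false.
  return String(v);
}

function toLineProtocol(
  measurement: string,
  tags: Record<string, string>,
  fields: Record<string, FieldValue>,
  timestampNs?: string
): string {
  const tagPart = Object.keys(tags)
    .sort() // sorting tags by key is recommended for write performance
    .map((k) => `,${escapeKey(k)}=${escapeKey(tags[k])}`)
    .join("");
  const fieldPart = Object.keys(fields)
    .map((k) => `${escapeKey(k)}=${formatValue(fields[k])}`)
    .join(",");
  if (fieldPart === "") {
    throw new Error("line protocol requires at least one field");
  }
  const ts = timestampNs ? ` ${timestampNs}` : "";
  return `${escapeMeasurement(measurement)}${tagPart} ${fieldPart}${ts}`;
}

// Keep only the fields meant for the real-time dashboard; missing keys are skipped.
function pickFields(
  fields: Record<string, FieldValue>,
  keep: string[]
): Record<string, FieldValue> {
  const out: Record<string, FieldValue> = {};
  for (const k of keep) {
    if (k in fields) out[k] = fields[k];
  }
  return out;
}
```

Sending the full record to InfluxDB and only `pickFields(...)` to the live path keeps the WebSocket stream small, which matches the trade-off described above: low latency for a few metrics, complete data for later aggregation.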

- A Redshift sink Kafka connector, also running on Confluent Cloud, moves our Avro data to Amazon Redshift.

- A Superset instance uses Redshift as a data source, where we run our queries and create dashboards.

- Keycloak is used for authentication and authorization.

Resources

Contact Us

Contact Data Reply GmbH to learn more about this use case and get started.

Alberto Suman

Big Data Engineer

Sergio Spinatelli

Associate Partner

Alex Piermatteo

Associate Partner