What is Data Serialization?

Whether you're building an application that needs to communicate with a database, developing an API to deliver data to multiple clients, or working on big data processing pipelines, understanding data serialization is important for ensuring efficient and reliable data exchange.

Data serialization is a crucial concept in modern software development and data engineering: it converts data objects into a format that can be stored or transmitted efficiently. The serialized data can then be sent to other data stores or applications for various use cases.

We'll cover what data serialization is, how it works, its benefits, and languages that can be used for data serialization.

The Definition of Data Serialization

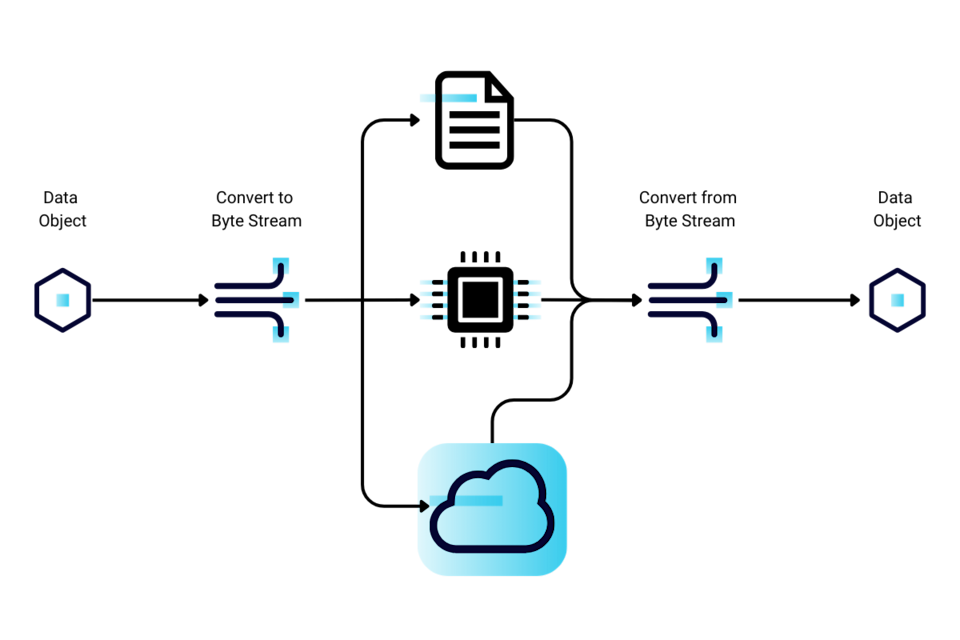

Data serialization can be defined as the process of converting data objects to a sequence of bytes or characters to preserve their structure in an easily storable and transmittable format.

The benefit of data serialization is that the serialized form of your data object, which holds its primitive values in a structured layout, can be encoded in a specific format so that it can be stored or transmitted. The reverse of this process is called deserialization: it reconstructs the original data object from its serialized form.

To summarize, serialization allows complex data to be converted into a shareable or storable linear sequence of bytes or characters.
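As a minimal sketch of that round trip, the snippet below uses Python's standard json module. The event dictionary and its field names are hypothetical, chosen only for illustration.

```python
import json

# Hypothetical in-memory object: a nested dict standing in for a player event.
event = {"player_id": 1001, "game_room_id": 42, "points": 350, "tags": ["new", "mobile"]}

# Serialize: object -> JSON text -> UTF-8 bytes, ready to store or transmit.
payload = json.dumps(event).encode("utf-8")

# Deserialize: bytes -> JSON text -> an equivalent Python object.
restored = json.loads(payload.decode("utf-8"))

print(type(payload).__name__)  # bytes
print(restored == event)       # True
```

The restored object is equal to the original but is a new object, which is what lets it be rebuilt on another machine or in another process.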

What is Serialized Data?

Data serialization results in serialized data. Computer data usually comes as primitive values (numbers, strings, and booleans), complex values (objects, arrays, structs, and sets), or data objects (class and struct instances and other composite data types).

Serialized data is thus data that has been converted into a format that is parseable and optimized for efficient storage or transmission. It typically uses a standardized, platform-independent format, such as JSON, XML, Avro, Protobuf, or a custom binary encoding, so it can be read and written on different operating systems using various programming languages.

Let's look at an example:

A real-world use case is converting a topic serialized in Avro format into a topic serialized in the JSON Schema Registry format with Confluent Cloud for Apache Flink. The steps below outline the process. In this scenario, a Datagen Source Connector produces mock gaming player activity data to a Kafka topic named "gaming_player_activity_source."

- First, search for “datagen” connectors within your Flink SQL environment of the Confluent Cloud Console.

- Select Sample Data Connector and head over to the Advanced settings.

- Within the Advanced settings:

  - Create a new topic named “gaming_player_activity_source.”

  - Using the Global Kafka credential option, generate an API key and download it.

  - Configure the connector by selecting AVRO as the output record value format, which associates a schema with the topic and registers it with the Schema Registry.

  - Choose the Gaming player activity template, set the max interval between messages to 10ms, and set the connector sizing to the default of 1 task.

- Start your "gaming_player_activity_source_connector" connector. Once its status changes from Provisioning to Running, it sends the event stream to the topic.

- Open an SQL shell within your environment’s Flink workspace from the Confluent CLI to inspect the source data.

- Next, convert the serialization format to JSON by creating a new table, “gaming_player_activity_source_json” with the same schema but with “value.format” set to “json-registry.”

- When finished, stop the long-running INSERT INTO statement that converts Avro records to JSON format, and then delete it.

For a detailed walkthrough of this scenario, see our guide, Convert the Serialization Format of a Topic with Confluent Cloud for Apache Flink. The how-to section of our documentation also has several guides for learning more about Confluent Cloud for Apache Flink.

How Data Serialization Works

Data serialization uses a serializer to convert data objects into byte streams containing the object information. After serialization, the data is saved in a standardized, platform-independent format such as JSON, XML, Avro, Protobuf, or a binary encoding.

However, it is not this straightforward for complex data structures. In that case, serializers encode and flatten the data while preserving information such as element order and the references between objects before storing it. Keeping track of those references is known as reference tracking.
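Reference tracking is easiest to see by comparing a serializer that does it with one that does not. The sketch below uses Python's standard pickle and json modules; the state dictionary is a hypothetical example in which two keys point at the same list.

```python
import json
import pickle

shared = ["sword", "shield"]
# Two keys refer to the *same* list object.
state = {"inventory": shared, "backup": shared}

# pickle remembers objects it has already written, so the shared reference survives.
via_pickle = pickle.loads(pickle.dumps(state))

# JSON writes the list out twice, so the two lists come back as independent copies.
via_json = json.loads(json.dumps(state))

pickle_keeps_identity = via_pickle["inventory"] is via_pickle["backup"]
json_keeps_identity = via_json["inventory"] is via_json["backup"]
print(pickle_keeps_identity, json_keeps_identity)  # True False
```

Both results hold the same values; only the pickle round trip preserves the fact that the two keys shared one object.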

Data serialization languages are some examples of serializers.

Why Data Serialization Matters

Here are some common benefits of data serialization:

It saves the state of your data object so that it can be recreated later. This comes in handy when working with data behind a firewall or transferring user-specific information or objects across domains and applications.

It is well suited to storing data efficiently at large scale, as compact serialized formats can take up less storage space.

It enhances interoperability and facilitates seamless data exchange across different applications, networks, and services in a way that the application, network, and services understand.

It enables faster data transfer and reduced latency, as compact serialized data can be transmitted quickly and efficiently over networks.

It provides flexibility and independence, as serialized data can be shared across different programming languages and platforms.
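How much space and bandwidth serialization saves depends on the format chosen. As a rough illustration, the sketch below encodes the same record as JSON text and as a fixed binary layout using Python's standard struct module. The record fields and the three-integer layout are assumptions made for this example, not a format defined by any product mentioned here.

```python
import json
import struct

# Hypothetical record with three small integer fields.
record = {"player_id": 1001, "game_room_id": 42, "points": 350}

# Text encoding: the field names are repeated inside every record.
as_json = json.dumps(record).encode("utf-8")

# Binary encoding: three little-endian unsigned 32-bit integers in a fixed order.
# The field names live in the agreed layout rather than in the payload.
LAYOUT = "<III"
as_binary = struct.pack(LAYOUT, record["player_id"], record["game_room_id"], record["points"])

# Decoding requires knowing the same layout on the receiving side.
player_id, game_room_id, points = struct.unpack(LAYOUT, as_binary)

print(len(as_binary), "bytes as binary,", len(as_json), "bytes as JSON")
```

The binary form is smaller, but the reader must already know the layout. Schema-based formats such as Avro and Protobuf make that trade-off manageable by keeping the layout in a shared schema.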

Which Languages are Used for Data Serialization?

A data serialization language is a protocol, module, or library that converts data between its native and serialized formats.

Technically, programming languages don’t serialize or deserialize data themselves. Instead, serialization is handled by a standard-library module or a third-party library or framework available for each language.

A few examples include:

Java

Through Gson, the Serializable interface and ObjectOutputStream class

Python

Through the built-in pickle and json modules and external libraries such as PyYAML and msgpack

Go

Through JSON, gob and Protocol Buffers

Node.js

Through JSON, BSON, and Protocol Buffers

C++

Through libraries like Boost.Serialization, cereal, and Google's Protocol Buffers

.NET

Through the System.Runtime.Serialization namespace, JSON.NET and Protocol Buffers
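To illustrate how a library, rather than the language itself, does the work, the sketch below serializes an instance of a user-defined class with Python's standard json and dataclasses modules. The Player class is hypothetical and exists only for this example.

```python
import json
from dataclasses import asdict, dataclass


@dataclass
class Player:  # hypothetical type, for illustration only
    player_id: int
    name: str


player = Player(player_id=7, name="Ada")

# json only understands basic types, so the instance is first converted to a dict.
text = json.dumps(asdict(player))

# On the way back, the library returns a dict and the instance is rebuilt explicitly.
from_json = Player(**json.loads(text))

print(text)
print(from_json == player)  # True
```

The mapping between the class and its serialized form is explicit here. Libraries such as Gson or Protocol Buffers generate or infer that mapping instead.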

Use Cases and Examples

Data Integration

Serialization keeps data from various sources in a consistent format as it is transformed and moved between systems.

Big Data Processing

Data serialization gives users a more compact and efficient way to store and process big data.

Cloud Data Storage

Various applications use serialization to store data.

Real-Time Analytics

To process data faster for real-time analysis, most applications serialize data.

Network Communication

Data is often serialized for efficient transmission between clients and servers or to third-party APIs, for reliable asynchronous communication, and for real-time communication.

Microservices Architecture

Data serialization supports efficient communication between microservices by providing a consistent data format and compact transmission. This is important for inter-service communication, event-driven architecture, and data storage.

Cache Management

Serialization makes it possible to store frequently accessed data in in-memory data grids, distributed caches, content delivery networks (CDNs), and application-level caches.

Why Confluent?

Confluent is designed to help your organization manage, process, and integrate your data in real time. Data serialization is one way we can assist you.

We can advance your data integration with the following features:

Efficient, seamless, and consistent data exchange

Confluent's clients and connectors are designed to handle serialization and deserialization of large volumes of data to ensure an efficient data exchange between systems.

Diverse data format support

Regardless of the format you need, we've got you covered. Confluent supports various data formats from Avro and JSON to Protobuf.

Kafka serialization

Since Confluent is built on top of Apache Kafka, our Kafka clients provide built-in serialization capabilities. This allows your developers to serialize data into Kafka topics easily.

Real-time stream processing

Confluent's ksqlDB, Flink, and Kafka Streams enable stream processing, serializing and deserializing data in real time for processing and analysis.

Schema registry

Confluent provides a Schema Registry, enabling schema management and evolution for serialized data.

Scalability and integration

Confluent's distributed architecture and fully managed connectors enable you to integrate with various data sources while enjoying scalable data serialization and processing.