Reduce Your Data Infrastructure TCO with Confluent’s New Splunk S2S Source Premium Connector

Data is at the center of our world today, especially with the ever-increasing amount of machine-generated log data collected from applications, devices, and sensors from almost every modern technology. The companies that are able to harness the power of this raw data are more efficient, profitable, innovative, and secure.

Splunk’s data platform helps collect, search, analyze, and monitor log data at massive scale, thus enabling better identification of security threats, improved observability into applications and infrastructure, and better intelligence for business operations. Splunk helps drive visibility across disparate systems and processes to prevent outages, remediate issues, and identify potential threats.

However, although Splunk is a best-in-class data platform for certain use cases like security information and event management (SIEM) and observability, it’s neither optimal nor cost-efficient to send all low-value log data across an organization into the platform. In addition, although Splunk has a few mechanisms in place to export raw data, it’s primarily designed to ingest large quantities of data for log archival and analysis, not to stream that data to other downstream systems and applications in great volume.

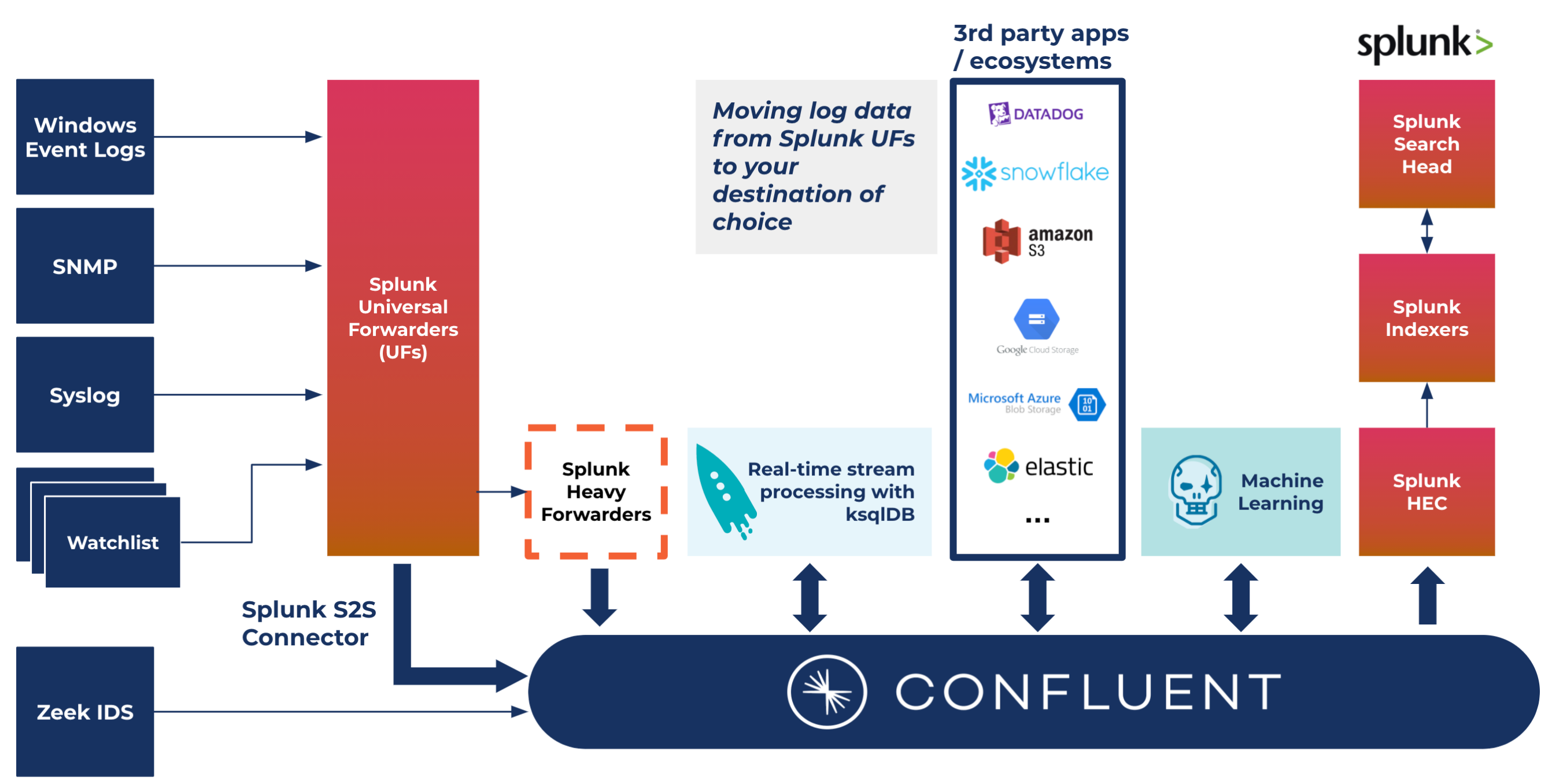

Today, we are excited to announce the release and general availability (GA) of Confluent’s premium Splunk S2S Source Connector. This pre-built, expert-designed premium connector enables you to cost-effectively and efficiently send log data to Splunk and other downstream applications and data systems. By leveraging Confluent’s Splunk S2S Source Connector alongside other Confluent components, including 120+ connectors to other modern apps and data systems, you now have greater flexibility and freedom of choice to send enriched log data to the downstream systems that align best with your business needs.

Moreover, the value of bringing the data into Confluent goes beyond just filtering and routing. It can be enriched by Splunk analysts with ksqlDB and stream processing to build real-time applications. You can enable key use cases like SIEM, SOAR (security orchestration, automation, and response), data synchronization, and real-time analytics that power new and improved log management, anomaly/fraud detection, machine learning, and more.

The Splunk S2S Source Connector delivers three primary benefits:

- Reduced data infrastructure TCO by filtering the amount of low-value data ingested by the platform and reducing your infrastructure footprint. This also enables you to scale the collection of log data and avoid data ingest limitations.

- Enhanced data accessibility, portability, and interoperability by integrating Splunk data with Confluent and unlocking its use by other applications and data systems. By sending the data to Confluent first, you can collect, transform, filter, and clean log data before sending that enriched data to the best tools for analyzing it, which maximizes vendor and cost flexibility.

- Savings of ~12-24+ engineering months from designing, building, testing, and maintaining a custom Splunk source connector, and avoiding the associated technical debt. This frees up precious development resources, allowing your teams to instead focus on value-added activities such as building real-time applications that drive the business forward.

The Splunk S2S connector enables customers to cost-effectively and reliably integrate Splunk data with Confluent, significantly reducing data infrastructure TCO while improving data accessibility and portability.

Using the Splunk S2S Source Premium Connector

Let’s now look at how you can read data directly from Splunk Universal Forwarders with Confluent’s self-managed connector for the Splunk S2S Source and send it to Confluent Platform or Confluent Cloud (self-managed connectors can run on a Kafka cluster in the cloud). While this demo focuses on reading data from Splunk Universal Forwarders, Confluent also has sink connectors, which help you write data to other destinations like Elasticsearch, Snowflake, S3/HDFS, or back into Splunk.

Demo scenario

Let’s imagine you work at SweetMoney, a large financial services enterprise whose many applications store almost all of the data related to the business. You are an engineer or architect on the new security data analysis team, which is charged with rapidly detecting and responding to advanced cybersecurity threats across the enterprise. The team wants to leverage data in motion to rapidly detect vulnerabilities and malicious behavior while scaling across the entire cybersecurity data landscape without increasing costs. However, there are a few key challenges:

- Reading unprocessed data directly from the Splunk Universal Forwarder

- Enriching, filtering, and analyzing data before sending it to downstream systems

- Reading data from different sources and input formats

Setting up the connector

Download and install the connector from Confluent Hub.

To install the connector using confluent-hub:

confluent-hub install confluentinc/kafka-connect-splunk-s2s:1.0.0

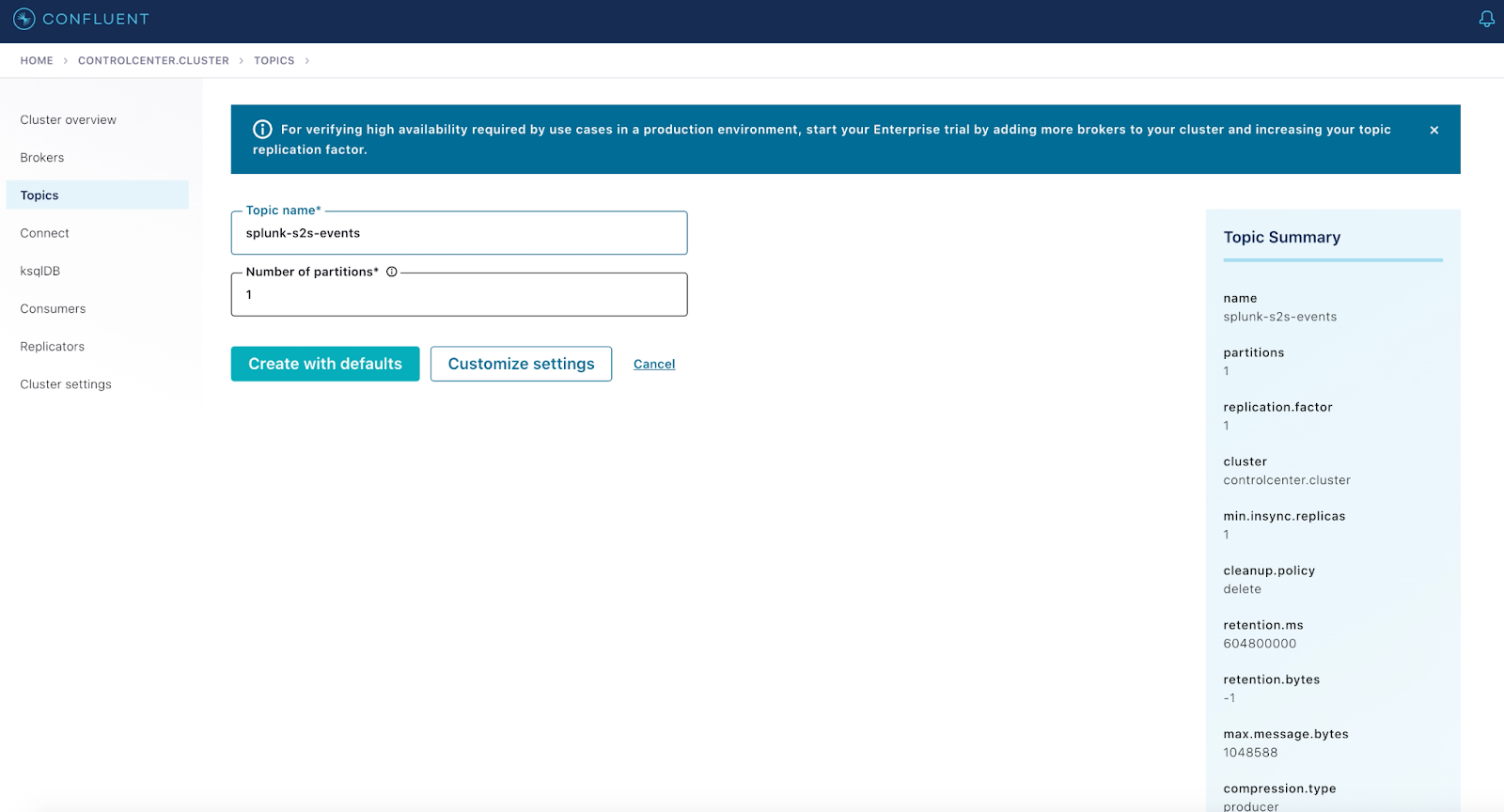

Create a topic called splunk-s2s-events.

Create and configure the Splunk S2S Source Connector:

Connector Config:

name=splunk-s2s-source
tasks.max=1
connector.class=io.confluent.connect.splunk.s2s.SplunkS2SSourceConnector
splunk.s2s.port=9997
kafka.topic=splunk-s2s-events
key.converter=org.apache.kafka.connect.storage.StringConverter
value.converter=org.apache.kafka.connect.json.JsonConverter
key.converter.schemas.enable=false
value.converter.schemas.enable=false
confluent.topic.bootstrap.servers=localhost:9092
confluent.topic.replication.factor=1
confluent.license=<free for first 30days>

Command:

confluent local services connect connector load splunk-s2s-source --config splunk-s2s-source.properties

The local commands are intended for a single-node development environment only, NOT for production usage. https://docs.confluent.io/current/cli/index.html

{
  "name": "splunk-s2s-source",
  "config": {
    "confluent.topic.bootstrap.servers": "localhost:9092",
    "confluent.topic.replication.factor": "1",
    "connector.class": "io.confluent.connect.splunk.s2s.SplunkS2SSourceConnector",
    "kafka.topic": "splunk-s2s-events",
    "key.converter": "org.apache.kafka.connect.storage.StringConverter",
    "key.converter.schemas.enable": "false",
    "splunk.s2s.port": "9997",
    "tasks.max": "1",
    "value.converter": "org.apache.kafka.connect.json.JsonConverter",
    "value.converter.schemas.enable": "false",
    "name": "splunk-s2s-source"
  },
  "tasks": [],
  "type": "source"
}
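The properties file and the JSON echoed by the CLI carry the same settings in two shapes. As a rough illustration, the Python sketch below converts `key=value` lines into the `{"name": ..., "config": {...}}` structure that the Kafka Connect REST API accepts when creating a connector. The function name `properties_to_connect_payload` is hypothetical, and only a subset of the demo's properties is included for brevity.

```python
import json


def properties_to_connect_payload(text: str) -> dict:
    """Turn key=value lines into a Kafka Connect style {"name", "config"} payload.

    Hypothetical helper for illustration; it is not part of any Confluent tool.
    """
    config = {}
    for raw_line in text.splitlines():
        line = raw_line.strip()
        # Skip blank lines and comments, as in a typical .properties file.
        if not line or line.startswith("#"):
            continue
        key, _, value = line.partition("=")
        config[key.strip()] = value.strip()
    name = config.get("name")
    if name is None:
        raise ValueError("the properties must define 'name'")
    return {"name": name, "config": config}


# A subset of the connector properties used in this demo.
PROPERTIES = """\
name=splunk-s2s-source
tasks.max=1
connector.class=io.confluent.connect.splunk.s2s.SplunkS2SSourceConnector
splunk.s2s.port=9997
kafka.topic=splunk-s2s-events
"""

payload = properties_to_connect_payload(PROPERTIES)
print(json.dumps(payload, indent=2))
```

The sketch only builds the payload. Sending it to a Connect worker, and the license and converter settings, are left out.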

Ensure that the connector is in a running state using this command:

confluent local services connect connector status splunk-s2s-source
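If you want to script this check, a minimal sketch follows. It assumes the status output has the shape of the Kafka Connect REST status response: a `connector` object with a `state`, plus a list of `tasks` that each have a `state`. The `sample_status` document is a hypothetical example, not output captured from this demo.

```python
def connector_is_running(status: dict) -> bool:
    """Return True when the connector and every one of its tasks report RUNNING."""
    connector_state = status.get("connector", {}).get("state")
    task_states = [task.get("state") for task in status.get("tasks", [])]
    return (
        connector_state == "RUNNING"
        and len(task_states) > 0
        and all(state == "RUNNING" for state in task_states)
    )


# Hypothetical status document; the worker_id values are placeholders.
sample_status = {
    "name": "splunk-s2s-source",
    "connector": {"state": "RUNNING", "worker_id": "127.0.0.1:8083"},
    "tasks": [{"id": 0, "state": "RUNNING", "worker_id": "127.0.0.1:8083"}],
    "type": "source",
}

print(connector_is_running(sample_status))
```

Treating a connector with no tasks as not running is a design choice in this sketch: a source connector without tasks does not move any data.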

Set up the Splunk forwarder.

In the Splunk forwarder directory ($SPLUNK_HOME):

- Add log files for monitoring.

Network.log:

112.111.162.4 - - [16/Feb/2021:18:26:36] "GET /product.screen?productId=WC-SH-G04&JSESSIONID=SD7SL8FF5ADFF4964 HTTP 1.1" 200 778 "http://www.buttercupgames.com/category.screen?categoryId=SHOOTER" "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.52 Safari/536.5" 194
112.111.162.4 - - [16/Feb/2021:18:26:37] "POST /cart.do?action=addtocart&itemId=EST-18&productId=WC-SH-G04&JSESSIONID=SD7SL8FF5ADFF4964 HTTP 1.1" 200 215 "http://www.buttercupgames.com/product.screen?productId=WC-SH-G04" "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.52 Safari/536.5" 727
112.111.162.4 - - [16/Feb/2021:18:26:38] "POST /cart.do?action=purchase&itemId=EST-18&JSESSIONID=SD7SL8FF5ADFF4964 HTTP 1.1" 200 1228 "http://www.buttercupgames.com/cart.do?action=addtocart&itemId=EST-18&categoryId=SHOOTER&productId=WC-SH-G04" "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.52 Safari/536.5" 430
112.111.162.4 - - [16/Feb/2021:18:26:38] "POST /cart/error.do?msg=CreditDoesNotMatch&JSESSIONID=SD7SL8FF5ADFF4964 HTTP 1.1" 200 1232 "http://www.buttercupgames.com/cart.do?action=purchase&itemId=EST-18" "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.52 Safari/536.5" 841
112.111.162.4 - - [16/Feb/2021:18:26:37] "GET /category.screen?categoryId=NULL&JSESSIONID=SD7SL8FF5ADFF4964 HTTP 1.1" 505 2445 "http://www.buttercupgames.com/category.screen?categoryId=NULL" "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.52 Safari/536.5" 393
112.111.162.4 - - [16/Feb/2021:18:26:38] "GET /oldlink?itemId=EST-7&JSESSIONID=SD7SL8FF5ADFF4964 HTTP 1.1" 503 1207 "http://www.buttercupgames.com/category.screen?categoryId=NULL" "Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/536.5 (KHTML, like Gecko) Chrome/19.0.1084.52 Safari/536.5" 704
74.125.19.106 - - [16/Feb/2021:18:32:15] "GET /cart.do?action=addtocart&itemId=EST-16&productId=DC-SG-G02&JSESSIONID=SD4SL7FF10ADFF4998 HTTP 1.1" 200 1425 "http://www.buttercupgames.com" "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-GB; rv:1.8.1.6) Gecko/20070725 Firefox/2.0.0.6" 375
74.125.19.106 - - [16/Feb/2021:18:32:15] "GET /category.screen?categoryId=NULL&JSESSIONID=SD4SL7FF10ADFF4998 HTTP 1.1" 503 2039 "http://www.buttercupgames.com/oldlink?itemId=EST-13" "Mozilla/5.0 (Windows; U; Windows NT 5.1; en-GB; rv:1.8.1.6) Gecko/20070725 Firefox/2.0.0.6" 533
117.21.246.164 - - [16/Feb/2021:18:36:02] "POST /cart.do?action=changequantity&itemId=EST-21&productId=WC-SH-A01&JSESSIONID=SD9SL6FF8ADFF5015 HTTP 1.1" 200 809 "http://www.buttercupgames.com" "Googlebot/2.1 (http://www.googlebot.com/bot.html)" 643
117.21.246.164 - - [16/Feb/2021:18:36:03] "POST /cart.do?action=addtocart&itemId=EST-27&productId=DC-SG-G02&JSESSIONID=SD9SL6FF8ADFF5015 HTTP 1.1" 200 1291 "http://www.buttercupgames.com/cart.do?action=addtocart&itemId=EST-27&productId=DC-SG-G02" "Googlebot/2.1 (http://www.googlebot.com/bot.html)" 795

Secure.log:

Thu Feb 16 2021 00:15:01 www1 sshd[4747]: Failed password for invalid user jabber from 118.142.68.222 port 3187 ssh2
Thu Feb 16 2021 00:15:01 www1 sshd[4111]: Failed password for invalid user db2 from 118.142.68.222 port 4150 ssh2
Thu Feb 16 2021 00:15:01 www1 sshd[5359]: Failed password for invalid user pmuser from 118.142.68.222 port 3356 ssh2
Thu Feb 16 2021 00:15:01 www1 su: pam_unix(su:session): session opened for user root by djohnson(uid=0)
Thu Feb 16 2021 00:15:01 www1 sshd[2660]: Failed password for invalid user irc from 118.142.68.222 port 4343 ssh2
Thu Feb 16 2021 00:15:01 www1 sshd[1705]: Failed password for happy from 118.142.68.222 port 4174 ssh2
Thu Feb 16 2021 00:15:01 www1 sshd[1292]: Failed password for nobody from 118.142.68.222 port 1654 ssh2
Thu Feb 16 2021 00:15:01 www1 sshd[1560]: Failed password for invalid user local from 118.142.68.222 port 4616 ssh2
Thu Feb 16 2021 00:15:01 www1 sshd[59414]: Accepted password for myuan from 10.1.10.172 port 1569 ssh2
Thu Feb 16 2021 00:15:01 www1 sshd[1876]: Failed password for invalid user db2 from 118.142.68.222 port 1151 ssh2
Thu Feb 16 2021 00:15:01 www1 sshd[3310]: Failed password for apache from 118.142.68.222 port 4343 ssh2
Thu Feb 16 2021 00:15:01 www1 sshd[2149]: Failed password for nobody from 118.142.68.222 port 1527 ssh2
Thu Feb 16 2021 00:15:01 www1 sshd[2766]: Failed password for invalid user guest from 118.142.68.222 port 2581 ssh2
Thu Feb 16 2021 00:15:01 www1 sshd[3118]: pam_unix(sshd:session): session opened for user djohnson by (uid=0)
Thu Feb 16 2021 00:15:01 www1 sshd[2598]: Failed password for invalid user system from 118.142.68.222 port 1848 ssh2
Thu Feb 16 2021 00:15:01 www1 sshd[4637]: Failed password for invalid user sys from 211.140.3.183 port 1202 ssh2
Thu Feb 16 2021 00:15:01 www1 sshd[1335]: Failed password for invalid user mysql from 211.140.3.183 port 3043 ssh2
Thu Feb 16 2021 00:15:01 www1 sshd[4153]: Failed password for squid from 211.140.3.183 port 3634 ssh2
Thu Feb 16 2021 00:15:01 www1 sshd[3149]: Failed password for invalid user irc from 208.240.243.170 port 3104 ssh2
Thu Feb 16 2021 00:15:01 www1 sshd[4620]: Failed password for invalid user sysadmin from 208.240.243.170 port 3321 ssh2

Template:

bin/splunk add monitor -source <path-to-log-file>

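Commands (one per log file, so that both files appear in the monitor list):

bin/splunk add monitor -source /tmp/logs/network.log
bin/splunk add monitor -source /tmp/logs/secure.log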

- Add a forward server.

Template:

bin/splunk add forward-server <host>:<port>

Command:

bin/splunk add forward-server localhost:9997

SplunkForwarder bin/splunk add forward-server localhost:9997
Added forwarding to: localhost:9997.
SplunkForwarder bin/splunk list monitor
Monitored Directories:
[No directories monitored.]
Monitored Files:
/tmp/logs/network.log
/tmp/logs/secure.log

- Start the universal forwarder.

Command:

bin/splunk start

->SplunkForwarder bin/splunk start

Splunk> Australian for grep.

Checking prerequisites...
Checking mgmt port [8089]: open
Checking conf files for problems...
Done
Checking default conf files for edits...
Validating installed files against hashes from '/Applications/SplunkForwarder/splunkforwarder-8.0.2-a7f645ddaf91-darwin-64-manifest'
All installed files intact.
Done
All preliminary checks passed.

Starting splunk server daemon (splunkd)...
Done

- Check for the file status in the Splunk UF.

Command:

bin/splunk list inputstatus

Cooked:tcp :
tcp
Raw:tcp :
tcp
TailingProcessor:FileStatus :
/tmp/logs/network.log
file position = 3027
file size = 3027
percent = 100.00
type = finished reading
/tmp/logs/secure.log
file position = 2222
file size = 2222
percent = 100.00
type = finished reading
tcp_raw:listenerports :
5141

Once the connector is running, messages from the log files network.log and secure.log will start flowing to the Kafka topic called splunk-s2s-events.

Now check that the log file data is available in the splunk-s2s-events topic in Confluent.

After completion of the last step, you can see that the data from both network.log and secure.log is available in the Kafka topic.

You now have a working data pipeline from a Splunk UF to Confluent Platform using Confluent’s Splunk S2S Source Premium Connector. Data was read successfully using the S2S protocol into the Kafka topic.
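With the log data in Kafka, it can be filtered before anything is forwarded to a downstream system. The sketch below illustrates that idea in plain Python rather than ksqlDB or any connector feature: it keeps only the failed SSH logins from secure.log-style lines and counts them per source IP. The regular expression and the `failed_logins_by_ip` helper are assumptions made for this example, and the sketch works on raw log lines only. How those lines are wrapped inside the connector's Kafka records is not covered here.

```python
import re
from collections import Counter

# Matches sshd "Failed password" lines like the ones in secure.log above.
FAILED_LOGIN = re.compile(
    r"Failed password for (?:invalid user )?(?P<user>\S+) "
    r"from (?P<ip>\d{1,3}(?:\.\d{1,3}){3}) port (?P<port>\d+)"
)


def failed_logins_by_ip(lines):
    """Count failed-password events per source IP, ignoring every other line."""
    counts = Counter()
    for line in lines:
        match = FAILED_LOGIN.search(line)
        if match:
            counts[match.group("ip")] += 1
    return counts


# A few lines taken from the secure.log sample used in this demo.
sample_lines = [
    "Thu Feb 16 2021 00:15:01 www1 sshd[4747]: Failed password for invalid user jabber from 118.142.68.222 port 3187 ssh2",
    "Thu Feb 16 2021 00:15:01 www1 su: pam_unix(su:session): session opened for user root by djohnson(uid=0)",
    "Thu Feb 16 2021 00:15:01 www1 sshd[1705]: Failed password for happy from 118.142.68.222 port 4174 ssh2",
    "Thu Feb 16 2021 00:15:01 www1 sshd[59414]: Accepted password for myuan from 10.1.10.172 port 1569 ssh2",
    "Thu Feb 16 2021 00:15:01 www1 sshd[4637]: Failed password for invalid user sys from 211.140.3.183 port 1202 ssh2",
]

counts = failed_logins_by_ip(sample_lines)
for ip, total in counts.most_common():
    print(ip, total)
```

Dropping the session and accepted-login lines before they reach a SIEM is one simple way to reduce the volume of low-value data sent downstream.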

Learn more about the Splunk S2S Source Connector

To learn more about Confluent’s Splunk S2S Source Connector, please review the documentation.

You can check out Confluent’s latest Splunk S2S Source Premium Connector on Confluent Hub.* Please contact your Confluent account manager if you have any further questions.

*Subject to Confluent Platform licensing requirements for Premium connectors.
