
Apache Kafka as a Service with Confluent Cloud Now Available on GCP Marketplace

Following Google’s announcement that it will offer leading open source services with a cloud-native experience through partnerships with companies like Confluent, we are delighted to share that Confluent Cloud is now available on Google Cloud Platform (GCP) Marketplace.

The integrated experience and billing model make it easier for developers to get started with Confluent Cloud, our fully managed event streaming platform built around Apache Kafka®, because they can use their existing billing service on GCP. With Confluent Cloud available natively in the Google Cloud Console, this is the first step towards making Kafka a first-class citizen on GCP so that developers can be even more productive.

Developer joy with zero hassle

Apache Kafka has seen tremendous adoption over the years. One explanation is that developers are convinced only Kafka can give them the scalability and performance their workloads demand. Another is that the ecosystem of APIs around Kafka has grown so large that nearly every major developer stack supports it, which in turn fuels further adoption. It may also mean that developers are building applications oriented around streams rather than state, because doing so lets them react more quickly to opportunities or threats facing the business and make decisions instantly.

Regardless, we’ve always heard that managing Apache Kafka is a job for the pros—but that doesn’t have to be the case.

Confluent Cloud is a fully managed Apache Kafka service that removes the operational complexities of having to manage Kafka on your own (you can read this blog post for more on the importance of a fully managed service for Apache Kafka). Built as a cloud-native service, Confluent Cloud offers developers a serverless experience with elastic scaling and pricing that charges only for what you stream.

While Confluent Cloud has always been available on GCP, developers previously had to sign up and provide a credit card as a form of payment to get started. This meant managing a separate bill on top of their GCP bill. That was a point of friction, because developers familiar with GCP are not used to handing over a credit card for every service or resource they spin up.

We are happy to say that two separate bills are no longer necessary: you can now use Confluent Cloud with your existing project’s billing and credits. Any Confluent Cloud consumption now appears as a line item in your monthly GCP bill.

Event streaming applications made easy and fun

An event streaming platform such as Confluent Cloud is made up of components and layers that make developing event streaming applications easier and more fun. Like the Kafka cluster itself, these other pieces of the ecosystem are available as fully managed services, such as Confluent Schema Registry and connectors like the newly available BigQuery connector. ksqlDB is also supported as a managed service.

When developers use these tools as part of their Confluent Cloud subscription, they don’t have to write custom integration code or make the low-level operational decisions that running these components themselves would require. In short, if you are developing event streaming applications on GCP, the effort your architecture requires can decrease significantly because all of this is built into Confluent Cloud. This is particularly useful if your use case centers on ingesting events into BigQuery, and the events need to be curated (e.g., filtered, partitioned, adjusted, aggregated, or enriched) before they land in a BigQuery table.
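To make “curated” concrete, here is a minimal, framework-free sketch of the kind of per-event filtering, adjustment, and enrichment described above. It assumes events arrive as Python dictionaries with hypothetical fields `type`, `amount`, and `country`, and uses a hypothetical lookup table; in a real pipeline this logic would more likely live in ksqlDB or another stream processor than in hand-written code.

```python
from typing import Iterable, Iterator

# Hypothetical lookup table used to enrich events before they are loaded into BigQuery.
REGION_BY_COUNTRY = {"US": "americas", "BR": "americas", "DE": "emea", "JP": "apac"}


def curate(events: Iterable[dict]) -> Iterator[dict]:
    """Filter, adjust, and enrich raw events (all field names are hypothetical)."""
    for event in events:
        # Filter: keep only purchase events.
        if event.get("type") != "purchase":
            continue
        # Adjust: normalize the amount to a float rounded to cents.
        amount = round(float(event["amount"]), 2)
        # Enrich: add a sales region derived from the country code.
        region = REGION_BY_COUNTRY.get(event.get("country", ""), "unknown")
        yield {**event, "amount": amount, "region": region}


raw = [
    {"type": "purchase", "amount": "19.999", "country": "DE"},
    {"type": "page_view", "amount": "0", "country": "US"},
    {"type": "purchase", "amount": "5", "country": "XX"},
]
curated = list(curate(raw))
```

Keeping the transformation a pure generator means it can be tested on its own, independently of any Kafka client or BigQuery table.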

Getting started with Confluent Cloud on GCP Marketplace

In honor of Linus Torvalds, the creator of the Linux kernel, who once said, “Talk is cheap, show me the code,” let’s see how GCP developers can start streaming with Confluent Cloud.

Step 1: Spin up Confluent Cloud

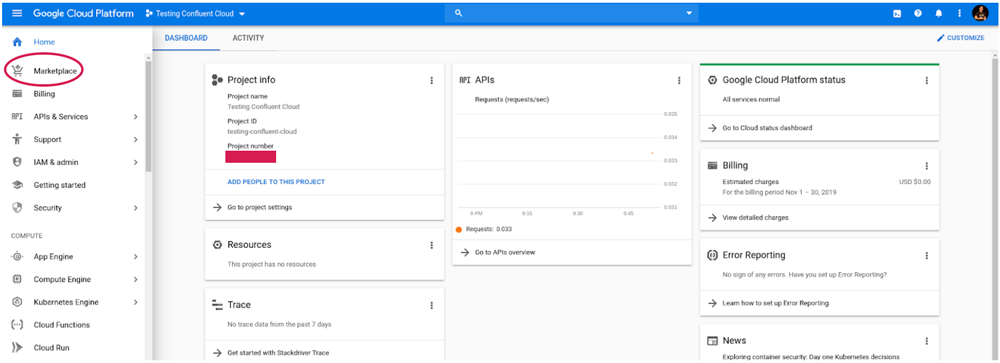

First, log in to your GCP account and select the project where you want to spin up Confluent Cloud. Then click the “Marketplace” option, as shown in Figure 1.

Figure 1. GCP console showing the home page of the selected project

Step 2: Select Confluent Cloud in Marketplace

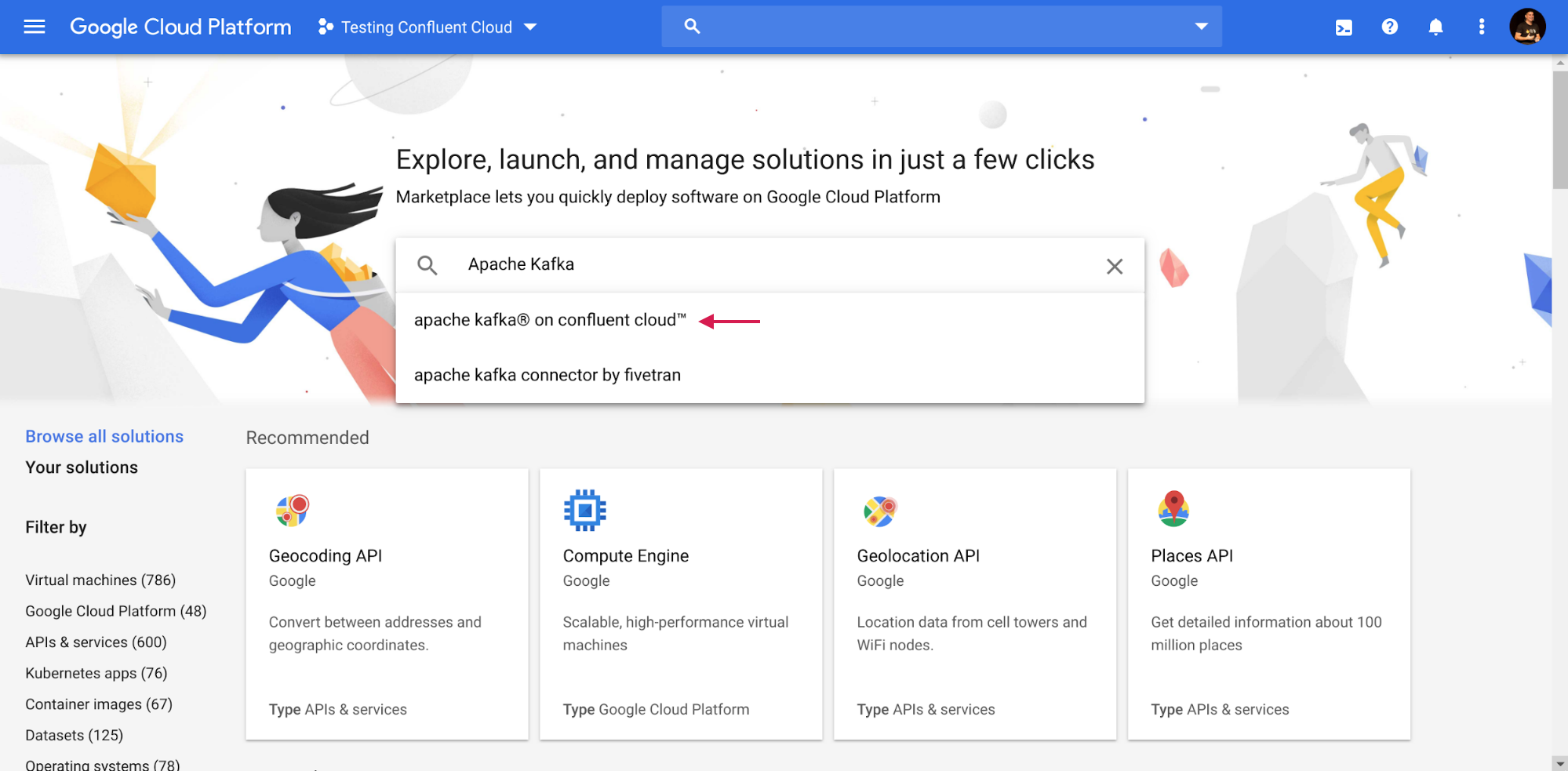

In Marketplace, search for “Apache Kafka” or “Confluent Cloud,” then select the option shown in Figure 2.

Figure 2. Selecting Confluent Cloud in Marketplace

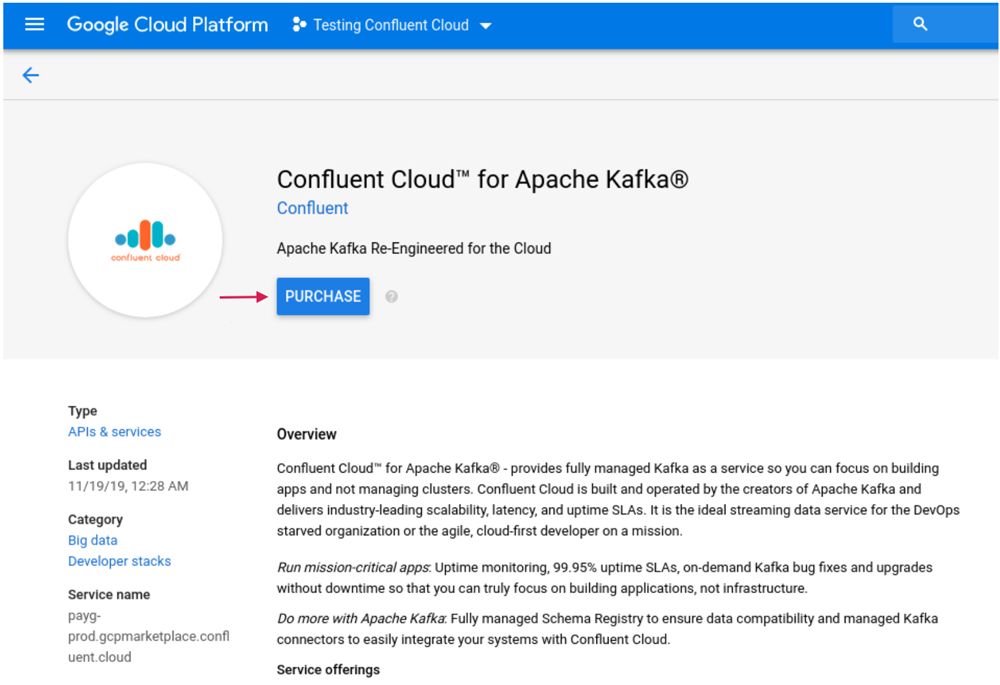

Step 3: Purchase Confluent Cloud

After selecting Confluent Cloud, you will see a page that allows you to purchase the service. Click the “Purchase” button, as shown in Figure 3.

Figure 3. Purchasing Confluent Cloud

To pay for Confluent Cloud, GCP uses Confluent Consumption Units (CCUs), where each CCU equals $0.0001. Pricing in GCP Marketplace is the same as our direct purchase pricing. For example, if you stream 1 GB of data into a cluster in region us-east1, you would pay $0.11, the equivalent of 1,100 CCUs.
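The conversion between dollars and CCUs is simple arithmetic. The sketch below reproduces the example above using only the two figures stated in this post ($0.0001 per CCU and $0.11 per GB streamed in us-east1); the per-GB rate is just the example’s figure, not a full price list. It uses `Decimal` to avoid floating-point rounding surprises with small currency values.

```python
from decimal import Decimal

CCU_PRICE_USD = Decimal("0.0001")  # price of one Confluent Consumption Unit, as stated above


def usd_to_ccus(amount_usd: Decimal) -> int:
    """Convert a dollar amount into whole Confluent Consumption Units (truncating)."""
    return int(amount_usd / CCU_PRICE_USD)


# Example from the text: streaming 1 GB into a cluster in us-east1 costs $0.11.
gb_streamed = Decimal("1")
price_per_gb_usd = Decimal("0.11")  # example rate from the text, not a general price
cost_usd = gb_streamed * price_per_gb_usd
ccus = usd_to_ccus(cost_usd)
print(ccus)  # prints 1100
```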

Pick “Confluent Consumption Unit” as the pricing option and make sure to select the box that authorizes Google to make this purchase on your behalf. Now, let’s enable Confluent Cloud.

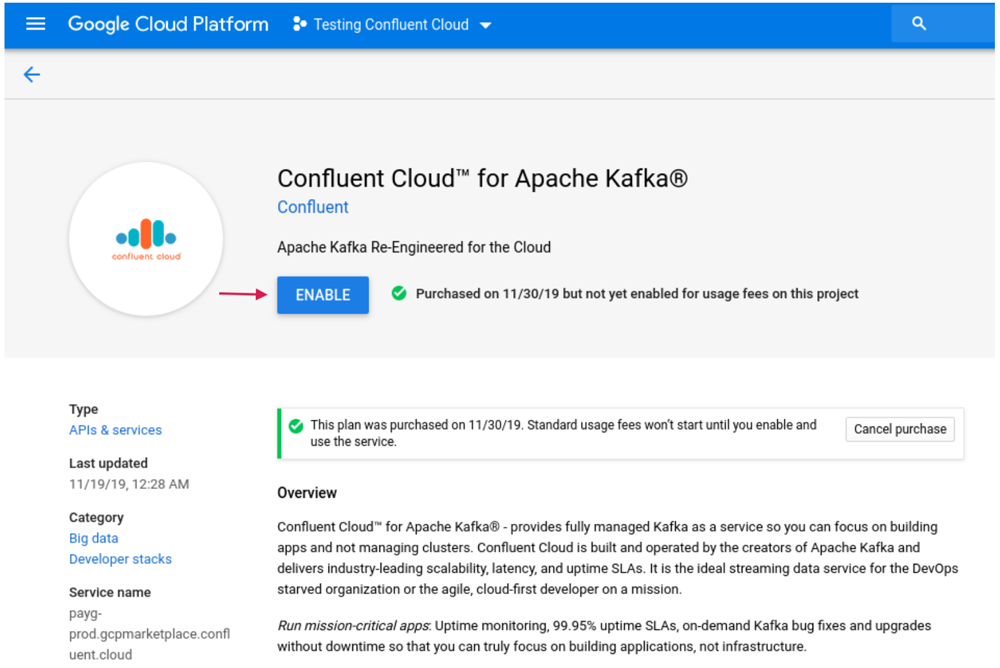

Step 4: Enable Confluent Cloud on GCP

After a few seconds, GCP will show that Confluent Cloud has been successfully purchased and that you can now enable its API. Note that you are not charged anything until you enable the Confluent Cloud API in GCP. Click the “Enable” button to enable Confluent Cloud on GCP, as shown in Figure 4.

Figure 4. Enabling the Confluent Cloud API on GCP

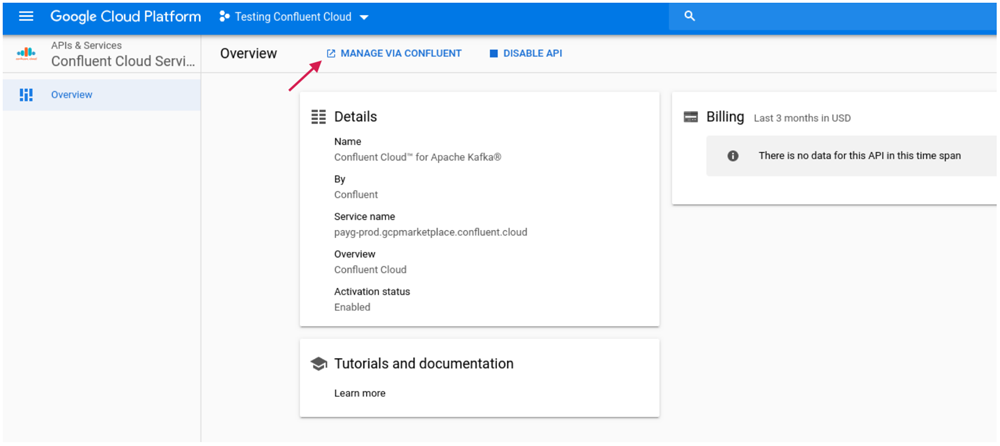

Enabling Confluent Cloud on GCP can take up to one minute. The overview page should then show that Confluent Cloud is enabled on GCP, as shown in Figure 5.

Figure 5. Confluent Cloud properly enabled on GCP

Step 5: Register the admin user

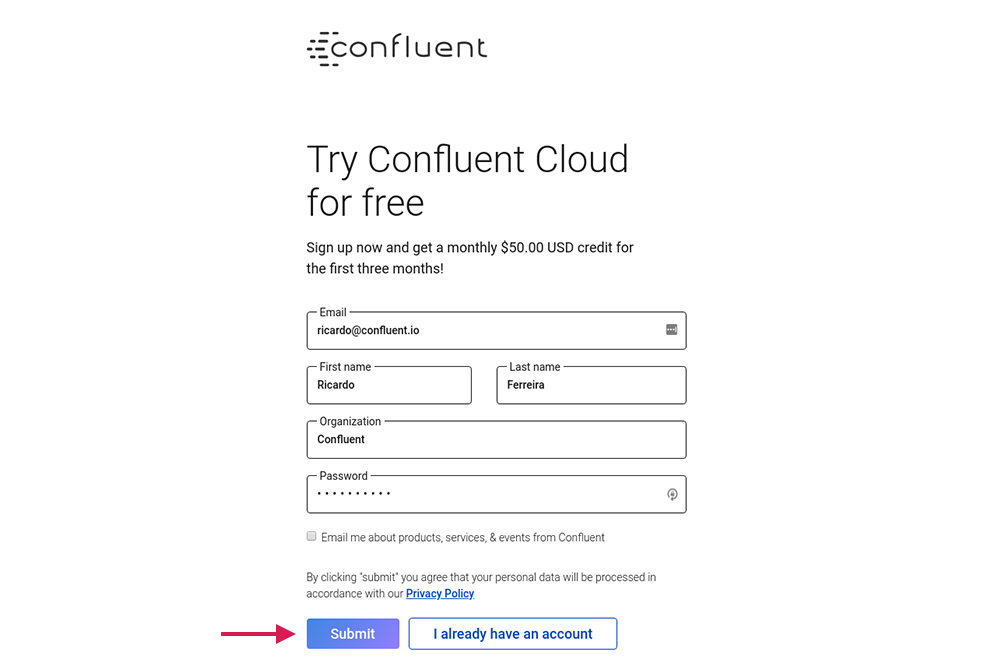

Click the “Manage via Confluent” link to start working with Confluent Cloud. This link takes you out of the GCP console to a signup page where you register yourself as the main user of Confluent Cloud. During registration, use the same email address that you use to log in to GCP. Fill in all required fields and then click the “Submit” button, as shown in Figure 6.

Figure 6. Registering the administrator for Confluent Cloud

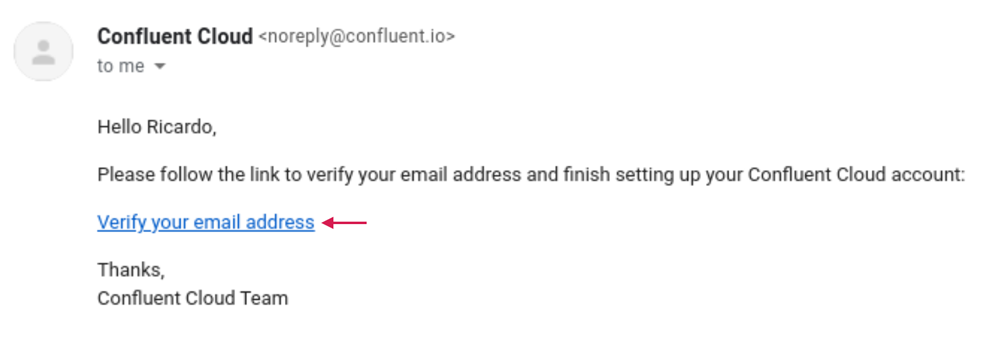

Step 6: Verify your email

You should receive an email asking you to verify your email address. This step is required for security purposes, so that Confluent can confirm that you are the person who initiated the registration process. Click the “Verify your email address” link, as shown in Figure 7.

Figure 7. Verifying your email address

After verification, you will be automatically logged in to Confluent Cloud with your new account, which is the account’s administrator, and you can start using the service immediately.

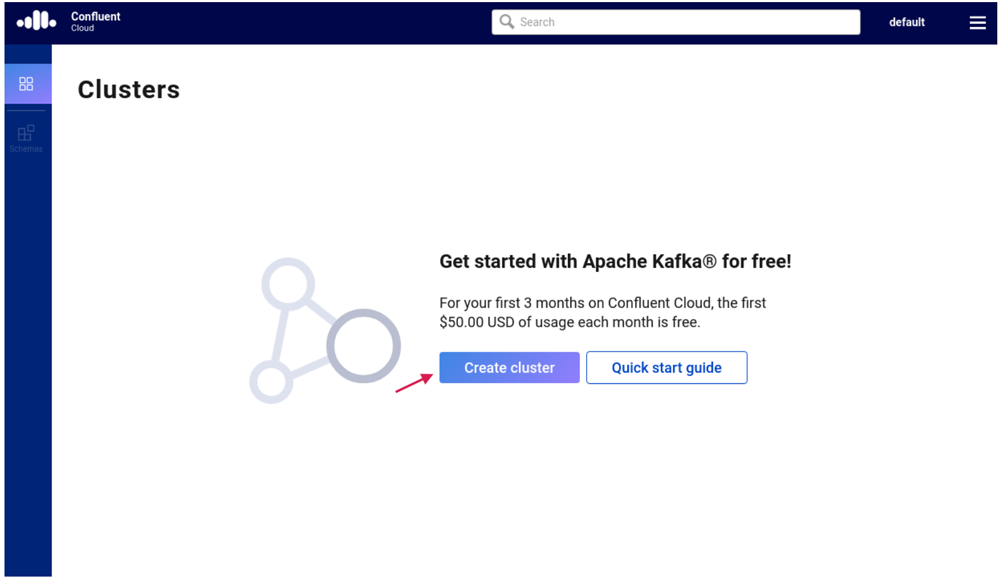

For new users, the first $50.00 of service usage is free each month for the first three months. That means if you have a steady flow of events averaging ~50 KB per second (an estimate for region us-east1 with the topic retention policy configured to seven days), you won’t pay anything: the total bill would be ~$37.60, which the $50 allowance covers. In other words, you can try the service for free.
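As a rough sanity check on these numbers, the sketch below converts a steady 50 KB/s into a monthly data volume and compares the post’s ~$37.60 estimate with the $50 allowance. It assumes a 30-day month and decimal units (1 GB = 1,000,000 KB). The $37.60 figure is taken from the text as-is; since that estimate also accounts for seven-day topic retention, the code does not try to rederive it from the volume.

```python
SECONDS_PER_DAY = 86_400
DAYS_PER_MONTH = 30  # assumption: a 30-day billing month


def monthly_volume_gb(rate_kb_per_s: float, days: int = DAYS_PER_MONTH) -> float:
    """Data volume produced by a steady stream over `days`, in decimal gigabytes."""
    total_kb = rate_kb_per_s * SECONDS_PER_DAY * days
    return total_kb / 1_000_000  # 1 GB = 1,000,000 KB (decimal units)


FREE_ALLOWANCE_USD = 50.00  # monthly credit for new users, per the text
ESTIMATED_BILL_USD = 37.60  # the post's estimate for ~50 KB/s in us-east1

volume = monthly_volume_gb(50)
out_of_pocket = max(0.0, ESTIMATED_BILL_USD - FREE_ALLOWANCE_USD)
print(f"{volume:.1f} GB/month, out of pocket: ${out_of_pocket:.2f}")
# prints "129.6 GB/month, out of pocket: $0.00"
```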

Figure 8. Now the fun begins. Create as many clusters as you need.

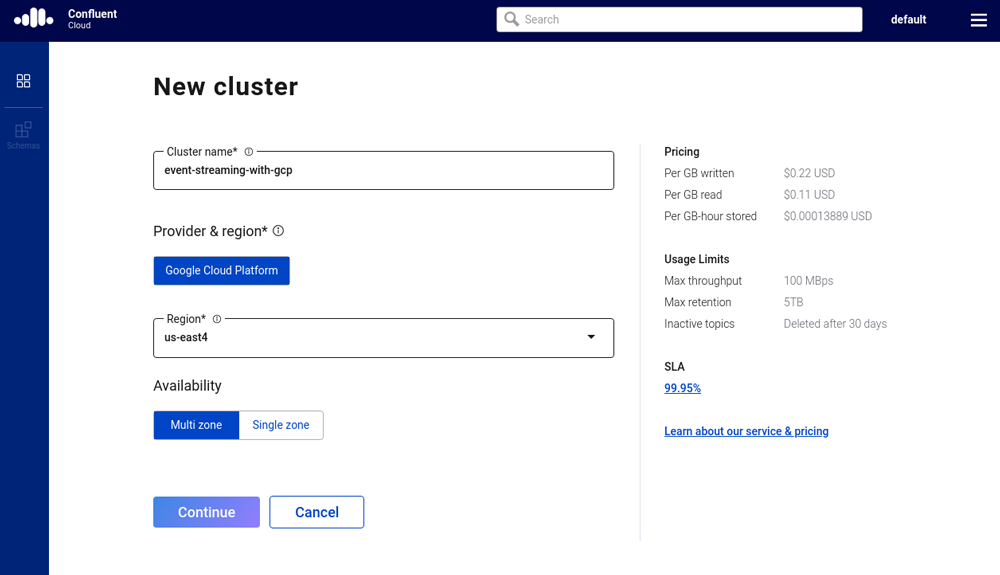

When it comes to creating clusters, you have some decisions to make, but none as daunting as they would be if you were managing Apache Kafka yourself. You just have to name your cluster, choose the GCP region in which to create it (it should be as close as possible to your apps to minimize latency), and choose the availability of your cluster. Figure 9 shows an example of cluster creation.

Figure 9. Creating a new cluster in Confluent Cloud

Creating a cluster in Confluent Cloud enabled through GCP works the same way as if you had signed up directly with Confluent, except that you won’t be asked for credit card details for any cluster you create. The Confluent Cloud documentation provides more details on how to use the service.
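Once a cluster exists, applications connect to it with an API key and secret created in Confluent Cloud. The sketch below only assembles a client configuration. It assumes the librdkafka-style property names accepted by the `confluent-kafka` Python client (`bootstrap.servers`, `security.protocol`, `sasl.mechanisms`, `sasl.username`, `sasl.password`); the endpoint, credentials, and the `orders` topic shown are hypothetical placeholders. Check the Confluent Cloud documentation for the exact settings your cluster requires.

```python
def confluent_cloud_config(bootstrap: str, api_key: str, api_secret: str) -> dict:
    """Build a client config for a Confluent Cloud cluster using API key auth over SASL/PLAIN."""
    return {
        "bootstrap.servers": bootstrap,   # the cluster's bootstrap endpoint from the Cloud UI
        "security.protocol": "SASL_SSL",  # Confluent Cloud connections use TLS
        "sasl.mechanisms": "PLAIN",       # API key/secret are sent as SASL/PLAIN credentials
        "sasl.username": api_key,
        "sasl.password": api_secret,
    }


# Placeholder values (hypothetical); substitute your own cluster endpoint and API key.
config = confluent_cloud_config(
    "pkc-xxxxx.us-east1.gcp.confluent.cloud:9092", "MY_API_KEY", "MY_API_SECRET"
)

# With the confluent-kafka package installed, the dict can be passed straight to a client
# ("orders" is a hypothetical topic name):
#   from confluent_kafka import Producer
#   producer = Producer(config)
#   producer.produce("orders", key="k1", value="hello")
#   producer.flush()
```

Keeping credentials out of the code and passing them in as arguments makes it easy to load them from environment variables or a secret manager instead of hard-coding them.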

Summary

Confluent Cloud makes it easy and fun to develop event streaming applications. Now, we’re taking another step towards putting Apache Kafka at the heart of every organization by making Confluent Cloud a first-class citizen on GCP, so you can use the service with your existing GCP billing account.

If you haven’t already, sign up for Confluent Cloud today!
