Confluent Blog

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method to connect database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Contributing to Apache Kafka®: How to Write a KIP

Learn how to contribute to open source Apache Kafka by writing Kafka Improvement Proposals (KIPs) that solve problems and add features! Read on for real examples.

Today is the day! Welcome to Kafka Summit San Francisco 2017!

A huge number of people have been hard at work to make today happen. Our speakers have been writing presentations and preparing to teach their audiences about new Apache Kafka® […]

The Simplest Useful Kafka Connect Data Pipeline in the World…or Thereabouts – Part 2

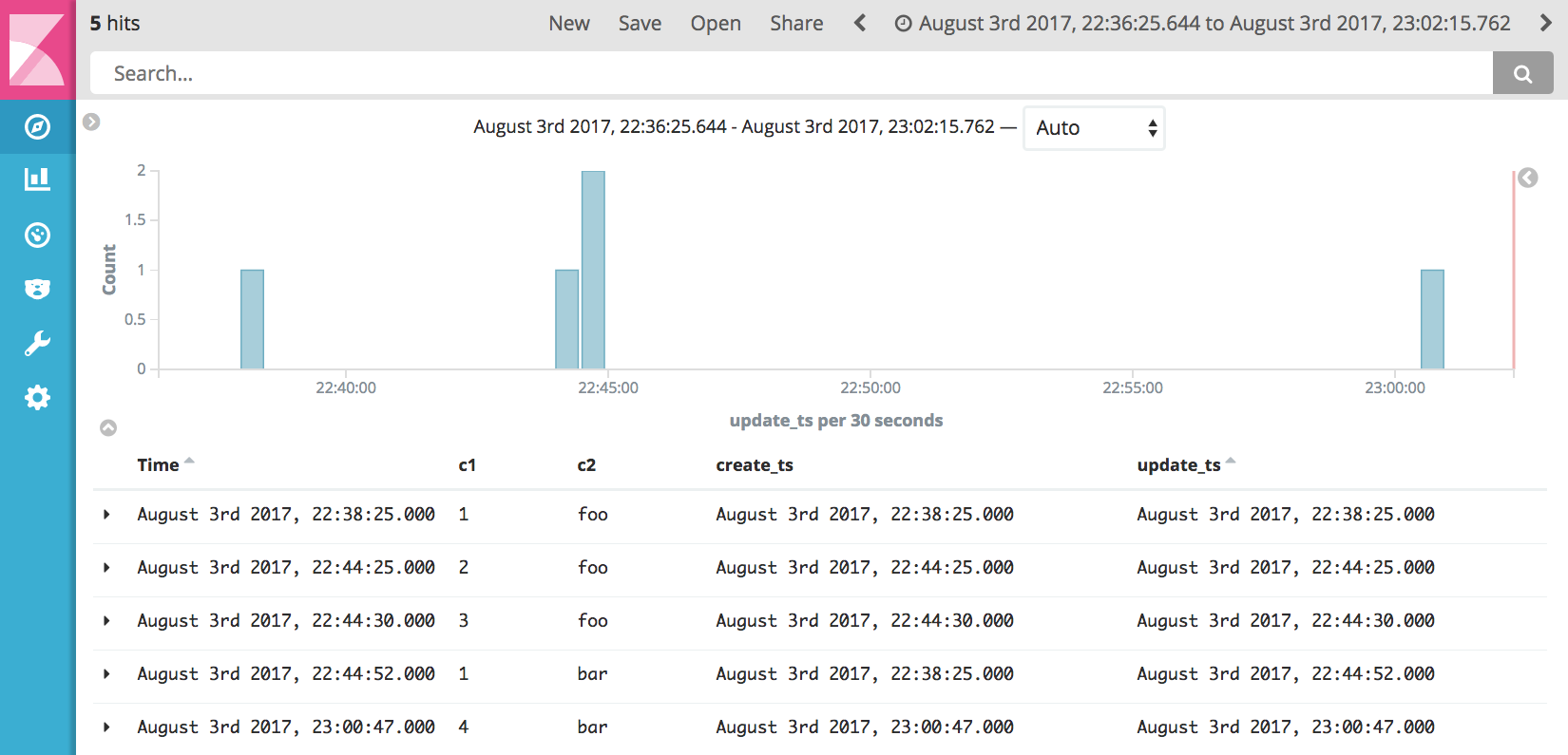

In the previous article in this blog series I showed how easy it is to stream data out of a database into Apache Kafka®, using the Kafka Connect API. I […]

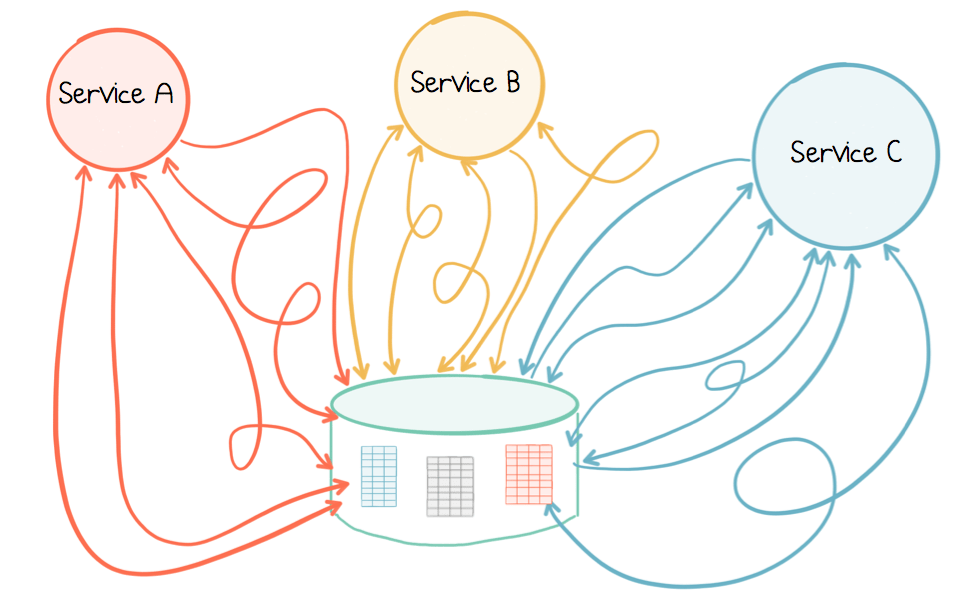

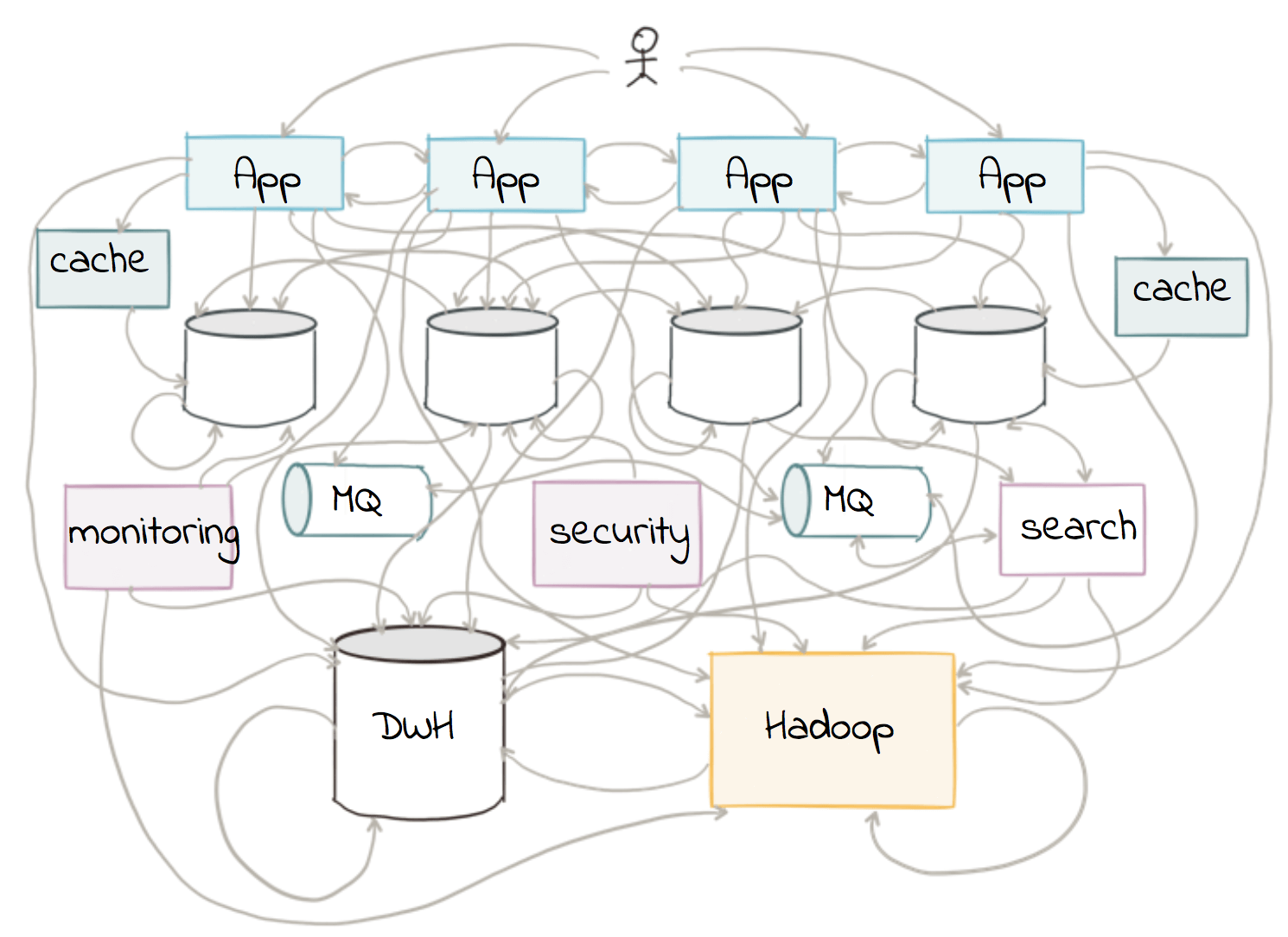

Leveraging the Power of a Database ‘Unbundled’

When you build microservices using Apache Kafka®, the log can be used as more than just a communication protocol. It can be used to store events: messaging that remembers. This […]

The Simplest Useful Kafka Connect Data Pipeline in the World…or Thereabouts – Part 1

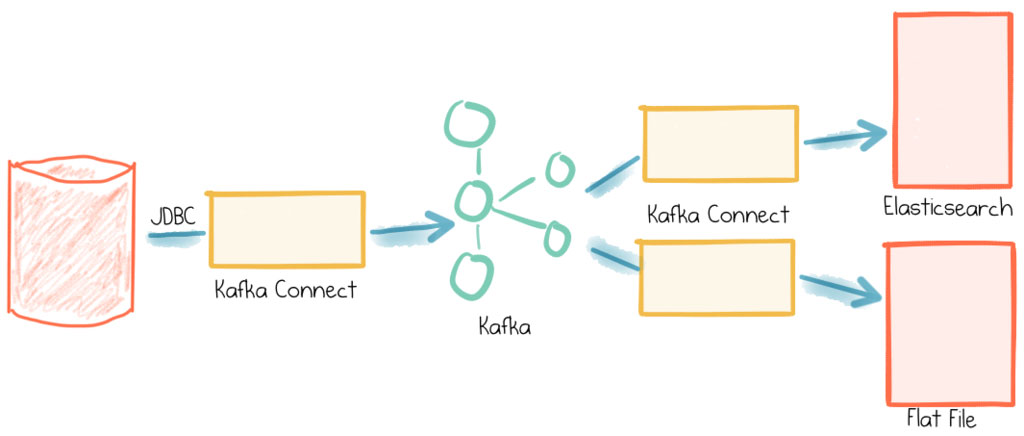

This short series of articles is going to show you how to stream data from a database (MySQL) into Apache Kafka® and from Kafka into both a text file and Elasticsearch—all […]

Are You Ready for Kafka Summit San Francisco?

Just a few months ago, hundreds of Apache Kafka® users and leaders from 400+ companies and 20 countries gathered in New York City to learn about the latest Kafka developments […]

Messaging as the Single Source of Truth

This post discusses Event Sourcing in the context of Apache Kafka®, examining the need for a single source of truth that spans entire service estates. Events are Truth One of […]

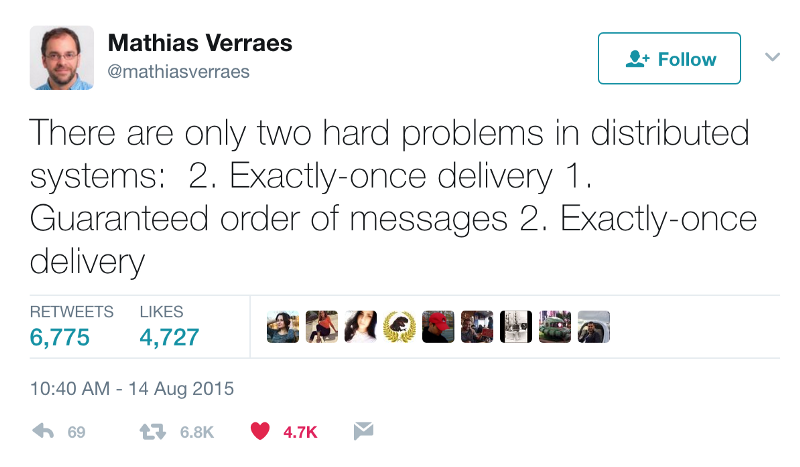

We’ll Say This Exactly Once: Confluent Platform 3.3 is Available Now

Confluent Platform and Apache Kafka® have come a long way from the time of their origin story. Like superheroes finding out they have powers, the latest updates always seem to […]

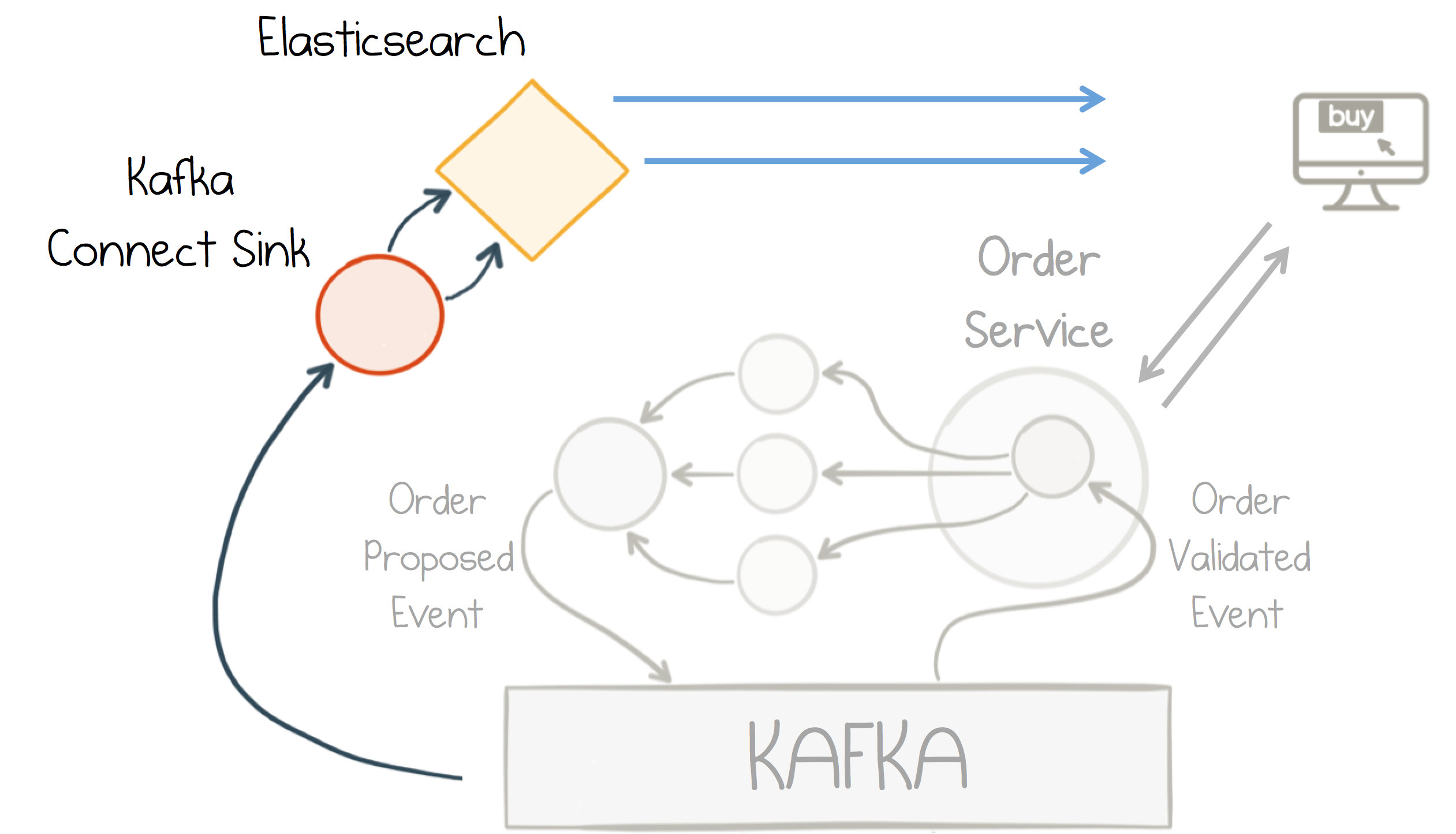

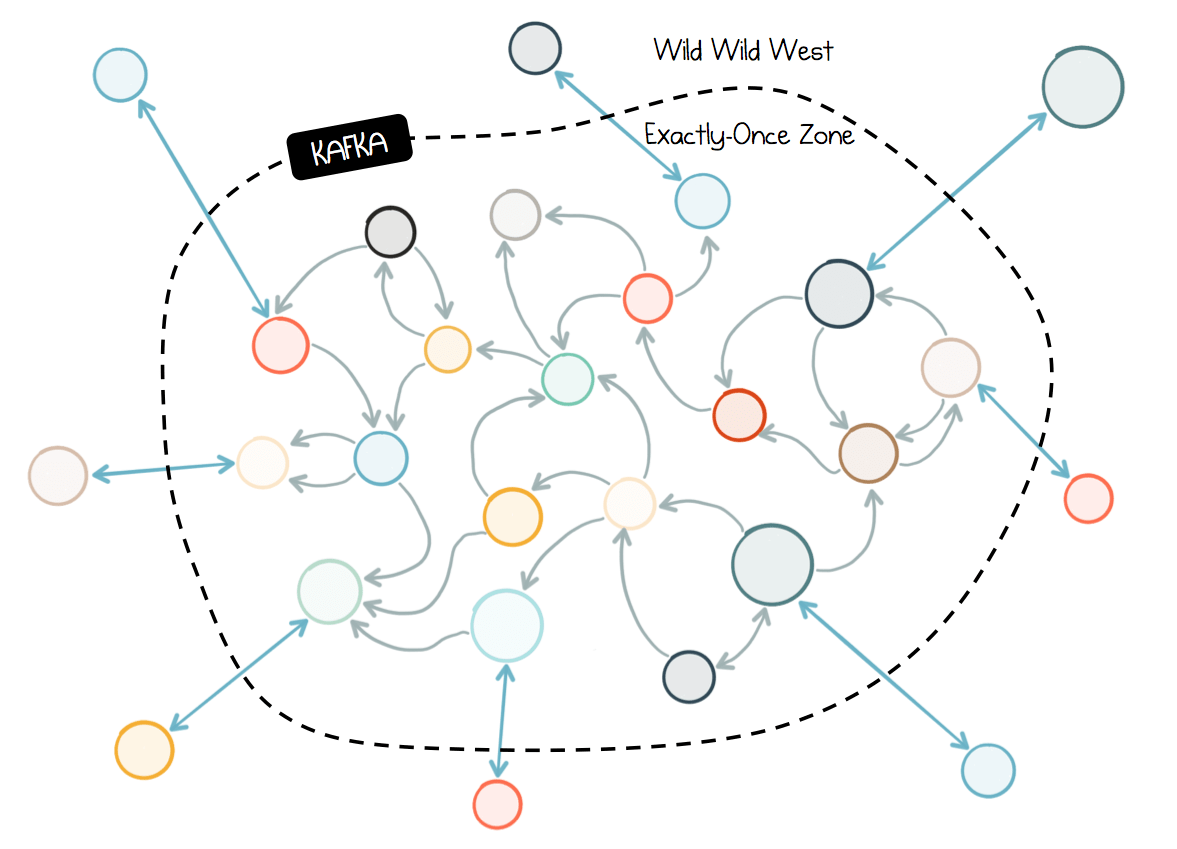

Chain Services with Exactly-Once Guarantees

This fourth post in the microservices series looks at how we can sew together complex chains of services efficiently and accurately, using Apache Kafka’s Exactly-Once guarantees. Duplicates, Duplicates Everywhere Any […]

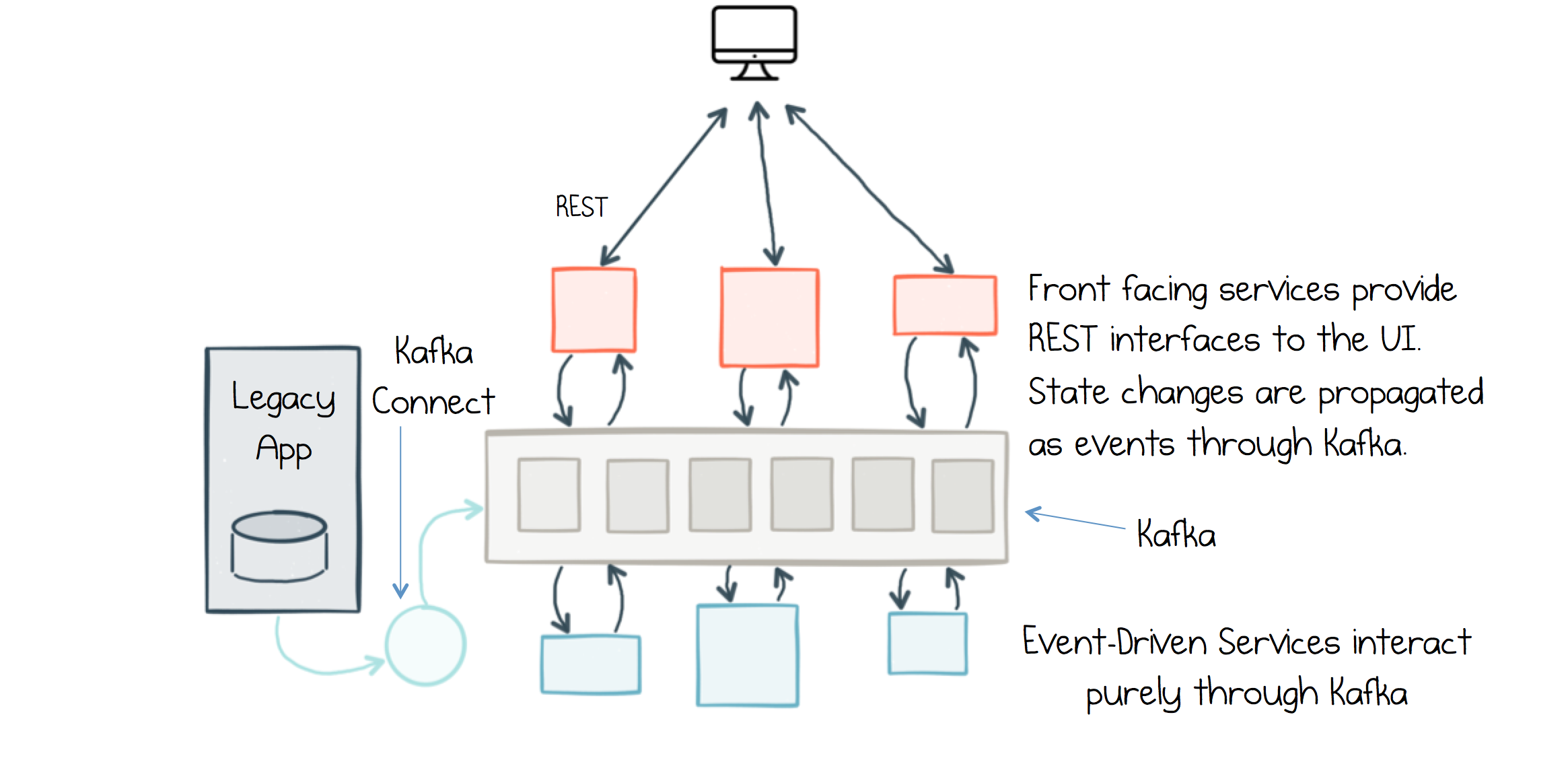

Using Apache Kafka as a Scalable, Event-Driven Backbone for Service Architectures

The last post in this microservices series looked at building systems on a backbone of events, where events become both a trigger and a mechanism for distributing state. […]

Upgrading Apache Kafka Clients Just Got Easier

This is a very exciting time to be part of the Apache Kafka® community! Every four months, a new Apache Kafka release brings additional features and improvements. We’re particularly excited […]

Exactly-Once Semantics Are Possible: Here’s How Kafka Does It

I’m thrilled that we have hit an exciting milestone the Apache Kafka® community has long been waiting for: we have introduced exactly-once semantics in Kafka in the 0.11 release and […]

Building a Real-Time Streaming ETL Pipeline in 20 Minutes

There has been a lot of talk recently that traditional ETL is dead. In the traditional ETL paradigm, data warehouses were king, ETL jobs were batch-driven, everything talked to everything […]

Log Compaction – Highlights in the Apache Kafka® and Stream Processing Community – June 2017

We are very excited about the GA of Kafka release 0.11.0.0, which is just days away. This release is bringing many new features as described in the previous Log Compaction […]

Introduction to Apache Kafka® for Python Programmers

In this blog post, we’re going to get back to basics and walk through how to get started using Apache Kafka with your Python applications. We will assume some basic […]