Optimize Search and Analytics with Real-Time Data Streaming

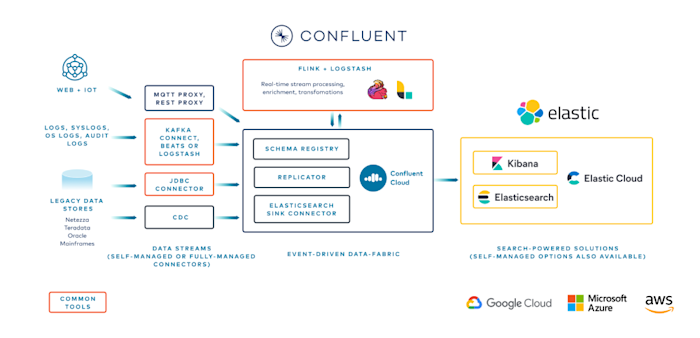

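Build powerful data-driven applications with Confluent and Elastic, using real-time data streaming to optimize search, analytics, and customer experience. Through Confluent Platform and Confluent Cloud, Confluent provides distributed, scalable, and secure data delivery capable of processing trillions of events per day. Elastic provides a secure, flexible platform for data storage, aggregation, search, and real-time analytics, deployable on-premises or on Elastic Cloud.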

Why Combine Confluent and Elastic

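Ingest, Aggregate, and Store Sensor Data

Consolidate security events and sensor data on a single scalable, persistent, distributed platform. This unified approach improves data consistency and enables more comprehensive analysis across diverse data sources.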

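Transform, Process, and Analyze

Use Flink to unify diverse data streams for richer threat detection, investigation, and real-time analysis. This processing yields finer-grained insights and shortens response times to potential security issues.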

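Share Data with Any Destination

Send aggregated data to any connected destination, such as a SIEM index, search, or a custom app. Critical information becomes available where it is needed most, improving system interoperability and decision-making.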

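Enable New Anomaly Detection Capabilities

Run new machine learning and artificial intelligence models to draw insights from SIEM data. These models can identify subtle patterns and anomalies that traditional analysis methods may miss.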

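How the Confluent and Elastic Integration Works

The Elasticsearch sink connector integrates Apache Kafka with Elasticsearch with minimal effort. It streams data directly from Kafka into Elasticsearch for log analytics, security analytics, and full-text search. You can also run real-time analytics on this data, or pair it with visualization tools such as Kibana to gain actionable insights from your data streams.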
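As a rough illustration of what registering the sink connector can look like, the sketch below builds the JSON body that is typically posted to a Kafka Connect worker's REST API. The connector name, topic names, and Elasticsearch URL are hypothetical placeholders, and the helper function is ours, not part of any Confluent library. It only builds the payload and does not contact a cluster.

```python
import json


def build_sink_connector_payload(name, topics, es_url):
    """Build the JSON body for registering an Elasticsearch sink connector.

    `name`, `topics`, and `es_url` are illustrative values supplied by the caller.
    """
    config = {
        # Connector class shipped with the Confluent Elasticsearch sink connector.
        "connector.class": "io.confluent.connect.elasticsearch.ElasticsearchSinkConnector",
        # Kafka topics to stream into Elasticsearch, comma-separated.
        "topics": ",".join(topics),
        # Address of the Elasticsearch cluster (hypothetical here).
        "connection.url": es_url,
        "tasks.max": "1",
    }
    return {"name": name, "config": config}


payload = build_sink_connector_payload(
    "es-sink-logs",                      # hypothetical connector name
    ["app-logs", "security-events"],     # hypothetical topics
    "http://localhost:9200",             # hypothetical Elasticsearch URL
)
print(json.dumps(payload, indent=2))
```

A payload like this would normally be sent to the Connect worker's `/connectors` endpoint. Check the exact property set against the documentation for the connector version you run.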

Key Features

Flexible Data Handling

Supports JSON data, with or without a schema, as well as the Avro format.
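To make the format options concrete, here is a minimal sketch of the converter-related properties one might set for schemaless JSON versus Avro. The helper function and the Schema Registry URL are hypothetical. The property names are standard Kafka Connect and connector settings, but verify them against your connector version.

```python
def converter_settings(fmt, schema_registry_url=None):
    """Return converter-related connector properties for a given value format."""
    if fmt == "json":
        return {
            "value.converter": "org.apache.kafka.connect.json.JsonConverter",
            # Plain JSON without an embedded schema envelope.
            "value.converter.schemas.enable": "false",
            # Let Elasticsearch infer the mapping instead of using a record schema.
            "schema.ignore": "true",
        }
    if fmt == "avro":
        if not schema_registry_url:
            raise ValueError("Avro requires a Schema Registry URL")
        return {
            "value.converter": "io.confluent.connect.avro.AvroConverter",
            "value.converter.schema.registry.url": schema_registry_url,
            # Use the record schema when building the Elasticsearch mapping.
            "schema.ignore": "false",
        }
    raise ValueError(f"unsupported format: {fmt}")


json_settings = converter_settings("json")
avro_settings = converter_settings("avro", "http://localhost:8081")  # hypothetical URL
```

These settings are merged into the connector configuration alongside the connection and topic properties.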

Version Compatibility

Compatible with Elasticsearch 2.x, 5.x, 6.x, and 7.x.

Index Rotation

Use the TimestampRouter single message transform (SMT) to create time-based indices, which are well suited to log data management.
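The sketch below shows the TimestampRouter properties for daily indices, plus a small Python function that mimics the resulting name so the effect is easy to see. The transform alias `routeByDay` and the topic name are hypothetical. The Python function only approximates the SMT's behavior, assuming the `${topic}-${timestamp}` pattern with a `yyyyMMdd` date rendered in UTC.

```python
from datetime import datetime, timezone

# Connector properties enabling daily index rotation (alias "routeByDay" is made up).
TIMESTAMP_ROUTER = {
    "transforms": "routeByDay",
    "transforms.routeByDay.type": "org.apache.kafka.connect.transforms.TimestampRouter",
    "transforms.routeByDay.topic.format": "${topic}-${timestamp}",
    "transforms.routeByDay.timestamp.format": "yyyyMMdd",
}


def routed_index_name(topic, timestamp_ms):
    """Approximate the index name the transform would produce for one record."""
    day = datetime.fromtimestamp(timestamp_ms / 1000, tz=timezone.utc).strftime("%Y%m%d")
    return f"{topic}-{day}"


# A record on "app-logs" stamped 2024-01-15T00:00:00Z.
print(routed_index_name("app-logs", 1705276800000))  # app-logs-20240115
```

With one index per day, old log data can be dropped by deleting whole indices rather than individual documents.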

Error Handling

Configure how malformed documents are handled, with options to ignore, warn, or fail.
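As a small illustration, the three options named above map onto the connector's `behavior.on.malformed.documents` property. The validation helper below is a hypothetical convenience, not part of the connector.

```python
VALID_MALFORMED_BEHAVIORS = ("ignore", "warn", "fail")


def malformed_document_settings(behavior):
    """Return the property controlling how documents rejected by Elasticsearch are handled."""
    if behavior not in VALID_MALFORMED_BEHAVIORS:
        raise ValueError(
            f"behavior must be one of {VALID_MALFORMED_BEHAVIORS}, got {behavior!r}"
        )
    return {"behavior.on.malformed.documents": behavior}


# Log a warning and keep going instead of failing the task (a choice for this example).
error_settings = malformed_document_settings("warn")
```

Choosing `fail` surfaces bad data immediately, while `ignore` and `warn` keep the pipeline running at the cost of skipped documents.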

Tombstone Message Support

Specify the behavior for null values to enable record deletion in Elasticsearch.
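A minimal sketch of the tombstone setting follows, using the connector's `behavior.on.null.values` property. The helper is hypothetical. It also sets `key.ignore` to `false` for deletes, on the assumption that a delete has to target the document whose ID comes from the record key. Confirm this against the connector documentation for your version.

```python
VALID_NULL_BEHAVIORS = ("ignore", "delete", "fail")


def tombstone_settings(behavior):
    """Return properties for handling records with a non-null key and a null value."""
    if behavior not in VALID_NULL_BEHAVIORS:
        raise ValueError(
            f"behavior must be one of {VALID_NULL_BEHAVIORS}, got {behavior!r}"
        )
    settings = {"behavior.on.null.values": behavior}
    if behavior == "delete":
        # Assumption: the record key is used as the document ID, so it must not be ignored.
        settings["key.ignore"] = "false"
    return settings


delete_settings = tombstone_settings("delete")
```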

Performance Tuning

Configurable batch size, buffer size, and flush timeout let you optimize throughput.
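The three knobs above correspond to the connector's `batch.size`, `max.buffered.records`, and `flush.timeout.ms` properties. The sketch below assembles them with basic sanity checks. The helper, the check that the buffer holds at least one batch, and the numbers used are all illustrative assumptions rather than recommended values.

```python
def tuning_settings(batch_size, max_buffered_records, flush_timeout_ms):
    """Return throughput-related connector properties after basic validation."""
    if min(batch_size, max_buffered_records, flush_timeout_ms) <= 0:
        raise ValueError("all tuning values must be positive")
    if max_buffered_records < batch_size:
        # Our own sanity check: the buffer should be able to hold at least one full batch.
        raise ValueError("max_buffered_records should be >= batch_size")
    return {
        "batch.size": str(batch_size),                      # records per bulk request
        "max.buffered.records": str(max_buffered_records),  # records buffered per task
        "flush.timeout.ms": str(flush_timeout_ms),          # time allowed for a flush
    }


# Example values chosen only for illustration.
perf_settings = tuning_settings(batch_size=2000, max_buffered_records=20000, flush_timeout_ms=10000)
```

Larger batches generally mean fewer bulk requests, but the right values depend on document size and cluster capacity, so measure before settling on them.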