
Apache Kafka Benefits & Use Cases

Apache Kafka is arguably one of the most popular open-source stream processing systems today. Used by over 80% of the Fortune 100, it has countless advantages for any organization that benefits from real-time data streaming, event-driven architecture, data integration, or analytics.

Founded by the original creators of Kafka, Confluent offers a fully managed Kafka service, rebuilt as a cloud-native data streaming platform for greater elasticity, performance, and scalability.

Quick Intro: What is Apache Kafka?

Apache Kafka is an open-source distributed streaming platform that can simultaneously ingest, store, and process data across thousands of sources. Kafka is most commonly used to build real-time data pipelines, streaming applications, and event-driven architecture, and today thousands of companies use it for low-latency data pipelines and streaming analytics in Banking, Retail, Insurance, Healthcare, IoT, Media, and Telecom.

Apache Kafka originated at LinkedIn in 2011 as a solution for platform analytics for user activity at scale in social networking. The functionality has been extended through open source development across enterprise IT organizations to support data streaming, data pipeline management, data stream processing, and data governance across distributed systems in event-driven architecture. Kafka is open source under an Apache 2.0 license.

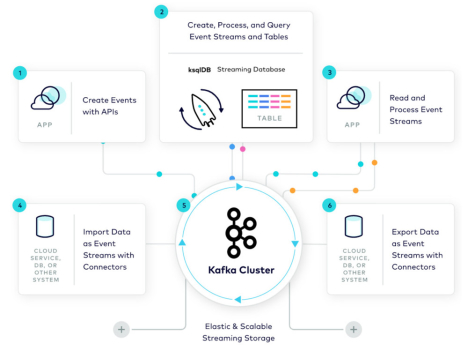

One of the major advantages of Apache Kafka is data integration across thousands of microservices with connectors that enable real-time search and analytic capabilities. This enables software development teams to introduce new features to enterprise software according to the specific requirements of their organizational logistics by sector or industry.

Who uses Kafka?

Some of the major brands currently leading Apache Kafka development in enterprise are:

- Uber

- Splunk

- Lyft

- Netflix

- Walmart

- Tesla

...just to name a few! Read on to learn more about Kafka's benefits and use cases.

Why use it?

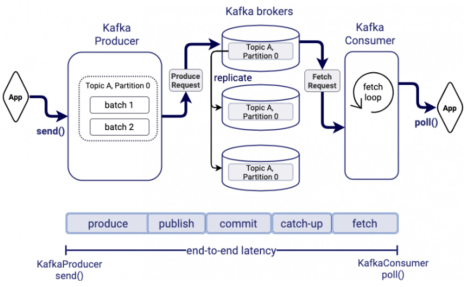

A Kafka event (also called a record or message) consists of a key, a value, a timestamp, and optional headers for metadata. With the default partitioner, events that share a key are written to the same partition, which colocates related data and preserves its order. Events can capture platform activity from websites and mobile applications or readings from IoT devices in manufacturing and environmental research. Within a partition, each event is assigned a sequential offset that is never reused, and events are retained in the log so they can be processed for both real-time and historical analytics.
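To make the parts of an event concrete, here is a minimal Python sketch. It is not the Kafka client API: the `Event` class, the `choose_partition` helper, and the device names are hypothetical, and CRC32 stands in for the hash function that Kafka's default partitioner actually uses. It only illustrates how a key can drive data colocation.

```python
import time
import zlib
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Event:
    """Illustrative stand-in for a Kafka record (not a client library class)."""
    key: bytes
    value: bytes
    timestamp_ms: int = field(default_factory=lambda: int(time.time() * 1000))
    headers: tuple = ()  # (name, bytes) pairs carrying metadata


def choose_partition(key: bytes, num_partitions: int) -> int:
    # Assumption: CRC32 is used here only for illustration. Kafka's default
    # partitioner uses a different hash, but the principle is the same:
    # equal keys map to the same partition.
    return zlib.crc32(key) % num_partitions


events = [
    Event(key=b"device-17", value=b'{"temp_c": 21.4}', headers=(("source", b"iot"),)),
    Event(key=b"device-17", value=b'{"temp_c": 21.9}'),
    Event(key=b"device-42", value=b'{"temp_c": 19.0}'),
]

# Both readings from device-17 land on the same partition.
partitions = [choose_partition(e.key, 6) for e in events]
```

Because placement depends only on the key and the partition count, all readings from one device stay together and in order, while different devices spread across the cluster.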

Kafka Benefits - Why Organizations Adopt Streaming Data Architecture:

The key benefit of Apache Kafka is that organizations can adopt streaming data architecture to build custom software services that store and process “big data” according to the particular requirements of their industry or business model. Businesses in shipping and logistics can use the same data center architecture as websites, mobile apps, IoT, and robotic devices in daily manufacturing, with platform analytics based on event-stream tracking.

Programming teams still need to fine-tune Kafka to the unique needs of each application across industry sectors, but the processing logic of the software remains the same. This allows companies to share open source code and developer resources for the Kafka platform across projects to accelerate the introduction of new features, platform functionality, and security patches with ongoing peer review.

Some of the main advantages of Apache Kafka for enterprise software development are:

Processing Speed:

Kafka implements a data processing system with brokers, topics, and APIs that scales horizontally across multi-node clusters, which can be positioned across multiple data center locations. For high-volume event streams, this design outperforms both SQL and NoSQL database storage. In benchmarks, Kafka outperforms Pulsar and RabbitMQ with lower latency in delivering real-time data across streaming data architecture.

Platform Scalability:

Apache Kafka was originally built to overcome the high latency associated with batch processing and traditional message queues at the scale of the world’s largest websites. Mean, peak, and tail latency can differ widely within the same messaging system, and those differences determine whether it can reliably support real-time workloads. Kafka’s brokers, topics, and elastic multi-cluster scalability support enterprise “big data” with real-time processing, with greater adoption than Hadoop.
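The distinction between mean, peak, and tail latency is easy to see with a small calculation. The sketch below is illustrative only: the sample values are made up, and `latency_summary` is a hypothetical helper that uses the nearest-rank method for the 99th percentile.

```python
import statistics


def latency_summary(samples_ms):
    """Return mean, p99 (nearest-rank), and peak latency for a list of samples."""
    ordered = sorted(samples_ms)
    # Nearest-rank percentile: rank = ceil(0.99 * n), computed with integers
    # to avoid floating-point rounding surprises.
    rank = (99 * len(ordered) + 99) // 100
    return {
        "mean": statistics.fmean(ordered),
        "p99": ordered[rank - 1],
        "peak": ordered[-1],
    }


# Made-up samples: most messages are fast, two are slow.
samples = [5.0] * 98 + [50.0, 400.0]
summary = latency_summary(samples)
```

With these samples the mean stays under 10 ms even though one message in a hundred takes 50 ms or more, which is why a mean alone says little about real-time behavior.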

Pre-Built Integrators:

Kafka Connect offers more than 120 pre-built connectors from open source developers, partners, and ecosystem companies. Examples include integration with Amazon S3 storage, Google BigQuery, Elasticsearch, MongoDB, Redis, Azure Cosmos DB, AEP, SAP, Splunk, and Datadog. Kafka Connect uses converters to serialize and deserialize data as it moves in and out of Kafka for advanced metrics and analytics. Programming teams can use the connector resources of Kafka Connect to accelerate application development with support for organizational requirements.
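As a rough illustration of how a connector and its converters are configured, the Python sketch below builds the JSON payload for a simple file sink connector. The connector name, topic, and file path are hypothetical placeholders; the connector and converter class names are the ones shipped with Apache Kafka, but exact settings vary by connector, so treat this as a starting point rather than a reference configuration.

```python
import json


def file_sink_connector(name, topics, path):
    """Build a Kafka Connect connector definition as a plain dict."""
    return {
        "name": name,
        "config": {
            "connector.class": "org.apache.kafka.connect.file.FileStreamSinkConnector",
            "tasks.max": "1",
            "topics": ",".join(topics),
            "file": path,
            # Converters control how record keys and values are
            # deserialized on their way out of Kafka.
            "key.converter": "org.apache.kafka.connect.storage.StringConverter",
            "value.converter": "org.apache.kafka.connect.json.JsonConverter",
            "value.converter.schemas.enable": "false",
        },
    }


# Hypothetical names, chosen only for this example.
definition = file_sink_connector("orders-to-file", ["orders"], "/tmp/orders.txt")
payload = json.dumps(definition, indent=2)
```

A payload of this shape is typically submitted to a Connect worker through its REST interface (a POST to the `/connectors` endpoint); managed services generally expose the same settings through their own console or CLI.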

Managed Cloud:

Confluent Cloud is a fully managed Apache Kafka solution with ksqlDB integration, tiered storage, and multi-cloud runtime orchestration that helps software development teams build streaming data applications with greater efficiency. By relying on a pre-installed Kafka environment that is built on enterprise best practices and regularly maintained with security upgrades, business organizations can focus on building their code without the hardships of assembling a team to manage the streaming data architecture with 24/7 support over time.

Real-time Analytics:

One of the most popular applications of data streaming technology is providing real-time analytics for business logistics and scientific research at scale. The capabilities enabled by real-time stream processing cannot be matched by other systems of data storage, which has led to the widespread adoption of Apache Kafka across diverse projects with different goals, as well as to cooperation in code development among business organizations in different sectors. Kafka delivers real-time analytics for Kubernetes with Prometheus integration.

Enterprise Security:

Kafka is governed by the Apache Software Foundation, which provides the structure for peer-reviewed security across Fortune 500 companies, startups, government organizations, and other SMEs. Confluent Cloud provides software developers with a pre-configured enterprise-grade security platform that includes Role-Based Access Control (RBAC) and Secret Protection for passwords. Structured Audit Logs allow for the tracing of cloud events to enact security protocols that protect networks from scripted hacking, account penetration, and DDoS attacks.

Confluent Cloud is pre-configured for compliance with SOC 1-3 and ISO 27001 requirements. The platform is also PCI compliant and GDPR-ready, with the ability to support HIPAA standards for healthcare records. Confluent also provides consultants who can assist enterprise organizations to upgrade or modernize their apps to event-driven architecture.

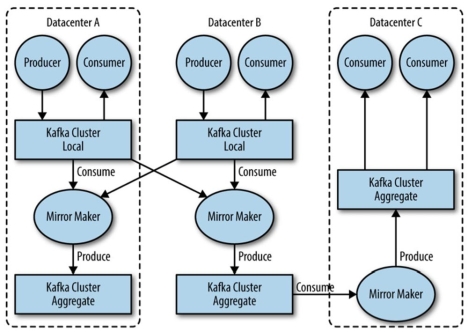

Hybrid & Multi-Cloud:

Run Apache Kafka Across Multiple Data Center Instances.

Confluent’s tiered storage and multi-cloud orchestration capabilities enable enterprise software development teams to support GDPR compliance, as well as backup and disaster recovery systems for their Kafka resources, vital for IT management in complex projects.

Kafka Use Cases by Industry

The capabilities of Apache Kafka as an event-driven architecture or messaging solution have led to widespread innovation in corporate industry. Real-time analytics from APIs or IoT devices have empowered “big data” applications to solve real-world problems. As more companies become cloud-native and data-driven organizations, Apache Kafka provides a functionality described as “the central nervous system” of enterprise organizations operating factory manufacturing, robotics, financial analysis, sales, marketing, and network analytics.

The list below highlights some of the many ways that software development teams are using Apache Kafka to build new solutions based on event-driven architecture for enterprise groups:

Banking:

Nationwide, ING Bank, Capital One, Robinhood, and other banking services use Apache Kafka for real-time fraud detection, cybersecurity, and regulatory compliance. Finance groups also use the service for stock market trading applications, such as quant platforms and security price charting using ML/DL for data analytics.

Retail:

Apache Kafka is used by companies like Walmart, Lowe’s, Domino’s, and Bosch for product recommendations, inventory management, deliveries, supply-chain optimization, and omni-channel experience creation. Ecommerce companies also use Kafka on their platforms for real-time analytics of user traffic and fraud protection.

Insurance:

Confluent has been recommended by Morgan Stanley, Bank of America, JP Morgan, and Credit Suisse for bringing innovation to the insurance industry through “big data” analysis and real-time monitoring systems that improve predictive modeling. This includes the use of data from weather, seismic, financial markets, logistics, etc.

Healthcare:

Data streaming applications in healthcare extend from IoT devices that continually monitor patients’ vital signs to record-keeping systems with HIPAA compliance. The low latency of real-time monitoring systems built on Kafka is important in hospitals, where system alerts allow medical personnel to respond to critical issues.

IoT:

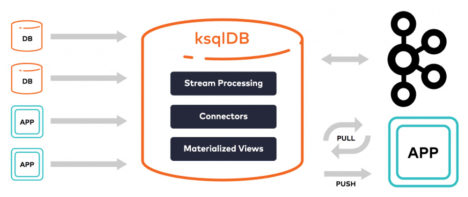

Companies like Audi, E.ON, Target, and Severstal are innovating with IoT (Internet of Things) devices using Kafka for message queues and event streams. Confluent’s MQTT Proxy is a great choice for developers to stream their data into ksqlDB for analysis. Other IoT developers use Confluent’s REST Proxy to stream data via API to hardware.

Telecom:

Apache Kafka is used extensively by telcos worldwide as part of OSS, BSS, OTT, IMS, NFV, Middleware, Mainframe, and other solutions. Confluent helps telecom companies deploy resources across on-premises data centers, edge processing, and multi-cloud architectures at scale. Netflix, 8x8, TiVo, and Sky also use Kafka for services.

While this is just a short list of companies defining “What is Kafka” by sector, the main advantage of event-driven architecture is that it can be customized to the requirements of any company and business model. Confluent, founded by the original creators of Kafka, empowers organizations with a fully managed, cloud-native Kafka with enterprise-grade security, robust Kafka connectors, governance, and scalability without added IT burden.

Confluent ksqlDB:

“Integrates out of the box with the 100+ existing Kafka connectors.”

One of the main advantages of Confluent Cloud and our managed-cloud runtimes for Apache Kafka is integration with ksqlDB for real-time data analytics, metrics, and operational logistics.

Kafka Innovation in Every Industry

Another way that Confluent is innovating to help Fortune 500 companies optimize their business operations is by removing Kafka’s dependency on Apache ZooKeeper. KIP-500 replaces ZooKeeper with a self-managed metadata quorum built into Kafka, the Quorum Controller. This has led to lower latency in stream processing at the highest rates of input and to better recovery protocols for failed Kafka clusters in production.

Confluent’s work with Apache Samza has also led to new applications using streaming data architecture with frameworks like Facebook’s React, Angular, and Ember. The list below highlights some of the ways Fortune 500 companies innovate to redefine “What is Kafka”:

Uber/Lyft:

Kafka benefits Uber and Lyft in the matching of drivers and customers at scale on a global level. Kafka architecture and real-time tracking enable the companies to build software applications which optimize ride sharing and taxi reservations. Driver pairing and route optimization can be further improved with ML.

Twitter:

Social media companies use Apache Kafka for content recommendations at scale for hundreds of millions of users. The customization of social media feeds based on user preferences combines algorithms, machine learning, omni-channel, and event streaming architecture for “big data” processing with historical archives & backups.

Splunk:

Confluent has a Splunk connector for simple, real-time integration of event data available for SIEM, security, and metrics applications. Kafka-based Application Performance Monitoring (APM) solutions for cloud SaaS and SDDC management on multi-cloud hardware are used with Prometheus, Grafana, Splunk, and Datadog.

Netflix:

As part of the platform’s transition to original content creation, Netflix adopted Apache Kafka to utilize the advantages of the publish/subscribe model in media recommendations to their user base. Netflix defines Apache Kafka as “the de-facto standard for its eventing, messaging, and stream processing needs” in the cloud.
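The publish/subscribe model mentioned here can be sketched in a few lines of Python. This is a toy in-memory log, not Kafka and not Netflix's implementation: the `TopicLog` class, the group names, and the event strings are all hypothetical. It shows the core idea that publishers append to a shared log while each subscriber group reads at its own pace by tracking its own offset.

```python
from collections import defaultdict


class TopicLog:
    """Toy append-only log with independent read positions per consumer group."""

    def __init__(self):
        self._records = []
        self._offsets = defaultdict(int)  # group name -> next offset to read

    def publish(self, value):
        # Appending never disturbs existing readers; return the new offset.
        self._records.append(value)
        return len(self._records) - 1

    def poll(self, group, max_records=10):
        # Each group advances only its own offset, so groups do not
        # compete for records the way consumers of a classic queue do.
        start = self._offsets[group]
        batch = self._records[start:start + max_records]
        self._offsets[group] = start + len(batch)
        return batch


log = TopicLog()
for activity in ("view:show-a", "view:show-b", "rate:show-a"):
    log.publish(activity)

recs_batch = log.poll("recommendations", max_records=2)
audit_batch = log.poll("audit")
recs_rest = log.poll("recommendations")
```

Here the hypothetical `audit` group receives every event even though the `recommendations` group has already consumed some of them, which is what lets one stream of viewing activity feed several downstream services.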

Walmart:

One of the best examples of the use of Apache Kafka and streaming data architecture for inventory management at global scale in the Fortune 500 is Walmart. The company has committed to event-stream architecture as a means to optimize corporate operations with data-driven methods and real-time tracking of timestamps.

Tesla:

Confluent Cloud provides real-time data streaming, API integration, and machine learning (ML) frameworks that are designed for the automotive industry. Tesla uses Apache Kafka for autonomous vehicle development, supply chain optimization, and manufacturing.

For enterprise software development teams, Confluent’s 120+ pre-built connectors are designed to integrate streaming data architecture into any IT architecture. Teams can add real-time data analytics to any process, platform, or project, including search and machine learning (ML) analytics, and work with the most common programming languages. API connections speed up data processing between third-party providers, while Kafka brokers optimize the writing and storage of event data securely. Using these resources, developers can build innovative custom software.

How Kafka is Used for Twitter's Content Recommendations

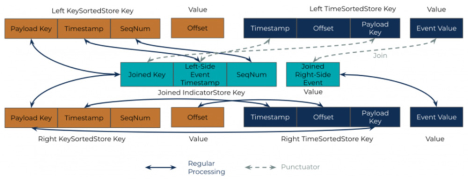

Data model for a machine learning (ML) pipeline.

The opportunity for innovation across every sector of industry is what makes Apache Kafka and streaming data architecture one of the main priorities of IT departments in the Fortune 500 today. Enterprise programming teams can jump-start projects using Confluent Cloud and use pre-built connectors to modernize legacy systems so that they run in the cloud and comply with data security, governance, and privacy regulations using hybrid or multi-cloud hardware resources.

Start Kafka in Minutes on Any Cloud with Confluent

Confluent offers complete, fully managed, cloud-native data streaming that's available everywhere your data and applications reside. With schema management, stream processing, real-time data governance, and enterprise security features, developers can maximize all the benefits Kafka has to offer within minutes.

Get started for free on any cloud, or download now. New users get $400 free to spend within their first 3 months.