Creating an IoT-Based, Data-Driven Food Value Chain with Confluent Cloud

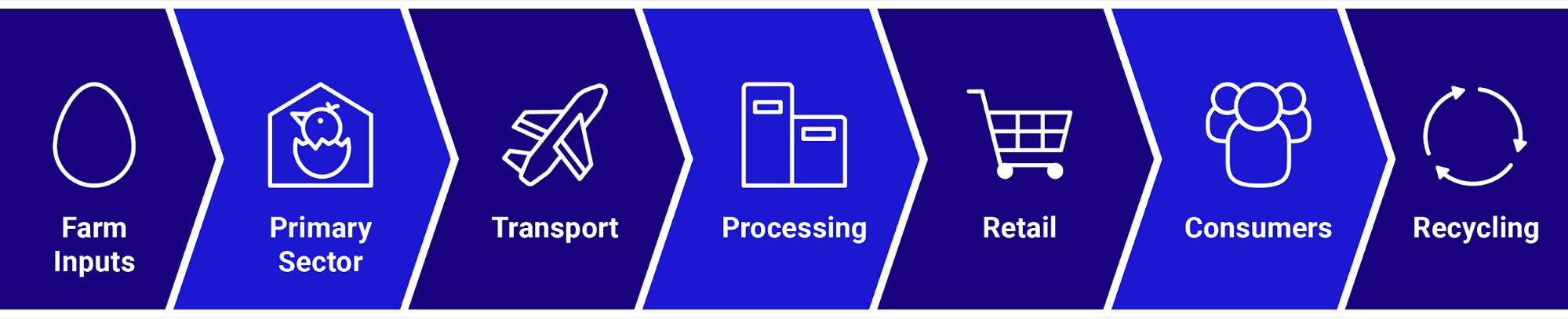

Industries are often more complex than we think. For example, the dinner you order at a restaurant, or the ingredients you buy (or have delivered) to cook at home, pass through a number of parties along the supply chain before reaching your table or kitchen.

Think about it. You have farmers. You have transportation companies. You have food processors. You have distributors (more transportation). You have retailers and restaurants. Then there are consumers. And hopefully there are recyclers for any waste generated during and at the end of this process.

Worldwide food processing machinery manufacturer BAADER sees all these necessary handoffs as opportunities to make the food value chain more efficient. By capturing Internet of Things (IoT) event data from farm to fork with Apache Kafka® and Confluent Cloud, BAADER is increasing its value as part of this chain, creating new business opportunities and enabling its partners to optimize their operations.

“By capturing events all along the food value chain, processing those events in Confluent Cloud and sharing insights with interested parties throughout the chain, we are not only increasing the value we provide but also making food production as a whole more intelligent and efficient,” says Stefan Frehse, Digitalization Software Architect for BAADER.

“Confluent Cloud provides us with a fully managed Kafka cluster for both development and production, so we can iterate the way we capture and use events with a select partner or two at each step before rolling new applications out to all interested parties along the food value chain.”

Confluent Cloud is Kafka in a fully managed, resilient, scalable event streaming service deployable in minutes. Essentially, Confluent Cloud will serve as the core digital platform for all solutions BAADER develops for the food processing industry, according to Frehse.

“Confluent Cloud gives BAADER the ability to control sharing, replication and permissions so it can act as a single source of truth across the food value chain. By making Confluent’s event streaming platform the single source of truth, we are changing the corporate culture. Teams may have different perspectives on the insights uncovered in our events, but they all recognize that these events are the cornerstone of our operations. With the connectors from Confluent, we are able to share events via a variety of interfaces with all the teams at BAADER, so everyone can embrace the importance of these events in the food value chain.”

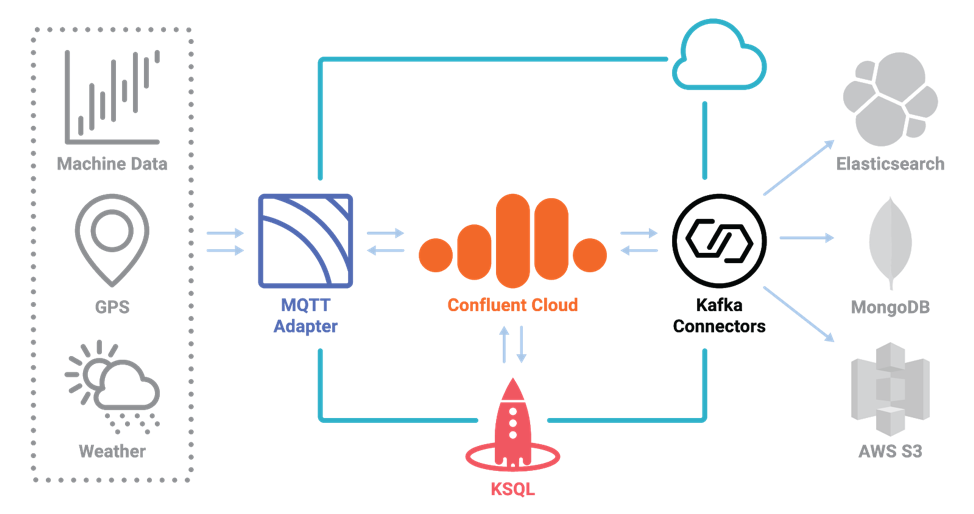

In one example application, BAADER leverages KSQL and IoT data from cameras in their processing facilities to calculate how many times a machine is empty in five-minute rolling windows. With a connector from Confluent, they push these events to Elasticsearch and Kibana for visualizations. When the number exceeds a specified threshold, an application sends an alert to factory managers about a potential drop in efficiency and which machines may require maintenance.
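The windowed count and threshold check described above can be sketched in plain Python. This is not BAADER's KSQL query, only an illustration of the logic under stated assumptions: the `EmptyEvent` shape, the machine names, and the threshold value are hypothetical, and the "rolling" windows are approximated here as non-overlapping (tumbling) five-minute windows.

```python
from collections import Counter
from typing import Iterable, List, NamedTuple, Tuple

WINDOW_SECONDS = 5 * 60  # five-minute windows, as in the article


class EmptyEvent(NamedTuple):
    """Hypothetical event: a camera saw `machine_id` empty at `ts` (epoch seconds)."""
    machine_id: str
    ts: int


def count_empty_per_window(events: Iterable[EmptyEvent]) -> Counter:
    """Count empty-machine events per (machine_id, window_start) tumbling window."""
    counts: Counter = Counter()
    for event in events:
        # Align the timestamp to the start of its five-minute window.
        window_start = event.ts - (event.ts % WINDOW_SECONDS)
        counts[(event.machine_id, window_start)] += 1
    return counts


def find_alerts(counts: Counter, threshold: int) -> List[Tuple[str, int, int]]:
    """Return (machine_id, window_start, count) for windows whose count exceeds the threshold."""
    return sorted(
        (machine, start, n)
        for (machine, start), n in counts.items()
        if n > threshold
    )


# Illustrative data only; machine names and timestamps are made up.
events = [
    EmptyEvent("filleting-1", 10),
    EmptyEvent("filleting-1", 120),
    EmptyEvent("filleting-1", 290),
    EmptyEvent("filleting-1", 310),  # falls into the next window
    EmptyEvent("skinning-2", 45),
]
counts = count_empty_per_window(events)
alerts = find_alerts(counts, threshold=2)
print(alerts)
```

In a streaming deployment the same grouping would be expressed as a windowed aggregation over a Kafka topic, with the alerting application consuming the resulting counts.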

In another example, BAADER collects delivery truck GPS data, integrates with the Google Distance Matrix API, makes a few calculations with KSQL and alerts their customers on the arrival times of delivery trucks. This is business critical for those customers’ operations.
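As a rough illustration of the arrival-time calculation, the sketch below estimates an ETA from a truck's GPS position. It deliberately does not call the Google Distance Matrix API: straight-line (haversine) distance and a fixed average speed stand in for the road travel time that such a service would return, so the result is only a crude approximation. The function names, coordinates, and the 60 km/h default are all assumptions made for the example.

```python
import math
from datetime import datetime, timedelta, timezone
from typing import Tuple

EARTH_RADIUS_KM = 6371.0  # mean Earth radius


def haversine_km(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in kilometres between two (lat, lon) points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlambda = math.radians(lon2 - lon1)
    a = (
        math.sin(dphi / 2) ** 2
        + math.cos(phi1) * math.cos(phi2) * math.sin(dlambda / 2) ** 2
    )
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def estimate_arrival(
    now: datetime,
    truck: Tuple[float, float],
    destination: Tuple[float, float],
    avg_speed_kmh: float = 60.0,  # assumed average speed, not a measured value
) -> datetime:
    """Estimate arrival time from straight-line distance and an assumed average speed."""
    distance_km = haversine_km(*truck, *destination)
    return now + timedelta(hours=distance_km / avg_speed_kmh)


# Illustrative coordinates only.
now = datetime(2020, 1, 1, 12, 0, tzinfo=timezone.utc)
eta = estimate_arrival(now, truck=(0.0, 0.0), destination=(0.0, 1.0))
print(eta.isoformat())
```

A production pipeline would replace the haversine estimate with the travel duration returned by a routing service and recompute the ETA as each new GPS event arrives.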

Layering onto this operational use case, BAADER collects weather data to pair with the GPS data. This will help companies understand how traveling conditions affect product quality, and more importantly, animal welfare. According to Frehse, no one in the industry is collecting event data at this scale.

After thorough review, the BAADER team determined that KSQL is the most complete and easy-to-use solution on the market for building event streaming applications.

“With Confluent Cloud, we get more than just a Kafka service. We have all the tools we need to collect, process and distribute high-value events to create a true event-driven food value chain. Investing in Confluent Cloud means we get a complete event streaming platform. For example, we are leveraging KSQL to build intelligent streams and apply simple filters and transformations so that we only capture high-value data and events. These aspects are important for us because we are building entirely around Kafka and expect it to become our core data architecture.”

Ultimately, BAADER wants consumers to be able to give feedback on quality via a mobile app. Then they will analyze the data in Confluent Cloud to determine where participants in the food value chain can make changes to improve product quality and the consumer experience.

“With so much event data and so many applications built on top of that data, we can completely offload management of Kafka thanks to Confluent Cloud,” according to Frehse. By working with the experts at Confluent and taking advantage of the entire Confluent Cloud ecosystem, the BAADER team is able to focus on building valuable applications for themselves and their partners rather than managing events.

Frehse cites several advantages to having built this solution with Confluent Cloud:

- Kafka makes it easy to build streaming applications in the context of IoT

- The event-driven architecture of Kafka is ideal

- It is easy to experiment at a low cost

- Kafka provides a single source of truth for a data-driven company

Fault tolerance, strict ordering, high availability and scalability were all critical factors for Frehse and BAADER as well. Choosing Confluent Cloud as the event streaming platform was ultimately just as significant.

“We only had to configure a few parameters, got started very quickly and our environment is incredibly secure,” says Frehse.

“More important though, by building our solutions for a data-driven food value chain on Confluent Cloud, we’ve received world-class support, both in terms of response time and quality, from day one. And by working with the primary contributors to the Kafka ecosystem, we discovered opportunities to leverage existing technologies rather than reinventing the wheel time and time again.”

If you’d like to learn more about Apache Kafka as a service, you can check out Confluent Cloud, a fully managed event streaming service based on Apache Kafka. Use the promo code CL60BLOG to get an additional $60 of free Confluent Cloud usage.
