Stream Processing

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method to connect database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Contributing to Apache Kafka®: How to Write a KIP

Learn how to contribute to open source Apache Kafka by writing Kafka Improvement Proposals (KIPs) that solve problems and add features! Read on for real examples.

Seamless SIEM – Part 2: Anomaly Detection with Machine Learning and ksqlDB

In part 1, we talked about how easy it is to send osquery logs to the Confluent Platform. Now, we’ll consume streams of osquery logs, detect anomalous behavior using machine […]

Integrating Elasticsearch and ksqlDB for Powerful Data Enrichment and Analytics

Apache Kafka® is often deployed alongside Elasticsearch to perform log exploration, metrics monitoring and alerting, data visualisation, and analytics. It is complementary to Elasticsearch but also overlaps in some ways, […]

Building a Materialized Cache with ksqlDB

When a company becomes overreliant on a centralized database, a world of bad things starts to happen. Queries become slow, taxing an overburdened execution engine. Engineering decisions come to a […]

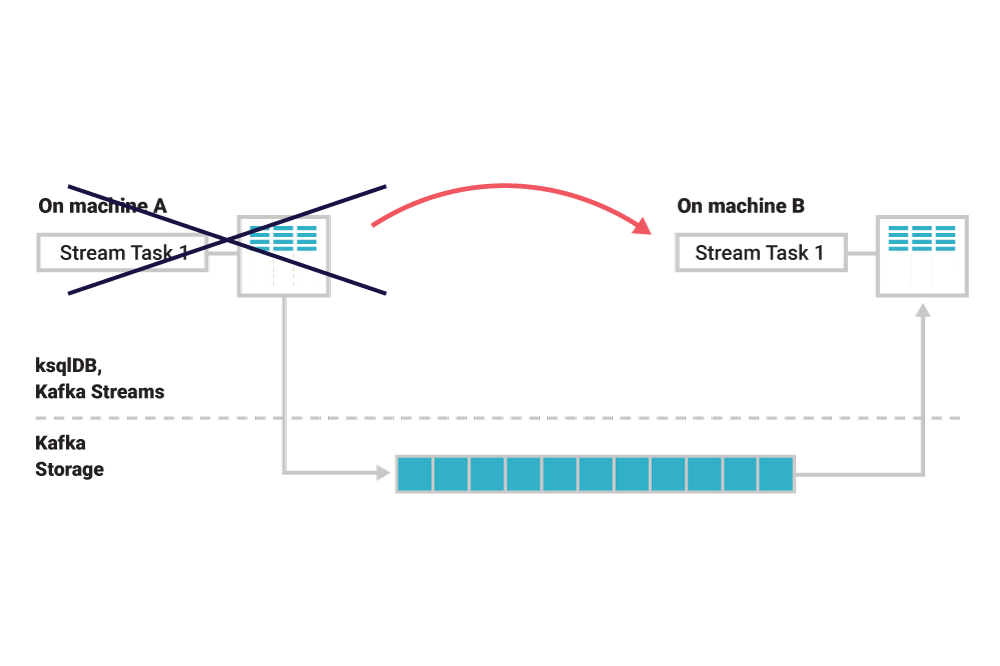

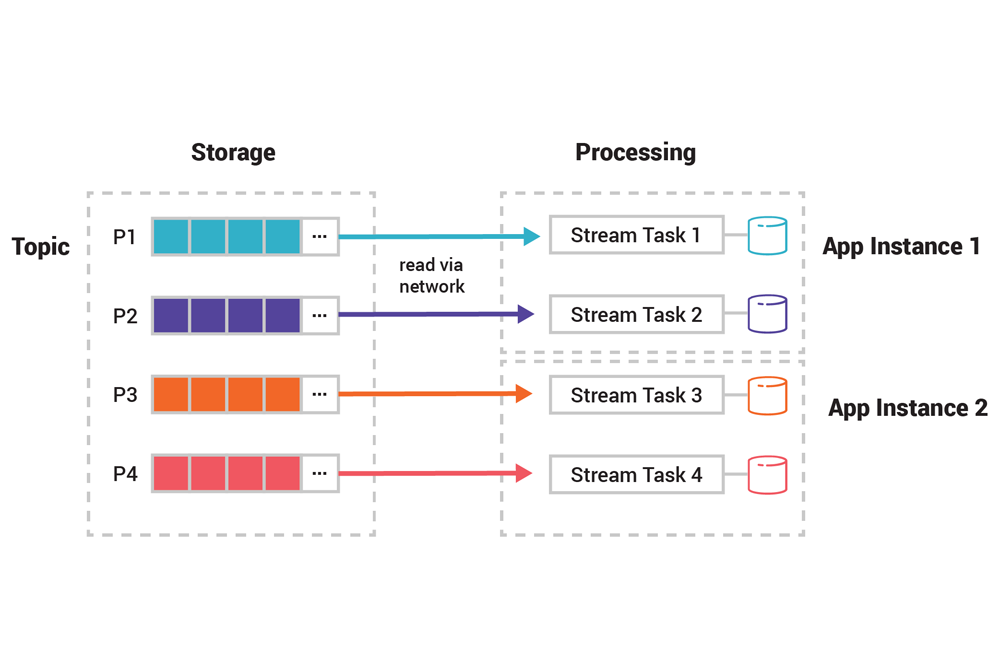

Streams and Tables in Apache Kafka: Elasticity, Fault Tolerance, and Other Advanced Concepts

Now that we’ve learned about the processing layer of Apache Kafka® by looking at streams and tables, as well as the architecture of distributed processing with the Kafka Streams API […]

Streams and Tables in Apache Kafka: Processing Fundamentals with Kafka Streams and ksqlDB

Part 2 of this series discussed in detail the storage layer of Apache Kafka: topics, partitions, and brokers, along with storage formats and event partitioning. Now that we have this […]

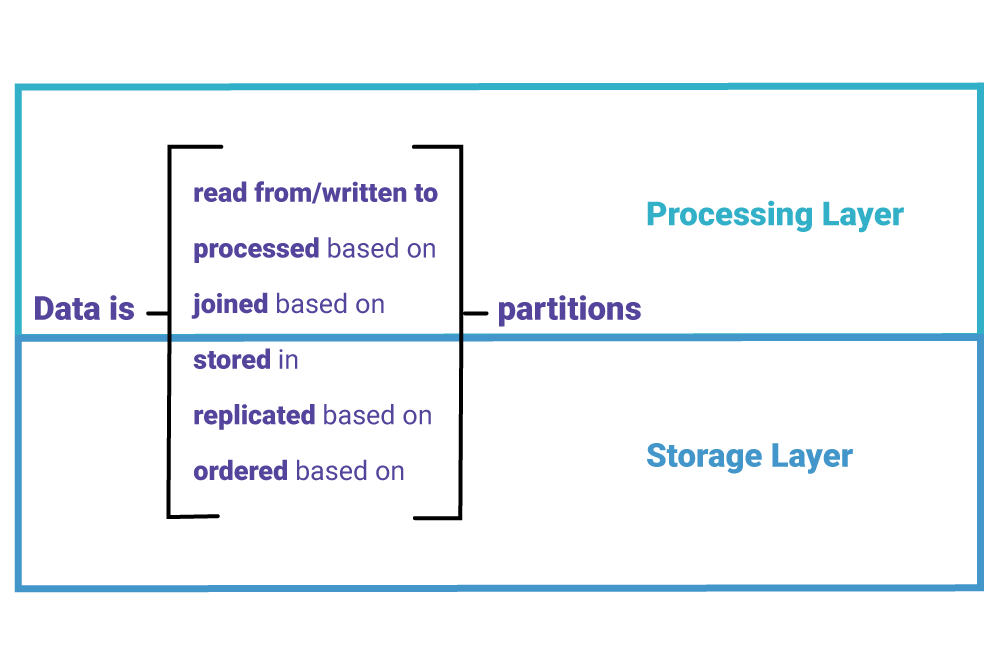

Streams and Tables in Apache Kafka: Topics, Partitions, and Storage Fundamentals

Part 1 of this series discussed the basic elements of an event streaming platform: events, streams, and tables. We also introduced the stream-table duality and learned why it is a […]

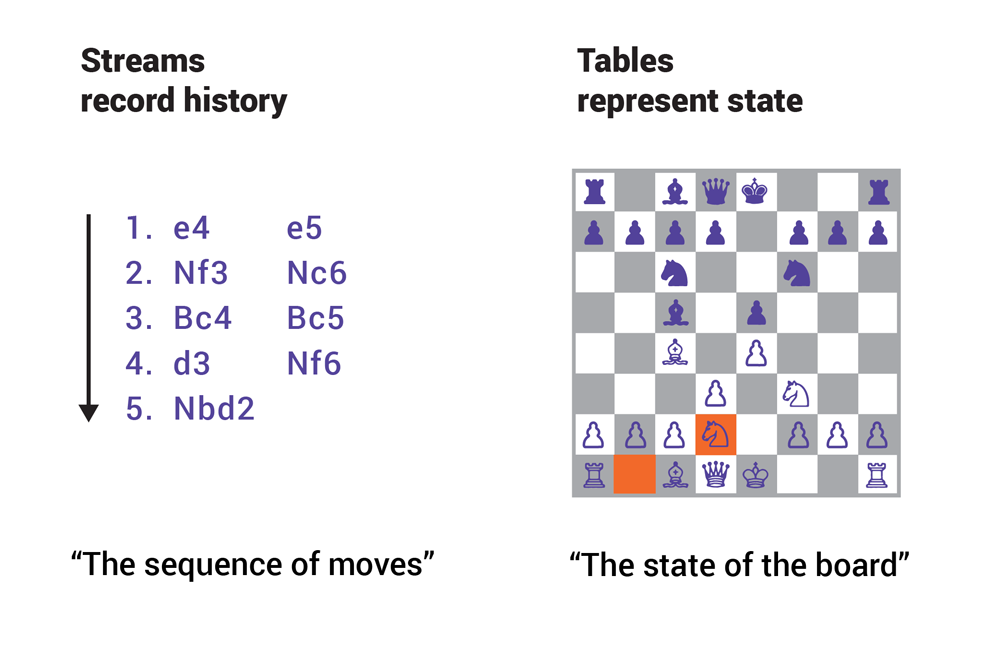

Streams and Tables in Apache Kafka: A Primer

This four-part series explores the core fundamentals of Kafka’s storage and processing layers and how they interrelate. In this first part, we begin with an overview of events, streams, tables, […]

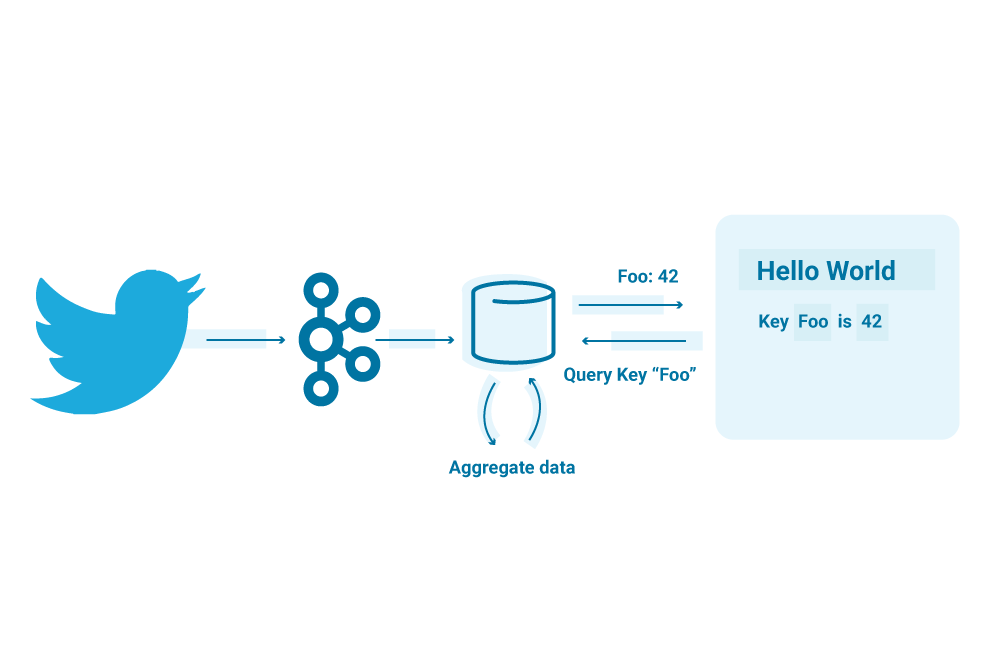

Exploring ksqlDB with Twitter Data

When KSQL was released, my first blog post about it showed how to use KSQL with Twitter data. Two years later, its successor ksqlDB was born, which we announced this […]

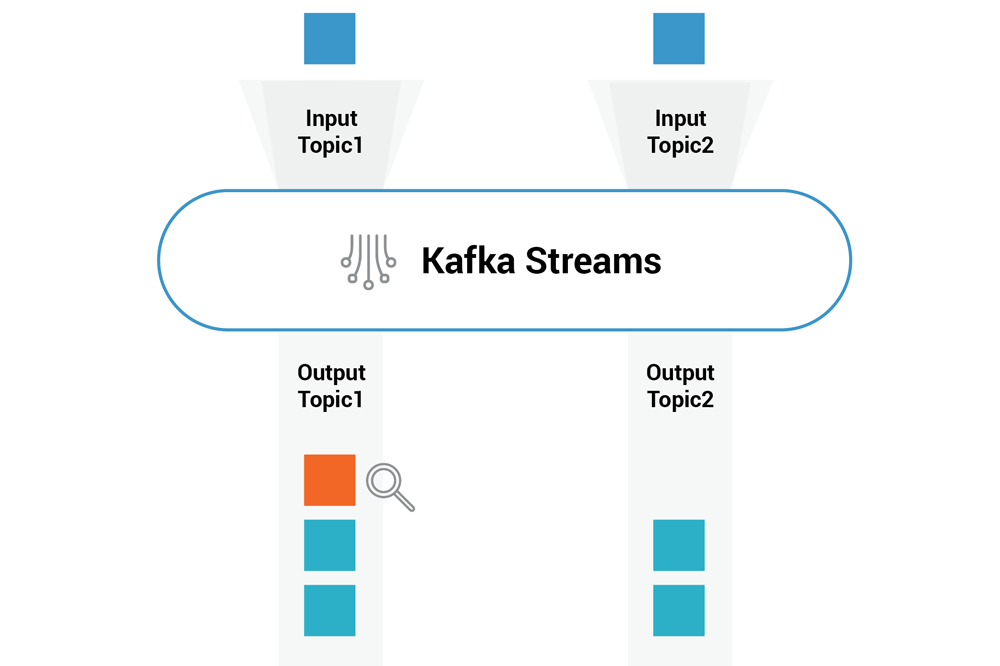

Testing Kafka Streams Using TestInputTopic and TestOutputTopic

As a test class that allows you to test Kafka Streams logic, TopologyTestDriver is a lot faster than utilizing EmbeddedSingleNodeKafkaCluster and makes it possible to simulate different timing scenarios. Not […]

Kafka Streams and ksqlDB Compared – How to Choose

ksqlDB is a new kind of database purpose-built for stream processing apps, allowing users to build stream processing applications against data in Apache Kafka® and enhancing developer productivity. ksqlDB simplifies […]

Introducing ksqlDB

Today marks a new release of KSQL, one so significant that we’re giving it a new name: ksqlDB. Like KSQL, ksqlDB remains freely available and community licensed, and you can […]

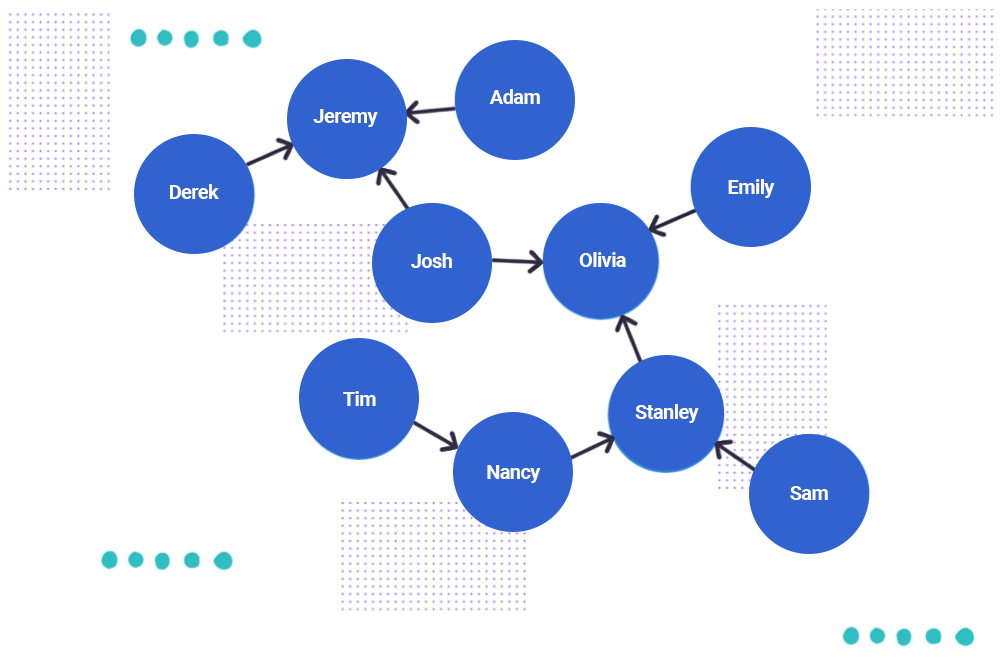

Using Graph Processing for Kafka Streams Visualizations

We know that Apache Kafka® is great when you’re dealing with streams, allowing you to conveniently look at streams as tables. Stream processing engines like ksqlDB furthermore give you the […]

ksqlDB UDFs and UDAFs Made Easy

One of ksqlDB’s most powerful features is allowing users to build their own ksqlDB functions for processing real-time streams of data. These functions can be invoked on individual messages (user-defined […]

Building Shared State Microservices for Distributed Systems Using Kafka Streams

The Kafka Streams API boasts a number of capabilities that make it well suited for maintaining the global state of a distributed system. At Imperva, we took advantage of Kafka […]