Technology

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method to connect database tables to data streams, but it comes with drawbacks. First-class data products, the next evolution of the CDC pattern, provide resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Contributing to Apache Kafka®: How to Write a KIP

Learn how to contribute to open source Apache Kafka by writing Kafka Improvement Proposals (KIPs) that solve problems and add features! Read on for real examples.

Integrating Microservices with Confluent Cloud Using Micronaut® Framework

Continuing our discussion of JVM microservices frameworks used with Apache Kafka, we introduce Micronaut. Let’s integrate a Micronaut microservice with Confluent Cloud, using Stream Governance, and test the Kafka integration with Testcontainers.

Introducing Confluent’s JavaScript Client for Apache Kafka®

Confluent announces the general availability of a new JavaScript client for Apache Kafka®, a fully supported Kafka client for JavaScript and TypeScript programmers in Node.js environments.

New with Confluent Platform 7.8: Confluent Platform for Apache Flink® (GA), mTLS Identity for RBAC Authorization, and More

This blog announces the general availability of Confluent Platform 7.8 and its latest key features: Confluent Platform for Apache Flink® (GA), mTLS Identity for RBAC Authorization, and more.

Confluent’s Customer Zero: Supercharge Lead Scoring with Apache Flink® and Google Cloud Vertex AI, Part 1

This blog details an end-to-end real-time prediction project leveraging the combined capabilities of Confluent Cloud stacks and Google Cloud Vertex AI. This project aims to deliver a streamlined solution for real-time prediction applications, catering to the evolving needs and challenges of moder...

Unify Streaming and Analytical Data with Apache Iceberg®, Confluent Tableflow, and Amazon SageMaker® Lakehouse

Confluent Tableflow, together with Amazon SageMaker Lakehouse, makes your Kafka operational data available to your AWS analytics ecosystem with minimal effort.

Securely Query Confluent Cloud from Amazon Redshift with mTLS

With both Confluent and Amazon Redshift supporting mTLS, streaming developers and architects can take advantage of a native integration that allows Amazon Redshift to query Confluent Cloud topics.

Deep Dive into Handling Consumer Fetch Requests: Kafka Producer and Consumer Internals, Part 4

Dive into the inner workings of brokers as they serve data up to a consumer.

CDC and Data Streaming: Capture Database Changes in Real Time with Debezium PostgreSQL Connector

Since its inception, change data capture (CDC) technology has significantly evolved, transitioning from a tool primarily used for database replication and migration to a cornerstone of real-time streaming. Its pivotal role in modern data architectures enables businesses to harness real-time data...

Introducing Apache Kafka® 3.9

We are proud to announce the release of Apache Kafka 3.9.0. This is a major release and the final one in the 3.x line. It will also be the final major release to feature the deprecated Apache ZooKeeper® mode. Starting with 4.0, Kafka will always run without ZooKeeper.

Shift Left: Headless Data Architecture, Part 2

Building a headless data architecture requires us to identify the work we’re already doing deep inside our data analytics plane, and shift it to the left. Learn the specifics in this blog.

Announcing the Confluent for Startups AI Accelerator Program: Empowering the First Generation of Real-Time AI Startups

The Confluent for Startups AI Accelerator Program is a 10-week virtual initiative designed to support early-stage AI startups building real-time, data-driven applications. Participants will gain early access to Confluent’s cutting-edge technology, one-on-one mentorship, marketing exposure, and...

Shift Left: Headless Data Architecture, Part 1

A headless data architecture means no longer having to coordinate multiple copies of data, and being free to use whatever processing or query engine is most suitable for the job. This blog details how it works.

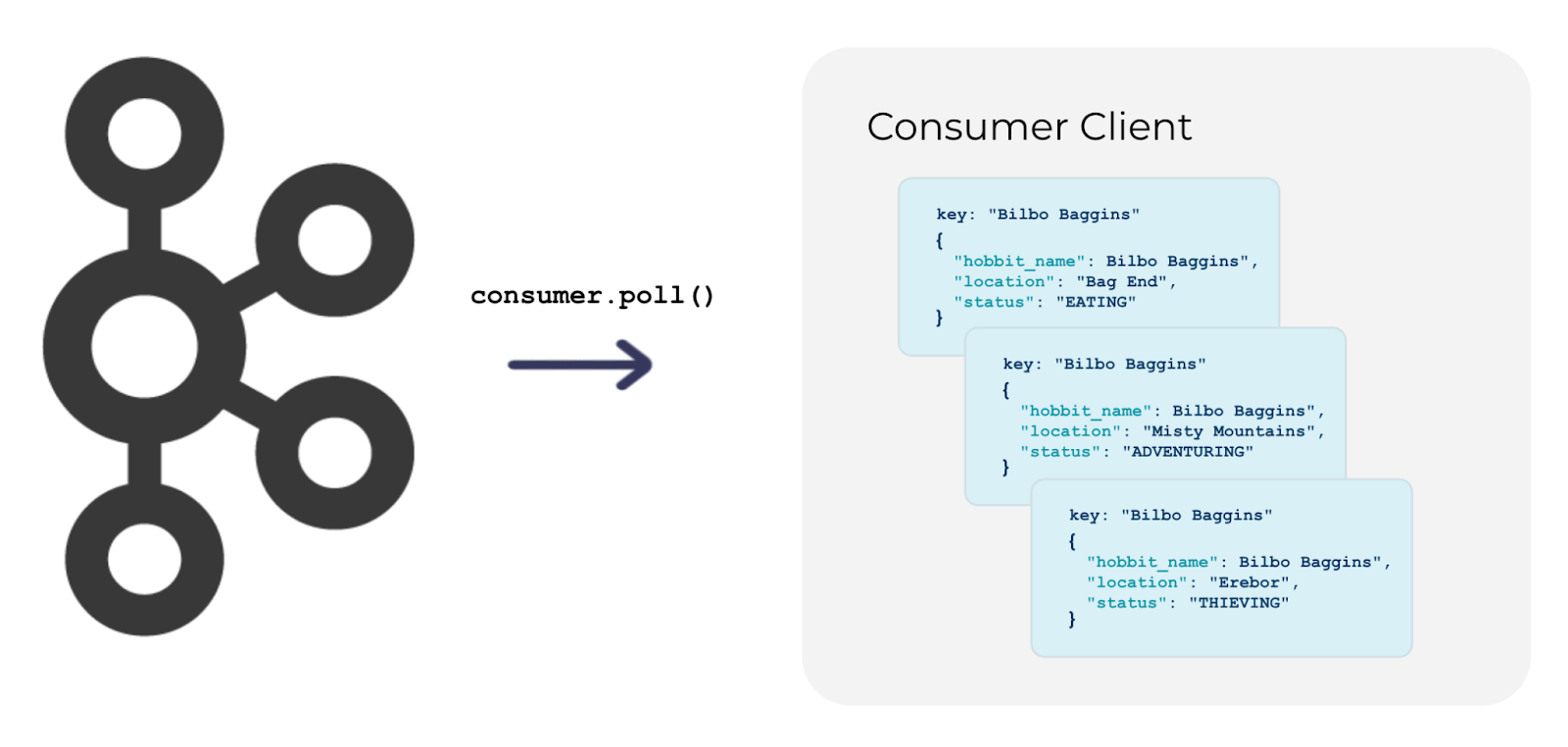

Preparing the Consumer Fetch: Kafka Producer and Consumer Internals, Part 3

In this third installment of a blog series examining Kafka Producer and Consumer Internals, we switch our attention to Kafka consumer clients, examining how consumers interact with brokers, coordinate their partitions, and send requests to read data from Kafka topics.