
Real-Time Data Integration Hub

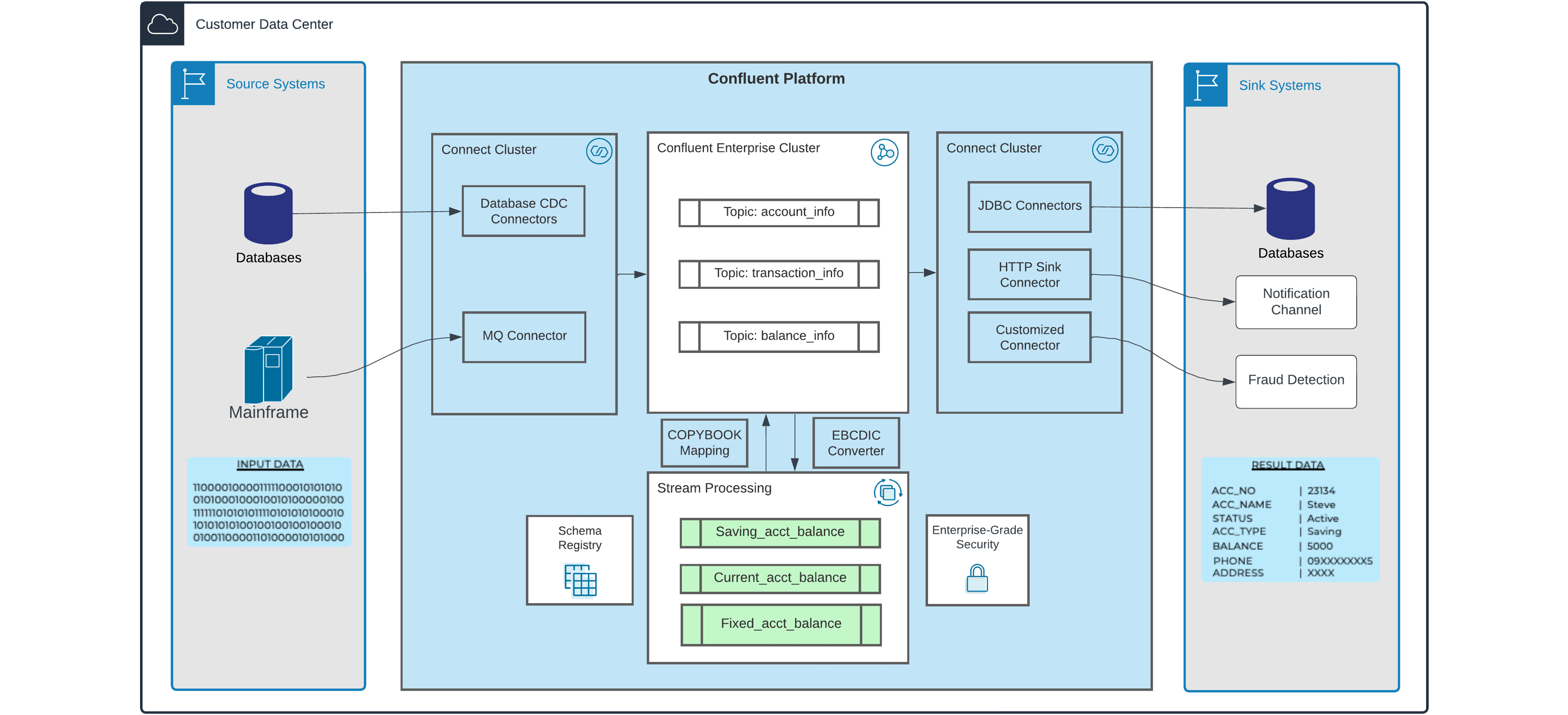

Centralize data from your source systems to your target systems on a single data streaming platform, in real time. Reduce data silos and shorten the development lifecycle with 120+ pre-built connectors. Prepare and transform data by joining, filtering, aggregating, and enriching it before sending it to your destination systems.

Reduce Data Silos and Centralize Data from a Variety of Sources and Legacy Systems

Data today is spread across a wide variety of sources and legacy systems. Legacy systems, including mainframes, are costly, monolithic, and difficult to make compatible with modern applications. A primary hurdle is extracting data from mainframes and transforming the binary data formats they use into formats that modern technologies can read. By using Confluent for stream processing, along with pre-built solutions, MFEC’s Data Integration Hub addresses this issue.

MFEC’s Data Integration Hub allows organizations to:

- Easily manage data in one centralized real-time data platform.

- Use real-time data for several use cases, e.g., detecting abnormal behavior for fraud systems or sending transaction data to a notification channel immediately.

- Encrypt data with custom SMTs (single message transforms).

Solution benefits:

Reduce mainframe cost, modernize architecture, and support new technology.

Integrate data from legacy and other systems into a centralized Confluent Platform with pre-built connectors. No coding is required.

Transform data on the fly with stream processing (e.g., convert binary formats to readable formats).
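To make the binary-to-readable conversion concrete, here is a minimal Python sketch of decoding two common mainframe encodings: EBCDIC text and packed decimal (COMP-3) numbers. It is an illustration only, not MFEC's implementation. The record layout (a 10-byte name followed by a 3-byte amount with two decimal places) and all function names are assumptions made for this example.

```python
from decimal import Decimal


def decode_ebcdic(raw: bytes) -> str:
    """Decode EBCDIC text (code page 037) and drop trailing padding spaces."""
    return raw.decode("cp037").rstrip()


def decode_packed_decimal(raw: bytes, scale: int = 0) -> Decimal:
    """Decode a packed decimal field: two digits per byte, sign in the last nibble."""
    digits = []
    for byte in raw[:-1]:
        digits.extend((byte >> 4, byte & 0x0F))
    digits.append(raw[-1] >> 4)
    if any(d > 9 for d in digits):
        raise ValueError("invalid digit nibble in packed decimal field")
    value = int("".join(str(d) for d in digits))
    if raw[-1] & 0x0F == 0x0D:  # 0xD marks a negative value
        value = -value
    return Decimal(value).scaleb(-scale)


def decode_record(raw: bytes) -> dict:
    """Hypothetical layout: bytes 0-9 EBCDIC name, bytes 10-12 packed amount."""
    return {
        "name": decode_ebcdic(raw[:10]),
        "amount": decode_packed_decimal(raw[10:13], scale=2),
    }


# Build a sample record the way a mainframe might store it.
sample = "ALICE".ljust(10).encode("cp037") + bytes([0x12, 0x34, 0x5C])
record = decode_record(sample)
```

In a streaming pipeline, logic of this kind would typically run inside a connector transform or a stream processing job rather than as a standalone script.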

Build with Confluent

This use case leverages the following building blocks in Confluent Platform:

Reference Architecture

Integrate Confluent Platform with external systems such as databases and mainframes (via IBM MQ) using pre-built connectors, including Database CDC, JDBC, HTTP, and others.
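As a rough illustration of what "no coding" integration looks like in practice, a connector is defined by configuration and registered through the Kafka Connect REST API. The Python sketch below builds such a request for a JDBC source connector. The host names, database, table, and column names are placeholders assumed for this example, and the request is constructed but not sent.

```python
import json
import urllib.request


def build_jdbc_source(name, connection_url, table, id_column, topic_prefix):
    """Build a Kafka Connect payload for a JDBC source connector."""
    return {
        "name": name,
        "config": {
            "connector.class": "io.confluent.connect.jdbc.JdbcSourceConnector",
            "connection.url": connection_url,
            "table.whitelist": table,
            # Pick up new rows by watching a strictly increasing ID column.
            "mode": "incrementing",
            "incrementing.column.name": id_column,
            "topic.prefix": topic_prefix,
            "tasks.max": "1",
        },
    }


def build_create_request(connect_url, connector):
    """Build (but do not send) the POST /connectors request."""
    return urllib.request.Request(
        url=f"{connect_url.rstrip('/')}/connectors",
        data=json.dumps(connector).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# All values below are hypothetical placeholders.
connector = build_jdbc_source(
    name="legacy-orders-source",
    connection_url="jdbc:postgresql://legacy-db.example.internal:5432/orders",
    table="orders",
    id_column="order_id",
    topic_prefix="legacy-",
)
request = build_create_request("http://localhost:8083", connector)
# urllib.request.urlopen(request) would submit it to a running Connect worker.
```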

Join, enrich, and aggregate data in real time with Flink as the stream processing engine.

Leverage pre-built and custom connectors, as well as SMTs, to send data from Confluent Platform to target systems such as databases, notification channels, and fraud detection systems.
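SMTs are attached to a connector through its configuration, using the `transforms` property and per-alias `transforms.<alias>.*` settings. The Python sketch below shows one way to add a transform chain to a sink connector configuration. It uses the built-in `MaskField` transform as a stand-in; a custom encryption SMT would be wired in the same way under its own class name. The helper `with_transforms`, the topic, the field name, and the connection URL are assumptions made for this example.

```python
def with_transforms(config, transforms):
    """Return a copy of a connector config with an SMT chain added.

    `transforms` is a list of (alias, transform_class, properties) tuples,
    applied in the order given.
    """
    updated = dict(config)
    updated["transforms"] = ",".join(alias for alias, _, _ in transforms)
    for alias, type_name, props in transforms:
        updated[f"transforms.{alias}.type"] = type_name
        for key, value in props.items():
            updated[f"transforms.{alias}.{key}"] = value
    return updated


# Hypothetical sink connector writing to a fraud detection database.
sink_config = {
    "connector.class": "io.confluent.connect.jdbc.JdbcSinkConnector",
    "topics": "transactions",
    "connection.url": "jdbc:postgresql://target-db.example.internal:5432/fraud",
}

sink_with_smt = with_transforms(
    sink_config,
    [
        (
            "maskCard",
            "org.apache.kafka.connect.transforms.MaskField$Value",
            {"fields": "card_number"},  # hypothetical field name
        )
    ],
)
```

Because the helper copies the configuration rather than mutating it, the same base connector definition can be reused with different transform chains per target system.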

Resources

Learn more at MFEC

Real-time data monitoring

Contact Us

Contact MFEC to learn more about this use case and get started.