
Elastic Storage Scalability and Infinite Data Retention for Confluent with Pure Storage

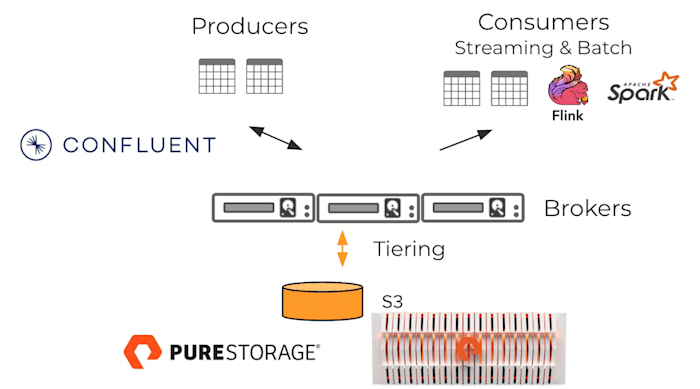

Apache Kafka and Confluent event streaming platforms enable users to react to events in real time. As Kafka becomes a system of record for all event data in an enterprise, teams need to scale and balance workloads, add Kafka brokers easily, and retain data in Kafka for long periods of time. With Pure Storage and the tiered storage capabilities in Confluent, customers can move Kafka data to Pure FlashBlade for infinite retention and scalability while maintaining high performance.

Why Pure Storage and Confluent Platform?

Streamlined Simplicity

Dramatically simplify cluster management and consolidate data pipelines.

Efficient Scalability

Keep pace with the unrelenting growth of streaming data by scaling compute and storage resources elastically and independently of each other.

Complete Performance

Gain fast queries on historical data alongside consistently fast stream processing.

Infinite Retention

Kafka and Confluent event data can be stored for months, years, or indefinitely.

Tiered Storage with Pure Storage FlashBlade

Tiered storage allows data teams to architect a more scalable Kafka deployment. Instead of being stored only on the brokers, data is moved to a scalable, S3-compatible object store, in this case Pure FlashBlade. As messages arrive, the brokers process them and retain them locally for a short period of time. Once enough data accumulates, the brokers offload it to tiered storage, freeing up space on the brokers.
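The offload behavior described above can be illustrated with a small toy model. This is only a sketch for intuition, not Confluent's implementation: the `ToyBroker` and `Segment` classes, the byte-based `hotset_limit_bytes` threshold, and the in-memory list standing in for an S3-compatible bucket are all assumptions made for the example.

```python
from collections import deque
from dataclasses import dataclass, field


@dataclass
class Segment:
    base_offset: int   # first message offset in the segment
    size_bytes: int


@dataclass
class ToyBroker:
    """Toy model of a broker with a bounded local tier (hypothetical, for illustration)."""
    hotset_limit_bytes: int
    local: deque = field(default_factory=deque)        # segments still on broker disk
    object_store: list = field(default_factory=list)   # stand-in for an S3-compatible bucket

    def local_bytes(self) -> int:
        return sum(s.size_bytes for s in self.local)

    def append(self, segment: Segment) -> None:
        """Accept a new segment, then offload the oldest ones if the local tier is over its limit."""
        self.local.append(segment)
        # The newest segment always stays local, even if it alone exceeds the limit.
        while self.local_bytes() > self.hotset_limit_bytes and len(self.local) > 1:
            self.object_store.append(self.local.popleft())

    def read(self, base_offset: int) -> str:
        """Return the name of the tier that currently holds the requested segment."""
        for tier, segments in (("local", self.local), ("object_store", self.object_store)):
            if any(s.base_offset == base_offset for s in segments):
                return tier
        raise KeyError(base_offset)


broker = ToyBroker(hotset_limit_bytes=300)
for i in range(5):
    broker.append(Segment(base_offset=i * 1000, size_bytes=100))

print(broker.read(0))      # oldest segment has been offloaded
print(broker.read(4000))   # newest segment is still on the broker
```

In this model the broker's disk usage stays bounded by the hotset limit no matter how much data is retained overall, which is why storage can grow in the object store without adding brokers. A real deployment would also typically use time-based thresholds and keep serving reads for offloaded data transparently through the broker.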