Connector

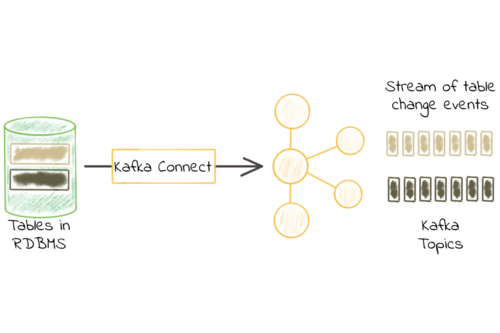

Kafka Connect Deep Dive – JDBC Source Connector

One of the most common integrations that people want to do with Apache Kafka® is getting data in from a database. That is because relational databases are a rich source […]

Easy Ways to Generate Test Data in Kafka

If you’ve already started designing your real-time streaming applications, you may be ready to test against a real Apache Kafka® cluster. To make it easy to get started with your […]

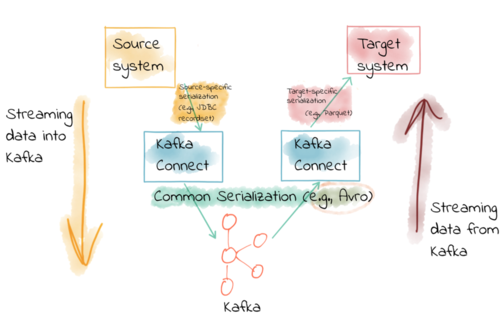

Kafka Connect Deep Dive – Converters and Serialization Explained

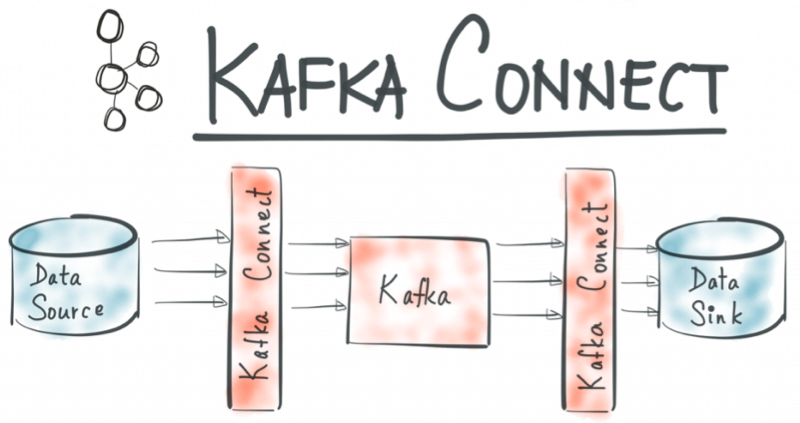

Kafka Connect is part of Apache Kafka®, providing streaming integration between data stores and Kafka. For data engineers, using it requires only JSON configuration files. There are connectors for […]

Introducing Confluent Hub

Today we’re delighted to announce the launch of Confluent Hub. Confluent Hub is a place for the Apache Kafka and Confluent Platform community to come together and share the components […]

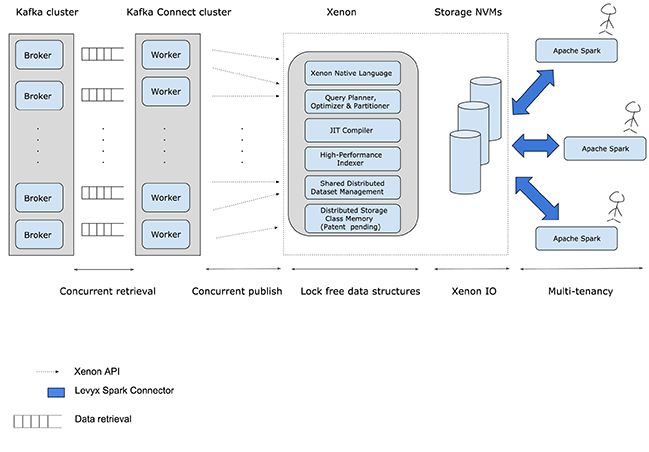

Analytics on Bare Metal: Xenon and Kafka Connect

The following post is a guest blog from Tushar Sudhakar Jee, Software Engineer at Levyx, responsible for Kafka infrastructure. This post is also available on Levyx’s blog. Abstract: As part […]

KSQL in Action: Enriching CSV Events with Data from RDBMS into AWS

Life would be simple if data lived in one place: one single solitary database to rule them all. Anything that needed to be joined to anything could be with a […]

No More Silos: How to Integrate Your Databases with Apache Kafka and CDC

One of the most frequent questions and topics that I see come up on community resources such as StackOverflow, the Confluent Platform mailing list, and the Confluent Community Slack group, […]

Kinetica Joins Confluent Partner Program and Releases Confluent Certified Connector for Apache Kafka®

This guest post is written by Chris Prendergast, VP of Business Development and Alliances at Kinetica. Today, we’re excited to announce that we have joined the Confluent Partner Program and […]

The Connect API in Kafka Cassandra Sink: The Perfect Match

The Connect API in Kafka is a scalable and robust framework for streaming data into and out of Apache Kafka, the engine powering modern streaming platforms. At DataMountaineer, we have worked on many big data and fast data projects across many industries from financial...

Kafka Connect Sink for PostgreSQL from JustOne Database

Introducing a Kafka Sink Connector for PostgreSQL from JustOne Database, Inc. JustOne Database is great at providing agile analytics against streaming data, and Confluent is an ideal complementary platform for delivering those messages...

Yes, Virginia, You Really Do Need a Schema Registry

I ran into the schema-management problem while working with my second Hadoop customer. Until then, there was one true database and the database was responsible for managing schemas and pretty […]