As part of our educational resources, Confluent Developer now offers a course designed to help you apply Confluent Cloud’s security features to meet the privacy and security needs of your organization. This blog post explores the need to implement security for your Apache Kafka® cluster, then briefly reviews the security features and advantages of using Confluent Cloud.

Remember, Kafka’s default is no security

By default, an open source Kafka cluster is unsecured. It’s worth remembering that, in addition to the obvious risks to your organization, failing to secure client data is against the law in many regions.

Securing a self-hosted Kafka cluster is a complex task that comes with high operational overhead. Any misconfiguration can leave your valuable data exposed, and your organization at risk of running afoul of the law.

Confluent Cloud automatically secures your cluster and client-broker traffic. In this course, we walk through the security features and advantages of using Confluent Cloud as your fully managed cloud-native Apache Kafka system.

Authenticating users

Unless your organization is very small, you will likely want to use single sign-on (SSO) to authenticate users.

You are probably very familiar with SSO and may have seen it being used with identity providers such as Okta, Active Directory, Google, or GitHub accounts for authentication. Confluent Cloud makes this easy, allowing for SSO using your existing SAML-based identity provider (IdP).

Authenticating applications

For authenticating applications or services, there are two options: API keys or OAuth. Use cloud API keys to control access to Confluent Cloud resources and to use the Confluent Cloud APIs available for environments, user accounts, service accounts, connectors, Metrics API, and other resources.

There are two types of API keys available:

- Resource-specific API keys give access to individual resources in a particular environment. An environment is a collection of Kafka clusters and deployed components such as Connect, ksqlDB, and Schema Registry. An organization can contain multiple environments. You may have different environments for different departments or teams, for example, all belonging to the same organization.

- Cloud API keys grant management access to the entire organization's Confluent Cloud instance, including all environments.
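As a minimal sketch of what API key authentication can look like on the client side, the helper below builds a librdkafka-style configuration dictionary in which the API key and secret are supplied as SASL/PLAIN credentials over TLS. The function name, the bootstrap address, and the key and secret values are placeholders invented for illustration; they are not taken from the course.

```python
def api_key_client_config(bootstrap_servers: str, api_key: str, api_secret: str) -> dict:
    """Build a client config that authenticates with an API key (hypothetical helper)."""
    if not api_key or not api_secret:
        raise ValueError("both the API key and the API secret are required")
    return {
        "bootstrap.servers": bootstrap_servers,
        # SASL_SSL: SASL authentication over a TLS-encrypted connection.
        "security.protocol": "SASL_SSL",
        "sasl.mechanisms": "PLAIN",
        # The API key acts as the username and the API secret as the password.
        "sasl.username": api_key,
        "sasl.password": api_secret,
    }


# Placeholder values, for illustration only.
config = api_key_client_config(
    "pkc-00000.example.confluent.cloud:9092", "EXAMPLE_KEY", "EXAMPLE_SECRET"
)
```

A dictionary like this can be passed to a client constructor such as `confluent_kafka.Producer(config)`. In practice the secret would typically come from an environment variable or a secrets manager rather than from source code.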

OAuth is the other way to provide access to your applications and services. OAuth allows you to access your resources and data without having to share or store user credentials. It’s built on cryptographically signed access tokens that allow your application or service to authenticate.
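The OAuth option can be sketched in the same style. The configuration below assumes a librdkafka-based client using the OAUTHBEARER mechanism with the OIDC client-credentials method, and it assumes the cluster and identity pool are passed as SASL extensions. The helper name and every ID and URL shown are hypothetical placeholders.

```python
def oauth_client_config(
    bootstrap_servers: str,
    client_id: str,
    client_secret: str,
    token_endpoint_url: str,
    logical_cluster: str,
    identity_pool_id: str,
) -> dict:
    """Build a client config that authenticates with OAuth tokens (hypothetical helper)."""
    return {
        "bootstrap.servers": bootstrap_servers,
        "security.protocol": "SASL_SSL",
        "sasl.mechanisms": "OAUTHBEARER",
        # The client fetches signed access tokens from the identity provider itself.
        "sasl.oauthbearer.method": "oidc",
        "sasl.oauthbearer.client.id": client_id,
        "sasl.oauthbearer.client.secret": client_secret,
        "sasl.oauthbearer.token.endpoint.url": token_endpoint_url,
        # Assumed extensions that identify the target cluster and identity pool.
        "sasl.oauthbearer.extensions": (
            f"logicalCluster={logical_cluster},identityPoolId={identity_pool_id}"
        ),
    }


# Placeholder values, for illustration only.
oauth_config = oauth_client_config(
    "pkc-00000.example.confluent.cloud:9092",
    "example-client-id",
    "example-client-secret",
    "https://idp.example.com/oauth2/token",
    "lkc-00000",
    "pool-00000",
)
```

Note that no Kafka-specific username or password appears here: the application holds credentials for the identity provider only, and presents short-lived tokens to the cluster.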

Whether you use API keys or OAuth, we highly recommend using role-based access control (RBAC) for authorization, which the course reviews in depth.

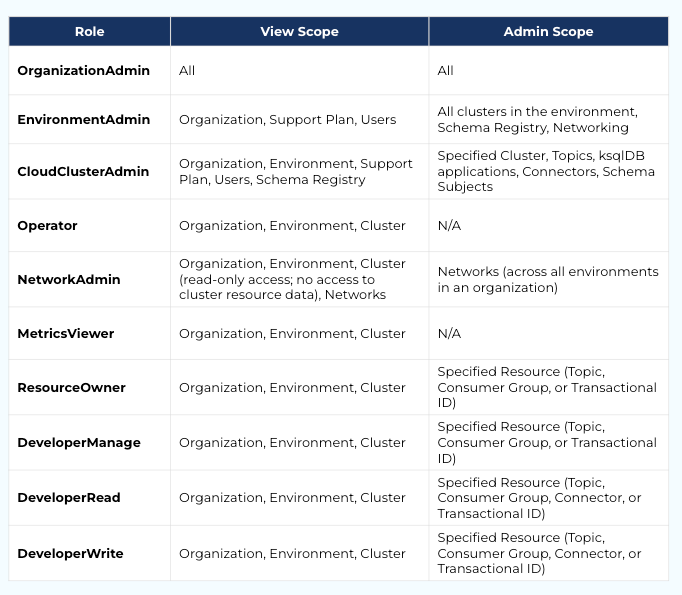

RBAC and ACLs

Your identities will need access to Confluent Cloud, whether it is to create applications or send and receive data. At the same time, your access model needs to be structured in a way where you can easily add, remove, change, and verify permissions.

ACLs are tables that store identities and what they can do or see (the resources they can access and permissions they have) within Confluent Cloud. The important thing to remember is that permissions are tied to each identity and linked to the access they have been given for each resource. If an identity changes teams or scope, you will have to update its permissions individually, resource by resource. If even one of these changes is missed or configured incorrectly, you now have identities with more access than they should have.

This problem is only made worse as the number of identities in your organization increases. Verifying permissions for compliance with laws and regulations can become quite a labor-intensive and time-consuming process, not to mention a potential security risk.

ACLs are specific to Kafka resources and don’t extend to other Confluent Cloud concepts, such as environments and organizations. Managing ACLs for a small number of identities likely isn’t a big deal. However, if you are working with a large organization with hundreds or thousands of identities, using ACLs doesn’t scale. You’re left with the second option for organizing identities, role-based access.

RBAC allows you to assign predefined roles within your organization. Identities are assigned to a role and gain access to an organization, environment, cluster, or specific Kafka resources like topics, consumer groups, and transactional IDs based on that role.

As opposed to ACLs, RBAC integrates with a centralized identity management system and allows much simpler scaling for large organizations. From a compliance perspective, it’s safer and simpler to verify your RBAC roles than to attempt to confirm the individual ACL entries for every identity.
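The difference in maintenance effort can be illustrated with a toy model. This is a conceptual sketch only, not how Confluent Cloud stores permissions: the role names, resource strings, and the `AccessModel` class are all hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical roles mapped to the (resource, operation) pairs they grant.
ROLE_PERMISSIONS = {
    "TopicReader": {("topic:orders", "READ")},
    "TopicWriter": {("topic:orders", "READ"), ("topic:orders", "WRITE")},
}


@dataclass
class AccessModel:
    # ACL style: each identity carries its own set of (resource, operation) entries.
    acls: dict = field(default_factory=dict)
    # RBAC style: each identity carries only a set of role names.
    role_bindings: dict = field(default_factory=dict)

    def acl_allows(self, identity: str, resource: str, operation: str) -> bool:
        return (resource, operation) in self.acls.get(identity, set())

    def rbac_allows(self, identity: str, resource: str, operation: str) -> bool:
        roles = self.role_bindings.get(identity, set())
        return any((resource, operation) in ROLE_PERMISSIONS[role] for role in roles)


model = AccessModel()
model.acls["alice"] = {("topic:orders", "READ"), ("topic:orders", "WRITE")}
model.role_bindings["alice"] = {"TopicWriter"}

# Alice moves to a read-only team.
# RBAC: swap one role binding.
model.role_bindings["alice"] = {"TopicReader"}
# ACLs: every individual entry must be found and removed; missing one leaves excess access.
model.acls["alice"].discard(("topic:orders", "WRITE"))
```

With one topic the two approaches look similar, but the ACL cleanup grows with every resource an identity touches, while the role swap stays a single change.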

Understanding encryption

After the authentication of users, data encryption is the next necessary component of securing your organizational data. There are two main areas that are crucial to protect from attackers:

- Your network traffic to and from clients, sometimes referred to as “data in motion”

- The data that is stored in Kafka, sometimes referred to as “data at rest”

Confluent Cloud encrypts your data at rest by default and provides Transport Layer Security (TLS) 1.2 encryption for your data in motion. In addition to these built-in security features, Confluent Cloud also provides the ability to bring your own key (BYOK) for data at rest, and secure networking options including VPC or VNet Peering, AWS and Azure Private Link, and AWS Transit Gateway.
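To make "data in motion" protection concrete from the client's point of view, here is a generic sketch using Python's standard `ssl` module. It is not specific to Kafka clients, which manage their own TLS settings; it only shows what enforcing a TLS 1.2 floor with certificate verification looks like. The function name is hypothetical.

```python
import ssl


def strict_tls_context() -> ssl.SSLContext:
    """Create a client-side TLS context that refuses anything older than TLS 1.2."""
    # The default context verifies server certificates and hostnames.
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = strict_tls_context()
```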

BYOK

Bring your own key (BYOK) can be a powerful tool for ensuring the security of your data at rest. It addresses three main concerns for organizations:

- It allows for encryption at the storage layer and lets a user revoke access to the key at will. This renders any encrypted data stored on the cloud vendor’s drives unreadable by the vendor.

- It helps secure data in a scenario where a server’s drive might be subpoenaed. Without access to the key, the data on the drive is essentially unreadable.

- It checks a box for security compliance. In a few industries, BYOK is a must-have, including banking and finance, healthcare, and government. For others, it may be a requirement from the InfoSec team.

Audit logs

Audit logs record the runtime decisions of the permission checks that occur as users attempt to take actions that are protected by ACLs and RBAC. Audit logs track operations to create, delete, and modify Confluent Cloud resources, such as API keys, Kafka clusters, user accounts, service accounts, SSO connections, and connectors.

Apache Kafka doesn't provide audit logging out of the box. But within Confluent Cloud, audit logs are captured by default and stored in a Kafka topic, and can be queried or processed like any other Kafka topic. This allows for near-real-time detection of anomalous audit log events.
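Because audit log events arrive as ordinary topic records, simple detection logic can run over them as they are consumed. The sketch below scans JSON records and reports authorization checks that were denied. The field names (`data`, `authorizationInfo`, `granted`, `authenticationInfo`, `principal`, `methodName`) and the sample records are assumptions made for illustration; check them against the actual audit log schema before relying on them.

```python
import json


def denied_authorizations(raw_events):
    """Yield (principal, method) for each event whose authorization check was denied."""
    for raw in raw_events:
        data = json.loads(raw).get("data", {})
        auth = data.get("authorizationInfo")
        # Skip events that carry no authorization decision or that were granted.
        if auth is not None and auth.get("granted") is False:
            principal = data.get("authenticationInfo", {}).get("principal", "unknown")
            yield principal, data.get("methodName", "unknown")


# Made-up sample records standing in for values read from the audit log topic.
sample_events = [
    json.dumps({"data": {
        "methodName": "kafka.Produce",
        "authenticationInfo": {"principal": "User:sa-123"},
        "authorizationInfo": {"granted": False},
    }}),
    json.dumps({"data": {
        "methodName": "kafka.Fetch",
        "authenticationInfo": {"principal": "User:sa-456"},
        "authorizationInfo": {"granted": True},
    }}),
]

denied = list(denied_authorizations(sample_events))
```

In a real pipeline the `raw_events` iterable would be fed by a consumer subscribed to the audit log topic, and repeated denials for the same principal could trigger an alert.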

In the course, we go into detail about the different audit log types and how to use them specifically to protect your organization’s data.

Maintaining compliance and privacy

Certain highly regulated industries have specific regulations and mandates when it comes to customer, vendor, or confidential data. Confluent Cloud maintains support for the following standards, reports, and regulations:

- SOC 1 Type 2, SOC 2 Type 2, and SOC 3 reports: Service Organization Control compliance for service organizations.

- PCI DSS: The Payment Card Industry Data Security Standards, concerning the processing, storing, and transmitting of payment card information.

- CSA: The Cloud Security Alliance, the leading organization dedicated to secure cloud environment compliance. Confluent Cloud has received the CSA Star Level 1 distinction.

- ISO 27001: The International Organization for Standardization 27001 framework, which includes annual surveillance audits for organizations based outside of the United States.

- Financial Services Regulation: A cross-functional stakeholder compliance initiative to evaluate the Confluent Cloud offering in the context of EMEA financial services regulations, in particular the European Banking Authority’s Guidelines on Outsourcing Arrangements as well as other financial services and insurance regulatory frameworks throughout the world. This includes:

  - Confluent Cloud – European Regulatory Positions Statement (EBA)

  - Confluent Cloud Offering Mapping – EBA Outsourcing Guidelines

  - AWS – EBA Financial Services Addendum – Summary and Customer Requests for Documentation

  - Microsoft Customer Agreement – Confluent ISV Financial Services Amendment (EBA) – Summary and Requests for Documentation

  - Confluent Cloud Services Agreement – Exit Assistance

- TISAX: For those in the German Association of the Automotive Industry, Confluent has been audited and we can provide our TISAX report upon request from confluent.io/trust-and-security

- GDPR: The General Data Protection Regulation

- CCPA: The California Consumer Privacy Act

- HIPAA: The Health Insurance Portability and Accountability Act

Compliance and privacy are constantly evolving, with new regulations and mandates being developed on a regular basis. Take the cloud security course to get the most out of the security features offered by Confluent Cloud.
