
Is Event Streaming the New Big Thing for Finance?

While Silicon Valley is always awash with new technology buzzwords, one that has become increasingly dominant in recent years is stream processing: a type of software designed to transport, process and react in real time to the massive streams of event data at the heart of large internet companies. While it isn’t entirely surprising that a company like Netflix—responsible for 37% of U.S. downstream internet traffic—would be interested in technology that processes data as it moves, what is surprising is the uptake in traditional markets like finance, which don’t typically generate the trillions-of-events-per-day workloads seen at giant technology firms. In fact, this uptake demonstrates a subtle and intriguing shift in the way that companies are reshaping their IT functions. In much the same way that the internet transformed how people are connected, today applications are revolutionizing how big companies operate, interact and, most importantly, share data.

The digital customer experience

When a customer interacts with their bank to make a payment, withdraw cash or trade stock, that single action or event triggers a slew of activity: Accounts are updated; postings hit ledgers; fraud checks are run; risk is recalculated and settlement processes are initiated. So the value of these events to the organization is highest in the moment they are created, the moment in which a broad set of operations all need to happen at once. The more widely and immediately available these events are, the easier it is for a company to adapt and innovate.

A number of upstart financial services firms were quick to notice the importance of being event driven. The UK-based bank Monzo, which focuses heavily on a mobile-first, immersive user experience, makes for a good example. When customers buy goods with their Monzo card, their mobile application alerts them to the transaction, often before the receipt has finished printing at the checkout, complete with details of how much they spent and where they spent it. The bank performs real-time categorization on purchases so that users know what they are spending their money on, and the associated mobile notification even includes a purchase-related emoji: a coffee cup for Starbucks, a T-shirt for apparel, etc.

A cynic might view this as a gimmick, but Monzo’s thriving digital ecosystem is more likely reflective of a substantial shift in customer needs. Customers no longer drive to a branch and talk to their account manager face to face. Instead, they expect a rich and personal digital experience, one that makes them feel in touch with their finances and partnered with a bank that knows what they, the customers, are doing.

But knowing your customer isn’t simply about collecting their digital footprint; it’s about interacting with it and them in real time. Financial services companies like Funding Circle and Monzo achieve this by repurposing the same streaming technologies used by technology giants like Netflix and LinkedIn. Funding Circle uses stream processing tools to, as it puts it, “turn the database inside out,” a metaphor that illustrates how the technology lets the company manipulate event streams as they move through the organization. In Monzo’s case, as soon as a payment is acquired and the balance updates, the payment event is stored in a streaming platform, which pushes that event (or any previous ones) into a wide variety of separate IT services: categorization, fraud detection, notification, spending patterns and, of course, identifying the correct and all-important emoji.
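To make the fan-out pattern concrete, here is a minimal in-memory sketch of one stored event stream feeding several independent services. It is an illustration only and not Monzo's implementation or any streaming platform's API: `EventLog`, `PaymentEvent`, `notify`, the merchant names and the emoji lookup are all hypothetical. The point it shows is that each service keeps its own read position, so a service that joins late can still read every earlier event.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass(frozen=True)
class PaymentEvent:
    """Hypothetical payment event; field names are assumptions."""
    card_id: str
    merchant: str
    amount_pence: int


@dataclass
class EventLog:
    """Append-only log in which each consumer tracks its own read offset."""
    events: list = field(default_factory=list)
    offsets: dict = field(default_factory=lambda: defaultdict(int))

    def append(self, event):
        self.events.append(event)

    def poll(self, consumer):
        """Return the events this consumer has not yet seen, then advance it."""
        start = self.offsets[consumer]
        unseen = self.events[start:]
        self.offsets[consumer] = len(self.events)
        return unseen


# Hypothetical merchant-to-emoji lookup (coffee cup, T-shirt).
EMOJI = {"Starbucks": "\u2615", "Uniqlo": "\U0001F455"}
DEFAULT_EMOJI = "\U0001F4B3"  # credit card


def notify(event):
    """Build the text of a mobile notification for one payment."""
    emoji = EMOJI.get(event.merchant, DEFAULT_EMOJI)
    return f"{emoji} GBP {event.amount_pence / 100:.2f} at {event.merchant}"


log = EventLog()
log.append(PaymentEvent("card-1", "Starbucks", 320))
log.append(PaymentEvent("card-1", "Uniqlo", 1990))

# The notification service reads the two events stored so far.
notifications = [notify(e) for e in log.poll("notification")]

log.append(PaymentEvent("card-1", "Starbucks", 450))

# A fraud service that starts later still sees the full history,
# while the notification service only receives the new event.
fraud_seen = log.poll("fraud")
new_notifications = [notify(e) for e in log.poll("notification")]
```

Because the log is stored rather than consumed destructively, adding a new service in this sketch requires no change to the services that already read from it.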

But being able to adapt a stream of business events with a stream processor isn’t just about upstarts leveraging coffee cup GIFs and immersive user experiences. More traditional institutions like ING, Royal Bank of Canada (RBC) and Nordea have made the shift to streaming systems, too. What makes their approach interesting is their interpretation of the event stream, not as some abstract piece of technology infrastructure, but rather as a mechanism for modeling the business itself. All financial institutions have an intrinsic flow that is punctuated with real-world events, be it the processing of a mortgage application, a payment or the settlement of an interest rate swap. By rethinking their business not as a set of discrete applications or silos but rather as an evolving flow of business events, they have created a more streamlined and reactive IT service that better models how the business actually works in a holistic sense.

The introduction of the MiFID II regulation, which requires institutions to report trading activity within a minute of execution, presented a useful litmus test. This was notoriously difficult for many banks to adhere to due to their siloed, fragmented and batch-oriented IT systems. But for the banks that had already installed real-time event streaming and plumbed it across all their product-aligned silos, the task was far simpler.

From data warehousing to event streaming

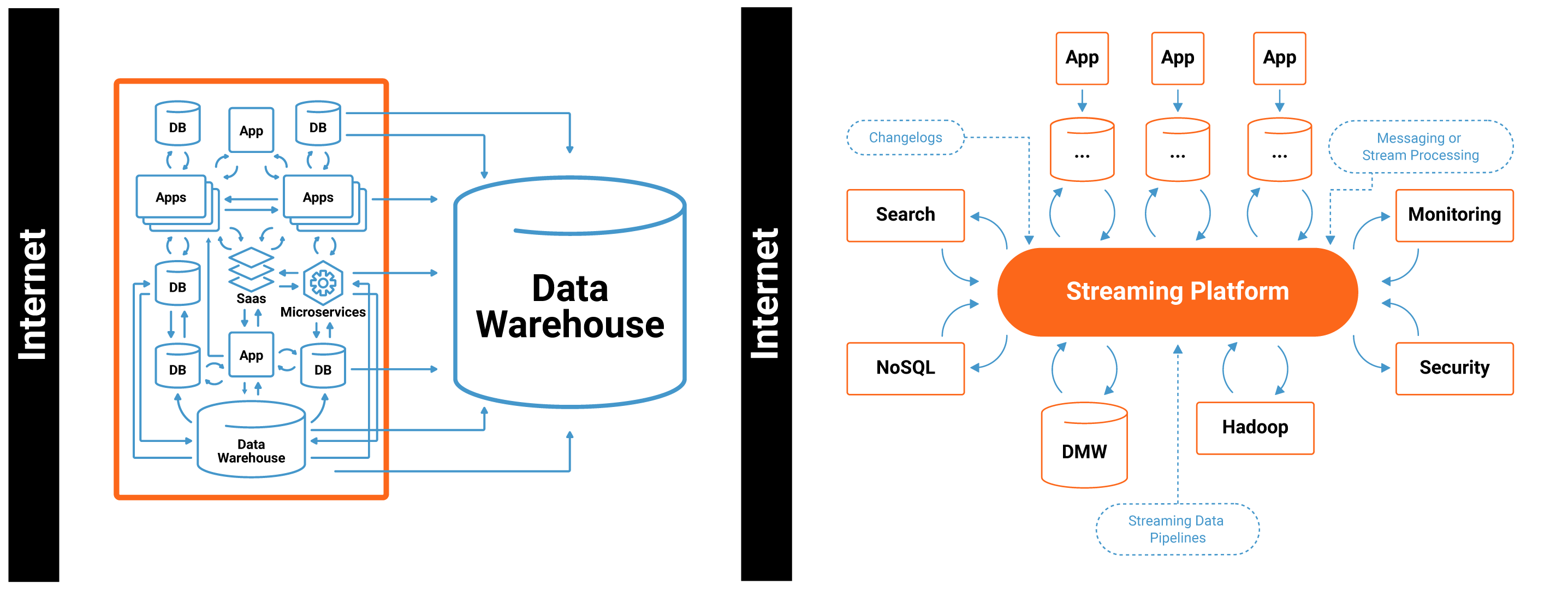

While streaming technologies change the way organizations communicate internally, they also change the way they store and share data. Today’s streaming systems have as much in common with distributed databases as they do with traditional messaging systems—they store data long term, support streaming SQL queries and scale almost indefinitely, leading to an entirely new category of data technology.

This is the most recent in a long line of data technology step changes that finance firms have adopted, each correcting a problem left by the last. Data warehousing lets companies create a single joined-up, high-quality view from a plethora of databases dotted around the organization. Big data technologies refined this approach, but the implementation patterns remained broadly the same: large semi-static data repositories positioned in the aft of the company and crawled daily or monthly to produce management or analytic insight.

While this is acceptable for periodic reports, the role of data in the modern bank has expanded significantly. Finance firms today use machine learning models to automate decisions based on data sourced from various corners of the company, and they create new applications and “microservices” that provide collaborative capabilities enterprise-wide, cutting across silos and unifying customer experiences. All these initiatives require rich, high-quality, real-time datasets if their time to market is to stay low.

To provide these efficiency gains, a number of financial institutions both big and small have repositioned their company-wide datasets forward in the organization, shifting their focus away from collecting a high-quality dataset in one place and toward delivering it to many systems company-wide. For the companies that do this well, data becomes a self-service commodity.

In one large bank, starting a new project is as simple as logging into a portal, picking the relevant events (trades, books, cash flows, etc.) and pushing them into an application or database located on the bank's private cloud. This works across datacenters, geographies and, in the future, cloud providers. So, while streaming systems bring real-time connectivity, they also provide unified data enablement, making data a self-service commodity available on a global scale.

Unlocking agility from the assets within

While streaming lets banks like ING or RBC be more nimble and respond instantaneously to their customers, a potentially greater benefit comes from an increased ability to adapt and evolve the investments they have already made.

For the last two decades, IT culture has shifted towards more agile approaches for building and delivering software. Waterfall processes, where requirements are locked down before software development commences, have been replaced with more iterative approaches where new software is developed in short, often week-long cycles, after which it is visible, testable and available for feedback. These methods are beneficial because they reassign the notion of value away from fixed goals (e.g., software that does ABC delivered on date XYZ) and toward process goals (e.g., a process that builds software incrementally), letting customers provide fast feedback and allowing the business to redefine and optimize the end product as the world around it changes.

This mindset tends to work well for individual software projects, but for most established enterprises—already backed by many billions of dollars of technology investment, built up over decades of existence—embracing the same kind of agility at an organizational level seems impossible. In fact, the boardrooms of financial services companies are far more likely to be graced with proposals for reinvention through the latest shiny new software platform than the less bonus-optimized steps needed to move an aging technology stack incrementally into the future.

But one of the greatest ills that face the IT industry today is attributing the dysfunction of an IT organization to “dysfunctional software,” when “dysfunctional data” is far more culpable. A company may have best-in-class software engineers, but if the first thing they have to do when they start a new project is identify, collect, import and translate a swathe of flawed and hard-to-access corporate datasets, it is reasonable to expect the same painful release schedules seen in the days of waterfall, not to mention the likelihood that those best-in-class software engineers will migrate elsewhere.

Companies that take the stream processing route observe a somewhat different dynamic, because event streams breed agility. These platforms come with tools that transform static databases into event streams, unlocking data hidden deep inside legacy systems and connecting it directly to applications company-wide. For companies like RBC, this means pulling data out of their mainframe and making it available to any project that needs it, both immediately and retrospectively. The insight is simple but powerful: If the data is always available, then the organization is always free to evolve.
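The idea of turning a static database into an event stream can be sketched in a few lines. The example below is a simplified assumption, not how RBC or any particular platform does it: it derives change events by comparing two snapshots of a table, whereas production tools more commonly read the database's own change log. The function names `diff_to_events` and `replay`, and the account data, are hypothetical.

```python
def diff_to_events(before, after):
    """Compare two table snapshots (dicts keyed by primary key) and
    return the change events that turn `before` into `after`."""
    events = []
    for key in sorted(after.keys() - before.keys()):
        events.append({"op": "insert", "key": key, "value": after[key]})
    for key in sorted(before.keys() & after.keys()):
        if before[key] != after[key]:
            events.append({"op": "update", "key": key, "value": after[key]})
    for key in sorted(before.keys() - after.keys()):
        events.append({"op": "delete", "key": key, "value": None})
    return events


def replay(events):
    """Rebuild table state by applying change events in order."""
    table = {}
    for event in events:
        if event["op"] == "delete":
            table.pop(event["key"], None)
        else:
            table[event["key"]] = event["value"]
    return table


# Hypothetical account balances held in a legacy system on two days.
monday = {"acc-1": 100, "acc-2": 250}
tuesday = {"acc-1": 80, "acc-3": 40}

# The stream starts from an empty table, then records each day's changes.
stream = diff_to_events({}, monday) + diff_to_events(monday, tuesday)

# A new project can rebuild the current state, or an earlier one,
# purely from the stored events.
current_state = replay(stream)
monday_state = replay(stream[:2])
```

Replaying a prefix of the stream is what "retrospectively" means in this sketch: the history is kept, so a project that starts later can reconstruct the table as it stood at an earlier point.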

The implication for the embattled incumbents of the finance industry is that their best strategy may not be to beat the upstarts at their own game. Instead, they are better off unlocking the hidden potential of the assets that they already own. As Mike Krolnik, RBC’s head of engineering for enterprise cloud, put it: “We needed a way to rescue data off of these accumulated assets, including the mainframe, in a cloud native, microservice-based fashion.” This means rethinking IT architectures, not as a collection of independent islands that feed some far-off data warehouse but as a densely connected organism, more like a central nervous system that collects and connects all company data and moves it in real time. So, while buzzword bingo might point to a future of internet velocity datasets, the more valuable benefits of streaming platforms lie in the subtler, systemic effects of denser data connectivity. This may well pass our data-obsessed technology community by, but the wise money is on the long game, and the long game is won with value.

Interested in more?

- Read the white paper Top 3 Use Cases for Real Time Streams in Financial Services Architectures

- Watch our video on digital replatforming in the financial services industry

- Learn about real-time streams for growth, regulation and risk

- Find out how streaming data empowers RBC to be data driven

- Download Confluent Platform, the leading distribution of Apache Kafka®

- See a version of this post originally featured on Finextra
