Apache Kafka

Data Products, Data Contracts, and Change Data Capture

Change data capture (CDC) is a popular method to connect database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters – Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Contributing to Apache Kafka®: How to Write a KIP

Learn how to contribute to open source Apache Kafka by writing Kafka Improvement Proposals (KIPs) that solve problems and add features! Read on for real examples.

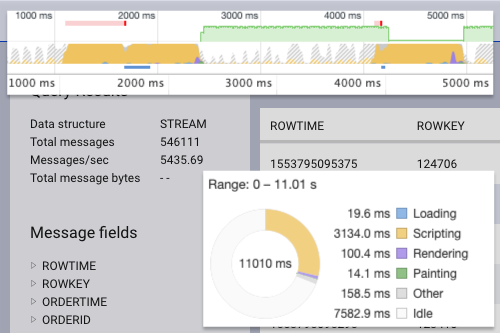

Consuming Messages Out of Apache Kafka in a Browser

Imagine a fire hose that spews out trillions of gallons of water every day, and part of your job is to withstand every drop coming out of it. This is […]

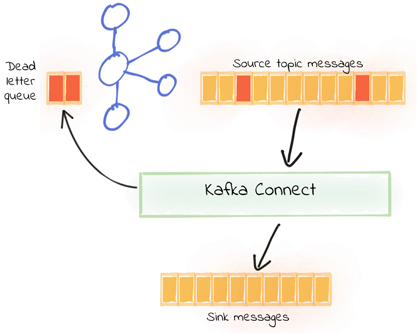

Kafka Connect Deep Dive – Error Handling and Dead Letter Queues

Kafka Connect is part of Apache Kafka® and is a powerful framework for building streaming pipelines between Kafka and other technologies. It can be used for streaming data into Kafka […]

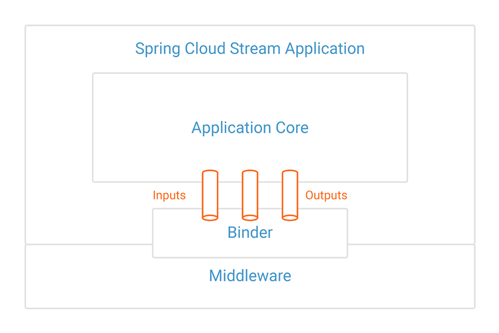

Spring for Apache Kafka Deep Dive – Part 2: Apache Kafka and Spring Cloud Stream

On the heels of part 1 in this blog series, Spring for Apache Kafka – Part 1: Error Handling, Message Conversion and Transaction Support, here in part 2 we’ll focus […]

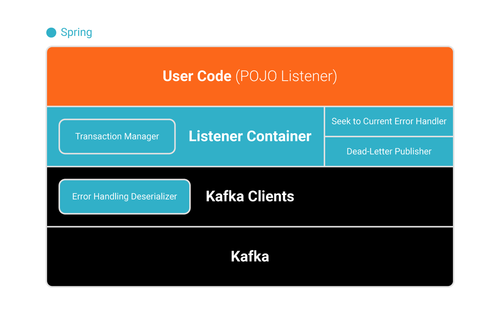

Spring for Apache Kafka Deep Dive – Part 1: Error Handling, Message Conversion and Transaction Support

Following on from How to Work with Apache Kafka in Your Spring Boot Application, which shows how to get started with Spring Boot and Apache Kafka®, here we’ll dig a […]

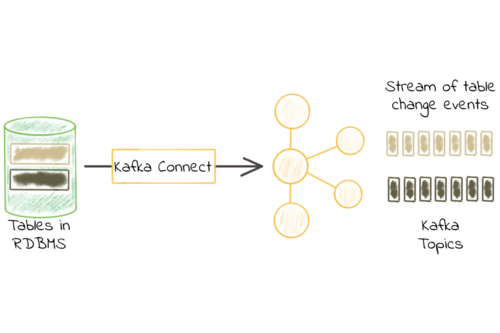

Kafka Connect Deep Dive – JDBC Source Connector

One of the most common integrations that people want to do with Apache Kafka® is getting data in from a database. That is because relational databases are a rich source […]

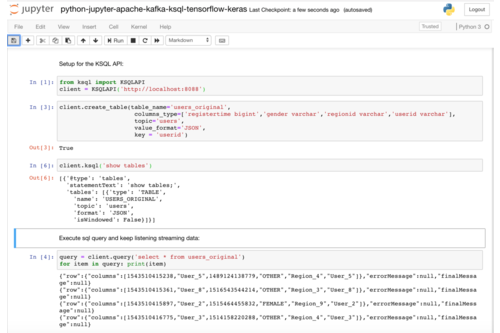

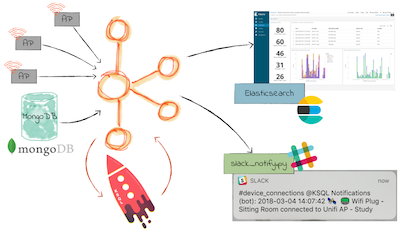

Machine Learning with Python, Jupyter, KSQL and TensorFlow

Building a scalable, reliable and performant machine learning (ML) infrastructure is not easy. It takes much more effort than just building an analytic model with Python and your favorite machine […]

Easy Ways to Generate Test Data in Kafka

If you’ve already started designing your real-time streaming applications, you may be ready to test against a real Apache Kafka® cluster. To make it easy to get started with your […]

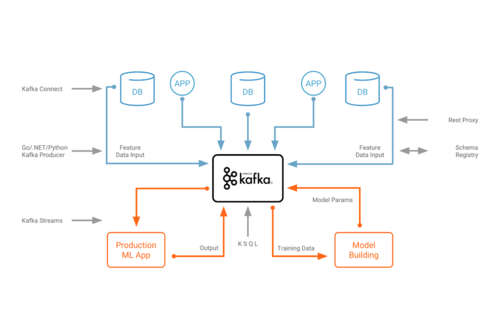

Using Apache Kafka to Drive Cutting-Edge Machine Learning

Machine learning and the Apache Kafka® ecosystem are a great combination for training and deploying analytic models at scale. I had previously discussed potential use cases and architectures for machine […]

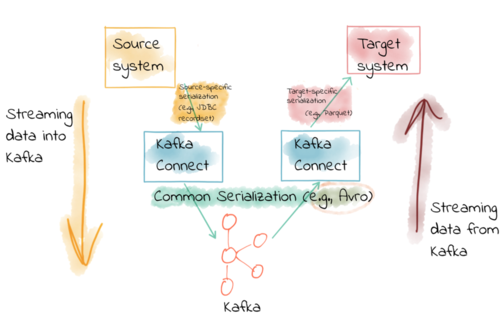

Kafka Connect Deep Dive – Converters and Serialization Explained

Kafka Connect is part of Apache Kafka®, providing streaming integration between data stores and Kafka. For data engineers, it just requires JSON configuration files to use. There are connectors for […]

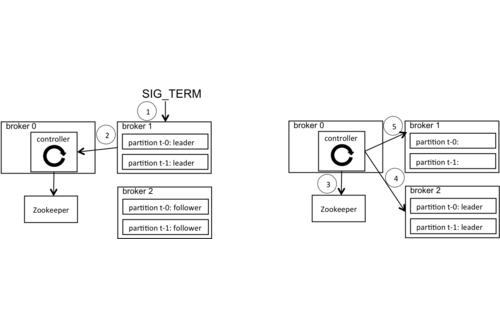

Apache Kafka Supports 200K Partitions Per Cluster

In Kafka, a topic can have multiple partitions to which records are distributed. Partitions are the unit of parallelism. In general, more partitions lead to higher throughput. However, there are […]

How to Work with Apache Kafka in Your Spring Boot Application

Choosing the right messaging system during your architectural planning is always a challenge, yet one of the most important considerations to nail. As a developer, I write applications daily that […]

Apache Kafka vs. Enterprise Service Bus (ESB) – Friends, Enemies or Frenemies?

Typically, an enterprise service bus (ESB) or other integration solutions like extract-transform-load (ETL) tools have been used to try to decouple systems. However, the sheer number of connectors, as well […]

We ❤️ syslogs: Real-time syslog processing with Apache Kafka and KSQL – Part 3: Enriching events with external data

Using KSQL, the SQL streaming engine for Apache Kafka®, it’s straightforward to build streaming data pipelines that filter, aggregate, and enrich inbound data. The data could be from numerous sources, […]

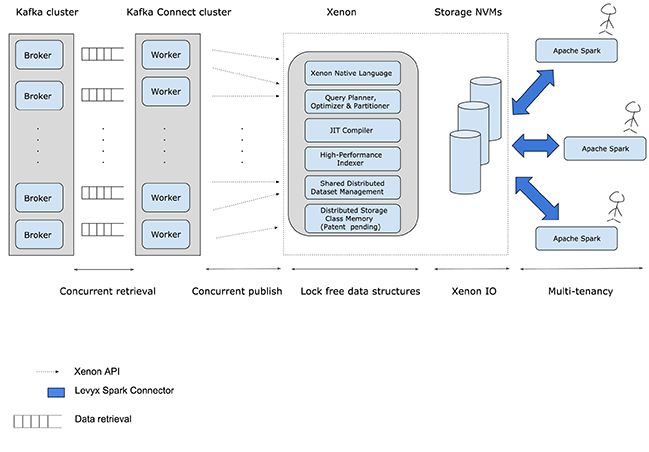

Analytics on Bare Metal: Xenon and Kafka Connect

The following post is a guest blog from Tushar Sudhakar Jee, Software Engineer at Levyx, responsible for Kafka infrastructure. You may also find this post on Levyx’s blog. Abstract: As part […]