Analyze Factory Floor Telemetry Data for Anomalies in Real Time

Using Confluent Cloud's managed Kafka clusters and Flink service, we can analyze factory floor robot telemetry data to identify performance bottlenecks or potential equipment failures, enabling proactive maintenance and optimized robot performance.

Optimize Factory Floor Robots with Streaming Analytics Using Confluent Cloud’s Apache Kafka and Flink

Modern automated factory floors can have hundreds of robots performing tasks such as picking and placing objects, assembling parts, packaging, welding, painting, and cleaning. Streaming each robot's telemetry into a real-time analytics platform such as Confluent Cloud's Kafka clusters and Flink SQL gives continuous insight into robot health and performance. Useful telemetry signals include:

- Joint Positions: Monitor the joint positions of the robot arm. Deviations from expected positions during movement can indicate binding or resistance.

- Motor Currents: Monitor the current draw of the motors responsible for robot arm movement. Increased current draw compared to baseline values for specific movements could signal higher friction or resistance.

- Cycle Time (seconds): Increased cycle times compared to normal operation could indicate binding or resistance slowing down the movement.

- Fluctuating Power Consumption (kW): Large deviations from the expected power consumption pattern could suggest internal component malfunction, causing increased energy usage.

- Throughput (units/minute): Number of units processed per minute. Throughput that falls below the normal operating rate indicates degraded performance.

Analyzing these signals in real time makes it possible to:

- Identify and address potential issues before they escalate into major malfunctions.

- Optimize robot performance and efficiency.

- Schedule preventive maintenance to avoid costly downtime.

- Improve overall robot reliability and safety.

Build with Confluent

This use case leverages the following building blocks in Confluent Cloud:

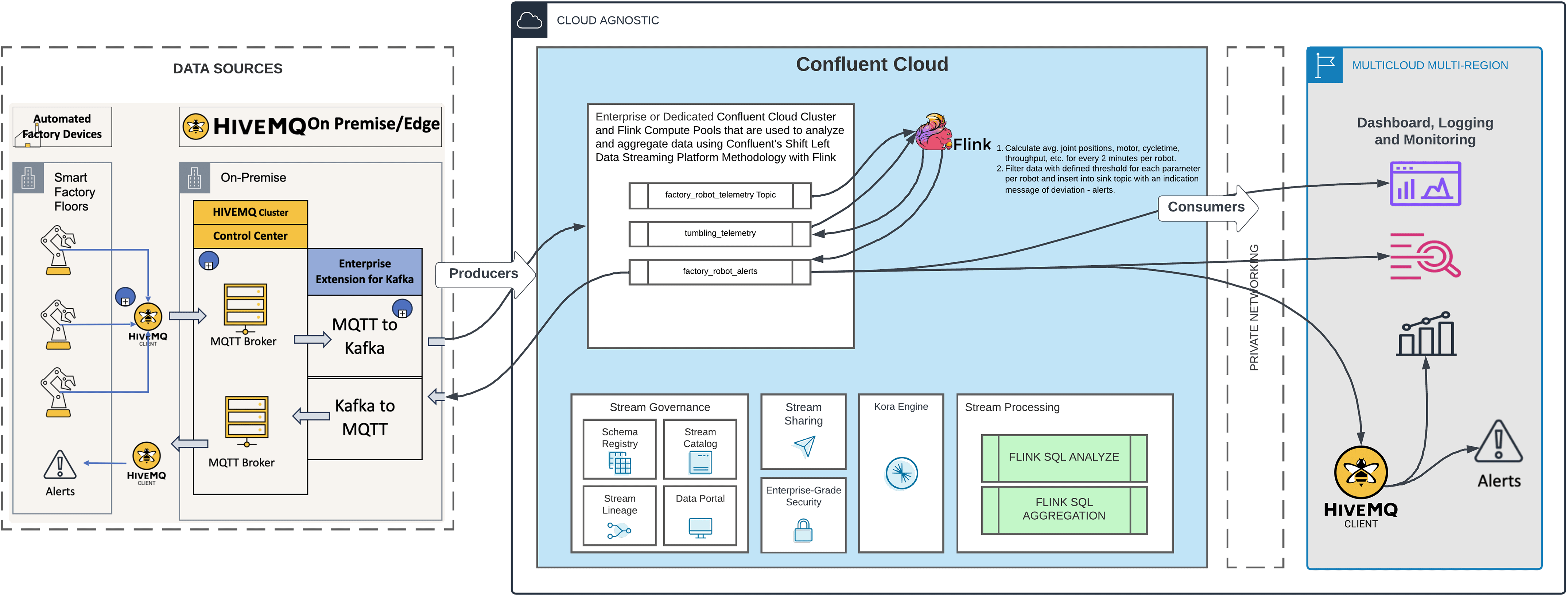

Reference Architecture

Here's a look at the reference architecture for optimizing factory floor robot performance with real-time streaming analytics on Confluent Cloud and Flink:

High-Level Architecture

1. HiveMQ MQTT Broker: A central hub for robot communication. Resource-constrained robots can efficiently publish telemetry data (kinematics, motor, and performance readings) and receive commands (e.g., anomaly or maintenance alerts) using the lightweight MQTT protocol. HiveMQ's scalability and security features ensure reliable data exchange for a large fleet of robots, keeping manufacturing operations running smoothly.

2. HiveMQ Clients: HiveMQ client libraries provide functionality to publish messages (simulating robot telemetry data) and subscribe to messages on a topic.

3. Enterprise Extension for Kafka: An extension for the HiveMQ MQTT broker that bridges the gap between MQTT and Apache Kafka. It enables bidirectional communication between devices and applications that use different messaging protocols:

— MQTT to Kafka: Ingests robot telemetry data streams into Kafka.

— Kafka to MQTT: Subscribes to relevant Kafka topics to receive alerts.

4. Confluent Cloud: Confluent Cloud provides a hosted platform for the Kafka cluster and Flink compute pools that will be used to analyze and aggregate the data.

High-Level Process

Stream processing uses several Flink SQL features, including AVG aggregation, windowing, GROUP BY, filtering, and CASE WHEN expressions.

Part 1 - Setting Up Data Flow

- Configure HiveMQ-Kafka Extension: Configure the HiveMQ-Kafka Extension to ingest data from MQTT topics to Kafka topics (e.g., "factory_robot_telemetry") and vice versa.

- Start HiveMQ Broker and Data Simulator: Start the HiveMQ broker and the data simulator to produce robot telemetry data that will be published to MQTT topics.
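To make the simulator step concrete, here is a minimal sketch of how one synthetic telemetry reading might be generated and serialized for publishing. It uses only the Python standard library and does not talk to a broker. The field names, the baseline values, and the `factory/robots/<robot_id>/telemetry` MQTT topic layout are all assumptions made for illustration; the actual simulator and topic naming may differ.

```python
import json
import random
from dataclasses import dataclass, asdict


@dataclass
class RobotTelemetry:
    """One telemetry reading. All field names are hypothetical."""
    robot_id: str
    timestamp_ms: int
    joint_position_deg: float
    motor_current_amps: float
    cycle_time_s: float
    power_kw: float
    throughput_units_per_min: float


def simulate_reading(robot_id, timestamp_ms, rng, degraded=False):
    """Return one synthetic reading.

    A degraded robot is modeled (as an assumption) by scaling the baseline:
    more current, longer cycles, more power, lower throughput.
    """
    drift = 1.3 if degraded else 1.0
    return RobotTelemetry(
        robot_id=robot_id,
        timestamp_ms=timestamp_ms,
        joint_position_deg=round(rng.gauss(45.0, 0.5 * drift), 3),
        motor_current_amps=round(rng.gauss(4.0 * drift, 0.2), 3),
        cycle_time_s=round(rng.gauss(12.0 * drift, 0.3), 3),
        power_kw=round(rng.gauss(1.5 * drift, 0.05), 3),
        throughput_units_per_min=round(rng.gauss(5.0 / drift, 0.1), 3),
    )


def to_mqtt_message(reading):
    """Build the (topic, JSON payload) pair an MQTT client would publish."""
    topic = f"factory/robots/{reading.robot_id}/telemetry"
    payload = json.dumps(asdict(reading))
    return topic, payload


rng = random.Random(42)  # fixed seed so runs are repeatable
reading = simulate_reading("robot-001", 1_700_000_000_000, rng)
topic, payload = to_mqtt_message(reading)
print(topic)
print(payload)
```

The resulting topic and payload can be handed to any MQTT client library for publishing; the degraded flag offers a simple way to produce readings that should later trigger alerts.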

Part 2 - Real-Time Anomaly Detection

- Process Telemetry Data: Use Flink SQL to process the incoming Kafka stream (factory_robot_telemetry) for real-time analytics.

- Anomaly Detection with Thresholds: Calculate average joint positions, motor values, and other performance parameters over 2-minute windows for each robot. Define threshold values for each telemetry data attribute and use Flink SQL queries to identify averages that exceed these thresholds.

- Alert Topic Creation: Create a new topic (factory_robot_alerts) to publish anomaly alerts for robots.
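The windowed threshold check described above can be illustrated in plain Python. This is not Flink SQL and not how the pipeline is actually deployed; it is a small sketch of the same logic (a 2-minute tumbling window, a per-robot average, and a comparison against a limit). The metric names, the threshold values, and the alert record fields are hypothetical.

```python
from collections import defaultdict

WINDOW_MS = 2 * 60 * 1000  # 2-minute tumbling window, as in the text

# Hypothetical upper limits; real values would come from each robot's baseline.
THRESHOLDS = {
    "motor_current_amps": 5.0,
    "cycle_time_s": 14.0,
    "power_kw": 1.8,
}


def window_averages(readings):
    """Average each metric per (robot_id, window_start_ms) group."""
    sums = defaultdict(lambda: defaultdict(float))
    counts = defaultdict(int)
    for r in readings:
        # Align the timestamp to the start of its 2-minute window.
        window_start = r["timestamp_ms"] - r["timestamp_ms"] % WINDOW_MS
        key = (r["robot_id"], window_start)
        counts[key] += 1
        for metric in THRESHOLDS:
            sums[key][metric] += r[metric]
    return {
        key: {m: sums[key][m] / counts[key] for m in THRESHOLDS}
        for key in counts
    }


def detect_anomalies(readings):
    """Return one alert record per window average that exceeds its limit."""
    alerts = []
    for (robot_id, window_start), avgs in sorted(window_averages(readings).items()):
        for metric, limit in THRESHOLDS.items():
            if avgs[metric] > limit:
                alerts.append({
                    "robot_id": robot_id,
                    "window_start_ms": window_start,
                    "metric": metric,
                    "average": avgs[metric],
                    "threshold": limit,
                })
    return alerts


readings = [
    {"robot_id": "robot-001", "timestamp_ms": 0, "motor_current_amps": 4.0, "cycle_time_s": 12.0, "power_kw": 1.5},
    {"robot_id": "robot-001", "timestamp_ms": 60_000, "motor_current_amps": 4.2, "cycle_time_s": 12.5, "power_kw": 1.5},
    {"robot_id": "robot-002", "timestamp_ms": 30_000, "motor_current_amps": 5.5, "cycle_time_s": 12.0, "power_kw": 1.5},
    {"robot_id": "robot-002", "timestamp_ms": 90_000, "motor_current_amps": 6.5, "cycle_time_s": 13.0, "power_kw": 1.6},
    {"robot_id": "robot-002", "timestamp_ms": 130_000, "motor_current_amps": 4.0, "cycle_time_s": 12.0, "power_kw": 1.5},
]

alerts = detect_anomalies(readings)
for alert in alerts:
    print(alert)
```

In this sample only robot-002's first window is flagged, because its average motor current exceeds the assumed limit. The sketch checks upper limits only; a metric such as throughput, where a low value is the problem, would need a lower-bound comparison instead.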

Part 3 - Alerting via MQTT

- Subscribe to Alert Topic: Start an MQTT subscriber to receive the robot anomaly alerts that the HiveMQ-Kafka Extension forwards from the Kafka topic (factory_robot_alerts).
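On the subscriber side, the main work is decoding each alert message and acting on it. The sketch below shows a message handler written in the `on_message(client, userdata, message)` callback shape used by the Eclipse Paho Python client, assuming that library is the MQTT client in use. Connecting and subscribing are omitted, and a stand-in object replaces a real MQTT message so the example runs without a broker. The alert fields and the topic name are hypothetical.

```python
import json
from types import SimpleNamespace


def format_alert(payload_bytes):
    """Decode a JSON alert payload into a one-line operator message."""
    alert = json.loads(payload_bytes.decode("utf-8"))
    return (
        f"[ALERT] {alert['robot_id']}: {alert['metric']} averaged "
        f"{alert['average']:.2f} (threshold {alert['threshold']:.2f})"
    )


received = []  # collected messages; a real handler might page an operator instead


def on_message(client, userdata, message):
    """Callback invoked once per incoming MQTT message."""
    received.append(format_alert(message.payload))


# Stand-in for a real MQTT message: only .topic and .payload are used here.
fake_message = SimpleNamespace(
    topic="factory/robots/robot-002/alerts",  # hypothetical topic name
    payload=json.dumps({
        "robot_id": "robot-002",
        "metric": "motor_current_amps",
        "average": 6.0,
        "threshold": 5.0,
    }).encode("utf-8"),
)

on_message(None, None, fake_message)
print(received[0])
```

Keeping the decoding logic in a separate function such as `format_alert` makes it easy to test without a running broker.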

Contact Us

Contact Ness to learn more about this use case and get started.