Agencies Win With Data Streaming: Evolving Data Integration to Enable AI

With data streaming, public sector organizations can better leverage real-time data and modernize applications. Ultimately, that means improving the reliability of services that agencies and citizens depend on, enhancing operational efficiency (therefore cutting costs), and delivering critical insights the moment they’re needed.

But what you might not yet realize is that data streaming is helping organizations, across the public sector and beyond, unlock new opportunities with artificial intelligence (AI). Data streaming—combined with timely stream processing and data governance—allows you to shift data transformation left in your data pipelines, to unlock its value in your data warehouse or data lake.

The concept of “shifting left” was popularized in relation to cybersecurity strategies. The approach involves moving processes like testing, security checks, and quality assurance earlier in the development timeline, which is represented visually as shifting these activities to the left side of a traditional left-to-right project timeline.

Now, we’re seeing shift-left integration being used to power other innovative solutions, including generative AI (GenAI) applications. And public sector leaders are asking how they can do the same.

At the 2025 Confluent Public Sector Summit, many speakers talked about the need to shift data processing and governance left, closer to the data source. This shift allows organizations to globally address common data quality issues, reduce data duplication, and cut processing costs. These benefits are critical for the public sector, given the demand to build safe, secure, and trustworthy AI applications. Bad data will result in bad AI. To be AI-ready, data must be accessible, high quality, continuously governed, and fresh.

Why Agencies Should Prep Data Before It Hits the Data Lake

At the Public Sector Summit, Confluent Field CTO Will LaForest opened the event with an overview of how data streaming enables government agencies to overcome their biggest data challenges.

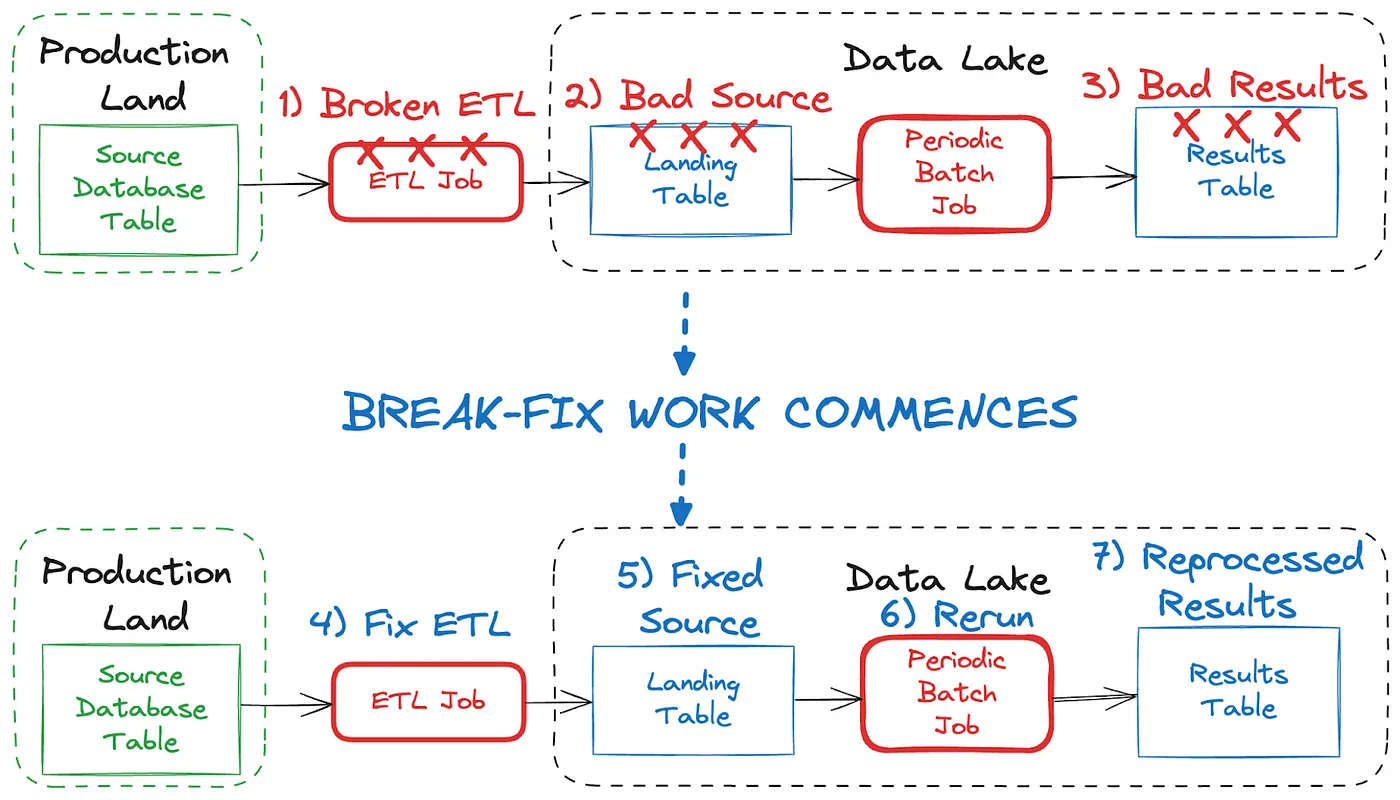

He introduced the concept of shifting left, showing that building data products in a data lakehouse is not the most effective approach because of batch processing, the unreliability of an interdependent architecture, low-quality data sets, and the potential for even more data silos. Instead, moving data quality efforts closer to the source addresses these issues early and ensures that the data in your data lake is as fresh and usable as possible for downstream consumers.
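To make the idea concrete, here is a minimal Python sketch of checking records near the source, before they reach a data lake. It is an illustration under stated assumptions, not Confluent's implementation: the `CONTRACT` fields, the five-minute `MAX_AGE` freshness threshold, and the `violations` and `route` helpers are all hypothetical names invented for this example.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical data contract (assumption): required fields and their types.
CONTRACT = {"case_id": str, "agency": str, "amount": float, "event_time": datetime}
MAX_AGE = timedelta(minutes=5)  # assumed freshness threshold


def violations(record, now):
    """Return the contract violations for one record (empty list if valid)."""
    problems = []
    for field, expected_type in CONTRACT.items():
        if field not in record:
            problems.append(f"missing field: {field}")
        elif not isinstance(record[field], expected_type):
            problems.append(f"wrong type for {field}")
    event_time = record.get("event_time")
    if isinstance(event_time, datetime) and now - event_time > MAX_AGE:
        problems.append("stale record")
    return problems


def route(records, now):
    """Split records at the source: valid ones continue, the rest are quarantined."""
    valid, quarantined = [], []
    for record in records:
        problems = violations(record, now)
        if problems:
            quarantined.append((record, problems))
        else:
            valid.append(record)
    return valid, quarantined


# Example with made-up records; timestamps are timezone-aware (UTC).
now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
records = [
    {"case_id": "A-1", "agency": "demo", "amount": 10.0, "event_time": now - timedelta(minutes=1)},
    {"case_id": "A-2", "agency": "demo", "amount": "10", "event_time": now},
    {"case_id": "A-3", "agency": "demo", "amount": 5.0, "event_time": now - timedelta(hours=1)},
]
valid, quarantined = route(records, now)
for record, problems in quarantined:
    print(record["case_id"], problems)
```

In this sketch only the first record continues downstream; the other two are set aside with the reason attached, so the problem is visible where it originated rather than being discovered later by every consumer of the data lake.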

Will highlighted the recent partnership with Databricks, which helps data move seamlessly between operational and analytical systems, while preserving its trust, context, and usability for AI.

Fostering Agency Agility With a “What If” Mindset

Dr. Donald High shared his perspective on shifting data left, drawing on his long career working with data-dependent organizations, including Walmart and the IRS. He detailed how, to respond to today’s “instant society,” organizations have to move to an event-driven architecture to meet customer expectations. This focus on real-time data allows for a shift from being reactive to proactive. For example, Walmart was able to begin detecting fraudulent barcodes at checkout, allowing associates to step in and let the customer know that there is an issue with their product and that it will be resolved. This prevented a TV from being sold for the price of a candle, and did so with minimal confrontation and conflict.

Getting to this real-time visibility can be difficult, especially when dealing with legacy systems that hold needed data. Dr. High encouraged everyone to adopt a “what if” mindset in order to set the end goal for data use, and work backwards to get there.

A CDO’s Perspective: Shifting Government Agencies From IT-Centric to Data-Centric

Adita Karkera, CDO at Deloitte, joined Will to discuss the evolution of data governance. She detailed the shift from a focus on the IT processes needed to protect and preserve data to a data-first approach that meets the various needs across an enterprise.

Adita invoked the Spider-Man quote, “With great power comes great responsibility.” Government leaders see the potential in AI and feel the pressure to get it implemented, but not at the expense of data governance. Only about one-third of AI projects move from pilot to production.

Compliance is what is holding back many projects, and hesitations about data not being right or models needing more work are valid concerns. She suggested a shift-left approach in which risk frameworks are developed alongside the AI itself. Additionally, she stressed the importance of improving data literacy across government organizations to better support AI deployments.

Where GenAI Fits in the Evolution of AI

Sean Falconer, Confluent’s AI Entrepreneur in Residence, spoke to how and why GenAI has been a key step in unlocking the future of AI. He walked the audience through the stages of AI’s evolution:

Purpose-built models: Traditional machine learning took domain- and application-specific data and aggregated it in lakehouses, where models were built. These models are hard to use for multiple purposes. They act like a reflex, learning once and staying hardwired.

Generative AI: GenAI changed the approach with a model that can be used for many things. The output is content with real-time contextualization. GenAI is more like the brain, reasoning and responding in real time.

Agentic AI: The next evolution will be AI-driven agents that can reason and act—and organizations are already making significant headway on these. With agentic AI, the system can determine if a person should get a refund, and then kick off a workflow to make that happen.

Sean went on to explain why he believes that AI is not replacing people but rather empowering and augmenting knowledge workers. It will be critical for democratizing and accelerating access to important government services.

The Role of Data Modernization in Realizing CJADC2

Rear Admiral Bill Chase (Retired) and Rear Admiral Vincent Tionquiao, Deputy Director, C4/Cyber (J6), Joint Staff, discussed Combined Joint All-Domain Command and Control (CJADC2), the Department of Defense (DoD) concept that will connect data-centric information from all branches of service, partners, and allies into an internet of military things, making information accessible anywhere and anytime for quick decisions on the battlefield.

The goal of CJADC2 is to get capabilities into the hands of warfighters as quickly as possible. This is being achieved through a wide variety of projects across the forces including:

Project Overmatch: The Navy’s initiative aimed at improving the ability to conduct distributed maritime operations by developing a secure, resilient, and highly connected battle network among U.S. forces, partners, and allies

Global Information Dominance Experiments (GIDE): Led by the DoD Chief Digital and Artificial Intelligence Office (CDAO), GIDE is a series of experiments designed to create a mission partner environment that can integrate and leverage data across all domains—land, sea, air, space, and cyberspace

They addressed the need for both a culture shift and an architectural shift, from being network-centric to data-centric, as key to achieving the goals of CJADC2. Doing so means overcoming existing technical debt and assuming more risk than traditionally would be permissible, in order to get data where it needs to be.

Data Modernization for the Government: The Shift-Left Impact

Confluent partners AWS, Swish Data, Databricks, and Data Surge closed the event with details on how their use of data streaming has helped fuel data-centric approaches to operational challenges, from enabling decision superiority in the DoD, to providing a “clean room” for cross-agency collaboration, to predictive maintenance, to entity resolution.

For more on how Confluent is powering the shift left of data, check out our latest resources for the public sector.

Thank you to sponsors Carahsoft, Swish Data, AWS, WWT, and MongoDB.
