
Technology

Data Products, Data Contracts, and Change Data Capture

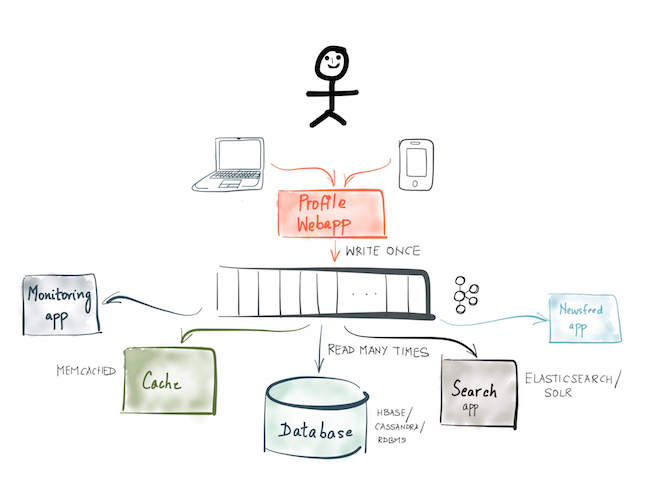

Change data capture (CDC) is a popular method for connecting database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Contributing to Apache Kafka®: How to Write a KIP

Learn how to contribute to open source Apache Kafka by writing Kafka Improvement Proposals (KIPs) that solve problems and add features! Read on for real examples.

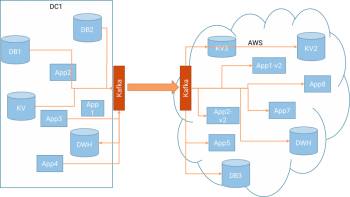

Want to migrate to AWS Cloud? Use Apache Kafka.

Amazon’s AWS cloud is doing really well: it made $2.57 billion in Q1 2016, up 64% from Q1 of the previous year. Clearly a lot of […]

Log Compaction | Highlights in the Apache Kafka and Stream Processing Community | September 2016

It is September and it’s evident that everyone is back from their summer vacation! We released Apache Kafka 0.10.0.1, which includes fixes for bugs in the 0.10.0 release. In […]

The Connect API in Kafka Cassandra Sink: The Perfect Match

The Connect API in Kafka is a scalable and robust framework for streaming data into and out of Apache Kafka, the engine powering modern streaming platforms. At DataMountaineer, we have worked on many big data and fast data projects across many industries from financial...

Event sourcing, CQRS, stream processing and Apache Kafka: What’s the connection?

Event sourcing as an application architecture pattern is rising in popularity. Event sourcing involves modeling the state changes made by applications as an immutable sequence or “log” of events. Instead […]
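To make the idea of state changes as an immutable log of events concrete, here is a minimal in-memory sketch in Python. It is not Kafka code: the account and deposit/withdrawal event types are hypothetical, and a plain tuple stands in for the log (in a Kafka-based design, a topic could play that role). Current state is never stored directly; it is derived by replaying the events in order.

```python
from dataclasses import dataclass
from typing import Dict, Tuple


@dataclass(frozen=True)
class Event:
    """One immutable fact about what happened (hypothetical event shape)."""
    account: str
    kind: str  # hypothetical event types: "deposited" or "withdrew"
    amount: int


def apply(state: Dict[str, int], event: Event) -> Dict[str, int]:
    """Return a new state with one event applied; the input state is not mutated."""
    if event.kind == "deposited":
        delta = event.amount
    elif event.kind == "withdrew":
        delta = -event.amount
    else:
        raise ValueError(f"unknown event kind: {event.kind}")
    new_state = dict(state)
    new_state[event.account] = new_state.get(event.account, 0) + delta
    return new_state


def replay(log: Tuple[Event, ...]) -> Dict[str, int]:
    """Rebuild state from scratch by folding every event in log order."""
    state: Dict[str, int] = {}
    for event in log:
        state = apply(state, event)
    return state


# The log only grows by appending; existing events are never edited.
log: Tuple[Event, ...] = (
    Event("alice", "deposited", 100),
    Event("bob", "deposited", 50),
    Event("alice", "withdrew", 30),
)
log = log + (Event("bob", "withdrew", 20),)

balances = replay(log)
print(balances)  # {'alice': 70, 'bob': 30}
```

Because the log is the source of truth, replaying a prefix such as `replay(log[:2])` recovers the state as it was at that point in time, which is one of the properties that makes the pattern attractive for stream processing.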

Announcing Confluent Platform 3.0.1 & Apache Kafka 0.10.0.1

A few months ago, we announced the release of open-source Confluent Platform 3.0 and Apache Kafka 0.10, marking the availability of Kafka Streams — the new stream processing engine of […]

Flink and Kafka Streams: a Comparison and Guideline for Users

This blog post is written jointly by Stephan Ewen, CTO of data Artisans, and Neha Narkhede, CTO of Confluent. Stephan Ewen is a PMC member of Apache Flink and co-founder and CTO […]

Sharing is Caring: Multi-tenancy in Distributed Data Systems

Most people think that what’s exciting about distributed systems is the ability to support really big applications. When you read a blog about a new distributed database, it usually talks […]

Data Reprocessing with the Streams API in Kafka: Resetting a Streams Application

This blog post is the third in a series about the Streams API of Apache Kafka, the new stream processing library of the Apache Kafka project, which was introduced in Kafka v0.10.

Log Compaction | Highlights in the Apache Kafka and Stream Processing Community | August 2016

It is August already, and this marks exactly one year of monthly “Log Compaction” blog posts – summarizing news from the very active Apache Kafka and stream processing community. Hope […]

Secure Stream Processing with the Streams API in Kafka

This blog post is the second in a series about the Streams API of Apache Kafka, the new stream processing library of the Apache Kafka project, which was introduced in Kafka v0.10. Current […]

Elastic Scaling in the Streams API in Kafka

This blog post is the first in a series about the Streams API of Apache Kafka, the new stream processing library of the Apache Kafka project, which was introduced in Kafka v0.10. Current […]

Log Compaction | Highlights in the Apache Kafka and Stream Processing Community | July 2016

Here comes the July 2016 edition of Log Compaction, a monthly digest of highlights in the Apache Kafka and stream processing community. Want to share some exciting news on this […]

Build and monitor Kafka pipelines with Confluent Control Center

On May 24, we announced Confluent Control Center, an application for managing and monitoring a Kafka-based streaming platform. Control Center has a beautiful user interface, and under the surface we […]

Distributed, Real-time Joins and Aggregations on User Activity Events using Kafka Streams

In previous blog posts we introduced Kafka Streams and demonstrated an end-to-end Hello World streaming application that analyzes Wikipedia real-time updates through a combination of Kafka Streams and Kafka Connect. […]