Technology

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method for connecting database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...
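The excerpt contrasts raw CDC with a data product that isolates upstream systems. Below is a minimal Python sketch of that isolation layer. It assumes a Debezium-style change envelope (`before`, `after`, `op`) and a hypothetical `orders` table; the `OrderEvent` contract, the column names, and `to_data_product` are illustrative assumptions, not taken from the article.

```python
from dataclasses import dataclass
from typing import Optional


# Hypothetical public contract for an "orders" data product.
# Field names are illustrative assumptions, not taken from the article.
@dataclass(frozen=True)
class OrderEvent:
    order_id: int
    status: str
    total_cents: int
    deleted: bool


def to_data_product(change: dict) -> Optional[OrderEvent]:
    """Map a Debezium-style change event (before/after/op) onto the public contract.

    Only contract fields are copied, so internal columns never reach consumers
    and the upstream table can change shape without breaking them.
    """
    op = change.get("op")
    if op in ("c", "u", "r"):  # create, update, snapshot read: use the "after" image
        row, deleted = change["after"], False
    elif op == "d":  # delete: only the "before" image is populated
        row, deleted = change["before"], True
    else:
        return None  # unknown operation: skip rather than emit an unvetted shape
    return OrderEvent(row["id"], row["status"], row["total_cents"], deleted)


raw = {
    "op": "u",
    "before": {"id": 7, "status": "PAID", "total_cents": 1999, "_shard": 3},
    "after": {"id": 7, "status": "SHIPPED", "total_cents": 1999, "_shard": 3},
}
print(to_data_product(raw))
# OrderEvent(order_id=7, status='SHIPPED', total_cents=1999, deleted=False)
```

The internal `_shard` column never appears in the output, which is the point of putting a contract between the table and its consumers.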

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Contributing to Apache Kafka®: How to Write a KIP

Learn how to contribute to open source Apache Kafka by writing Kafka Improvement Proposals (KIPs) that solve problems and add features! Read on for real examples.

Introducing Self-Service Apache Kafka for Developers

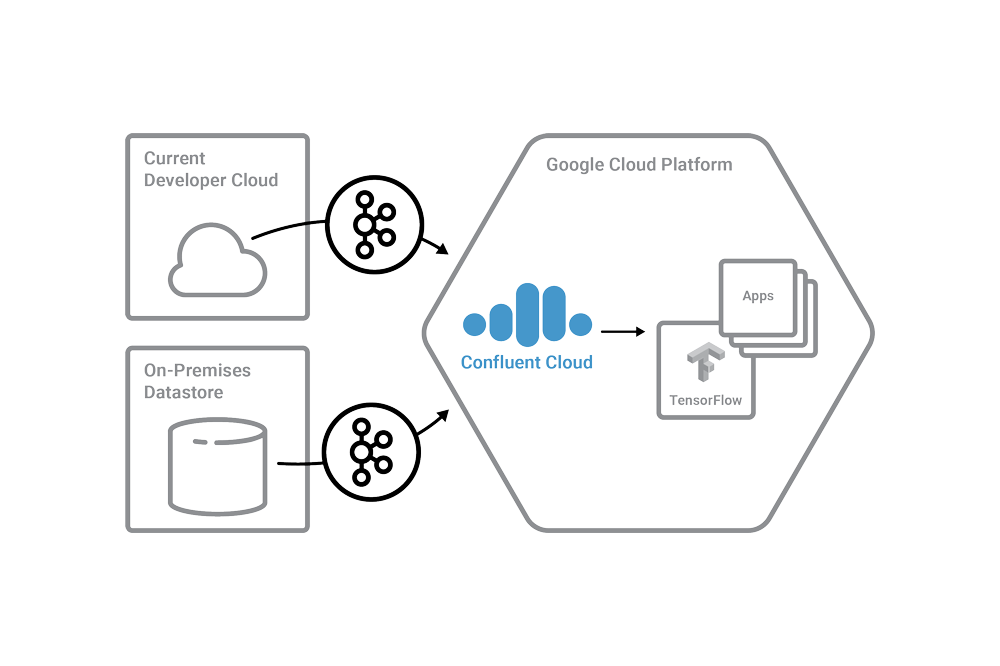

New Confluent Cloud and Ecosystem Expansion to Google Cloud Platform. The vision for Confluent Cloud is simple. We want to provide an event streaming service to support all customers on […]

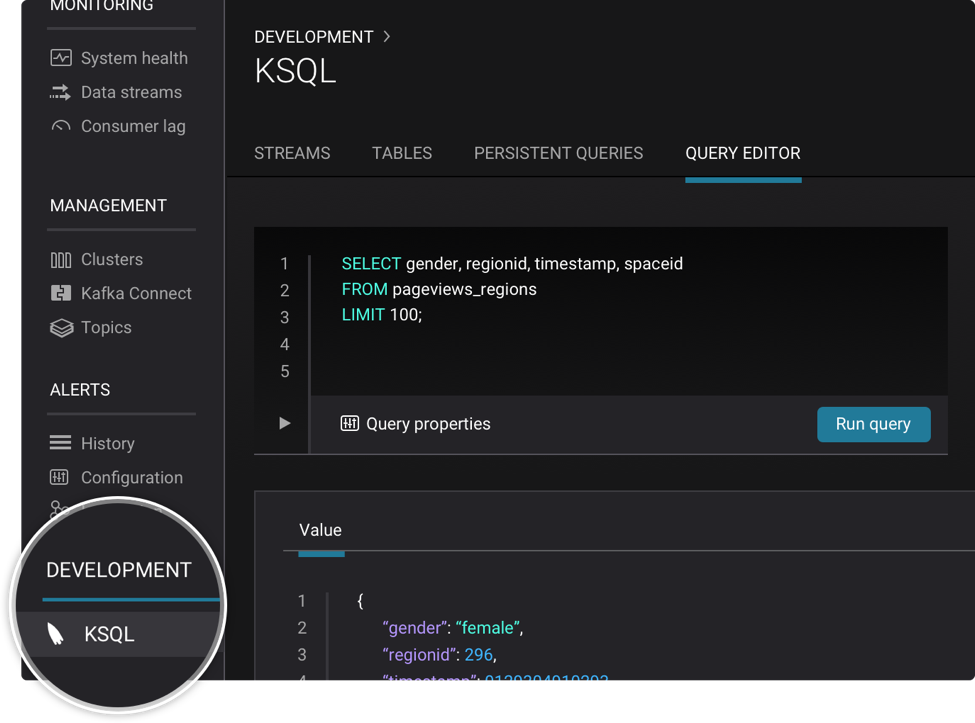

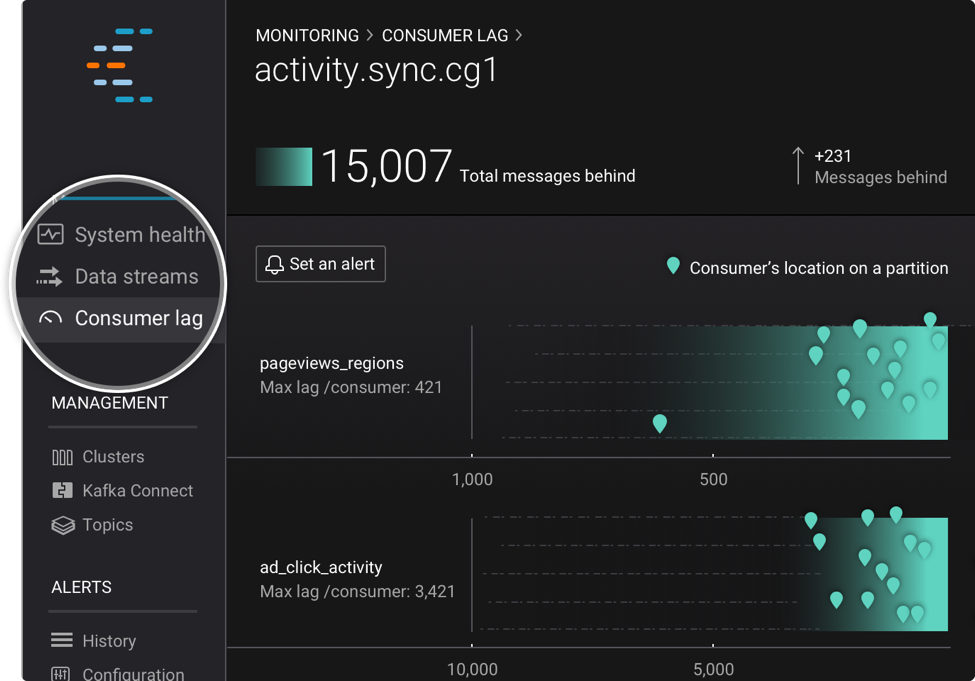

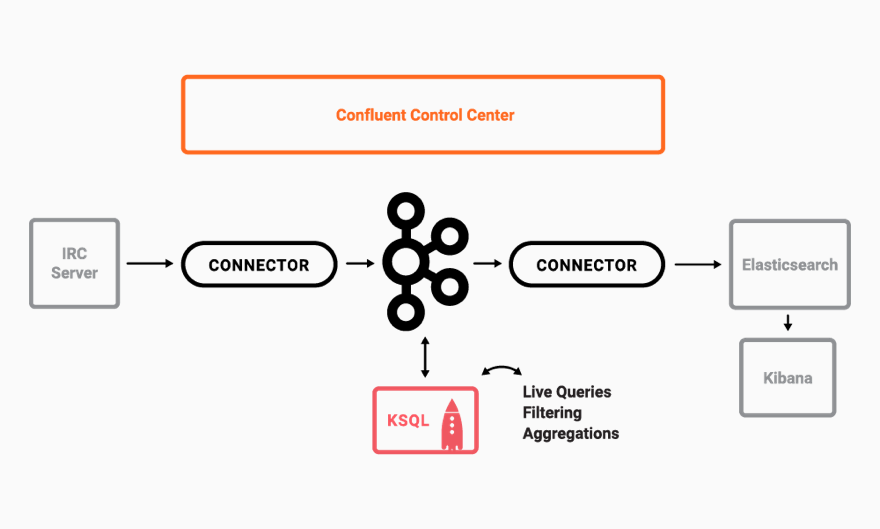

Visualizations on Apache Kafka Made Easy with KSQL

We’re pleased to welcome our partner Arcadia Data to the Confluent blog today. Shant Hovsepian is the CTO and co-founder of Arcadia Data, and is going to tell us about […]

Level Up Your KSQL

Now that KSQL is available for production use as a part of the Confluent Platform, it has never been easier to run the open-source streaming SQL engine for Apache Kafka®. […]

Introducing the Confluent Operator: Apache Kafka on Kubernetes Made Simple

At Confluent, our mission is to put a Streaming Platform at the heart of every digital company in the world. This means making it easy to deploy and use Apache […]

Introducing Confluent Platform Preview Releases

Historically, Confluent delivers a new release of Confluent Platform three times per year. While this cadence meets the needs of a meaningful portion of our […]

Confluent Platform 4.1 with Production-Ready KSQL Now Available

We are pleased to announce that Confluent Platform 4.1, our enterprise streaming platform built on Apache Kafka®, is available for download today. With Confluent Platform, you get the latest in […]

We ❤ syslogs: Real-time syslog Processing with Apache Kafka and KSQL – Part 2: Event-Driven Alerting with Slack

In the previous article, we saw how syslog data can be easily streamed into Apache Kafka® and filtered in real time with KSQL. In this article, we’re going to see how […]

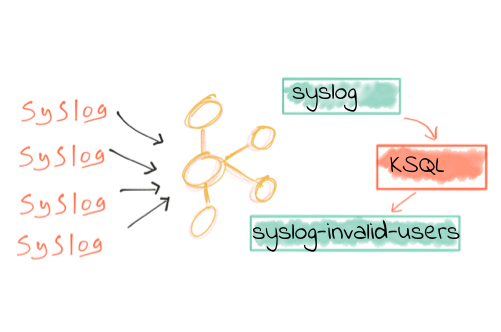

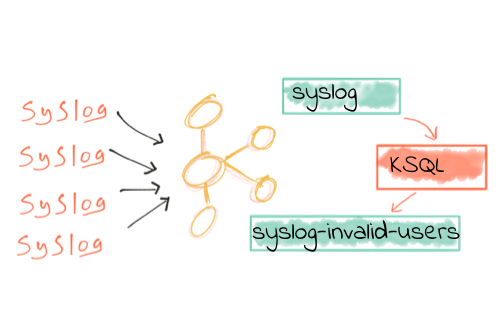

We ❤️ syslogs: Real-time syslog Processing with Apache Kafka and KSQL – Part 1: Filtering

Syslog is one of those ubiquitous standards on which much of modern computing runs. Built into operating systems such as Linux, it’s also commonplace in networking and IoT devices like […]
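The series does its filtering with KSQL over a Kafka topic. As a language-neutral illustration of the predicate involved, here is a plain-Python sketch (not KSQL) that decodes the syslog `<PRI>` prefix, where PRI = facility × 8 + severity, and keeps only severe messages. The helper names and the default threshold of `err` (severity 3) are assumptions chosen for illustration.

```python
import re
from typing import Iterable, Iterator, Optional, Tuple

# Syslog severity codes (RFC 5424): a lower number means more severe.
SEVERITY_NAMES = ["emerg", "alert", "crit", "err", "warning", "notice", "info", "debug"]
PRI_PATTERN = re.compile(r"^<(\d{1,3})>")


def parse_pri(line: str) -> Optional[Tuple[int, int]]:
    """Decode the leading <PRI> field into (facility, severity), or return None."""
    match = PRI_PATTERN.match(line)
    if match is None:
        return None
    pri = int(match.group(1))
    if pri > 191:  # highest valid PRI is facility 23 * 8 + severity 7
        return None
    facility, severity = divmod(pri, 8)  # PRI = facility * 8 + severity
    return facility, severity


def filter_by_severity(lines: Iterable[str], max_severity: int = 3) -> Iterator[str]:
    """Yield only messages at 'err' or worse by default (severity <= max_severity)."""
    for line in lines:
        decoded = parse_pri(line)
        if decoded is not None and decoded[1] <= max_severity:
            yield line


messages = [
    "<34>Oct 11 22:14:15 mymachine su: 'su root' failed for lonvick on /dev/pts/8",
    "<14>Oct 11 22:14:16 mymachine app: service started",
]
for line in filter_by_severity(messages):
    print(SEVERITY_NAMES[parse_pri(line)[1]], line)
```

`<34>` decodes to facility 4 (auth) with severity 2 (crit), so it passes the filter; `<14>` is user-level `info` and is dropped.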

KSQL in Action: Enriching CSV Events with Data from RDBMS into AWS

Life would be simple if data lived in one place: one single solitary database to rule them all. Anything that needed to be joined to anything could be with a […]
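Enriching a stream of events with reference data from a database is, in KSQL terms, a stream-table join: the table holds the latest row per key, and each event is joined against the table's state at the moment it arrives. The plain-Python sketch below illustrates those semantics only. It is not KSQL, and the record shapes (`customer_id`, `name`, `order_id`) are hypothetical.

```python
from typing import Dict, Iterable, Iterator, Tuple


def enrich(events: Iterable[Tuple[str, dict]]) -> Iterator[dict]:
    """Stream-table join semantics, modelled here as a left join.

    "customer" events upsert a keyed table (latest value per key wins, like a
    changelog from the database); "order" events are joined against whatever
    the table holds at the moment the order arrives.
    """
    customers: Dict[str, dict] = {}
    for kind, payload in events:
        if kind == "customer":
            customers[payload["customer_id"]] = payload
        elif kind == "order":
            match = customers.get(payload["customer_id"])
            # Orders with no matching customer pass through with a None name.
            yield {**payload, "customer_name": match["name"] if match else None}


events = [
    ("customer", {"customer_id": "c1", "name": "Ada"}),
    ("order", {"order_id": "o1", "customer_id": "c1"}),
    ("order", {"order_id": "o2", "customer_id": "c2"}),
    ("customer", {"customer_id": "c1", "name": "Ada L."}),
    ("order", {"order_id": "o3", "customer_id": "c1"}),
]
for enriched in enrich(events):
    print(enriched)
```

Note that `o1` and `o3` see different names for the same customer: the join uses the table's value at event time, not its final value.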

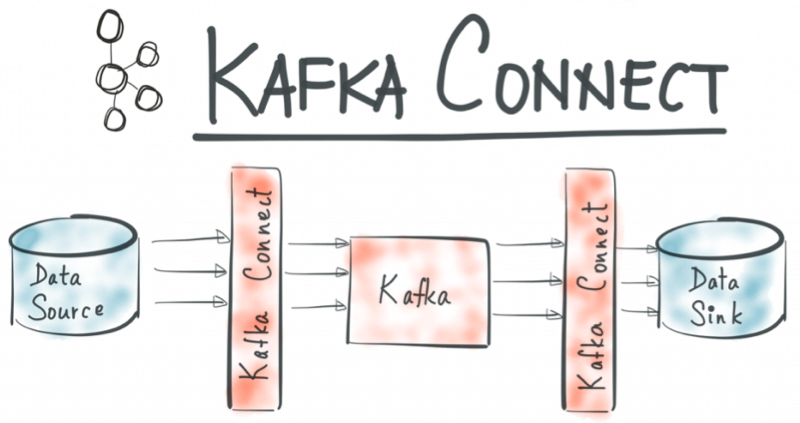

No More Silos: How to Integrate Your Databases with Apache Kafka and CDC

One of the most frequent questions and topics that I see come up on community resources such as StackOverflow, the Confluent Platform mailing list, and the Confluent Community Slack group, […]

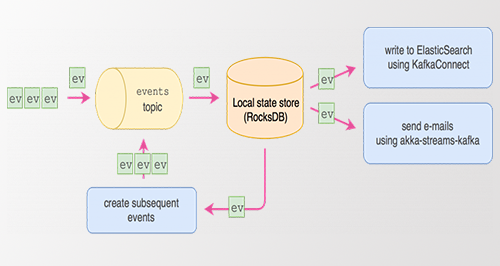

Event Sourcing Using Apache Kafka

Adam Warski is one of the co-founders of SoftwareMill, where he codes mainly using Scala and other interesting technologies. He is involved in open-source projects, such as sttp, MacWire, Quicklens, […]

KSQL February Release: Streaming SQL for Apache Kafka

We are pleased to announce the release of KSQL v0.5, aka the February 2018 release of KSQL. This release focuses on bug fixes as well as performance and stability […]

Secure Stream Processing with Apache Kafka, Confluent Platform and KSQL

In this blog post, we first look at stream processing examples using KSQL that show how companies are using Apache Kafka® to grow their business and to analyze data in […]

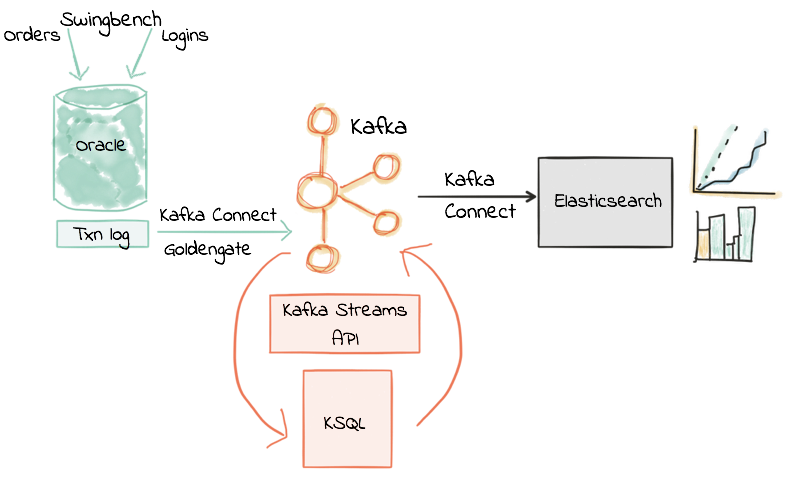

KSQL in Action: Real-Time Streaming ETL from Oracle Transactional Data

In this post I’m going to show what streaming ETL looks like in practice. We’re replacing batch extracts with event streams, and batch transformation with in-flight transformation. But first, a […]
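The difference between batch and in-flight transformation is that the latter transforms each record as it arrives instead of waiting for a complete extract. A minimal Python sketch of that idea, using lazy generators over an unbounded source. The source generator and the column names (`TXN_ID`, `AMOUNT`, `STATUS`) are hypothetical stand-ins for Oracle rows arriving as an event stream.

```python
import itertools
from decimal import Decimal
from typing import Iterable, Iterator


def extract_stream() -> Iterator[dict]:
    """Hypothetical unbounded source standing in for a stream of Oracle rows."""
    for n in itertools.count(1):
        yield {"TXN_ID": n, "AMOUNT": "19.99", "STATUS": "OK"}


def transform(rows: Iterable[dict]) -> Iterator[dict]:
    """Transform each record as it arrives, instead of after a full batch extract."""
    for row in rows:
        yield {
            "txn_id": row["TXN_ID"],
            "amount_cents": int(Decimal(row["AMOUNT"]) * 100),  # Decimal avoids float rounding
            "ok": row["STATUS"] == "OK",
        }


# A batch job could never finish extracting an unbounded source; the lazy
# pipeline produces transformed records immediately.
first_three = list(itertools.islice(transform(extract_stream()), 3))
print(first_three)
```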