Confluent Blog

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method to connect database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Contributing to Apache Kafka®: How to Write a KIP

Learn how to contribute to open source Apache Kafka by writing Kafka Improvement Proposals (KIPs) that solve problems and add features! Read on for real examples.

Reflections on Event Streaming as Confluent Turns Five – Part 2

When people ask me the very top-level question “why do people use Kafka,” I usually lead with the story in my last post, where I talked about how Apache Kafka® […]

Multi-Region Clusters with Confluent Platform 5.4

Running a single Apache Kafka® cluster across multiple datacenters (DCs) is a common, yet somewhat taboo architecture. This architecture, referred to as a stretch cluster, provides several operational benefits and […]

Reflections on Event Streaming as Confluent Turns Five – Part 1

For me, and I think for you, technology is cool by itself. When you first learn how consistent hashing works, it’s fun. When you finally understand log-structured merge trees, it’s […]

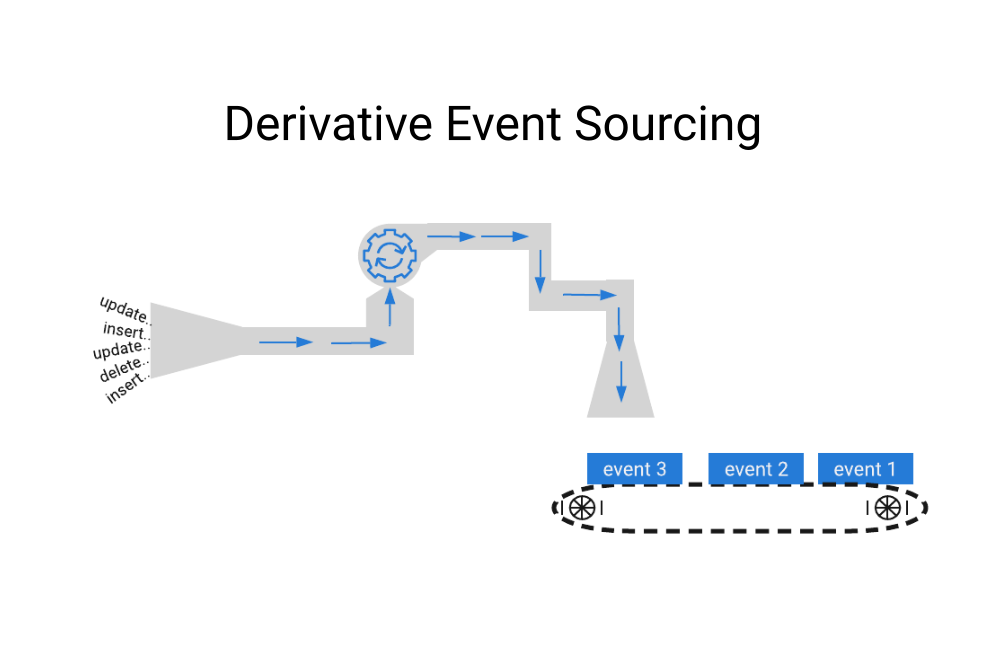

Introducing Derivative Event Sourcing

First, what is event sourcing? Here’s an example. Consider your bank account: viewing it online, the first thing you notice is often the current balance. How many of us drill […]

How to Use Schema Registry and Avro in Spring Boot Applications

TL;DR Following on from How to Work with Apache Kafka in Your Spring Boot Application, which shows how to get started with Spring Boot and Apache Kafka®, here I will […]

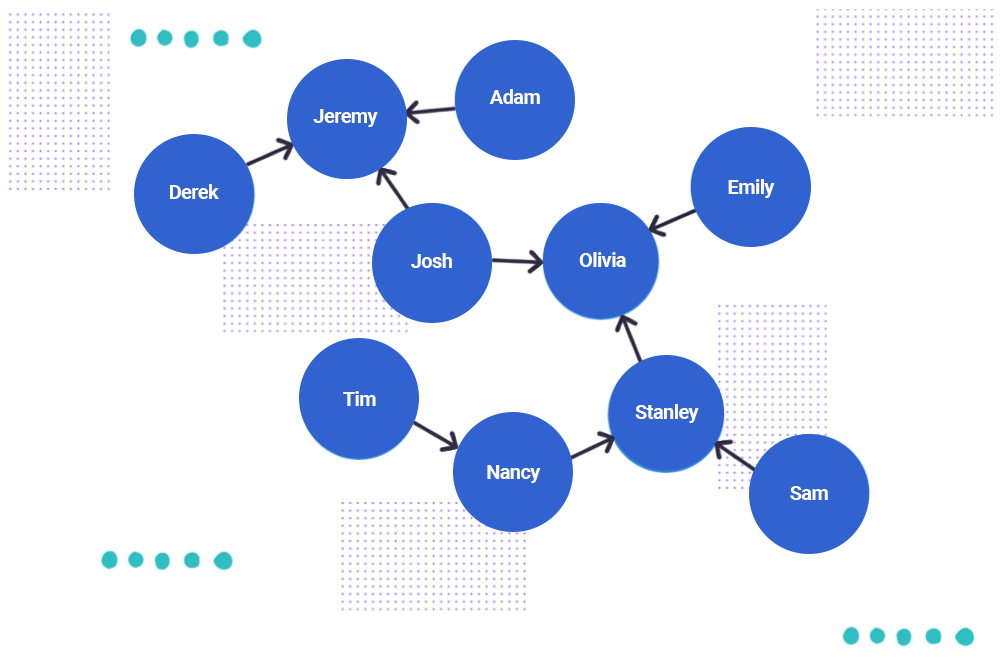

Using Graph Processing for Kafka Streams Visualizations

We know that Apache Kafka® is great when you’re dealing with streams, allowing you to conveniently look at streams as tables. Stream processing engines like ksqlDB furthermore give you the […]

Confluent Cloud Schema Registry is Now Generally Available

We are excited to announce the release of Confluent Cloud Schema Registry in general availability (GA), available in Confluent Cloud, our fully managed event streaming service based on Apache Kafka®. […]

Building Transactional Systems Using Apache Kafka

Traditional relational database systems are ubiquitous in software systems. They are surrounded by a strong ecosystem of tools, such as object-relational mappers and schema migration helpers. Relational databases also provide […]

A Guide to the Confluent Verified Integrations Program

When it comes to writing a connector, there are two things you need to know how to do: how to write the code itself, and how to help the world know about […]

Kafka Connect Improvements in Apache Kafka 2.3

With the release of Apache Kafka® 2.3 and Confluent Platform 5.3 came several substantial improvements to the already awesome Kafka Connect. Not sure what Kafka Connect is or need convincing […]

Shoulder Surfers Beware: Confluent Now Provides Cross-Platform Secret Protection

Compliance requirements often dictate that services should not store secrets as cleartext in files. These secrets may include passwords, such as the values for ssl.key.password, ssl.keystore.password, and ssl.truststore.password configuration parameters […]

Top 10 Reasons to Attend Kafka Summit

Yes, the other definition of event sourcing. 1. Keynotes from leading technologists: At Kafka Summit SF, you’ll get to hear incredible keynotes from leading technologists, including Jay Kreps and Neha […]

Announcing Tutorials for Apache Kafka

We’re excited to announce Tutorials for Apache Kafka®, a new area of our website for learning event streaming. Kafka Tutorials is a collection of common event streaming use cases, with […]

ksqlDB UDFs and UDAFs Made Easy

One of ksqlDB’s most powerful features is allowing users to build their own ksqlDB functions for processing real-time streams of data. These functions can be invoked on individual messages (user-defined […]