Reduce Fraud Losses with Real-Time Data Streaming

Outdated fraud detection systems miss fraudulent activity, which hurts revenue and erodes customer trust. These systems also often fail to meet stringent regulatory standards for fraud prevention and data management. The resulting high-profile fraud incidents attract negative attention and scrutiny of perceived security weaknesses. By combining GFT's expertise in fraud modeling, Confluent's real-time data streaming, and AWS's scalable machine learning technologies, a financial institution can significantly reduce fraud losses and strengthen its security posture.

Delivering a Robust Resolution to Fraud — The “Power of 3”

According to the Financial Conduct Authority, banks must refund their depositors for unauthorized transactions. The fraud landscape is constantly shifting as bad actors look for new ways to slip through security loopholes in financial products and services and target consumers with scams. Although achieving zero fraud is an unrealistic goal, banks are still required to show that they are reducing fraud to the lowest feasible level by implementing robust controls.

Benefits of GFT’s Fraud Data Engine Solution:

Combining GFT’s fraud modeling expertise, Confluent’s real-time data streaming, and AWS’s scalable machine learning capabilities helps a financial institution reduce fraud losses.

Automation reduces costs by minimizing false positives while also improving security and resilience.

Keep pace with business growth whilst proactively addressing new fraud tactics. Fewer mistaken fraud flags mean smoother customer experiences and higher customer satisfaction.

Build with Confluent

This use case leverages the following building blocks in Confluent Cloud:

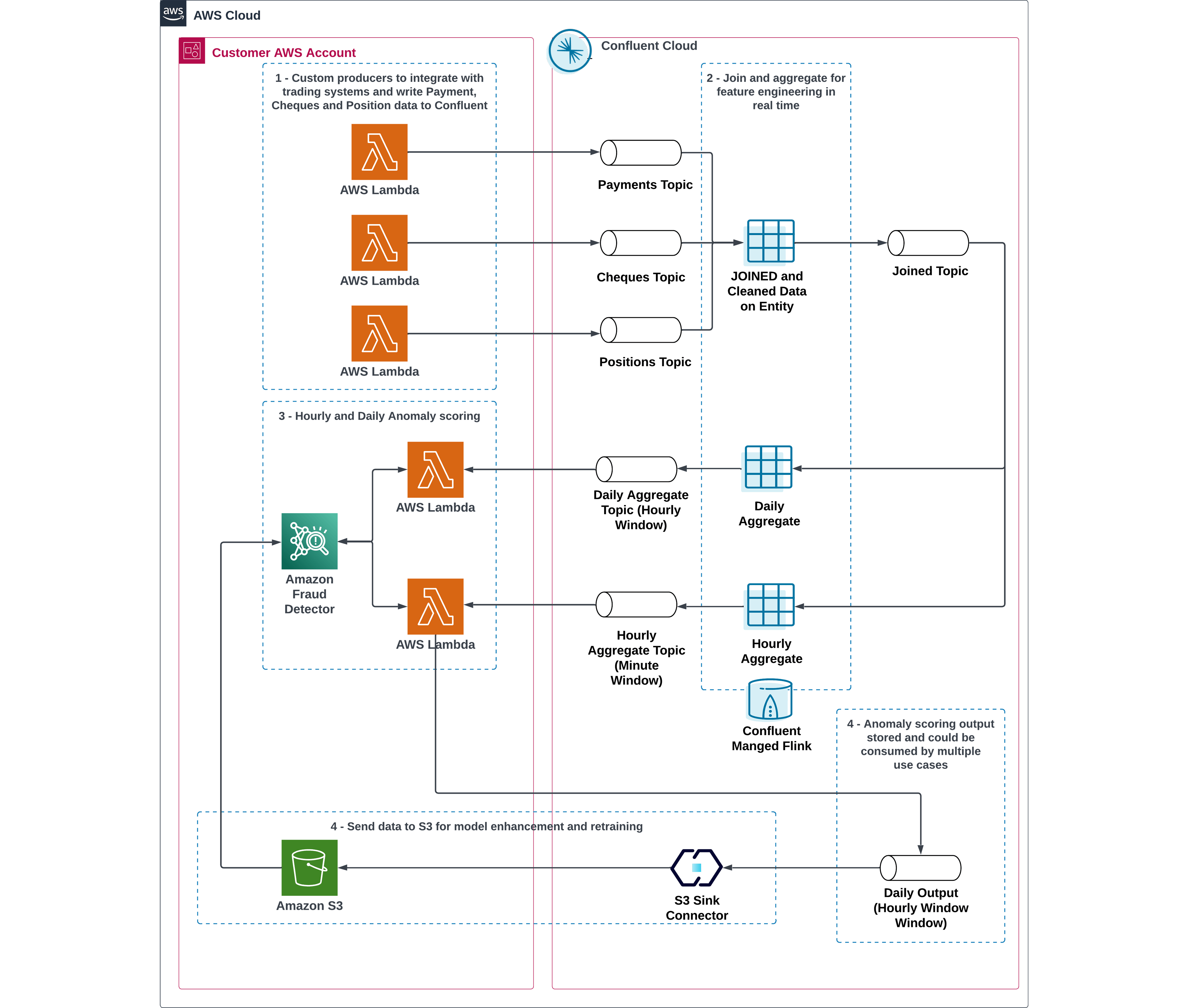

Reference Architecture

Integrate custom producers with trading systems to write payment, cheque, and position data to Confluent.
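A custom producer mainly has to turn each source-system event into a Kafka record: a topic, a key, and a serialized value. The sketch below shows that mapping under stated assumptions. The topic names, the `Event` fields, and JSON as the wire format are all hypothetical, since the source does not specify them. Keying by account is a design choice made here so that all events for one account land in the same partition and stay in order for downstream joins.

```python
import json
from dataclasses import dataclass, asdict
from typing import Tuple

# Hypothetical topic names; the source does not name any topics.
TOPICS = {
    "payment": "payments",
    "cheque": "cheques",
    "position": "positions",
}

@dataclass
class Event:
    kind: str          # "payment", "cheque" or "position" (assumed categories)
    account_id: str    # used as the record key
    amount: float
    currency: str
    ts_ms: int         # event time in epoch milliseconds

def to_record(event: Event) -> Tuple[str, bytes, bytes]:
    """Map a source-system event to (topic, key, value) for a Kafka producer."""
    if event.kind not in TOPICS:
        raise ValueError(f"unknown event kind: {event.kind}")
    topic = TOPICS[event.kind]
    # Keying by account keeps one account's events in a single partition,
    # so downstream joins and aggregations see them in order.
    key = event.account_id.encode("utf-8")
    # JSON with sorted keys gives a stable, readable encoding for this sketch.
    value = json.dumps(asdict(event), sort_keys=True).encode("utf-8")
    return topic, key, value
```

With the confluent-kafka Python client, each tuple could be passed to `Producer.produce(topic, key=key, value=value)`, followed by `Producer.flush()` before shutdown. A production setup would more likely use a schema-managed format such as Avro or Protobuf instead of plain JSON.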

Join and aggregate data for feature engineering in real time.
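Real-time feature engineering here usually means a windowed aggregation of transactions joined with reference data such as the latest position. In Confluent this logic would typically live in a stream processor such as Flink SQL or ksqlDB; the Python below is only a batch illustration of the semantics, assuming one-hour tumbling windows and hypothetical feature names (`txn_count`, `txn_total`, `max_txn`, `spend_ratio`). A real stream processor computes these incrementally and must also handle late-arriving events.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple

HOUR_MS = 3_600_000

def window_start(ts_ms: int, size_ms: int = HOUR_MS) -> int:
    """Start of the tumbling window that contains ts_ms."""
    return ts_ms - (ts_ms % size_ms)

def hourly_features(
    payments: Iterable[Tuple[str, float, int]],  # (account_id, amount, ts_ms)
    positions: Dict[str, float],                 # latest balance per account
) -> List[dict]:
    """Aggregate payments per (account, hour) and enrich with the latest position."""
    agg: Dict[Tuple[str, int], List[float]] = defaultdict(list)
    for account_id, amount, ts_ms in payments:
        agg[(account_id, window_start(ts_ms))].append(amount)

    features = []
    for (account_id, start), amounts in sorted(agg.items()):
        # Stream-table style join: look up the account's latest position.
        balance = positions.get(account_id)
        total = sum(amounts)
        features.append({
            "account_id": account_id,
            "window_start": start,
            "txn_count": len(amounts),
            "txn_total": total,
            "max_txn": max(amounts),
            # Share of the balance spent in this hour; None when there is
            # no known position or the balance is zero.
            "spend_ratio": total / balance if balance else None,
        })
    return features
```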

Enable hourly and daily anomaly scoring, whose outputs are stored and can be consumed by multiple use cases.
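The source does not describe GFT's scoring model, so the sketch below swaps in a deliberately simple technique: a z-score of the current window's total against that account's past window totals. The same function can serve hourly and daily windows. The record it returns (field names, the `threshold` of 3.0) is a hypothetical shape for scores that multiple downstream consumers could read from a topic or store.

```python
import statistics
from typing import List, Optional

def zscore(history: List[float], current: float) -> Optional[float]:
    """Standard score of `current` relative to past window totals.

    Returns None when there is too little history or no variation,
    because the z-score is undefined in those cases.
    """
    if len(history) < 2:
        return None
    mean = statistics.fmean(history)
    stdev = statistics.stdev(history)  # sample standard deviation
    if stdev == 0:
        return None
    return (current - mean) / stdev

def score_window(account_id: str, window: str, history: List[float],
                 current: float, threshold: float = 3.0) -> dict:
    """Build a score record for one account and one window."""
    z = zscore(history, current)
    return {
        "account_id": account_id,
        "window": window,  # e.g. "hourly" or "daily"
        "score": z,
        # Only unusually high activity is flagged in this sketch.
        "flagged": z is not None and z >= threshold,
    }
```

Storing the score record rather than only a yes/no flag lets each consuming use case apply its own threshold.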

Send data to S3 for model enhancement and retraining.
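Retraining jobs usually read historical data by time range, so it helps if objects in S3 are partitioned by time. The sketch below builds an hourly, Hive-style object key. The layout (`topics/<topic>/year=.../hour=.../<topic>+<partition>+<offset>.json`) is an assumption loosely modeled on common Kafka-to-S3 sink conventions; the actual path depends on how the sink is configured.

```python
from datetime import datetime, timezone

def s3_object_key(topic: str, partition: int, start_offset: int, ts_ms: int,
                  prefix: str = "topics") -> str:
    """Build an hourly-partitioned object key for a batch of records.

    The time partition comes from ts_ms (interpreted as UTC); the file name
    encodes topic, Kafka partition, and zero-padded starting offset.
    """
    t = datetime.fromtimestamp(ts_ms / 1000, tz=timezone.utc)
    return (
        f"{prefix}/{topic}/"
        f"year={t:%Y}/month={t:%m}/day={t:%d}/hour={t:%H}/"
        f"{topic}+{partition}+{start_offset:010d}.json"
    )
```

For example, `s3_object_key("payments", 0, 42, 0)` returns `topics/payments/year=1970/month=01/day=01/hour=00/payments+0+0000000042.json`. Query engines that understand Hive-style partitions can then prune to just the hours a retraining job needs.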

Contact Us

Contact GFT to learn more about this use case and get started.