Stream Processing

Data Products, Data Contracts, and Change Data Capture

Change data capture (CDC) is a popular method to connect database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters – Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Contributing to Apache Kafka®: How to Write a KIP

Learn how to contribute to open source Apache Kafka by writing Kafka Improvement Proposals (KIPs) that solve problems and add features! Read on for real examples.

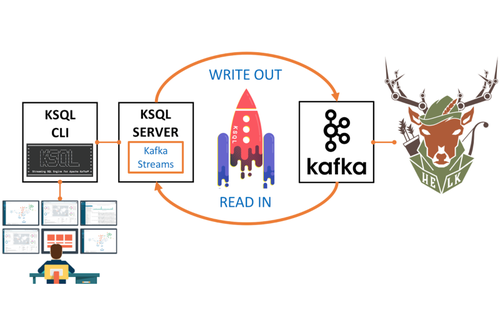

KSQL Training for Hands-On Learning

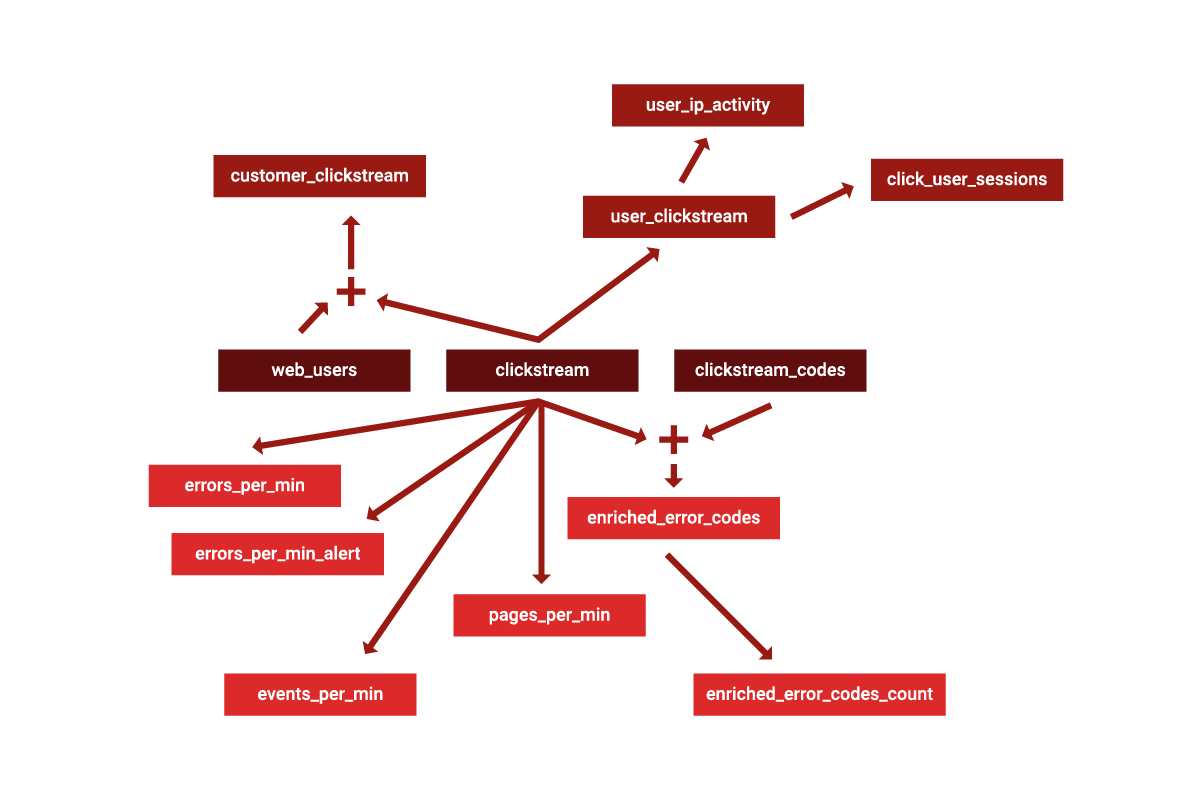

I’ve been using KSQL from Confluent since its first developer preview in 2017. Reading, writing, and transforming data in Apache Kafka® using KSQL is an effective way to rapidly deliver […]

Deploying Kafka Streams and KSQL with Gradle – Part 3: KSQL User-Defined Functions and Kafka Streams

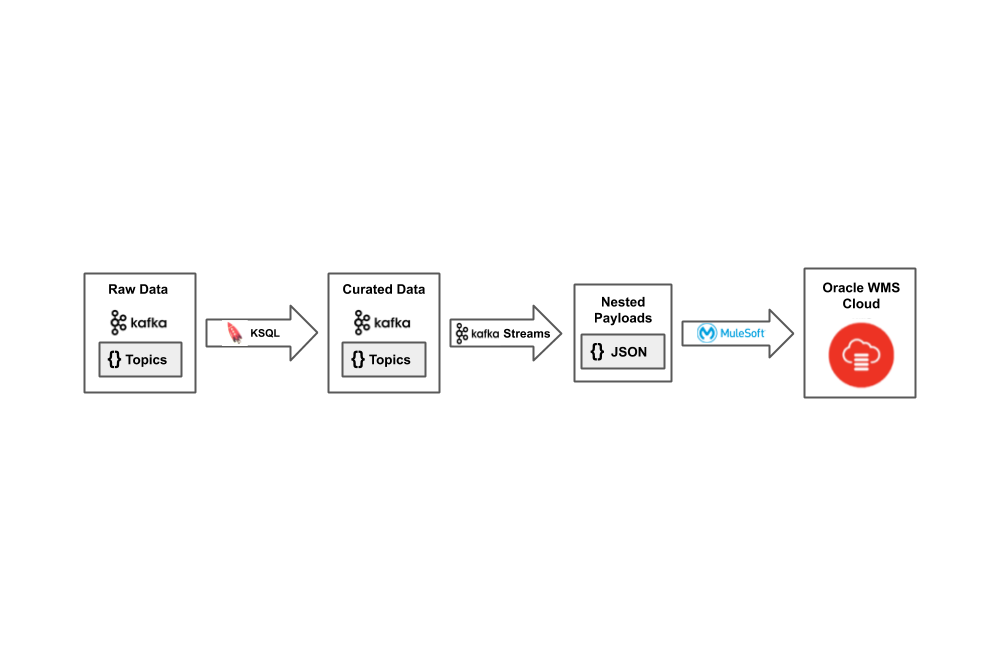

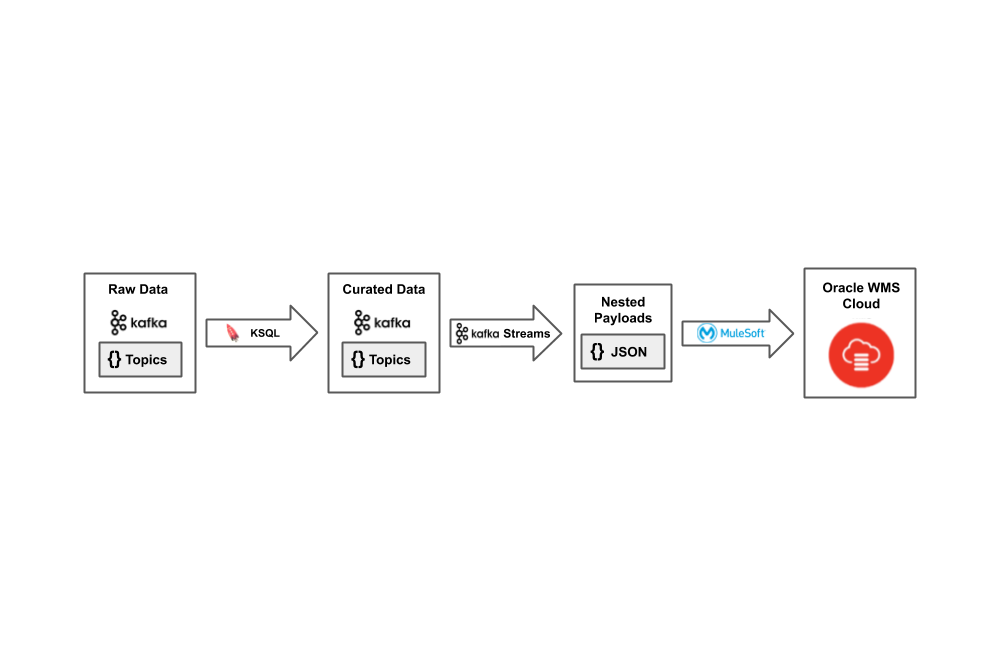

Building off part 1 where we discussed an event streaming architecture that we implemented for a customer using Apache Kafka, KSQL, and Kafka Streams, and part 2 where we discussed […]

Deploying Kafka Streams and KSQL with Gradle – Part 2: Managing KSQL Implementations

In part 1, we discussed an event streaming architecture that we implemented for a customer using Apache Kafka®, KSQL from Confluent, and Kafka Streams. Now in part 2, we’ll discuss […]

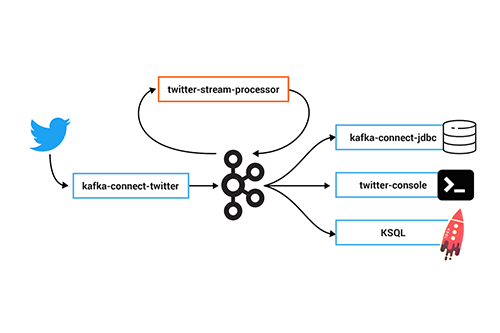

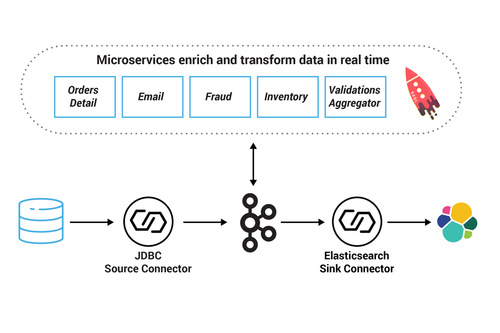

Deploying Kafka Streams and KSQL with Gradle – Part 1: Overview and Motivation

Red Pill Analytics was recently engaged by a Fortune 500 e-commerce and wholesale company that is transforming the way they manage inventory. Traditionally, this company has used only a few […]

Optimizing Kafka Streams Applications

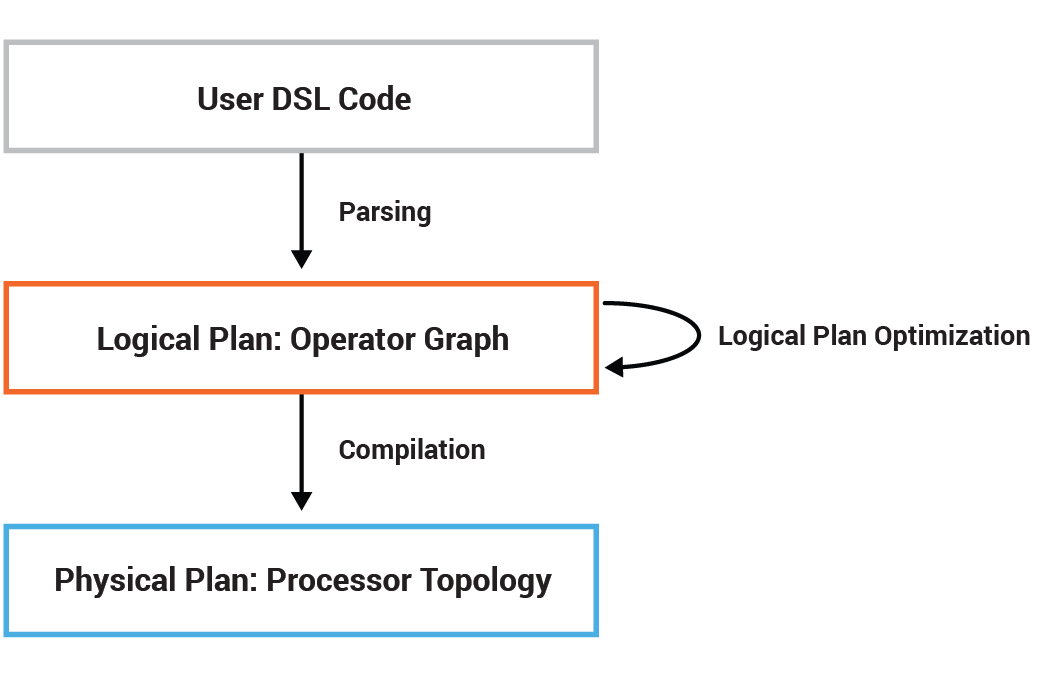

With the release of Apache Kafka® 2.1.0, Kafka Streams introduced the processor topology optimization framework at the Kafka Streams DSL layer. This framework opens the door for various optimization techniques […]

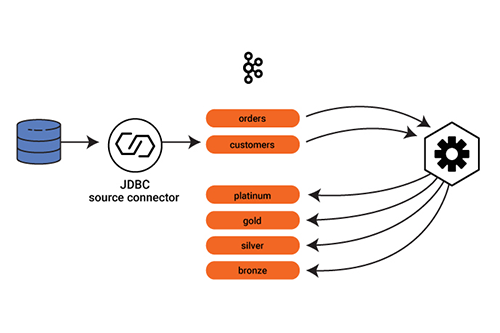

Putting Events in Their Place with Dynamic Routing

Event-driven architecture means just that: It’s all about the events. In a microservices architecture, events drive microservice actions. No event, no shoes, no service. In the most basic scenario, microservices […]

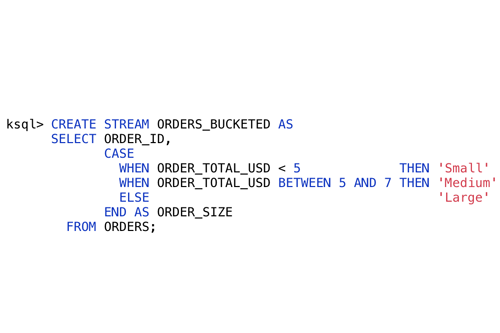

KSQL: What’s New in 5.2

KSQL enables you to write streaming applications expressed purely in SQL. There’s a ton of great new features in 5.2, many of which are a result of requests and support […]

The Importance of Distributed Tracing for Apache Kafka Based Applications

Apache Kafka® based applications stand out for their ability to decouple producers and consumers using an event log as an intermediate layer. One result of this is that producers and […]

Kafka Streams’ Take on Watermarks and Triggers

Back in May 2017, we laid out why we believe that Kafka Streams is better off without a concept of watermarks or triggers, and instead opts for a continuous refinement […]

Sysmon Security Event Processing in Real Time with KSQL and HELK

During a recent talk titled Hunters ATT&CKing with the Right Data, which I presented with my brother Jose Luis Rodriguez at ATT&CKcon, we talked about the importance of documenting and […]

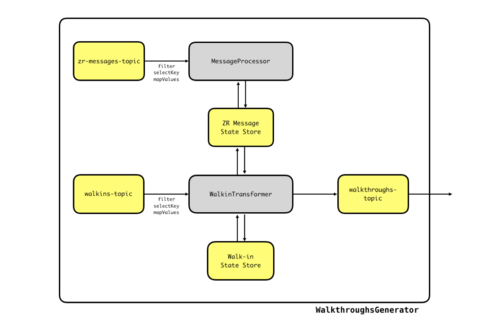

A Beginner’s Perspective on Kafka Streams: Building Real-Time Walkthrough Detection

Here at Zenreach, we create products to enable brick-and-mortar merchants to better understand, engage and serve their customers. Many of these products rely on our capability to quickly and reliably […]

Getting Your Feet Wet with Stream Processing – Part 2: Testing Your Streaming Application

Part 1 of this blog series introduced a self-paced tutorial for developers who are just getting started with stream processing. The hands-on tutorial introduced the basics of the Kafka Streams […]

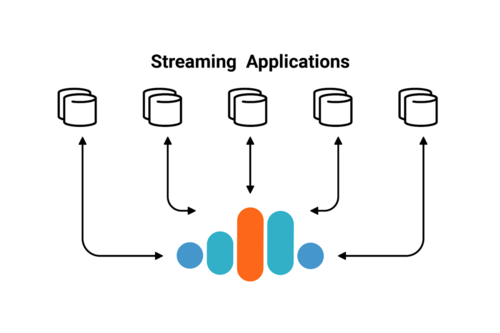

Getting Your Feet Wet with Stream Processing – Part 1: Tutorial for Developing Streaming Applications

Stream processing is a data processing technology used to collect, store, and manage continuous streams of data as it’s produced or received. Stream processing (also known as event streaming or […]

Deep Dive into ksqlDB Deployment Options

The phrase "time value of data" has been used to demonstrate that the value of captured data diminishes over time. This means that the sooner the data is captured, analyzed […]