
Confluent Blog

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method for connecting database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Contributing to Apache Kafka®: How to Write a KIP

Learn how to contribute to open source Apache Kafka by writing Kafka Improvement Proposals (KIPs) that solve problems and add features! Read on for real examples.

Building AI Agents and Copilots with Confluent, Airy, and Apache Flink

Airy helps developers build copilots as a new interface for exploring and working with streaming data, turning natural language into Flink jobs that act as agents.

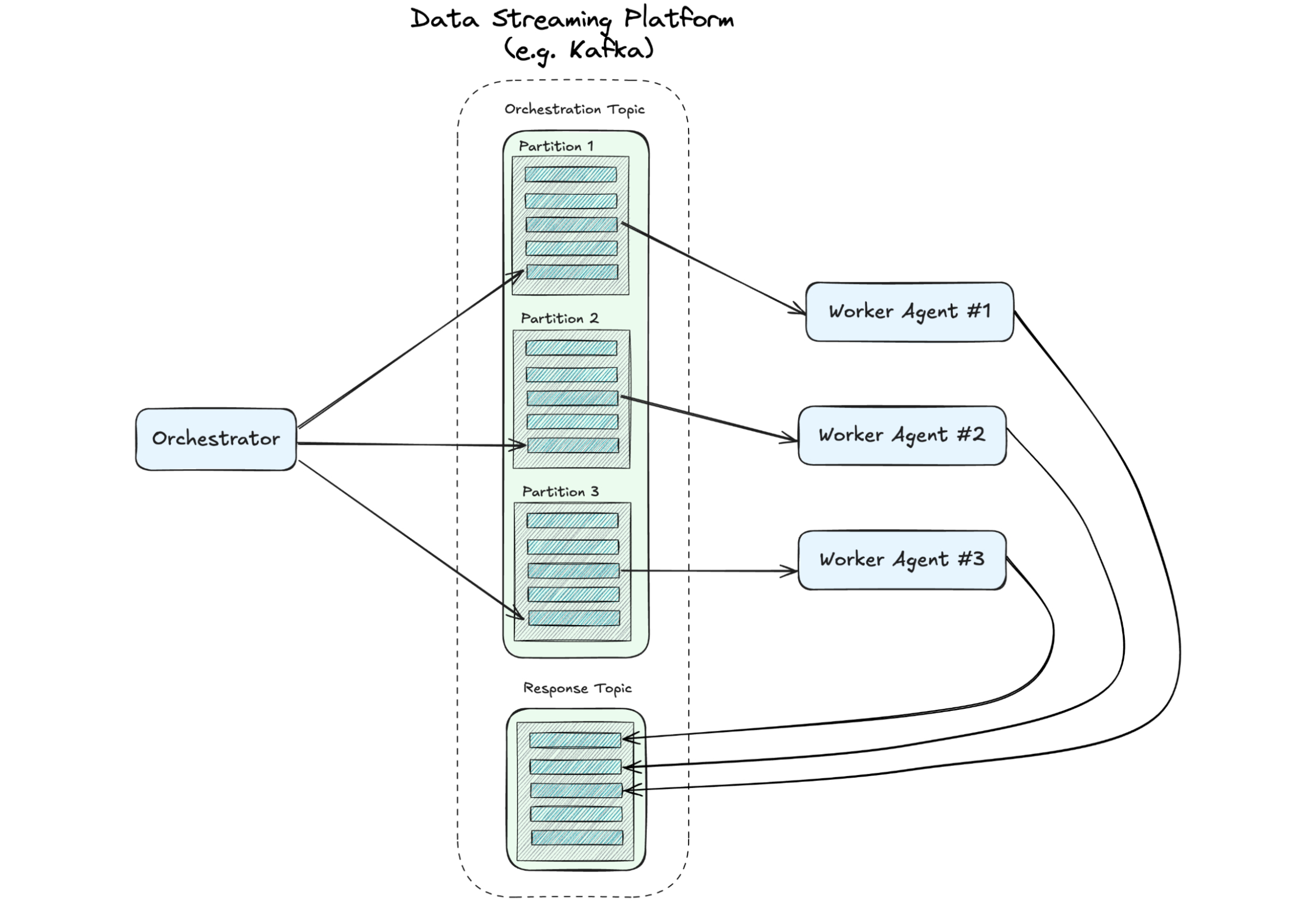

A Distributed State of Mind: Event-Driven Multi-Agent Systems

This article explores how event-driven design, a proven approach in microservices, can bring order to the chaos of multi-agent systems, making them scalable and efficient. If you're leading teams toward the future of AI, understanding these patterns is critical. We'll demonstrate how they can be implemented.

How Real-Time Data Streaming with GenAI Accelerates Singapore’s Smart Nation Vision

Real-time data streaming and GenAI are advancing Singapore's Smart Nation vision. As AI adoption grows, challenges ranging from data silos to legacy infrastructure can slow progress, but Confluent, through IMDA's Tech Acceleration Lab, is helping organizations overcome these hurdles and develop smarter citizen services.

Using Apache Flink® for Model Inference: A Guide for Real-Time AI Applications

Learn how Flink enables developers to connect real-time data to external models through remote inference, allowing seamless coordination between data processing and AI/ML workflows.

Building High Throughput Apache Kafka Applications with Confluent and Provisioned Mode for AWS Lambda Event Source Mapping (ESM)

Learn how to use the recently launched Provisioned Mode for Lambda's Kafka ESM to build high-throughput Kafka applications with Confluent Cloud's Kafka platform. This blog post also walks through a sample scenario for activating and testing Provisioned Mode for ESM, and outlines best practices.

Motivating Engineers to Solve Data Challenges with a Growth Mindset

Learn how Confluent Champion Suguna motivates her team of engineers to solve complex problems for customers—while challenging herself to keep growing as a manager.

Confluent Cloud for Government Is Now FedRAMP Ready

Confluent has achieved FedRAMP Ready status for its Confluent Cloud for Government offering, an essential milestone in providing secure data streaming services to government agencies and a demonstration of its commitment to rigorous security standards. This status marks a key step towards full...

Bridging the Data Divide: How Confluent and Databricks Are Unlocking Real-Time AI

An expanded partnership between Confluent and Databricks will dramatically simplify the integration between analytical and operational systems, so enterprises spend less time fussing over siloed data and governance and more time creating value for their customers.

Confluent Announces New Cohort for AI Accelerator Program Focused on Real-Time Generative AI Applications

Confluent is thrilled to announce the newest cohort of early-stage startups joining the Confluent for Startups AI Accelerator program. This 10-week virtual program is designed to support the next generation of AI innovators that are developing real-time generative AI (GenAI) applications.

Automating Podcast Promotion with AI and Event-Driven Design

We built an AI-powered tool to automate LinkedIn post creation for podcasts, using Kafka, Flink, and OpenAI models. With an event-driven design, it's scalable, modular, and future-proof. Learn how the system works in our latest blog post, and explore the code on GitHub.

Revolutionizing Failure Management in Apache Flink: Meet FLIP-304's Pluggable Failure Enrichers

FLIP-304 lets you customize and enrich your Flink failure messaging: assign types to failures, emit custom metrics per type, and expose your failure data to other tools.

Unlock the Power of Data Streaming: Introducing The Ultimate Guide

Discover how to unlock the full potential of data streaming with Confluent's "Ultimate Data Streaming Guide." This comprehensive resource maps the journey to becoming a Data Streaming Organization (DSO), with best practices, industry success stories, and insights to help you scale your data streaming strategy.

Agentic AI: The Top 5 Challenges and How to Overcome Them

Before deploying agentic AI, enterprises should be prepared to address several issues that could impact the trustworthiness and security of the system.

Optimize SaaS Integration with Fully Managed HTTP Connectors V2 for Confluent Cloud

Learn how an e-commerce company integrates the data from its Stripe system with the Pinecone vector database using the new fully managed HTTP Source V2 and HTTP Sink V2 Connectors along with Flink AI model inference in Confluent Cloud to enhance its real-time fraud detection.