
Secure Stream Processing with Apache Kafka, Confluent Platform and KSQL

In this blog post, we first look at stream processing examples using KSQL that show how companies are using Apache Kafka® to grow their business and to analyze data in real time. These use cases establish KSQL as part of critical business workflows, which means that securing KSQL is also critical. Then we look at how to secure KSQL and the entire Confluent Platform with encryption, authentication, and authorization.

KSQL Use Cases

Kafka enables companies to transform their business with event-driven architectures. They can deploy real-time data pipelines that organize all of an enterprise’s data around a single source of truth, and then use stream processing to enable new business opportunities and new methods of real-time analysis and decision-making. KSQL is the streaming SQL engine for Apache Kafka that makes it very easy to read, write, and process streaming data in real time, at scale, using a SQL-like syntax. There’s no need to write any code in a programming language like Java or Scala.

Below is a KSQL expression that analyzes customer behavior in a hypothetical financial services business. It automatically qualifies residential mortgage clients who have good credit scores and captures those clients in a stream called qualified_clients. This produces a list of customers that a sales representative could contact to offer new (and hopefully valuable!) services.

CREATE STREAM qualified_clients AS
  SELECT client_id, city, action
  FROM mortgages m
  LEFT JOIN clients c ON m.client_id = c.user_id
  WHERE c.type = 'Residential' AND c.credit_score > 750;
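To make the query's logic concrete, here is a minimal plain-Python sketch of the same join and filter. It is not KSQL and not how KSQL executes the statement. The row shapes are assumptions: the query does not say which side `city` and `action` come from, so the sketch takes `city` from the client record and `action` from the mortgage event, and it models `clients` as a lookup keyed by `user_id`.

```python
from typing import Dict, Iterable, Iterator


def qualified_clients(
    mortgages: Iterable[dict], clients: Dict[int, dict]
) -> Iterator[dict]:
    """Join each mortgage event to its client and keep qualifying rows.

    Assumed (hypothetical) shapes:
      mortgage event: {"client_id": ..., "action": ...}
      client record:  {"type": ..., "credit_score": ..., "city": ...}
    """
    for m in mortgages:
        # LEFT JOIN ... ON m.client_id = c.user_id: the client may be missing.
        c = clients.get(m["client_id"])
        if c is None:
            # The WHERE clause tests client columns, so an unmatched
            # mortgage event can never satisfy it.
            continue
        if c["type"] == "Residential" and c["credit_score"] > 750:
            yield {
                "client_id": m["client_id"],
                "city": c["city"],
                "action": m["action"],
            }
```

Because the WHERE clause only tests columns from `clients`, mortgage events without a matching client drop out, so in this sketch the LEFT JOIN behaves like an inner join.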

Companies that have a massive amount of data to process, such as those in the Internet of Things (IoT) domain, rely on Kafka for real-time stream processing, operations, and alerting. Below is another KSQL expression that identifies devices reporting a saturation level more than three times within a five-second window and creates events in a table called possible_malfunction. The system can then decide to send alerts on those events.

CREATE TABLE possible_malfunction AS
  SELECT device_id, count(*)
  FROM saturation_events
  WINDOW TUMBLING (SIZE 5 SECONDS)
  GROUP BY device_id
  HAVING count(*) > 3;
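The windowed count can be illustrated with a small plain-Python sketch. It is a batch approximation of the idea, not KSQL's streaming implementation, and it assumes each event is a hypothetical `(device_id, timestamp_ms)` pair. Each event is assigned to the fixed five-second bucket its timestamp falls into, events are counted per device and bucket, and only the counts above three are kept.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple

WINDOW_MS = 5_000  # SIZE 5 SECONDS


def possible_malfunction(
    events: Iterable[Tuple[str, int]],
    window_ms: int = WINDOW_MS,
    threshold: int = 3,
) -> Dict[Tuple[str, int], int]:
    """Count events per (device_id, window_start_ms); keep counts > threshold."""
    counts: Counter = Counter()
    for device_id, ts_ms in events:
        # Tumbling windows are fixed-size and non-overlapping, so every
        # timestamp belongs to exactly one window.
        window_start = ts_ms - (ts_ms % window_ms)
        counts[(device_id, window_start)] += 1
    # HAVING count(*) > 3
    return {key: n for key, n in counts.items() if n > threshold}
```

One consequence of tumbling windows is visible here: four events that arrive within five seconds of each other but straddle a window boundary are split across two buckets and do not trigger the condition.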

The KSQL examples above demonstrate how important stream processing is to companies across multiple domains—and security is critical to businesses like the ones we’ve illustrated. Financial services need to protect sensitive customer data and IoT companies need to protect their deployments from security vulnerabilities to ensure their operations continue to function and are not compromised by malicious code at the edge.

Securing KSQL

To connect to a secured Kafka cluster, Kafka client applications need to provide their security credentials. In the same way, we configure KSQL so that the KSQL servers are authenticated and authorized, and their communication with the Kafka cluster is encrypted. We can configure KSQL for:

Here is a sample configuration for a KSQL server:
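As a minimal sketch of the kind of settings such a file carries, the helper below renders a properties file for a KSQL server talking to a broker over SASL_SSL with SASL/PLAIN. The choice of SASL/PLAIN, the function name, and every host name, path, and credential passed in are assumptions for illustration. The keys themselves are standard Kafka client settings plus the `ksql.schema.registry.url` setting mentioned in this post.

```python
def render_ksql_properties(
    bootstrap_servers: str,
    schema_registry_url: str,
    truststore_location: str,
    truststore_password: str,
    username: str,
    password: str,
) -> str:
    """Return the text of a KSQL server properties file (illustrative only)."""
    # JAAS login configuration for SASL/PLAIN, passed inline to the client.
    jaas = (
        "org.apache.kafka.common.security.plain.PlainLoginModule required "
        f'username="{username}" password="{password}";'
    )
    settings = {
        "bootstrap.servers": bootstrap_servers,
        # Encrypt traffic with SSL and authenticate with SASL.
        "security.protocol": "SASL_SSL",
        "ssl.truststore.location": truststore_location,
        "ssl.truststore.password": truststore_password,
        "sasl.mechanism": "PLAIN",
        "sasl.jaas.config": jaas,
        # HTTPS endpoint of Confluent Schema Registry.
        "ksql.schema.registry.url": schema_registry_url,
    }
    return "\n".join(f"{key}={value}" for key, value in settings.items()) + "\n"
```

Writing the returned string to a file such as ksql.properties gives something that can be passed to the server on startup. In a real deployment the passwords would come from a secrets store rather than from source code.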

Save your KSQL configuration parameters in a text file, and then pass this properties file to KSQL as a command line argument when you start it:

$ ksql-server-start ksql.properties

Once you have started KSQL, you can review settings for the configuration parameters:

ksql> SHOW PROPERTIES;

KSQL also natively supports data in the Avro format through integration with Confluent Schema Registry. If you are using KSQL with HTTPS to Confluent Schema Registry, set ksql.schema.registry.url to an HTTPS URL, as shown in the properties file above. In addition, set the KSQL environment variable KSQL_OPTS to define the credentials to use when communicating with the Confluent Schema Registry:
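One way to assemble that variable is sketched below. It assumes the credentials are supplied through the standard JVM `javax.net.ssl.*` system properties. The helper names, paths, and passwords are hypothetical, and the keystore pair is only needed if Schema Registry asks for a client certificate.

```python
import os
from typing import Dict, Optional


def build_ksql_opts(
    truststore: str,
    truststore_password: str,
    keystore: Optional[str] = None,
    keystore_password: Optional[str] = None,
) -> str:
    """Build a KSQL_OPTS value from JVM SSL system properties."""
    opts = [
        f"-Djavax.net.ssl.trustStore={truststore}",
        f"-Djavax.net.ssl.trustStorePassword={truststore_password}",
    ]
    if keystore is not None:
        # Client certificate, for when the server requires one.
        opts.append(f"-Djavax.net.ssl.keyStore={keystore}")
        opts.append(f"-Djavax.net.ssl.keyStorePassword={keystore_password}")
    return " ".join(opts)


def ksql_environment(
    opts: str, base_env: Optional[Dict[str, str]] = None
) -> Dict[str, str]:
    """Return a copy of the environment with KSQL_OPTS set."""
    env = dict(os.environ if base_env is None else base_env)
    env["KSQL_OPTS"] = opts
    return env
```

The resulting dictionary can be passed as the `env` argument of `subprocess.run` when launching `ksql-server-start ksql.properties`, which keeps the credentials out of the parent shell's environment.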

Securing Confluent Platform

Security is not a bolt-on feature—it’s a mindset and a process. With Confluent, your organization can deploy an enterprise solution that supports your organization’s process of greater security awareness. Every single component in the Confluent Platform has been designed with end-to-end security in mind:

From your clients…

- KSQL

- Kafka Streams API

- Confluent Python, Go, .NET, C/C++, JMS clients

…to your production infrastructure…

- Kafka brokers

- Kafka Connect

- Confluent REST proxy

- Confluent Schema Registry

- ZooKeeper

…to your monitoring infrastructure…

- Confluent Control Center

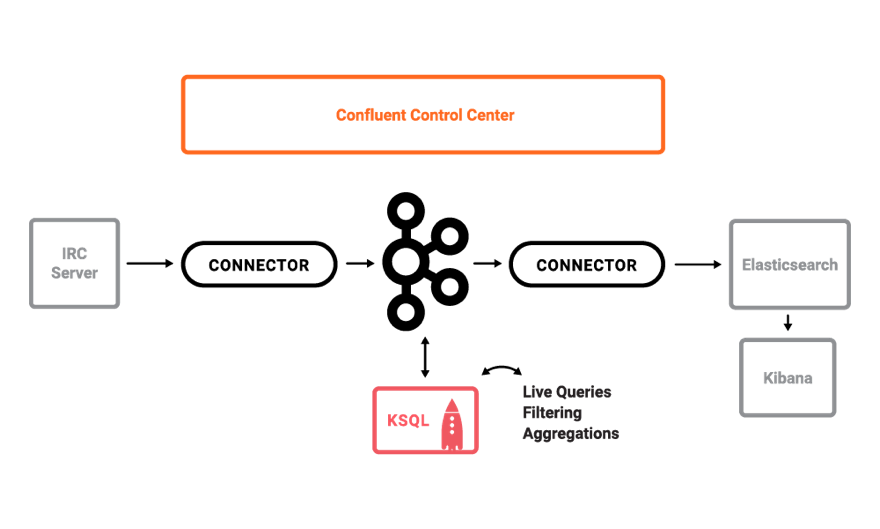

Check out the Confluent Platform demo to see security features working together across the platform. The use case in the demo is a Kafka streaming ETL deployment on live edits to real Wikipedia pages.

You can bring up this demo in less than five minutes, with security enabled on the Kafka cluster, and observe the components working end-to-end:

- KSQL

- Kafka Connect

- Confluent Replicator

- Confluent Schema Registry

- Kafka command line tools

- Confluent Control Center, including Confluent Metrics Reporter and Confluent Monitoring Interceptors

The demo also comes with a playbook of scenarios to get a technical understanding of how Kafka works. The playbook has a security section with an accompanying video series to go through some basic scenarios. For example, you can investigate what happens if a user tries to connect to a Kafka cluster to list topics without providing the proper authentication credentials:

Error: Executing consumer group command failed due to Request METADATA failed on brokers List(kafka1:9091 (id: -1 rack: null))

Or investigate how a broker logs an unauthorized client trying to consume messages from a topic in a Kafka cluster, and then configure ACLs to fix it:

INFO Principal = User:CN=client,OU=TEST,O=CONFLUENT,L=PaloAlto,ST=Ca,C=US is Denied Operation = Describe from host = 172.23.0.7 on resource = Group:test (kafka.authorizer.logger)
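As a rough sketch of the fix, the helper below assembles a `kafka-acls` invocation that grants the denied principal consumer access. The `--consumer` convenience option adds Read and Describe on the topic and Read on the group. The helper name, the ZooKeeper address, and the topic name in the usage line are assumptions. The principal and the group `test` are taken from the log line above.

```python
from typing import List


def consumer_acl_command(
    principal: str,
    topic: str,
    group: str,
    zookeeper: str = "zookeeper:2181",  # assumed address
) -> List[str]:
    """Build argv for kafka-acls to let `principal` consume `topic` as `group`."""
    return [
        "kafka-acls",
        "--authorizer-properties", f"zookeeper.connect={zookeeper}",
        "--add",
        "--allow-principal", principal,
        # Convenience option: Read and Describe on the topic, Read on the group.
        "--consumer",
        "--topic", topic,
        "--group", group,
    ]


cmd = consumer_acl_command(
    "User:CN=client,OU=TEST,O=CONFLUENT,L=PaloAlto,ST=Ca,C=US",
    "example-topic",  # hypothetical topic name
    "test",
)
```

Building the command as an argument list rather than a single string means the commas in the principal need no shell quoting when the list is handed to `subprocess.run`.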

The demo also includes security for Confluent Control Center, which is the management and monitoring solution for Kafka. The same business security requirements that apply to the underlying infrastructure (e.g., producer and consumer clients, KSQL servers, etc.) also apply to monitoring infrastructure. This is because the data itself, as well as topic names, consumer group names, etc., may all include sensitive information. Access to it should be protected and must not be compromised.

Control Center has a unified configuration experience in supporting the same encryption, authentication, and authorization feature sets as your production Kafka cluster. In contrast, if you are using third-party monitoring tools, it is painful to configure and maintain security in a totally different interface from your production Kafka deployment, if those monitoring tools even support security features at all.

Security Resources

To make configuring Kafka security easier, we have recently improved our security configuration documentation. We have also added a security tutorial as a step-by-step guide for bringing up a secure cluster with Kafka brokers, ZooKeeper, Kafka Connect, Confluent Replicator, and optional monitoring via Confluent Control Center.

The tutorial walks through one example configuration and is a great starting point for learning how to configure security on the Confluent Platform. The tutorial takes you through these steps:

- creating SSL keys and certificates

- configuring SSL encryption and SASL authentication on brokers

- configuring clients with the right credentials and security settings

- using kafka-acls for authorization

- troubleshooting commands

Using this as a reference, you can then customize your deployment per your own requirements:

- The tutorial authenticates with SASL/PLAIN but if your organization uses Active Directory with Kerberos, you can similarly configure SASL/GSSAPI authentication.

- The tutorial configures super users and uses Kafka’s out-of-the-box SimpleAclAuthorizer to add ACLs for other users. But the Authorizer interface is extensible, and you can plug in your own authorizer implementation.

- The tutorial configures a mix of SSL and SASL_SSL listeners on the broker, but you can also add an unsecured PLAINTEXT listener if you are migrating your cluster.

- You can enable security plugins for Confluent Schema Registry or Confluent REST Proxy to propagate authenticated principals to the Kafka cluster.

Confluent Summary

Companies are transforming their businesses with event-driven architectures. Confluent Platform provides end-to-end security for the streaming platform that enables those architectures to be the central nervous system for their companies.

Take the next steps to see how all the Confluent Platform components work securely together:

Interested in Learning More?

If you’d like to learn more about the Confluent Platform, here are some resources for you:

- Get started with KSQL to process and analyze your company’s data in real time

- Watch our three-part online talk series for the ins and outs behind how KSQL works, and learn how to use it effectively to perform monitoring, security and anomaly detection, online data integration, application development, streaming ETL and more

- Check out the Confluent Platform Demo and the Security Tutorial described in this post

- And be sure to watch the video series on Confluent Platform Security
