Apache Kafka as a Service with Confluent Cloud Now Available on AWS Marketplace

You may already know that Confluent Cloud is available across AWS, Azure, and Google Cloud, allowing you to access the amazing stack built by Confluent including a battle-tested version of Apache Kafka®, Confluent Schema Registry, ksqlDB, and Kafka Connect as managed services on your cloud of choice.

You may also know that Confluent Cloud lets Azure and Google Cloud developers use the service through their cloud marketplaces, taking advantage of a unified billing model in which Confluent Cloud is just another item on their cloud bill. What you may not know is that this is now possible for AWS developers too. Today, we are delighted to share that Confluent Cloud is now available on AWS Marketplace, with two options to choose from: Annual Commits and Pay As You Go (PAYG).

Why is this announcement important?

Apache Kafka has seen tremendous adoption over the years and is increasingly becoming the foundation for event streaming applications. That is why it is important to have access to a fully managed Apache Kafka service that frees you from operational complexity, so you don’t need KafkaOps pros in order to use the technology.

While Confluent Cloud has always been available on AWS, before it was listed on the marketplace you needed to sign up and provide a credit card as the form of payment to get started. This meant managing a separate bill in addition to your AWS bill. That could be a point of friction, because developers who are familiar with AWS prefer to consume services through their existing accounts so they can stay focused on development.

I am happy to say that having two separate bills is no longer necessary, and you can now subscribe to Confluent Cloud with your existing AWS account. And just like that, any consumption of Confluent Cloud will be reflected on your monthly AWS bill.

But a managed Apache Kafka service with a single, integrated billing model is not the only advantage. Confluent Cloud is a complete event streaming platform that gives you the tools to build event streaming applications with ease. Tools such as Confluent Schema Registry, ksqlDB, and connectors for Amazon S3, Amazon Kinesis, and Amazon Redshift are also available as managed services, letting you focus on creating and inventing things rather than on plumbing.

For instance, if your use case streams data into a data lake on Amazon S3 and events need to be curated (e.g., filtered, partitioned, adjusted, aggregated, or enriched) before they land in the S3 bucket, Confluent Cloud can help you cut the complexity of building this by more than half. While AWS developers may be used to implementing use cases like this with services such as Amazon Managed Streaming for Apache Kafka (Amazon MSK), Amazon Kinesis Data Analytics, and Amazon Kinesis Data Firehose, Confluent Cloud greatly simplifies the development experience by providing all of these capabilities in a single service.
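
As a rough illustration of what “curated” means here, the following plain-Python sketch filters, enriches, and partitions events before they would be written to S3. It is not how Confluent Cloud implements this (there you would more likely express the logic in ksqlDB and let a managed S3 connector write the results); the event fields (`type`, `amount`, `ts`, `region`), the `REGION_NAMES` lookup table, and the `purchases/dt=YYYY-MM-DD/` key layout are all hypothetical.

```python
from datetime import datetime, timezone
from typing import Iterable, Iterator, Tuple

# Hypothetical reference data used to enrich events.
REGION_NAMES = {"us": "United States", "de": "Germany"}


def curate(events: Iterable[dict]) -> Iterator[Tuple[str, dict]]:
    """Yield (s3_key_prefix, curated_event) pairs for events worth keeping.

    Field names and the key layout are illustrative assumptions only.
    """
    for event in events:
        # Filter: keep only purchase events with a positive amount.
        if event.get("type") != "purchase" or event.get("amount", 0) <= 0:
            continue
        # Enrich: copy the event and add a readable region name (None if unknown).
        curated = dict(event, region_name=REGION_NAMES.get(event.get("region")))
        # Partition: derive a per-day prefix from the epoch-seconds timestamp (UTC).
        day = datetime.fromtimestamp(event["ts"], tz=timezone.utc).strftime("%Y-%m-%d")
        yield f"purchases/dt={day}/", curated
```

Feeding it a purchase event with `"region": "de"` and `"ts": 0` yields the prefix `purchases/dt=1970-01-01/` with `region_name` set to `"Germany"`, while non-purchase events and zero-amount purchases are dropped.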

Getting started with Confluent Cloud on AWS Marketplace

In honor of Linus Torvalds, the creator of the Linux kernel, who once said, “Talk is cheap, show me the code,” let’s see how AWS developers can start streaming with Confluent Cloud.

Step 1: Search for Confluent Cloud

First, go to AWS Marketplace and search for Confluent Cloud, as shown in Figure 1. Alternatively, you can go directly to the Annual Commits or PAYG listing.

Figure 1. Searching for Confluent Cloud in the Marketplace

Step 2: Select the subscription type

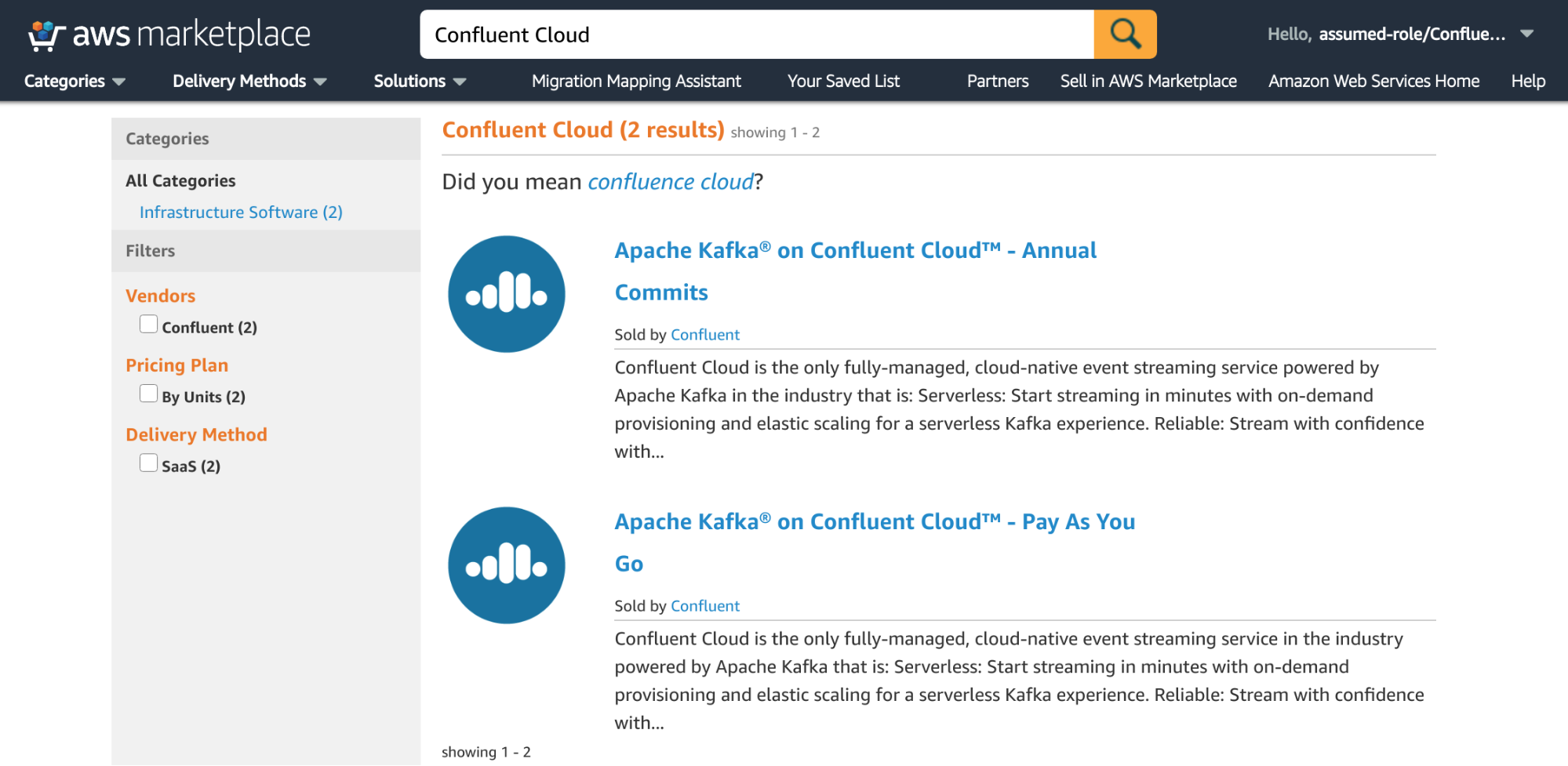

The search in step 1 returns two entries. Both represent Confluent Cloud, but they differ in how you are charged and in which cluster types are available. Select Annual Commits if you want to prepay for one full year of usage. This is a great option if you know up front how much of the service you are going to use and would like a discount on that usage.

Select PAYG to get started without any commitment. With this option, there is no upfront payment, and usage is tracked and billed monthly. The product capabilities are the same as with the Annual Commits option.

Figure 2. Selecting the subscription type

For this blog post, I will select the PAYG option, but whichever option you select, the subscription flow works the same way. The only difference is when you are charged: with PAYG, you are charged at the end of the month for the usage you incurred, whereas with Annual Commits, you are charged the full amount immediately once you complete the subscription.
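
To make the difference in charge timing concrete, the sketch below compares cumulative charges under the two options. All figures are hypothetical, including the $1,000 of monthly usage and the $10,800 commitment (an assumed 10% discount on $12,000 of expected annual usage); actual prices and discount terms are not modeled here. The sketch also assumes usage stays within the commitment, so overage is ignored.

```python
from itertools import accumulate
from typing import List, Sequence


def payg_charges(monthly_usage_usd: Sequence[float]) -> List[float]:
    """PAYG: each month's usage is billed at the end of that month."""
    return [float(u) for u in monthly_usage_usd]


def annual_commit_charges(commit_usd: float, months: int = 12) -> List[float]:
    """Annual Commits: the full amount is billed once, when you subscribe.

    Assumes usage stays within the commitment; overage is not modeled.
    """
    return [float(commit_usd)] + [0.0] * (months - 1)


def cumulative(charges: Sequence[float]) -> List[float]:
    """Running total billed after each month."""
    return list(accumulate(charges))


if __name__ == "__main__":
    usage = [1000.0] * 12  # hypothetical monthly usage in USD
    payg = cumulative(payg_charges(usage))
    commit = cumulative(annual_commit_charges(10800.0))  # hypothetical commitment
    print(f"After month 1:  PAYG ${payg[0]:,.0f} vs. Annual Commits ${commit[0]:,.0f}")
    print(f"After month 12: PAYG ${payg[-1]:,.0f} vs. Annual Commits ${commit[-1]:,.0f}")
```

With these made-up numbers, Annual Commits costs more up front ($10,800 vs. $1,000 after the first month) but less over the year ($10,800 vs. $12,000). If actual usage came in below the commitment, PAYG would be the cheaper choice.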

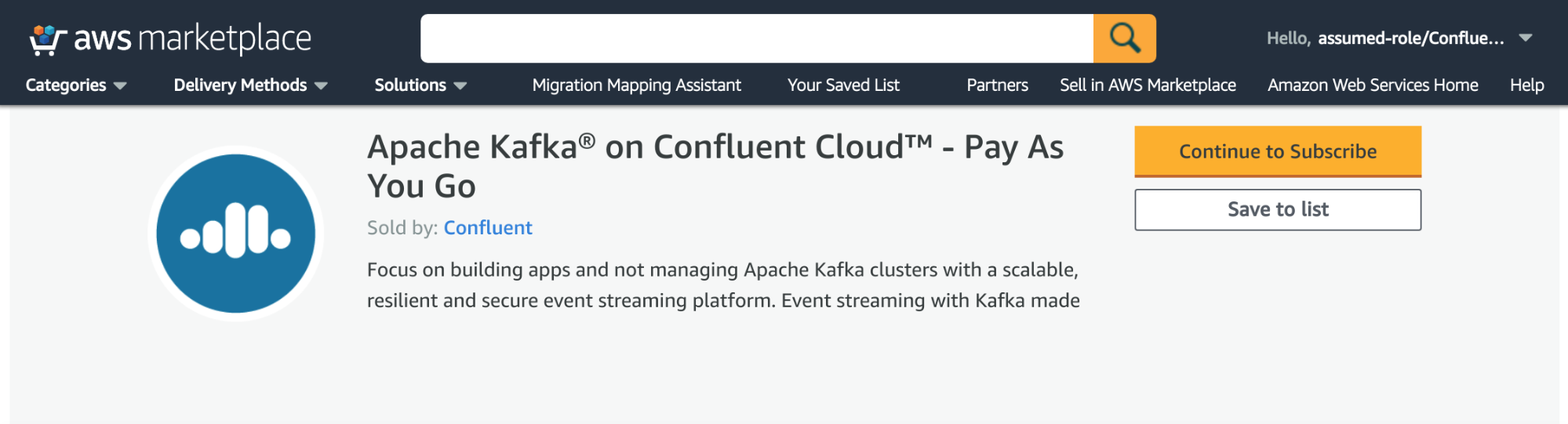

After selecting the subscription type, click on the Continue to Subscribe button to confirm your selection, as shown in Figure 3.

Figure 3. Confirming the selection

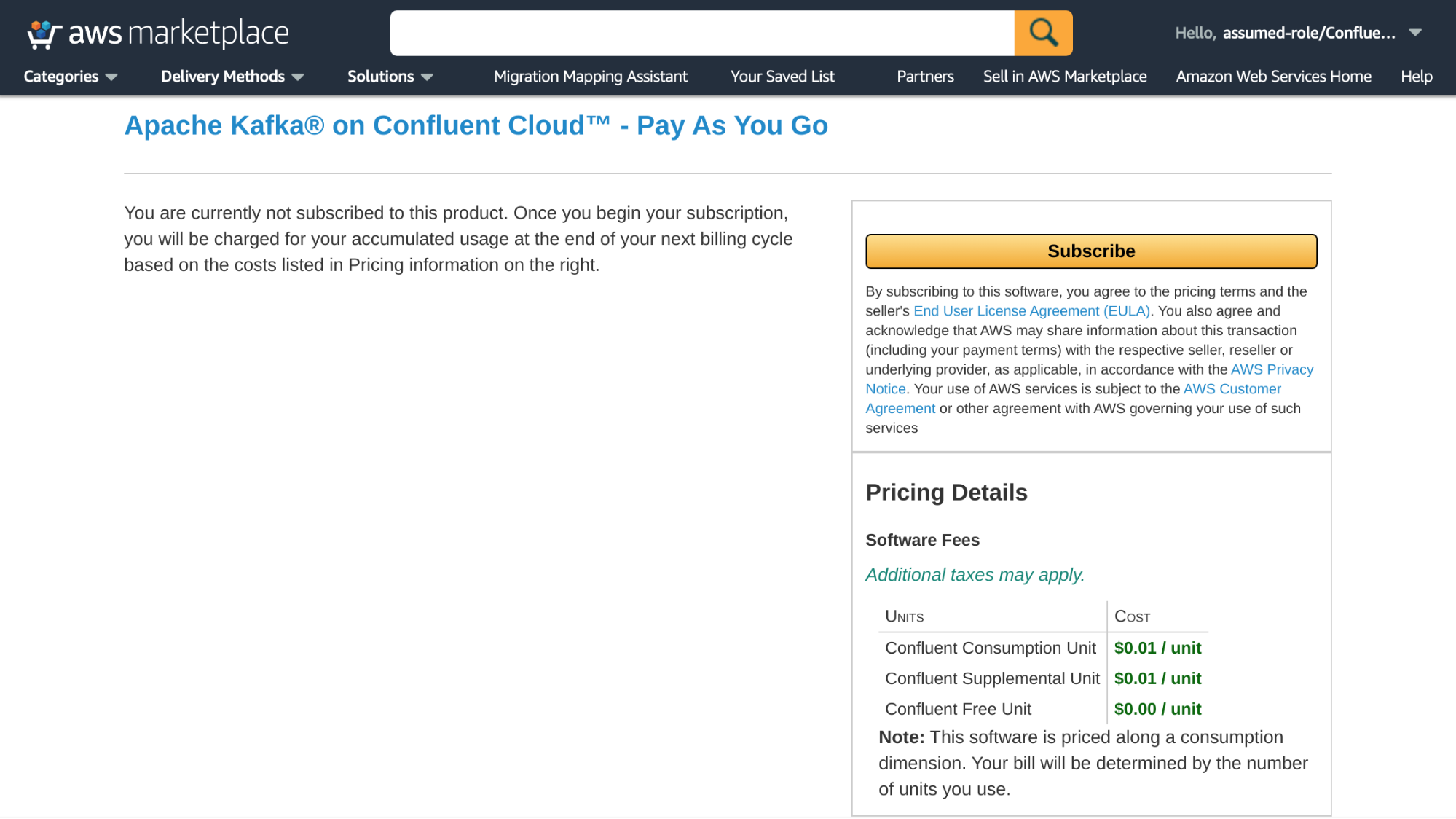

You will be presented with a page that summarizes the selection. On this page, you will find details about whether or not you have already subscribed to the service, as well as a review of the software fees. Click on the Subscribe button to subscribe to Confluent Cloud using your AWS account, as shown in Figure 4. If you are not yet logged in to the AWS account that you want to use with Confluent Cloud, then the UI will ask you to log in.

Figure 4. Confirming the subscription

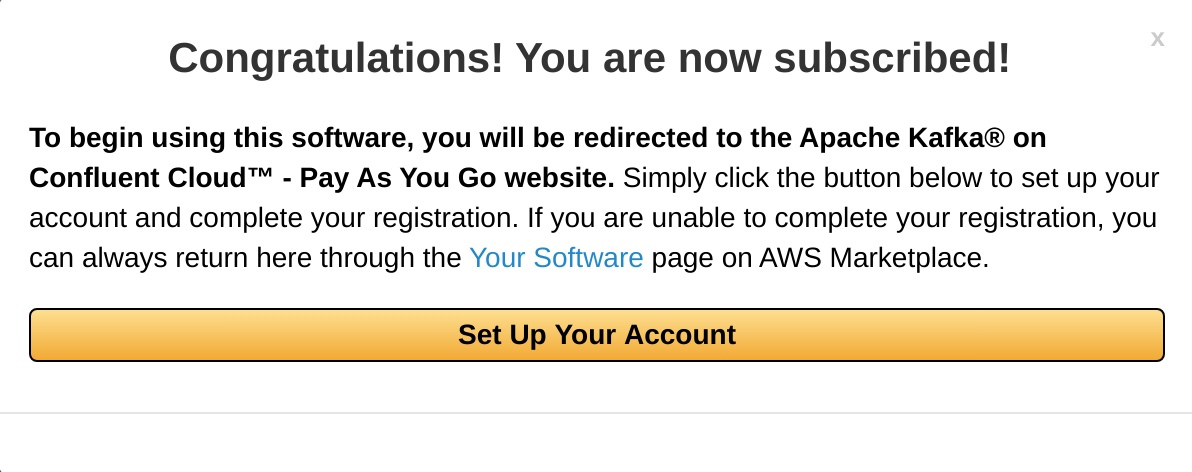

After clicking the Subscribe button, you will see a confirmation dialog similar to the one shown in Figure 5, saying that you have successfully subscribed to the service. From this point on, Confluent Cloud is part of your AWS costs. To use the service, set up the account that you will use to administer the service. Go ahead and click the Set Up Your Account button. A new page will open in your browser where you will have the chance to register the admin user.

Figure 5. Successfully completing the subscription

Step 3: Register the admin user

To register the admin user, use an email address that has never been registered with Confluent Cloud; otherwise, the system will tell you that the user already exists. Provide an email address, your first and last name, your company (if applicable), and a password. Then click the Submit button, as shown in Figure 6.

Figure 6. Registering the admin user for Confluent Cloud

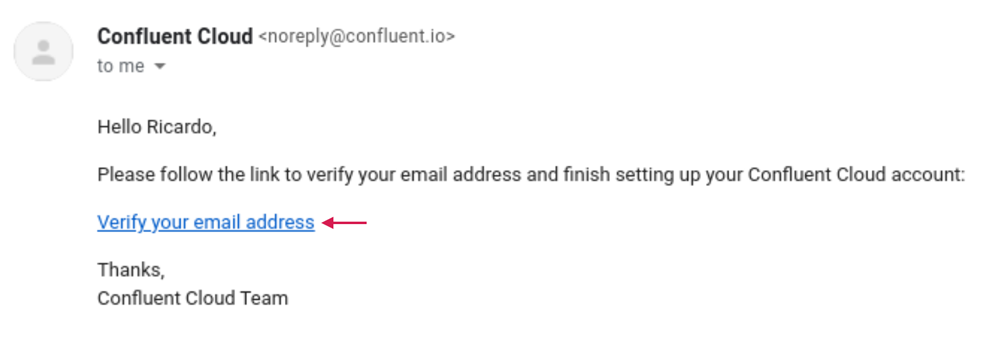

Step 4: Verify your email

You should receive an email asking you to verify the email address you provided. This step is required for security purposes, so that Confluent can verify that you are the person who initiated the registration process. Click the Verify your email address link, as shown in Figure 7.

Figure 7. Verifying your email address

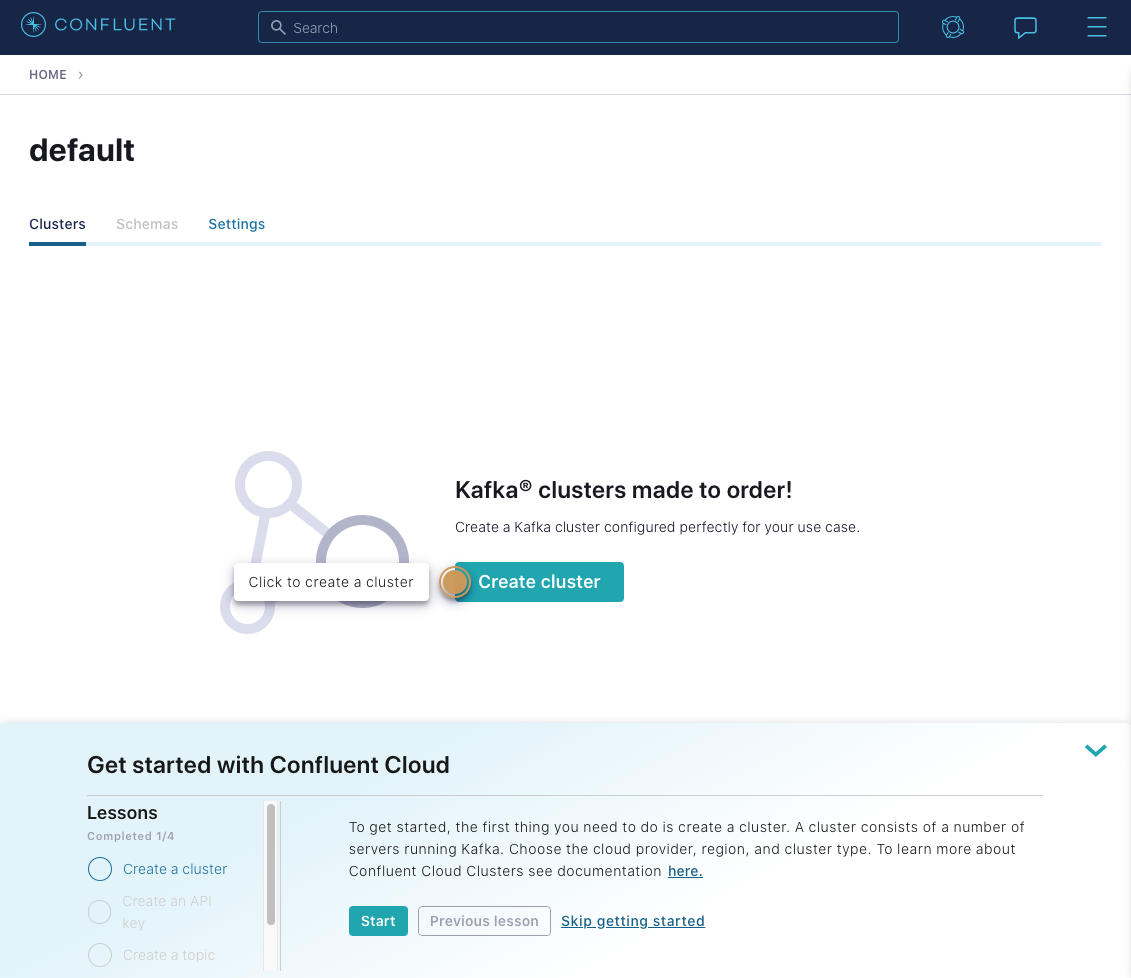

Step 5: Create Kafka clusters

After verification, you will be automatically logged in to Confluent Cloud with your new account (which is the account administrator), and you can start playing with the service immediately. As the admin, you can also invite one or more users, who will receive email invitations to join your account and will be able to create resources as well.

Figure 8. Now the fun begins. Create as many clusters as you need.

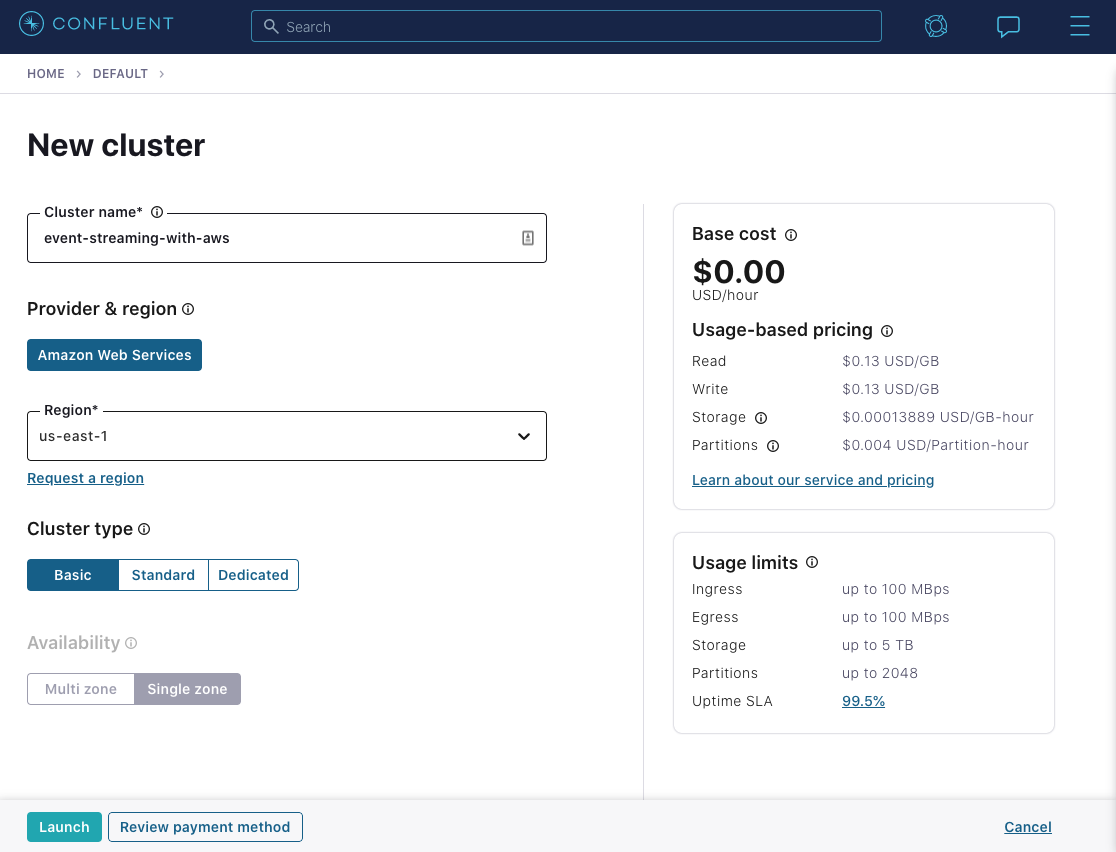

When it comes to creating clusters, there are some decisions to make, but none as daunting as they would be if you were managing Apache Kafka yourself. You just have to name your cluster (any string of your choice), choose the AWS region in which to run the cluster (as close as possible to your apps, to minimize latency), and choose a cluster type. Figure 9 shows an example of cluster creation.

Figure 9. Creating a new cluster in Confluent Cloud

Creating a cluster in Confluent Cloud through AWS Marketplace is no different from signing up directly with Confluent, except that you won’t be asked for credit card details when you create a cluster. The Confluent Cloud documentation provides more details on how to use the service.
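
Once a cluster is running, applications connect to it with a standard Kafka client. The sketch below uses the `confluent-kafka` Python package and assumes you have created an API key and secret for the cluster and copied its bootstrap server address from the Confluent Cloud UI. The helper names `cloud_client_config` and `produce_one` are hypothetical, chosen for this example.

```python
def cloud_client_config(bootstrap_servers: str, api_key: str, api_secret: str) -> dict:
    """Build a librdkafka-style client config for a Confluent Cloud cluster.

    Clients authenticate over SASL_SSL with the PLAIN mechanism, using the
    cluster API key and secret as the SASL username and password.
    """
    return {
        "bootstrap.servers": bootstrap_servers,
        "security.protocol": "SASL_SSL",
        "sasl.mechanisms": "PLAIN",
        "sasl.username": api_key,
        "sasl.password": api_secret,
    }


def produce_one(config: dict, topic: str, key: str, value: str) -> None:
    """Send a single message and wait until it is delivered (or fails)."""
    # Imported lazily so the config helper works without the package installed.
    from confluent_kafka import Producer

    def on_delivery(err, msg):
        # Invoked once per message, from flush(), with the delivery result.
        if err is not None:
            print(f"Delivery failed: {err}")
        else:
            print(f"Delivered to {msg.topic()} [{msg.partition()}] at offset {msg.offset()}")

    producer = Producer(config)
    producer.produce(topic, key=key, value=value, on_delivery=on_delivery)
    producer.flush()  # Block until all queued messages are delivered or fail.
```

For example, `produce_one(cloud_client_config(bootstrap, key, secret), "orders", "order-1", '{"amount": 25.0}')` would send one message to a hypothetical `orders` topic, assuming that topic already exists in the cluster.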

Summary

Confluent Cloud makes it easy and fun to develop event streaming applications with Apache Kafka. Now, Confluent is taking another step towards putting Kafka at the heart of every organization by making Confluent Cloud available on the AWS Marketplace, so you can subscribe to the service with your existing AWS account.

If you haven’t already, sign up for Confluent Cloud today! Use the promo code 60DEVADV for $60 of free Confluent Cloud usage.* If you’re a new user, you’ll also receive $400 to spend within Confluent Cloud during your first 60 days.
