
Bust the Burglars – Machine Learning with TensorFlow and Apache Kafka

Have you ever realized that, according to the latest FBI report, more than 80% of all crimes are property crimes, such as burglaries? And that, according to the FBI’s clearance figures, only 13% of all burglaries in 2017 were cleared, because witnesses and/or physical evidence are often lacking?

How cool would it be to build your own burglar alarm system that can alert you before the actual event takes place, simply by using a few network-connected cameras and analyzing the camera images with Apache Kafka®, Kafka Streams, and TensorFlow? In this blog post, I will show how to implement this use case. For general information about how to build scalable and reliable machine learning infrastructures with the Apache Kafka ecosystem, check out the article Using Apache Kafka to Drive Cutting Edge Machine Learning.

Setting up your burglar alarm

First of all, you will need one or more IP cameras to retrieve the images for processing. There are a couple of key features to look out for when selecting a camera for your burglar alarm.

Using the Java interface to OpenCV, it should be possible to process an RTSP (Real-Time Streaming Protocol) image stream, extract individual frames, and detect motion yourself. If that does not sound appealing, select a camera with built-in motion detection that can upload the images in which motion was detected to a file server.
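If you go the OpenCV route, the simplest form of motion detection is frame differencing: compare each grayscale frame with the previous one and report motion when enough pixels have changed noticeably. The sketch below shows only that idea in plain Java, not the OpenCV API; the `FrameDiffMotionDetector` class, its two thresholds, and the frame representation (equal-length `int[]` arrays of 0 to 255 gray values) are assumptions for illustration.

```java
/**
 * Minimal frame-differencing motion detector (hypothetical helper, not OpenCV).
 * Frames are grayscale pixel arrays of equal length with values 0..255.
 */
final class FrameDiffMotionDetector {
    private final int pixelThreshold;      // per-pixel change that counts as "changed"
    private final double changedFraction;  // fraction of changed pixels that counts as motion

    FrameDiffMotionDetector(int pixelThreshold, double changedFraction) {
        this.pixelThreshold = pixelThreshold;
        this.changedFraction = changedFraction;
    }

    /** Returns true if enough pixels differ between the previous and the current frame. */
    boolean hasMotion(int[] previous, int[] current) {
        if (previous.length != current.length || current.length == 0) {
            throw new IllegalArgumentException("frames must be non-empty and the same size");
        }
        int changed = 0;
        for (int i = 0; i < current.length; i++) {
            if (Math.abs(current[i] - previous[i]) > pixelThreshold) {
                changed++;
            }
        }
        return changed >= changedFraction * current.length;
    }
}
```

With a per-pixel threshold of 25 and a changed fraction of 0.1, for example, a frame counts as motion once at least 10% of its pixels changed by more than 25 gray levels. Both values are only starting points to tune against your own camera, because sensor noise and lighting changes also alter pixels.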

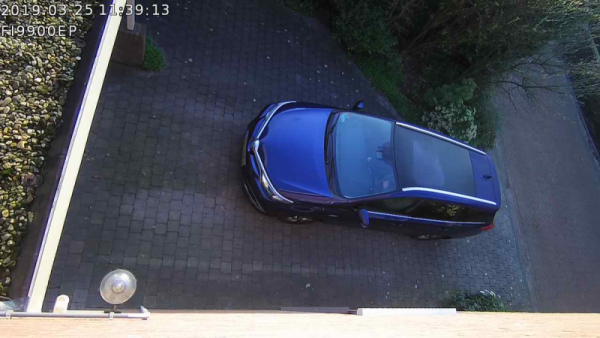

It’s important that the area of the image used for motion detection is configurable as well. For example, in the image above, the street should be excluded from motion detection so that people and cars passing by do not trigger your burglar alarm.
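If you implement motion detection yourself, a configurable detection area can be modeled as a list of excluded rectangles whose pixels are ignored. A minimal sketch, assuming a hypothetical `DetectionArea` helper and frames stored row by row as grayscale `int[]` arrays: it copies the frame and blanks out every excluded pixel, so a frame-differencing detector that compares two masked frames never sees changes in, for example, the street.

```java
import java.awt.Rectangle;
import java.util.List;

/** Hypothetical helper: blanks out excluded regions (e.g. the street) before motion detection. */
final class DetectionArea {
    private final int width;
    private final int height;
    private final List<Rectangle> excluded;

    DetectionArea(int width, int height, List<Rectangle> excluded) {
        this.width = width;
        this.height = height;
        this.excluded = List.copyOf(excluded);
    }

    /** Returns a copy of a row-major grayscale frame with all excluded pixels set to 0. */
    int[] mask(int[] frame) {
        if (frame.length != width * height) {
            throw new IllegalArgumentException("unexpected frame size");
        }
        int[] masked = frame.clone();
        for (Rectangle r : excluded) {
            // Clip to the frame bounds; a non-overlapping rectangle yields no iterations.
            Rectangle clipped = r.intersection(new Rectangle(0, 0, width, height));
            for (int y = clipped.y; y < clipped.y + clipped.height; y++) {
                for (int x = clipped.x; x < clipped.x + clipped.width; x++) {
                    masked[y * width + x] = 0;
                }
            }
        }
        return masked;
    }
}
```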

A convenient option is to use Power over Ethernet (PoE) cameras. This reduces the cabling to a single Ethernet cable per camera, which carries both data and power. If you choose this option, make sure that your network switch can supply PoE.

The Foscam FI9900EP camera and the Netgear GS305P PoE switch satisfy the requirements above.

Before mounting your cameras, take some time to find the best places for them. They should have a clear view of your property and preferably exclude public areas. The most common entry points for burglars are the front door (34%), a first-floor window (23%), and the back door (22%). Based on this, it’s wise to keep at least your front door under constant surveillance.

Uploading your images into Kafka

Assuming the images to be analyzed for potential burglars are continuously uploaded to a file server, we can use the same server as the source for loading the images into Kafka. A viable option for this is a Kafka Connect FTP connector. By using Kafka Connect, we can take advantage of Single Message Transforms (SMTs) or converters to transform the images before they are written to Kafka.

Looking back at the image above, it is important to realize that only snapshots taken during daylight are in full color; those taken at night are captured in infrared and are in grayscale. To make day and night images consistent for burglar detection, we transform all images to grayscale. If you have an HD camera, it also makes sense to reduce the image size by scaling the image proportionally. A third transformation is cropping away the parts of the image that are irrelevant for burglar detection or that may produce false alerts.
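In plain Java, the first two transformations can be done with `java.awt.image.BufferedImage`: drawing into an image of type `TYPE_BYTE_GRAY` converts it to grayscale, and deriving the target height from the target width keeps the aspect ratio. This is a minimal sketch with a hypothetical `ImageTransforms` class, not the author’s converter; the target width is an assumption you would tune to your camera and model.

```java
import java.awt.Graphics2D;
import java.awt.RenderingHints;
import java.awt.image.BufferedImage;

/** Hypothetical helpers for normalizing day (color) and night (infrared) snapshots. */
final class ImageTransforms {
    private ImageTransforms() {}

    /** Scales proportionally to the given width and converts to 8-bit grayscale in one draw. */
    static BufferedImage toScaledGray(BufferedImage source, int targetWidth) {
        // Keep the aspect ratio: the height scales by the same factor as the width.
        int targetHeight = Math.max(1,
                (int) Math.round(source.getHeight() * (double) targetWidth / source.getWidth()));
        BufferedImage gray = new BufferedImage(targetWidth, targetHeight, BufferedImage.TYPE_BYTE_GRAY);
        Graphics2D g = gray.createGraphics();
        try {
            g.setRenderingHint(RenderingHints.KEY_INTERPOLATION,
                    RenderingHints.VALUE_INTERPOLATION_BILINEAR);
            // Drawing into a TYPE_BYTE_GRAY image performs the color-to-gray conversion.
            g.drawImage(source, 0, 0, targetWidth, targetHeight, null);
        } finally {
            g.dispose();
        }
        return gray;
    }
}
```

For a 1280 x 720 snapshot, `toScaledGray(image, 640)` yields a 640 x 360 grayscale image.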

By applying these transformations, which I implemented in a custom Kafka Connect converter, the image above went from 1280 x 720 pixels in full color to 659 x 476 pixels in grayscale: the street was cropped away and the remainder converted to grayscale. This also reduced the file size from 71 KB to 37 KB.
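The core of such a converter can be sketched as a `byte[]` to `byte[]` function: decode the snapshot with `javax.imageio.ImageIO`, crop the relevant region with `getSubimage`, convert it to grayscale, and re-encode it. This plain-Java sketch rests on assumptions: the `SnapshotTransformer` class is hypothetical, and the crop rectangle and output format are parameters you choose. Wiring it into Kafka Connect would mean calling it from your own implementation of Kafka Connect’s `Converter` interface, which is not shown here.

```java
import java.awt.Graphics2D;
import java.awt.Rectangle;
import java.awt.image.BufferedImage;
import java.io.ByteArrayInputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import javax.imageio.ImageIO;

/** Hypothetical core of an image-transforming converter: crop, grayscale, re-encode. */
final class SnapshotTransformer {
    private final Rectangle keep;     // region relevant for burglar detection
    private final String formatName;  // ImageIO format name, e.g. "png" or "jpg"

    SnapshotTransformer(Rectangle keep, String formatName) {
        this.keep = keep;
        this.formatName = formatName;
    }

    byte[] transform(byte[] encodedImage) throws IOException {
        BufferedImage source = ImageIO.read(new ByteArrayInputStream(encodedImage));
        if (source == null) {
            throw new IOException("unsupported or corrupt image");
        }
        // Crop: keep only the configured region (throws if it lies outside the image).
        BufferedImage cropped = source.getSubimage(keep.x, keep.y, keep.width, keep.height);
        // Grayscale: drawing into a TYPE_BYTE_GRAY image converts the colors.
        BufferedImage gray = new BufferedImage(keep.width, keep.height, BufferedImage.TYPE_BYTE_GRAY);
        Graphics2D g = gray.createGraphics();
        try {
            g.drawImage(cropped, 0, 0, null);
        } finally {
            g.dispose();
        }
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        if (!ImageIO.write(gray, formatName, out)) {
            throw new IOException("no ImageIO writer for format " + formatName);
        }
        return out.toByteArray();
    }
}
```

Calling it with, say, `new Rectangle(0, 0, 659, 476)` keeps the top-left 659 x 476 pixels; the actual rectangle depends on where the street appears in your camera’s view.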

Building a TensorFlow model to analyze your images

The next step toward an automated burglar alarm is to build your own TensorFlow model that detects burglars, which the Kafka Streams pipeline can then use to classify the images.

Unfortunately, this is a tedious and time-consuming process. First of all, you will need a lot of images from your webcam, and you will have to label them manually into at least two classes. I recommend the classes burglar and not burglar.

Both classes should contain as much realistic variety as possible. For example, it’s a good idea to include images of passing animals such as cats and dogs in the not burglar class. Similarly, the burglar class should contain a variety of potential burglars. To increase the variety, you can add people from the INRIA Person Dataset as “fake” burglars, as I have done in my TensorFlow demo-model project on GitHub.

Unless you have the time and (GPU) computing resources to build a neural network model from scratch, the fastest way to build a model from your labeled dataset is transfer learning. This process reuses a saved neural network that was previously trained on a large dataset and retrains only the last layer(s), preserving the earlier layers that were trained for feature detection. The advantage is that retraining a few layers is reasonably fast and yields strong results, provided the features learned by the reused model are generic enough for your classification task.
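Conceptually, retraining only the last layer means fitting a small classifier on top of feature vectors produced by the frozen base network. The plain-Java sketch below shows that final step for the two classes burglar and not burglar as a single sigmoid unit (logistic regression) trained with batch gradient descent on the cross-entropy loss. It assumes the feature vectors have already been extracted by the frozen layers; the `LastLayerTrainer` class, learning rate, and epoch count are illustrative, and real retraining, as in the demo-model project, is done with TensorFlow itself, typically with a softmax layer over all classes.

```java
/** Hypothetical final-layer trainer: logistic regression on features from a frozen network. */
final class LastLayerTrainer {
    private final double[] weights;
    private double bias;

    LastLayerTrainer(int featureCount) {
        this.weights = new double[featureCount];
    }

    /** Probability that the feature vector belongs to the "burglar" class. */
    double predict(double[] features) {
        double z = bias;
        for (int i = 0; i < weights.length; i++) {
            z += weights[i] * features[i];
        }
        return 1.0 / (1.0 + Math.exp(-z));
    }

    /**
     * Batch gradient descent on binary cross-entropy.
     * labels[i] is 1 for burglar and 0 for not burglar. Only the weights and bias change;
     * the frozen feature extractor that produced the features is untouched.
     */
    void train(double[][] features, int[] labels, double learningRate, int epochs) {
        int n = features.length;
        for (int epoch = 0; epoch < epochs; epoch++) {
            double[] gradW = new double[weights.length];
            double gradB = 0.0;
            for (int s = 0; s < n; s++) {
                // For sigmoid + cross-entropy, dLoss/dz is simply (prediction - label).
                double error = predict(features[s]) - labels[s];
                for (int i = 0; i < weights.length; i++) {
                    gradW[i] += error * features[s][i];
                }
                gradB += error;
            }
            for (int i = 0; i < weights.length; i++) {
                weights[i] -= learningRate * gradW[i] / n;
            }
            bias -= learningRate * gradB / n;
        }
    }
}
```

Because only the weights and bias of this last unit are trained, each epoch costs a few arithmetic operations per feature per image, which illustrates why retraining the final layer is so much cheaper than training the whole network.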

You can use the code in my sample demo-model project on GitHub to train your own model: fork the repository, replace the images with your own transformed webcam images, and follow the instructions in the README file. Afterwards, you should have a saved, frozen version of the neural network model, which TensorFlow for Java can import in your Kafka Streams application. You can then classify the images from your webcam as burglar or not burglar.
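For each image, the model typically returns one score per class. A minimal sketch of turning those scores into a decision, assuming a hypothetical `BurglarDecision` helper, label strings `burglar` and `not burglar` available in the same order as the model’s outputs, and an extra confidence threshold that you would tune on your own images:

```java
import java.util.List;

/** Hypothetical post-processing of the model's per-class scores. */
final class BurglarDecision {
    private final List<String> labels;  // same order as the model's output scores
    private final float minConfidence;  // below this, do not raise an alert

    BurglarDecision(List<String> labels, float minConfidence) {
        this.labels = List.copyOf(labels);
        this.minConfidence = minConfidence;
    }

    /** Returns the label with the highest score (argmax). */
    String bestLabel(float[] scores) {
        if (scores.length != labels.size()) {
            throw new IllegalArgumentException("expected one score per label");
        }
        int best = 0;
        for (int i = 1; i < scores.length; i++) {
            if (scores[i] > scores[best]) {
                best = i;
            }
        }
        return labels.get(best);
    }

    /** True only if "burglar" wins and its score also reaches the confidence threshold. */
    boolean isBurglar(float[] scores) {
        int index = labels.indexOf("burglar");
        return bestLabel(scores).equals("burglar") && scores[index] >= minConfidence;
    }
}
```

With the labels `not burglar` and `burglar` and a threshold of 0.8, scores of `{0.3, 0.7}` select `burglar` as the best label, but `isBurglar` still returns `false`, trading a few missed alerts for fewer false alarms.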

Receiving burglar alerts from Kafka

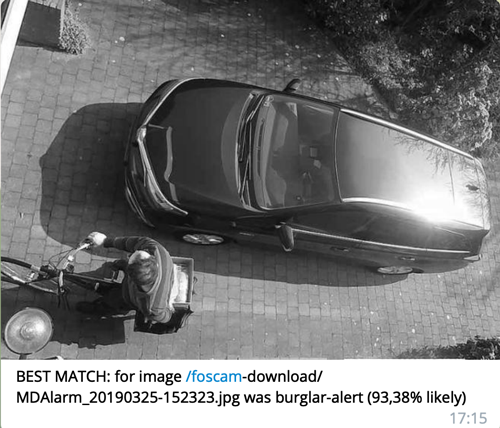

With the camera image processing complete, it’s time to configure the second connector of our pipeline: a sink connector that consumes all images classified as burglar and sends the corresponding burglar alert messages. I have used the Telegram sink connector for this, which can be easily installed from Confluent Hub.

Because the Telegram connector uses the Telegram Bot API to send messages, you need to create a bot with BotFather before you can use this connector. After that, configuring the connector is straightforward and well documented.
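Before configuring the connector, it can help to verify your bot token and chat ID by calling the Bot API directly. Bot API methods are invoked as HTTPS requests to `https://api.telegram.org/bot<token>/<method>`, and `sendMessage` takes, among others, the `chat_id` and `text` parameters. The sketch below only builds such a request with `java.net.http` (Java 11 or later); the `TelegramRequests` class is hypothetical, and the token and chat ID are placeholders for the values you get from BotFather and from your own chat with the bot.

```java
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpRequest;
import java.nio.charset.StandardCharsets;

/** Hypothetical helper that builds a Telegram Bot API sendMessage request. */
final class TelegramRequests {
    private TelegramRequests() {}

    static HttpRequest sendMessage(String botToken, String chatId, String text) {
        // Bot API methods are called as https://api.telegram.org/bot<token>/<methodName>.
        URI uri = URI.create("https://api.telegram.org/bot" + botToken + "/sendMessage");
        // Parameters are sent as an application/x-www-form-urlencoded POST body.
        String form = "chat_id=" + URLEncoder.encode(chatId, StandardCharsets.UTF_8)
                + "&text=" + URLEncoder.encode(text, StandardCharsets.UTF_8);
        return HttpRequest.newBuilder(uri)
                .header("Content-Type", "application/x-www-form-urlencoded")
                .POST(HttpRequest.BodyPublishers.ofString(form))
                .build();
    }
}
```

Sending the request with `HttpClient.newHttpClient().send(request, HttpResponse.BodyHandlers.ofString())` should return a JSON response whose `ok` field is `true` if the token and chat ID are valid.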

A nice feature of the Telegram connector is that it can send messages other than plain text. It can use Avro to process image messages, complete with captions. The domain classes for this were generated from an Avro schema file and are available on Maven Central.

An alert message from the Telegram connector for an image classified as burglar looks like this:

These alerts can also help you improve your TensorFlow model: add wrongly classified images to your training set and retrain the model.

Nice-to-have: Use your smart locks for additional filtering

A perfectly trained model should be able to distinguish you or your family from other people and send burglar alerts only for unfamiliar persons. Unfortunately, there’s no such thing as a perfect model of our world. Just imagine what will happen when you organize a barbecue or a birthday party: your guests will be identified as burglars, and you will receive a lot of burglar alerts.

In such a situation, one approach is to disarm the system when it knows that you are at home. I use a smart lock system for this, the Nuki Smart Lock, so that I do not receive any burglar alert messages while one or more smart locks are unlocked. The rationale is that a smart lock should only be unlocked when at least one family member is at home.

If the state of your smart lock system can be retrieved using a (REST) API, such as the Nuki Web API, you can use the REST source connector to poll your smart lock(s) every one to five minutes and use the last polled state to filter out any unwanted burglar alerts.
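The filtering rule itself is small: alerts are disabled as soon as at least one lock reports that it is unlocked; anything else, including an empty poll result or an unknown state, keeps them enabled so that a failed poll fails safe. A plain-Java sketch, assuming a hypothetical, simplified `LockState` enum onto which you would map whatever states your smart lock API actually returns (the real Nuki states are not modeled here):

```java
import java.util.Collection;

/** Hypothetical, simplified lock states; map your smart lock API's states onto these. */
enum LockState { LOCKED, UNLOCKED, UNKNOWN }

final class AlertPolicy {
    private AlertPolicy() {}

    /**
     * Alerts stay enabled unless at least one lock is known to be unlocked,
     * which is taken as a sign that a family member is at home.
     * UNKNOWN states (e.g. a lock that could not be reached) keep alerts enabled.
     */
    static boolean alertsEnabled(Collection<LockState> polledStates) {
        return polledStates.stream().noneMatch(state -> state == LockState.UNLOCKED);
    }
}
```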

Pipeline configuration

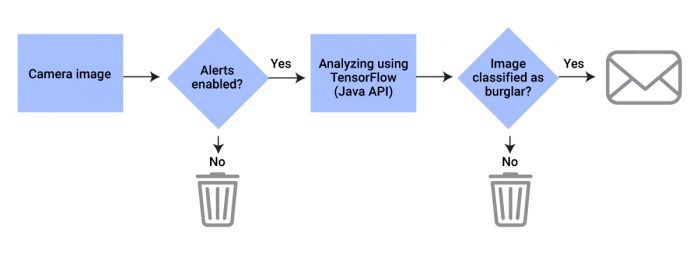

Now that all components are in place, we can put them together into an event streaming pipeline as shown in the flowchart above, where the alerts enabled state reflects the latest poll result of the smart lock state, or of any other additional filtering method you prefer.

Because Kafka Connect transparently handles publishing messages to Kafka and consuming them from it, this flow is simple to implement with Kafka Streams, with two exceptions: calling out to TensorFlow and maintaining the state of the alerts enabled flag.

Although the TensorFlow for Java API has not been officially declared stable, extensive documentation and clear examples can be found on the TensorFlow website. You can use these to get started and write your own code from scratch, or you can adapt the TensorFlow matcher code from my demo application.
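Whichever route you take, the image has to be turned into the input the model expects before calling it. A common convention for retrained image classifiers is a float tensor of shape [1, height, width, channels] with pixel values scaled to a fixed range, but the exact size, range, and channel count depend on your model, so treat them as assumptions here. The plain-Java sketch below, with a hypothetical `ModelInput` class, resizes an image, converts it to grayscale, and flattens it into a row-major float array with values scaled to [0, 1]; with TensorFlow for Java you would then wrap that array in a tensor of the matching shape.

```java
import java.awt.Graphics2D;
import java.awt.image.BufferedImage;
import java.awt.image.Raster;

/** Hypothetical preprocessing: image -> flat float array in [1, height, width, channels] order. */
final class ModelInput {
    private ModelInput() {}

    static float[] toFloats(BufferedImage image, int width, int height, int channels) {
        // Resize and convert to 8-bit grayscale in one draw.
        BufferedImage gray = new BufferedImage(width, height, BufferedImage.TYPE_BYTE_GRAY);
        Graphics2D g = gray.createGraphics();
        try {
            g.drawImage(image, 0, 0, width, height, null);
        } finally {
            g.dispose();
        }
        Raster raster = gray.getRaster();
        float[] values = new float[height * width * channels];
        int i = 0;
        for (int y = 0; y < height; y++) {
            for (int x = 0; x < width; x++) {
                // For TYPE_BYTE_GRAY, band 0 holds the 0..255 gray value.
                float v = raster.getSample(x, y, 0) / 255.0f;
                for (int c = 0; c < channels; c++) {
                    values[i++] = v; // replicate gray into every channel the model expects
                }
            }
        }
        return values;
    }
}
```

For a model retrained from an RGB base network, you would call it with `channels` set to 3, so the gray value is repeated in every color channel.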

I have found that the easiest way to determine and preserve the state of the alerts enabled flag is to use a GlobalKTable and make sure its value is updated after each poll of the smart lock REST API.
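A GlobalKTable keeps the latest value per key, replicated to every application instance, and stream records can look that value up. The plain-Java model below imitates this behavior without Kafka to show the gating logic: each poll result overwrites the flag, and each classified image raises an alert only if it was classified as burglar and the current flag allows alerts. The `AlertGate` class, the flag key, and the record type are hypothetical; in Kafka Streams itself, the lookup would be a KStream-GlobalKTable join followed by a filter.

```java
import java.util.Map;
import java.util.concurrent.ConcurrentHashMap;

/** Plain-Java imitation of a stream-to-GlobalKTable lookup; not the Kafka Streams API. */
final class AlertGate {
    /** Hypothetical classified image: camera ID plus the model's verdict. */
    static final class ClassifiedImage {
        final String cameraId;
        final boolean burglar;

        ClassifiedImage(String cameraId, boolean burglar) {
            this.cameraId = cameraId;
            this.burglar = burglar;
        }
    }

    // Latest value per key, like a GlobalKTable: only the most recent poll result counts.
    private final Map<String, Boolean> alertsEnabledTable = new ConcurrentHashMap<>();
    private final String flagKey;

    AlertGate(String flagKey) {
        this.flagKey = flagKey;
    }

    /** Called for every poll result of the smart lock API (an upsert into the table). */
    void onPollResult(boolean alertsEnabled) {
        alertsEnabledTable.put(flagKey, alertsEnabled);
    }

    /** Looks up the current flag when the record is processed; defaults to enabled. */
    boolean shouldAlert(ClassifiedImage image) {
        boolean enabled = alertsEnabledTable.getOrDefault(flagKey, Boolean.TRUE);
        return image.burglar && enabled;
    }
}
```

Defaulting to enabled before the first poll result arrives is a deliberate fail-safe choice in this sketch; with Kafka Streams, a `leftJoin` against the GlobalKTable passes `null` to the joiner for a missing key, which you would handle the same way.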

The complete source code of the demo application can be found on GitHub.

Wrap up

If you are willing to spend some time transforming and classifying images from your webcam and building a TensorFlow model from them, it’s perfectly feasible to build your own burglar detection system. You can use Kafka Connect to load and transform your images before sending them into Kafka, which lets you focus solely on the message processing pipeline and greatly simplifies your Kafka Streams application.

About Apache Kafka’s Streams API

If you have enjoyed this article, you might want to continue with the following resources to learn more about Apache Kafka’s Streams API:

- Get started with the Kafka Streams API to build your own real-time applications and microservices

- Walk through the Confluent tutorial for the Kafka Streams API with Docker and play with the Confluent demo applications
