Best Practices to Secure Your Apache Kafka Deployment

For many organizations, Apache Kafka® is the backbone and source of truth for data systems across the enterprise. Protecting your event streaming platform is critical for data security and often required by governing bodies. This blog post reviews five security categories and the essential features of Kafka and Confluent Platform that enable you to secure your event streaming platform.

Authentication

Authentication verifies the identity of users and applications connecting to Kafka and Confluent Platform. There are three main areas of focus related to authentication: Kafka brokers or Confluent Servers, Apache ZooKeeper™ servers, and HTTP-based services. HTTP services include Kafka Connect workers, ksqlDB servers, Confluent REST Proxy, Schema Registry, and Control Center. Authenticating all connections to these services is foundational to a secure platform.

Kafka brokers and Confluent Servers

Kafka brokers and Confluent Servers authenticate connections from clients and other brokers using Simple Authentication and Security Layer (SASL) or mutual TLS (mTLS).

SASL is a framework for authentication and provides a variety of authentication mechanisms. The SASL mechanisms currently supported include PLAIN, SCRAM, GSSAPI (Kerberos), and OAUTHBEARER (note: users of OAUTHBEARER must provide the code to acquire and verify credentials). Kafka brokers can enable multiple mechanisms simultaneously, and clients can choose which one to use for authentication. A Kafka listener is, roughly, the IP, port, and security protocol on which a broker accepts connections. SASL configuration differs slightly for each mechanism, but in general you enable the list of desired mechanisms and configure the broker listeners. The following is an excerpt of a GSSAPI configuration:

sasl.enabled.mechanisms=GSSAPI
sasl.mechanism.inter.broker.protocol=GSSAPI
listeners=SASL_SSL://kafka1:9093
advertised.listeners=SASL_SSL://localhost:9093

See the SASL documentation for full details.

When utilizing mTLS for authentication, SSL/TLS certificates provide basic trust relationships between services and clients. However, mTLS can’t be combined with a SASL mechanism on the same listener: once SASL is enabled for a given listener, SSL/TLS client authentication is disabled on it.

Of the supported authentication methods, mTLS, SASL SCRAM, and SASL GSSAPI are the current suggested authentication methods to use with Kafka brokers. With Confluent Server, you can also use the PLAIN mechanism when paired with the Confluent-provided LDAP callbacks and SSL/TLS. These methods are suggested as they lend themselves to better security practices for an enterprise production environment. It’s also highly recommended to always incorporate SSL/TLS to protect authentication credentials on the network. The Encryption section below will further expand on protecting data in transit.
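
On the client side, the matching settings might look like the following minimal sketch. It assumes a client that accepts librdkafka-style property names (as the confluent-kafka Python client does); the broker address, credentials, and CA path are placeholders, and `scram_client_config` is a hypothetical helper, not part of any library.

```python
# Illustrative client settings for a SASL/SCRAM listener protected by TLS.
# Property names follow librdkafka conventions; all values are placeholders.

def scram_client_config(bootstrap, username, password, ca_path):
    """Return a client configuration dict for SASL_SSL with SCRAM-SHA-512."""
    return {
        "bootstrap.servers": bootstrap,
        "security.protocol": "SASL_SSL",   # TLS protects the SASL exchange
        "sasl.mechanism": "SCRAM-SHA-512",
        "sasl.username": username,
        "sasl.password": password,
        "ssl.ca.location": ca_path,        # CA that signed the broker certificate
    }

conf = scram_client_config("kafka1:9093", "app-user", "app-secret", "/path/to/ca.pem")
# With the confluent-kafka package installed, this dict could be passed to
# confluent_kafka.Producer(conf) or confluent_kafka.Consumer(conf).
```

In a real deployment the password would come from a secret store rather than a literal in code.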

ZooKeeper servers

Kafka utilizes ZooKeeper for a variety of critical cluster management tasks. Preventing unauthorized modifications in ZooKeeper is paramount to maintaining a stable and secure Kafka cluster. The Kafka 2.5 release began shipping with ZooKeeper versions that support mTLS authentication. You can enable ZooKeeper mTLS authentication with or without SASL authentication. Prior to the 2.5 release, only the DIGEST-MD5 and GSSAPI SASL mechanisms were supported.

To enable mTLS authentication for ZooKeeper, you must configure both ZooKeeper and Kafka.

The following is a sample configuration fragment for the Kafka broker side, enabling mTLS on its connections to ZooKeeper:

zookeeper.connect=zk1:2182,zk2:2182,zk3:2182
zookeeper.ssl.client.enable=true
zookeeper.clientCnxnSocket=org.apache.zookeeper.ClientCnxnSocketNetty
zookeeper.ssl.keystore.location=<path-to-kafka-keystore>
zookeeper.ssl.keystore.password=<kafka-keystore-password>
zookeeper.ssl.truststore.location=<path-to-kafka-truststore>
zookeeper.ssl.truststore.password=<kafka-truststore-password>
zookeeper.set.acl=true

HTTP services

Kafka Connect, Confluent Schema Registry, REST Proxy, MQTT Proxy, ksqlDB, and Control Center all support authentication on their HTTP(S) listeners, REST APIs, and user interfaces. Both Kafka and Confluent Platform support basic authentication and mTLS. Confluent Platform additionally provides options for authenticating against LDAP via HTTP basic authentication.

Authorization

Whereas authentication verifies the identity of users and applications, authorization allows you to define the actions they are permitted to take once connected.

ACLs

Apache Kafka ships with an out-of-box authorization mechanism to establish ACL configurations to protect specific Kafka resources. Kafka ACLs are stored within ZooKeeper and are managed with the kafka-acls command. With Kafka ACLs, you can use the SimpleAclAuthorizer, which verifies the principal that was identified during authentication. Clients can be identified with the SCRAM username, Kerberos Principal, or client certificate. Confluent Platform also provides the legacy LDAP Authorizer and its replacement Confluent Server Authorizer, which can be used to integrate with existing LDAP infrastructure.

Kafka ACLs provide a granular approach to managing access to a single Kafka cluster, but do not extend to additional services like Kafka Connect, Confluent Schema Registry, Control Center, or ksqlDB. ZooKeeper-based ACLs are also cluster specific, so if you are managing multiple clusters, you will be required to manage the ACLs across all your clusters independently. Finally, ACLs may prove to be difficult to manage with a large set of principals (users, groups, or applications) and controls as there is no concept of roles.
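
To make the evaluation model concrete, here is a deliberately simplified sketch of how an ACL check can be reasoned about: a matching deny rule wins over any allow rule, and a request with no matching rule is refused. The `Acl` class and `is_authorized` function are hypothetical illustrations, and the sketch ignores wildcards, prefixed resource patterns, host restrictions, and super users.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Acl:
    principal: str    # e.g. "User:alice"
    permission: str   # "ALLOW" or "DENY"
    operation: str    # e.g. "READ", "WRITE"
    resource: str     # e.g. "Topic:orders"

def is_authorized(acls, principal, operation, resource):
    """Deny wins over allow; with no matching ACL, access is refused."""
    matching = [
        a for a in acls
        if a.principal == principal
        and a.operation == operation
        and a.resource == resource
    ]
    if any(a.permission == "DENY" for a in matching):
        return False
    return any(a.permission == "ALLOW" for a in matching)

acls = [
    Acl("User:alice", "ALLOW", "READ", "Topic:orders"),
    Acl("User:bob", "ALLOW", "WRITE", "Topic:orders"),
    Acl("User:bob", "DENY", "WRITE", "Topic:orders"),
]
```

Every principal needs its own entries here, which illustrates why a large set of principals becomes hard to manage without roles.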

Confluent Platform helps address some of these ACL management shortcomings with the inclusion of centralized ACLs. This allows you to manage the ACLs for multiple clusters from one centralized, authoritative cluster using the same applications and foundations as Role-Based Access Control.

Role-Based Access Control (RBAC)

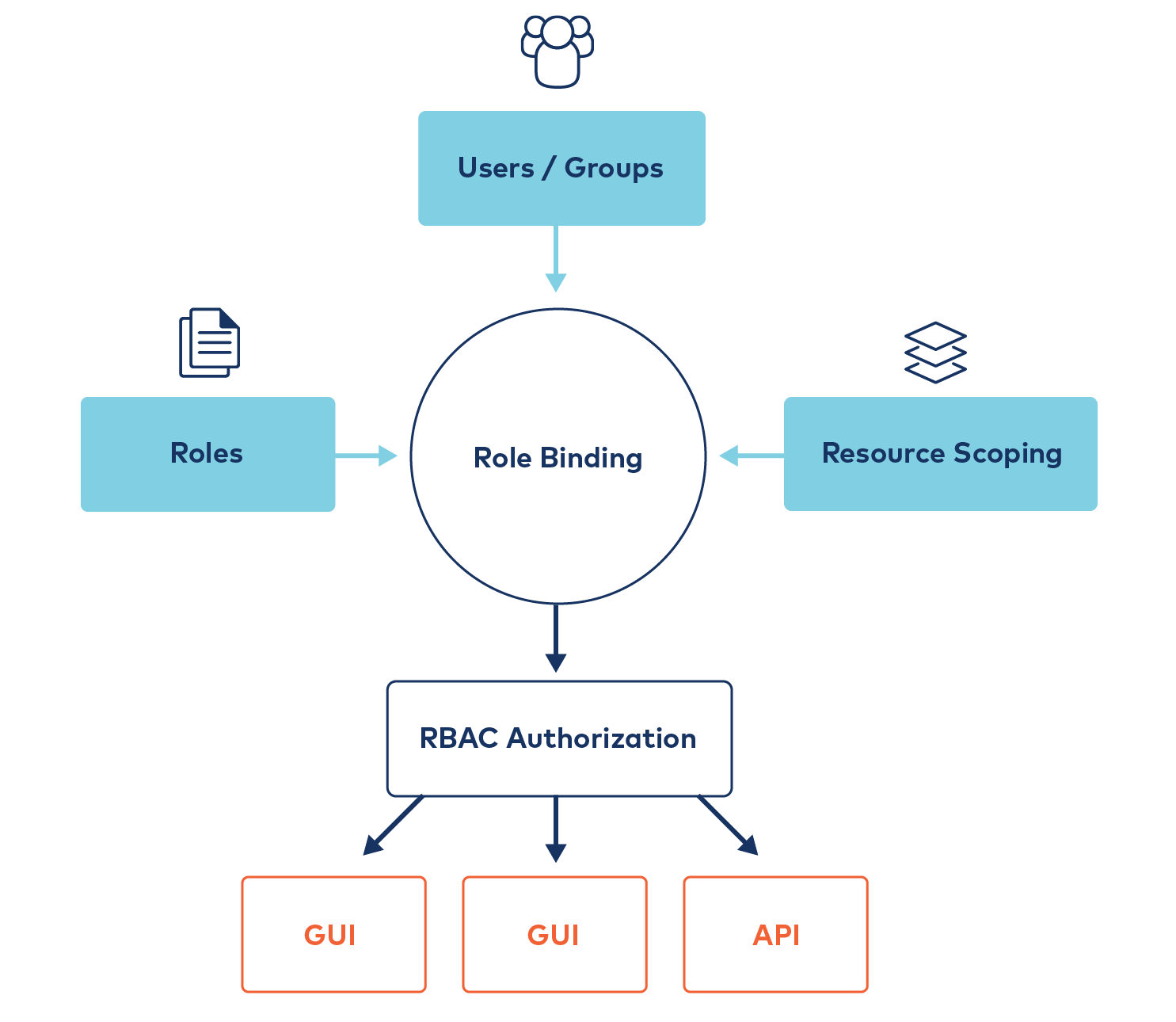

Confluent Platform provides Role-Based Access Control (RBAC), which addresses the gaps listed above. RBAC and ACLs perform similar functions but approach the problem differently and can be used independently or cooperatively. RBAC is powered by Confluent’s Metadata Service (MDS), which integrates with LDAP and acts as the central authority for authorization and authentication data. RBAC leverages role bindings to determine which users and groups can access specific resources and what actions can be performed within those resources (roles). RBAC is enabled on the Kafka cluster by way of Confluent Server, a fully compatible Kafka broker that integrates commercial security features like RBAC.

An administrator assigns predefined roles to users and groups on various resources; each user can be assigned multiple roles on each resource. A resource can be a Kafka cluster, a consumer group, or simply a topic. Administrators create role bindings that map together the principal, the roles, and the resources. Role bindings can be managed with Control Center or with the Confluent CLI using the iam rolebinding sub-command, for example:

confluent iam rolebinding create --principal User:ConnectAdmin --role ResourceOwner --resource Topic:connect-statuses --kafka-cluster-id abc123

RBAC also integrates tightly with many of the other applications in Confluent Platform to provide both cluster-level and resource-level bindings tailored to the specific application. For example, Kafka Connect with RBAC allows you to control connectors as a resource and manage access to a whole distributed Kafka Connect cluster with cluster-level role bindings. The RBAC Schema Registry integration also allows you to treat schema subjects as resources and a set of Schema Registries as a cluster to bind roles against.
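
The relationship between principals, roles, and resources can be sketched as a small data model. This is an illustration only: the role names are Confluent's predefined ones, but the operations attached to each role in `ROLE_OPERATIONS` are assumptions made for the example, and `RoleBinding` and `allowed` are hypothetical names rather than any Confluent API.

```python
from collections import namedtuple

RoleBinding = namedtuple("RoleBinding", "principal role resource")

# Assumed mapping from role name to permitted operations, for illustration.
# The real role definitions are richer; consult the RBAC documentation.
ROLE_OPERATIONS = {
    "DeveloperRead": {"Read", "Describe"},
    "DeveloperWrite": {"Write", "Describe"},
    "ResourceOwner": {"Read", "Write", "Describe", "Delete", "Alter"},
}

def allowed(bindings, principals, operation, resource):
    """True if any binding for the user or one of their groups grants the operation."""
    return any(
        b.principal in principals
        and b.resource == resource
        and operation in ROLE_OPERATIONS.get(b.role, set())
        for b in bindings
    )

bindings = [
    RoleBinding("User:ConnectAdmin", "ResourceOwner", "Topic:connect-statuses"),
    RoleBinding("Group:analysts", "DeveloperRead", "Topic:connect-statuses"),
]

# A user is checked together with the groups they belong to.
dana = {"User:dana", "Group:analysts"}
can_read = allowed(bindings, dana, "Read", "Topic:connect-statuses")
can_write = allowed(bindings, dana, "Write", "Topic:connect-statuses")
```

Because permissions hang off the role rather than the individual, adding a user to the analysts group is enough to grant read access without touching the bindings.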

Encryption

Protecting data in transit

Encrypting data in transit between brokers and applications mitigates risks for unintended interception of data across the network. Authentication and encryption are defined in Kafka per listener. By default, Kafka listeners use an unsecured PLAINTEXT protocol that is acceptable for development environments; however, all production environments should enable encryption. Encryption on brokers is configured using the listener configuration and managing truststores and keystores. This snippet shows two listeners utilizing SSL and SASL over SSL together on the broker:

listeners=SSL://:9093,SASL_SSL://:9094

The keys and trusted certificates are configured using the ssl configuration keys. For example:

ssl.truststore.location=/var/private/ssl/kafka.server.truststore.jks
ssl.truststore.password=test1234
ssl.keystore.location=/var/private/ssl/kafka.server.keystore.jks
ssl.keystore.password=test1234
ssl.key.password=test1234

SSL/TLS can also be used for client authentication by distributing trusted certificates across clients and services. See the Confluent Security Tutorial for more details on this approach.

To assist with migrating an unprotected cluster to a protected one, Kafka supports multiple listeners. Simply configure a new protected listener and migrate client applications to utilize it. Once all clients are migrated, you can decommission the unprotected listener. The security tutorial walks you through configuring new and existing clusters with SSL security.
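
During such a migration it can help to check which listeners are still unencrypted. The following is a minimal sketch with a hypothetical helper, `unencrypted_listeners`, that inspects a `listeners` value. It assumes listener names are the security protocol names themselves, that is, no custom `listener.security.protocol.map` is in use.

```python
ENCRYPTED_PROTOCOLS = {"SSL", "SASL_SSL"}

def unencrypted_listeners(listeners):
    """Return listener entries whose protocol is not TLS-protected."""
    flagged = []
    for entry in listeners.split(","):
        entry = entry.strip()
        if not entry:
            continue
        # The part before "://" is the listener name, assumed to be the protocol.
        protocol = entry.split("://", 1)[0].upper()
        if protocol not in ENCRYPTED_PROTOCOLS:
            flagged.append(entry)
    return flagged

during_migration = "PLAINTEXT://:9092,SSL://:9093,SASL_SSL://:9094"
after_migration = "SSL://:9093,SASL_SSL://:9094"
print(unencrypted_listeners(during_migration))  # ['PLAINTEXT://:9092']
print(unencrypted_listeners(after_migration))   # []
```

An empty result indicates that the unprotected listener has been decommissioned.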

Once brokers are secured, clients must be configured for security as well. Following a similar procedure as the brokers, configure encryption for all clients including ksqlDB, Kafka Connect, and Kafka Streams.

Secret Protection

Enabling encryption and security features requires the configuration of secret values, including passwords, keys, and hostnames. Kafka does not provide any method of protecting these values and users often resort to storing them in cleartext configuration files and source control systems. It’s common to see a configuration with a secret like this:

ssl.key.password=test1234

Common security practices dictate that secrets should not be stored as cleartext in configuration files and should be redacted from application logging output to prevent unintentional leakage of their values. Confluent Platform provides Secret Protection, which leverages envelope encryption, an industry-standard technique in which a secret is encrypted with a data key and that data key is in turn encrypted with a master key.

Applying this feature results in configuration files containing instructions for a ConfigProvider to obtain the secret values instead of the actual cleartext value:

ssl.key.password=${securepass:secrets.file:server.properties/ssl.key.password}
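
The placeholder follows the `${provider:path:key}` pattern. As a rough sketch of what resolution involves (not Kafka's actual implementation), the following parses such placeholders and asks a provider for the value. The in-memory `demo_provider` and its `SECRETS` table are hypothetical stand-ins for a real provider that would decrypt the secrets file.

```python
import re

# Matches ${provider:path:key} and the shorter ${provider:key} form.
PLACEHOLDER = re.compile(r"\$\{([^:}]+):(?:([^:}]*):)?([^}]+)\}")

def resolve(value, providers):
    """Replace each placeholder with the value returned by its provider."""
    def lookup(match):
        name, path, key = match.group(1), match.group(2), match.group(3)
        return providers[name](path, key)
    return PLACEHOLDER.sub(lookup, value)

# Hypothetical in-memory table standing in for an encrypted secrets file.
SECRETS = {("secrets.file", "server.properties/ssl.key.password"): "test1234"}

def demo_provider(path, key):
    return SECRETS[(path, key)]

raw = "${securepass:secrets.file:server.properties/ssl.key.password}"
resolved = resolve(raw, {"securepass": demo_provider})
```

The configuration file only ever contains `raw`; the cleartext exists in memory after resolution.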

This blog post on Secret Protection shows you how to leverage this feature.

Connect Secret Registry

When using Kafka Connect, you’ll be configuring connectors with a variety of secrets to securely connect with external systems. Similar to the previous section on secrets, you can use external configuration providers to read the secret values at runtime instead of configuring them in cleartext. Confluent supplies an extension to Kafka Connect, the Connect Secret Registry, which serves as a secret provider that runs in the same process as Kafka Connect workers. The registry provides a mechanism for managing secrets through a REST API and securely storing them in a Kafka topic. Once this feature is enabled, you can configure your connectors with the Secret Registry as the configuration provider, similar to a secure file described above:

ssl.key.password=${registry:my-connector:ssl.key.password}

Observability

Observing and reacting to security events and service metrics help to maintain a reliable and secure cluster. Service disruptions can be caused by misconfigurations, faulty applications, or bad actors using brute force techniques. Confluent Platform provides key features that enable operators to observe and alert on events occurring within the platform.

Audit logs

Kafka does not provide a mechanism for maintaining a record of authorization decisions out of the box. Operators are required to capture and parse unstructured logs from brokers in order to build observations on ACL decisions.

Confluent Platform complements Kafka with structured audit logs, which provide a way to capture, protect, and preserve authorization activity into Kafka topics. Specifically, audit logs record the runtime decisions of the permission checks that occur as users/applications attempt to take actions that are protected by ACLs and RBAC. Auditable events are recorded in chronological order, although it is possible for a consumer of audit log messages to fetch them out of order. These structured events provide operators the ability to survey actionable security events in real time on their cluster. Audit logs are structured using CloudEvents, a vendor-neutral specification that defines the schema of the event data, allowing for integration with a variety of industry tools.
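
As an illustration of consuming such events, the sketch below filters denied authorization checks from JSON records and sorts them by timestamp, since a consumer may receive them out of order. The field names (`data.authorizationInfo.granted`, `data.authenticationInfo.principal`, and so on) are assumptions about the event layout and should be checked against the audit log documentation; the sample records are hand-written, not real broker output.

```python
import json

def denied_events(lines):
    """Yield (time, principal, operation, resource) for denied authorization checks."""
    for line in lines:
        event = json.loads(line)
        data = event.get("data", {})
        authz = data.get("authorizationInfo", {})
        if authz.get("granted") is False:
            yield (
                event.get("time"),
                data.get("authenticationInfo", {}).get("principal"),
                authz.get("operation"),
                data.get("resourceName"),
            )

def make_event(event_id, time, principal, operation, granted):
    """Build a hand-written sample record with the assumed field layout."""
    return json.dumps({
        "specversion": "1.0", "id": event_id, "source": "example-broker",
        "type": "io.confluent.kafka.server/authorization", "time": time,
        "data": {
            "resourceName": "topic=orders",
            "authenticationInfo": {"principal": principal},
            "authorizationInfo": {"granted": granted, "operation": operation},
        },
    })

sample = [
    make_event("a", "2020-01-01T10:00:05Z", "User:bob", "Write", False),
    make_event("b", "2020-01-01T10:00:01Z", "User:alice", "Read", False),
    make_event("c", "2020-01-01T10:00:03Z", "User:carol", "Read", True),
]

# Timestamps in the same ISO 8601 UTC format sort correctly as strings.
denied = sorted(denied_events(sample))
```

A repeated run of denials for one principal is the kind of pattern an operator might alert on.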

Monitoring

Monitoring and tracking cluster metrics is key to detecting anomalies, which may include security breaches. With Kafka, you can configure JMX ports and implement a custom metrics and monitoring solution, or integrate with various third-party monitoring solutions.

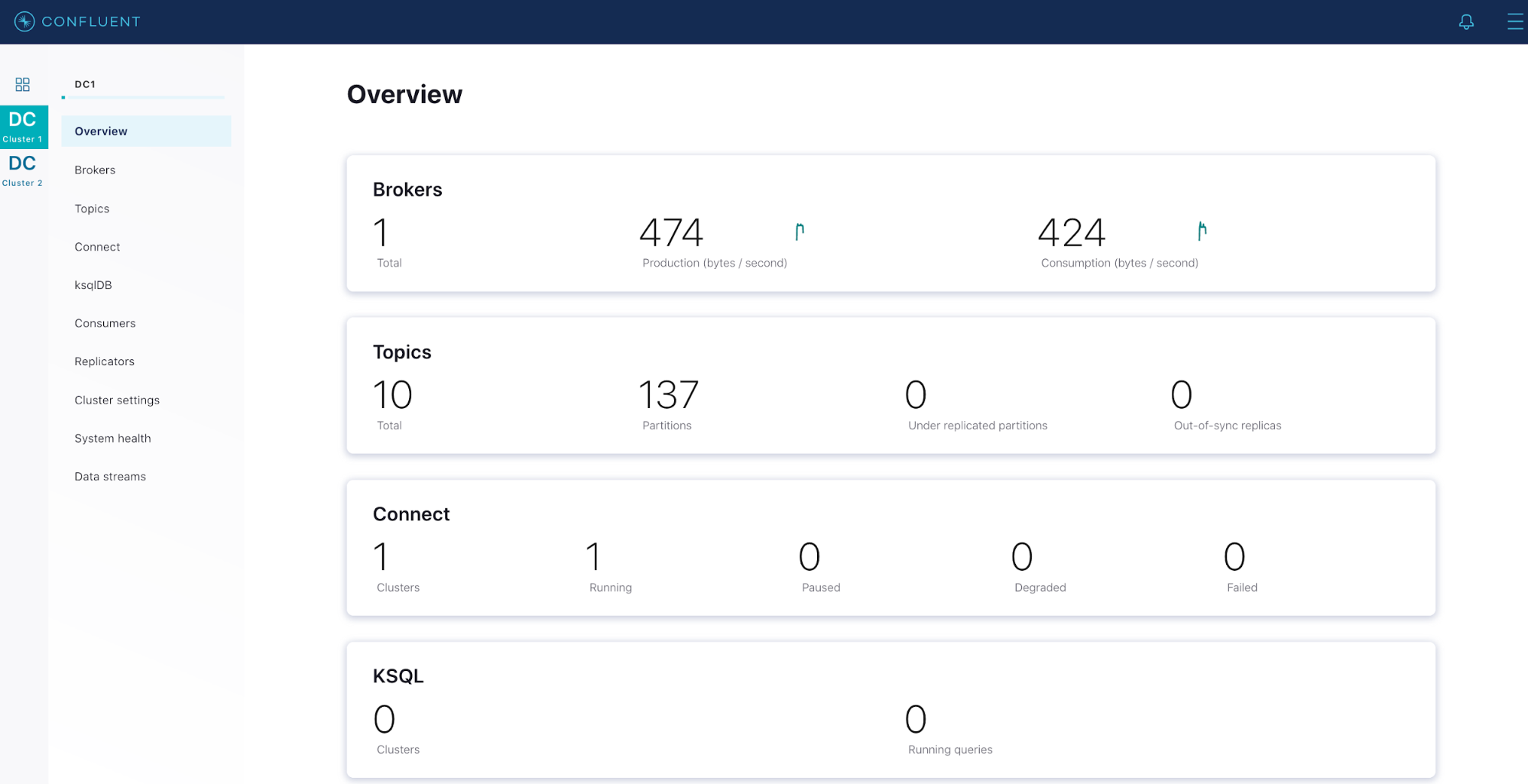

With Confluent Platform, monitoring functionality is tightly integrated across the components out of the box. The Confluent Metrics Reporter collects various metrics from Kafka brokers and produces the values to a Kafka topic. The Confluent Metrics Reporter can produce values to a different cluster than it is monitoring, reducing operational load on the production cluster. The Confluent Metrics Reporter integrates with Confluent Control Center to provide multi-cluster, dashboard-style views.

Confluent Control Center integrates with RBAC, which allows users to view their own permissions, manage other users’ role bindings when they have the permissions to do so, and maintain visibility over key operational metrics. This simplifies security management for the entire organization.

Confluent Monitoring Interceptors are client-side plugins that capture client performance statistics and forward them to a Kafka topic. Like the Confluent Metrics Reporter, the monitoring interceptors can forward these records to a different cluster to reduce operational load on the production cluster. Confluent Control Center integrates with the monitoring interceptors to provide a full view of client status along with the broker data.

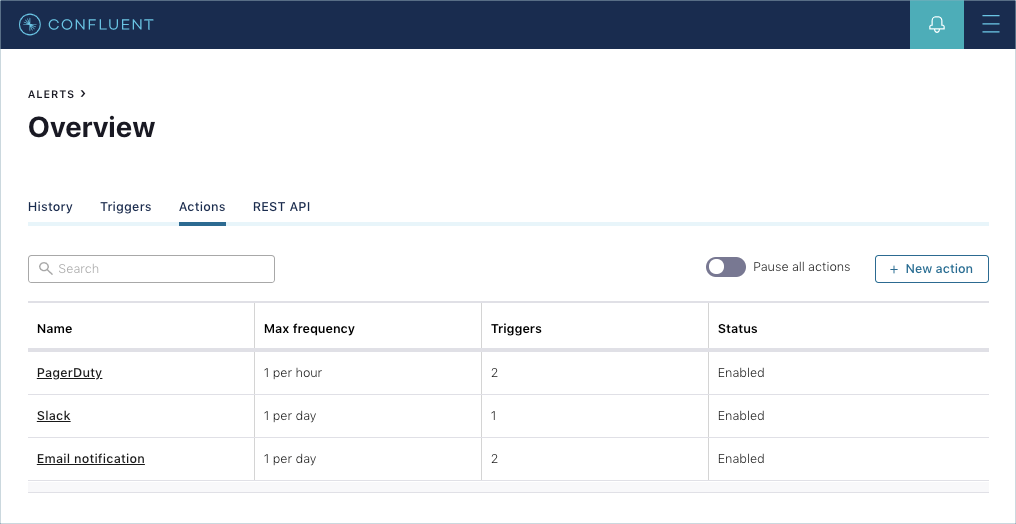

Alerts

Metrics and data are key to understanding the health and security of your cluster, but without action, they lose effectiveness. Confluent Control Center provides an alerts functionality, which is key for driving awareness of the state of your cluster. Alerts work by defining triggers and actions. Triggers are metrics paired with conditions that define when a trigger should be fired. Actions are associated with triggers and define what occurs when the trigger is fired. Actions can integrate with email, Slack, or the popular PagerDuty incident response platform.
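
The trigger and action relationship can be sketched generically. The `Trigger` class below is a hypothetical model of the concept and not Control Center's API; the metric name is illustrative, and a plain list stands in for an email, Slack, or PagerDuty integration.

```python
import operator
from dataclasses import dataclass, field
from typing import Callable, List

CONDITIONS = {">": operator.gt, "<": operator.lt, "==": operator.eq}

@dataclass
class Trigger:
    metric: str
    condition: str
    threshold: float
    actions: List[Callable[[str], None]] = field(default_factory=list)

    def evaluate(self, metrics):
        """Fire every action if the metric is present and meets the condition."""
        value = metrics.get(self.metric)
        if value is None or not CONDITIONS[self.condition](value, self.threshold):
            return False
        message = f"{self.metric} {self.condition} {self.threshold} (observed {value})"
        for action in self.actions:
            action(message)
        return True

sent = []  # stands in for a notification channel
trigger = Trigger("under_replicated_partitions", ">", 0, actions=[sent.append])
fired = trigger.evaluate({"under_replicated_partitions": 3})
```

Keeping triggers separate from actions lets one condition notify several channels, or several conditions share one channel.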

Data governance

Data governance includes organizational policies and controls to ensure highly available and quality data systems. Consuming applications within data systems depend on well-formatted data records and reliable connections. Confluent Platform extends Kafka to provide strong enhancements in this area.

Data quality

Confluent Schema Validation provides record-level data integrity by enforcing schema adherence for producers of data. With Schema Validation, producers are prevented from writing records that do not provide a valid schema identifier from a central Schema Registry. This strategy is commonly known as schema on write and, in addition to protecting data integrity, frees consumer applications from the burden of detecting schema violations at read time. To help build flexible systems, Schema Validation supports the concept of schema evolution, allowing consumers and producers to communicate across versions. With Confluent Server, Schema Validation is enabled in Confluent Control Center or on the command line by topic:

kafka-topics --create --bootstrap-server localhost:9092 --replication-factor 1 --partitions 1 --topic test-validation-true --config confluent.value.schema.validation=true
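
To illustrate what "a valid schema identifier" means at the byte level, the sketch below reads the schema ID from a record value framed in the Schema Registry wire format: a zero magic byte followed by a 4-byte big-endian schema ID. The broker's real check consults Schema Registry and the topic's subject naming strategy; here a plain set of IDs (`registered`, a hypothetical stand-in) plays that role.

```python
import struct

MAGIC_BYTE = 0

def schema_id(payload):
    """Return the schema ID from a framed record value, or None if unframed."""
    if len(payload) < 5 or payload[0] != MAGIC_BYTE:
        return None
    return struct.unpack(">I", payload[1:5])[0]

def passes_validation(payload, registered_ids):
    """Accept only records whose schema ID is known for the topic."""
    sid = schema_id(payload)
    return sid is not None and sid in registered_ids

registered = {42}  # hypothetical IDs registered for the topic's subject
good = b"\x00" + struct.pack(">I", 42) + b"serialized-body"
unknown = b"\x00" + struct.pack(">I", 7) + b"serialized-body"
unframed = b'{"no": "schema id"}'
```

In this model `good` is accepted, while `unknown` and `unframed` would be rejected at write time instead of surfacing as consumer errors later.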

With the Confluent Platform 5.5 release, Protobuf and JSON Schema formats are now supported to go along with the existing Apache Avro™ support. Schema Validation fully supports these new formats.

Quotas

Client applications may produce or consume data at such a high rate that they monopolize cluster resources, resulting in resource starvation for remaining clients. Kafka quotas protect resources for all clients by placing upper bounds on resource utilization for offending applications. When brokers detect quota violations, they attempt to slow down the clients by introducing an artificial delay to bring the offending client under the threshold. These configurations can protect the cluster from resource starvation by clients that are well known but experiencing spikes in traffic.
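
The size of that delay can be reasoned about with a simple model: if a client averaged a rate O over a measurement window W against a quota T, pausing it for W × (O − T) / T brings its average back down to T. The function below is a sketch of that arithmetic under those assumptions; the broker's actual calculation and window settings may differ in detail.

```python
def throttle_delay_seconds(observed_rate, quota, window_seconds):
    """Delay needed to bring the average rate over the window back to the quota."""
    if observed_rate <= quota:
        return 0.0
    return (observed_rate - quota) / quota * window_seconds

# 15 MB/s observed against a 10 MB/s quota over a 10-second window.
MB = 1024 * 1024
delay = throttle_delay_seconds(15 * MB, 10 * MB, 10)
print(delay)  # 5.0
```

The client sent 150 MB in 10 seconds; spreading that over 15 seconds yields the 10 MB/s target, hence the 5-second delay.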

Default quotas set at the broker level can also protect brokers from malicious clients trying denial-of-service style attacks. Default quotas can be configured in the brokers, such as the following, which sets the default producer and consumer rate to 10 MBps*:

quota.producer.default=10485760
quota.consumer.default=10485760

(*Update: Note that default quotas were removed in Kafka 3.0).

Custom quotas can be assigned per client ID or user while the cluster is operational. The following example sets the producer and consumer rate thresholds for client clientA to 1 KB per second (1024 bytes) and 2 KB per second (2048 bytes), respectively, along with a request percentage of 200:

bin/kafka-configs --bootstrap-server localhost:9092 --alter --add-config 'producer_byte_rate=1024,consumer_byte_rate=2048,request_percentage=200' --entity-name clientA --entity-type clients

Compliance

Many industries are subject to additional regulatory compliance requirements enforced by governmental bodies. Confluent Platform supports HIPAA and SSAE 18 SOC 2 compliance certifications; Confluent Cloud supports GDPR, ISO 27001, PCI level 2, and SOC 1, 2, and 3 standards; and Confluent Cloud Enterprise additionally supports HIPAA compliance.

Summary

Security is a continuous function, and this guide provides a framework for you to begin securing your event streaming platform. In addition to compliance-conforming software and enterprise support, Confluent offers Professional Services to review and assist with secure deployments and architectures. Finally, Confluent’s security documentation provides in-depth details for securing both Confluent Platform and Apache Kafka.
