Putting Apache Kafka to REST: Confluent REST Proxy 6.0

Confluent Platform 6.0 was released last year, bringing with it many exciting new features for Confluent REST Proxy. Before we dive into what was added, let’s first revisit what REST Proxy is, how it works, and the problems it is trying to solve.

Why Confluent REST Proxy?

Apache Kafka®, like any client-server application, offers access to its functionality through a well-defined set of APIs. These APIs are exposed via the Kafka wire protocol, an optimized but Kafka-specific binary protocol over TCP.

The best way to interact with the Apache Kafka APIs is to make use of a client library that works with the Kafka wire protocol. The Apache Kafka project only officially supports a client library for Java, but in addition to that, Confluent officially supports client libraries for C/C++, C#, Go, and Python. The community supports many additional ones as well. The Apache Kafka project also offers a set of CLI tools, which are just thin wrappers over the Java client library.

Unfortunately, some programming languages lack officially supported, production-grade client libraries for Kafka. Even among the libraries that do exist, feature coverage is uneven: a feature available in one client may be missing from another.

That’s where Confluent REST Proxy comes in. HTTP is a widely available, universally supported protocol. Confluent REST Proxy exposes Kafka APIs via HTTP so that these APIs are easy to use from anywhere.

New Improvements for Apache Kafka with Confluent REST Proxy

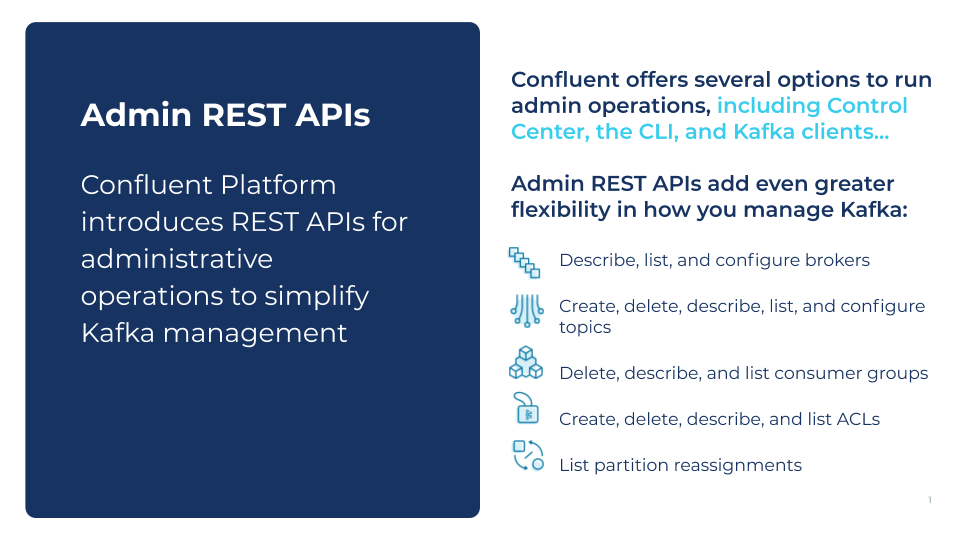

Confluent Platform 6.0 introduces two notable improvements in Confluent REST Proxy. The first relates to admin functionality. Historically, Confluent REST Proxy has been focused on produce/consume use cases. Starting with Confluent Platform 6.0, you will also be able to administer Apache Kafka via RESTful HTTP APIs for the following resources:

- ACLs

- Brokers

- Broker configs

- Clusters

- Cluster configs

- Topics

- Topic configs

- Partitions

- Replicas

- Consumer groups

- Consumers

The second big change has to do with operating Confluent REST Proxy itself. Prior to this release, REST Proxy could only be run as a separate server, connecting to Apache Kafka via the network. That meant managing a separate set of nodes/containers in your cluster just to be able to use the Apache Kafka APIs via HTTP.

In Confluent Platform 6.0, we integrated the REST Proxy APIs into Confluent Server itself. Now, you will be able to interact with Confluent Server via HTTP directly—no Confluent REST Proxy nodes required.

Simple setup and REST Proxy API integration (with examples)

Let’s go through some examples of what you can achieve with the new APIs. For the examples below, assume we have a Kafka cluster running locally, and one of the Confluent Server instances is listening for HTTP requests on port 8082. Note that this is the default development configuration. For production setup, see the documentation on production deployment.

We can ask for information about the Kafka cluster:

$ curl http://localhost:8082/kafka/v3/clusters | jq

{
  "kind": "KafkaClusterList",
  "metadata": {
    "self": "http://localhost:8082/kafka/v3/clusters",
    "next": null
  },
  "data": [
    {
      "kind": "KafkaCluster",
      "metadata": {
        "self": "http://localhost:8082/kafka/v3/clusters/TGYwIOuFTIiQivcapW5DyQ",
        "resource_name": "crn:///kafka=TGYwIOuFTIiQivcapW5DyQ"
      },
      "cluster_id": "TGYwIOuFTIiQivcapW5DyQ",
      "topics": {
        "related": "http://localhost:8082/kafka/v3/clusters/TGYwIOuFTIiQivcapW5DyQ/topics"
      }
      // a bunch more properties...
    }
  ]
}
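Because each response carries links to related resources, a client can follow those links instead of hard-coding paths. The Python sketch below shows one way this might look using only the standard library. `get_json` and `topic_links` are hypothetical helper names (not part of any Confluent SDK), and `SAMPLE` is a trimmed copy of the response above so the logic can be tried without a running cluster.

```python
import json
from urllib.request import urlopen


def get_json(url: str) -> dict:
    """GET a URL and decode its JSON body (requires a reachable server)."""
    with urlopen(url) as response:
        return json.load(response)


def topic_links(cluster_list: dict) -> dict:
    """Map each cluster_id to the link of its topics collection."""
    return {
        cluster["cluster_id"]: cluster["topics"]["related"]
        for cluster in cluster_list["data"]
    }


# Trimmed copy of the KafkaClusterList response shown above (assumption:
# only the fields used here are kept).
SAMPLE = {
    "kind": "KafkaClusterList",
    "data": [
        {
            "kind": "KafkaCluster",
            "cluster_id": "TGYwIOuFTIiQivcapW5DyQ",
            "topics": {
                "related": "http://localhost:8082/kafka/v3/clusters/TGYwIOuFTIiQivcapW5DyQ/topics"
            },
        }
    ],
}

for cluster_id, link in topic_links(SAMPLE).items():
    print(cluster_id, "->", link)
```

Against a live server, `topic_links(get_json("http://localhost:8082/kafka/v3/clusters"))` would build the same mapping from the real response.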

The response will include links to several related resources (which we have omitted here for clarity). One of them is a link to the topics resource. We can use that link to create a new topic:

$ curl -X POST -H 'Content-Type: application/json' \
    http://localhost:8082/kafka/v3/clusters/TGYwIOuFTIiQivcapW5DyQ/topics \
    --data '{ "topic_name": "topic-1", "partitions_count": 3, "replication_factor": 3, "configs": [ { "name": "compression.type", "value": "gzip" } ] }' | jq

{
  "kind": "KafkaTopic",
  "metadata": {
    "self": "http://localhost:8082/kafka/v3/clusters/TGYwIOuFTIiQivcapW5DyQ/topics/topic-1",
    "resource_name": "crn:///kafka=TGYwIOuFTIiQivcapW5DyQ/topic=topic-1"
  },
  "cluster_id": "TGYwIOuFTIiQivcapW5DyQ",
  "topic_name": "topic-1",
  "is_internal": false,
  "replication_factor": 3,
  "configs": {
    "related": "http://localhost:8082/kafka/v3/clusters/TGYwIOuFTIiQivcapW5DyQ/topics/topic-1/configs"
  }
  // a bunch more properties...
}
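The same request can be assembled from a program. This is a minimal sketch using Python's standard library, assuming the field names shown in the curl example. `create_topic_request` is a hypothetical helper; it only builds the request, so the payload can be inspected before anything is sent.

```python
import json
from urllib.request import Request


def create_topic_request(topics_url, topic_name, partitions_count,
                         replication_factor, configs=None):
    """Build (but do not send) a POST request that creates a topic.

    `configs` is a plain dict of overrides; it is converted to the
    name/value list format used in the curl example.
    """
    payload = {
        "topic_name": topic_name,
        "partitions_count": partitions_count,
        "replication_factor": replication_factor,
        "configs": [
            {"name": name, "value": value}
            for name, value in (configs or {}).items()
        ],
    }
    return Request(
        topics_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


request = create_topic_request(
    "http://localhost:8082/kafka/v3/clusters/TGYwIOuFTIiQivcapW5DyQ/topics",
    topic_name="topic-1",
    partitions_count=3,
    replication_factor=3,
    configs={"compression.type": "gzip"},
)
print(request.get_method(), request.full_url)
print(request.data.decode("utf-8"))
```

Passing `request` to `urllib.request.urlopen` would send it; a 4xx or 5xx response surfaces as `urllib.error.HTTPError`.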

Notice that we passed a configuration override when creating the topic: compression.type should now be gzip for the newly created topic. Let’s say we changed our mind, and now we want to revert that configuration to its default value. We can reset the config:

$ curl -X DELETE http://localhost:8082/kafka/v3/clusters/TGYwIOuFTIiQivcapW5DyQ/topics/topic-1/configs/compression.type
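In a client program, the reset is a DELETE on the config's own URL, which is the topic's configs collection URL plus the config name. A small sketch, assuming that URL layout from the curl command above; `reset_config_request` is a hypothetical helper name.

```python
from urllib.parse import quote
from urllib.request import Request


def reset_config_request(configs_url: str, config_name: str) -> Request:
    """Build (but do not send) a DELETE request for one config override."""
    # Percent-encode the name so it always stays a single path segment.
    url = configs_url.rstrip("/") + "/" + quote(config_name, safe="")
    return Request(url, method="DELETE")


request = reset_config_request(
    "http://localhost:8082/kafka/v3/clusters/TGYwIOuFTIiQivcapW5DyQ/topics/topic-1/configs",
    "compression.type",
)
print(request.get_method(), request.full_url)
```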

Finally, if our Kafka cluster has authorization configured, we can control access to our topic by managing its ACLs. For example, we can create an ACL that allows the user alice to read from the topic:

$ curl -X POST -H 'Content-Type: application/json' \
    http://localhost:8082/kafka/v3/clusters/TGYwIOuFTIiQivcapW5DyQ/acls \
    --data '{ "resource_type": "TOPIC", "resource_name": "topic-1", "pattern_type": "LITERAL", "principal": "User:alice", "host": "*", "operation": "READ", "permission": "ALLOW" }'
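When several principals need the same access, it can help to model the ACL body as a small value type and generate one request per principal. The sketch below assumes the JSON field names from the curl command above; `AclBinding`, `create_acl_request`, and `read_acls_for` are hypothetical names, and `User:bob` is an invented principal used only for illustration.

```python
import json
from dataclasses import asdict, dataclass
from urllib.request import Request


@dataclass(frozen=True)
class AclBinding:
    """Fields mirror the JSON body used in the curl example above."""
    resource_type: str
    resource_name: str
    pattern_type: str
    principal: str
    host: str
    operation: str
    permission: str


def create_acl_request(acls_url: str, acl: AclBinding) -> Request:
    """Build (but do not send) a POST request that creates one ACL."""
    return Request(
        acls_url,
        data=json.dumps(asdict(acl)).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def read_acls_for(topic: str, principals: list) -> list:
    """One ALLOW/READ binding per principal for a literal topic name."""
    return [
        AclBinding("TOPIC", topic, "LITERAL", principal, "*", "READ", "ALLOW")
        for principal in principals
    ]


ACLS_URL = "http://localhost:8082/kafka/v3/clusters/TGYwIOuFTIiQivcapW5DyQ/acls"
requests_to_send = [
    create_acl_request(ACLS_URL, acl)
    for acl in read_acls_for("topic-1", ["User:alice", "User:bob"])
]
for request in requests_to_send:
    print(request.get_method(), request.data.decode("utf-8"))
```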

Let’s take a look at our brokers now:

$ curl http://localhost:8082/kafka/v3/clusters/kmBtkQ_vSDG0bTlXDSI8Jw/brokers | jq

{
  "kind": "KafkaBrokerList",
  "metadata": {
    "self": "http://localhost:8082/kafka/v3/clusters/kmBtkQ_vSDG0bTlXDSI8Jw/brokers",
    "next": null
  },
  "data": [
    {
      "kind": "KafkaBroker",
      "metadata": {
        "self": "http://localhost:8082/kafka/v3/clusters/kmBtkQ_vSDG0bTlXDSI8Jw/brokers/1",
        "resource_name": "crn:///kafka=kmBtkQ_vSDG0bTlXDSI8Jw/broker=1"
      },
      "cluster_id": "kmBtkQ_vSDG0bTlXDSI8Jw",
      "broker_id": 1,
      "host": "kafka-1",
      "port": 9191,
      "rack": null,
      // a bunch more properties...
    },
    // other brokers...
  ]
}

You can manage ACLs and configs for brokers in much the same way you would for topics. If we want to take down one of our brokers for maintenance, as long as we have Self-Balancing Clusters configured, it’s as easy as deleting a broker:

$ curl -X DELETE http://localhost:8082/kafka/v3/clusters/kmBtkQ_vSDG0bTlXDSI8Jw/brokers/5
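Before removing a broker, it may be worth confirming which host the ID refers to. The following sketch assumes the `KafkaBrokerList` fields shown above; `broker_addresses` and `remove_broker_request` are hypothetical helpers, and `SAMPLE` is a trimmed, single-broker copy of that response.

```python
from urllib.request import Request


def broker_addresses(broker_list: dict) -> dict:
    """Map broker_id to "host:port" from a KafkaBrokerList response."""
    return {
        broker["broker_id"]: f'{broker["host"]}:{broker["port"]}'
        for broker in broker_list["data"]
    }


def remove_broker_request(brokers_url: str, broker_id: int) -> Request:
    """Build (but do not send) the DELETE request for one broker."""
    return Request(f'{brokers_url.rstrip("/")}/{broker_id}', method="DELETE")


# Trimmed copy of the broker list shown above (assumption: other
# brokers and properties are left out).
SAMPLE = {
    "kind": "KafkaBrokerList",
    "data": [
        {"kind": "KafkaBroker", "broker_id": 1, "host": "kafka-1", "port": 9191}
    ],
}

BROKERS_URL = "http://localhost:8082/kafka/v3/clusters/kmBtkQ_vSDG0bTlXDSI8Jw/brokers"

print(broker_addresses(SAMPLE))
request = remove_broker_request(BROKERS_URL, 5)
print(request.get_method(), request.full_url)
```

Keeping the request construction separate from sending leaves room for a confirmation step, which seems prudent for an operation that takes a broker out of the cluster.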

These APIs and many more are available now with Confluent Platform 6.0. Take a look at the full API documentation for more information. If you prefer, you can also check the OpenAPI spec for the new APIs, which you can use to auto-generate an SDK for your preferred programming language.

What’s next for Confluent REST Proxy

Currently, the Confluent REST Proxy Consumer API is stateful, which presents challenges when you are trying to use a fleet of Confluent REST Proxy servers behind a load balancer. Moving forward, we will continue to work on improving Confluent REST Proxy for consumption use cases.

In the coming releases, we hope to:

- Offer a stateless Consumer API

- Decouple key and value serializers/deserializers for the producer and consumer APIs

- Add support for Kafka headers

- Offer the producer/consumer HTTP API in Confluent Server

Interested in more?

Check out Tim Berglund’s video summary or podcast for an overview of Confluent Platform 6.0 and download Confluent Platform 6.0 to get started with REST Proxy today.
