Stream Processing

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method to connect database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Contributing to Apache Kafka®: How to Write a KIP

Learn how to contribute to open source Apache Kafka by writing Kafka Improvement Proposals (KIPs) that solve problems and add features! Read on for real examples.

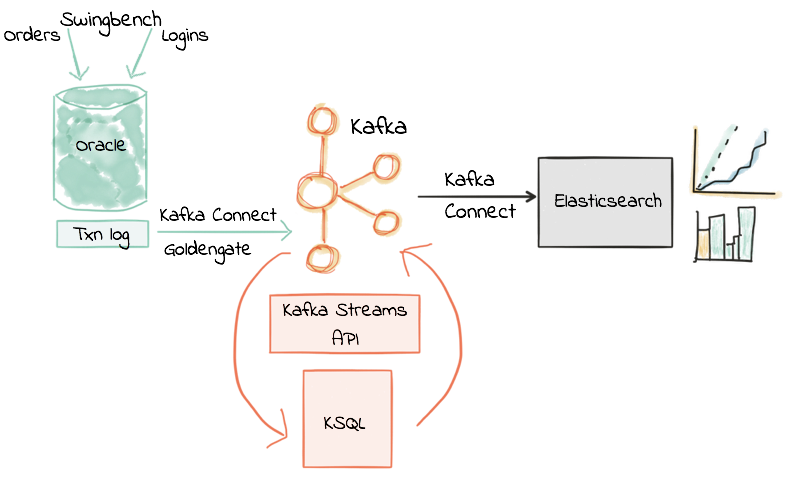

KSQL in Action: Real-Time Streaming ETL from Oracle Transactional Data

In this post I’m going to show what streaming ETL looks like in practice. We’re replacing batch extracts with event streams, and batch transformation with in-flight transformation. But first, a […]

KSQL January release: Streaming SQL for Apache Kafka

We are pleased to announce the release of KSQL 0.4, aka the January 2018 release of KSQL. As usual, this release is a mix of new features as well as […]

KSQL December Release: Streaming SQL for Apache Kafka

We are very excited to announce the December release of KSQL, the streaming SQL engine for Apache Kafka®! As we announced in the November release blog, we are releasing KSQL […]

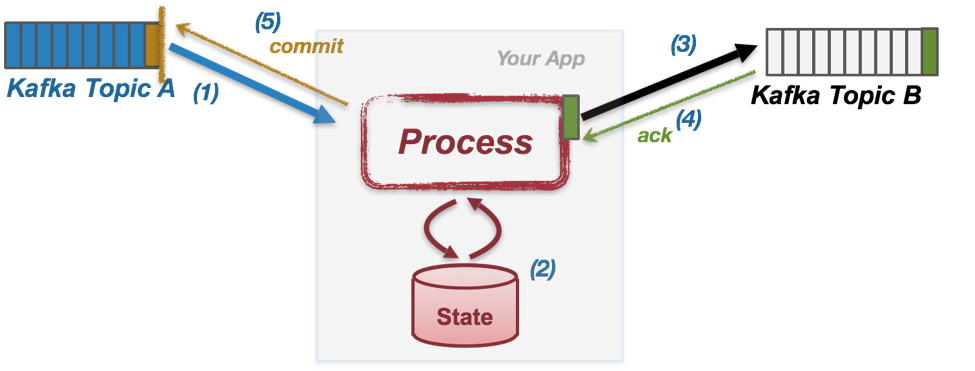

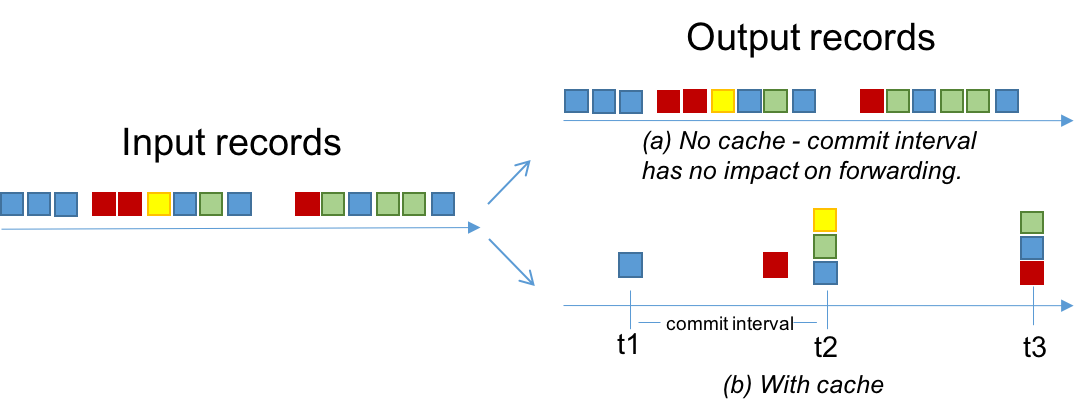

Enabling Exactly-Once in Kafka Streams

This blog post is the third and last in a series about the exactly-once semantics for Apache Kafka®. See Exactly-once Semantics are Possible: Here’s How Kafka Does it for the […]

November Update of KSQL Developer Preview Available

Update: KSQL is now available as a component of the Confluent Platform. Today we are releasing the first update to KSQL since its launch as a Developer Preview at Kafka […]

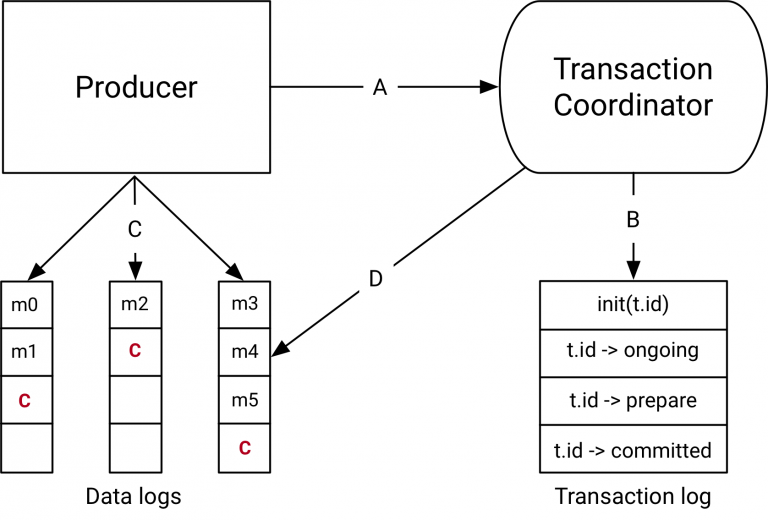

Transactions in Apache Kafka

Note: For the latest, check out Building Systems Using Transactions in Apache Kafka on Confluent Developer.

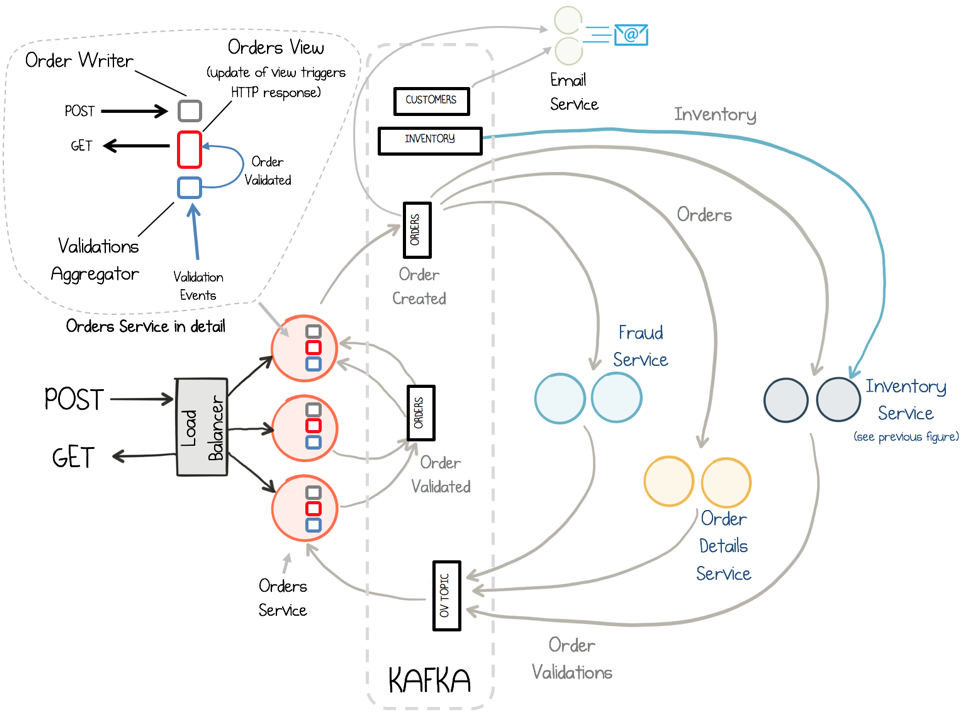

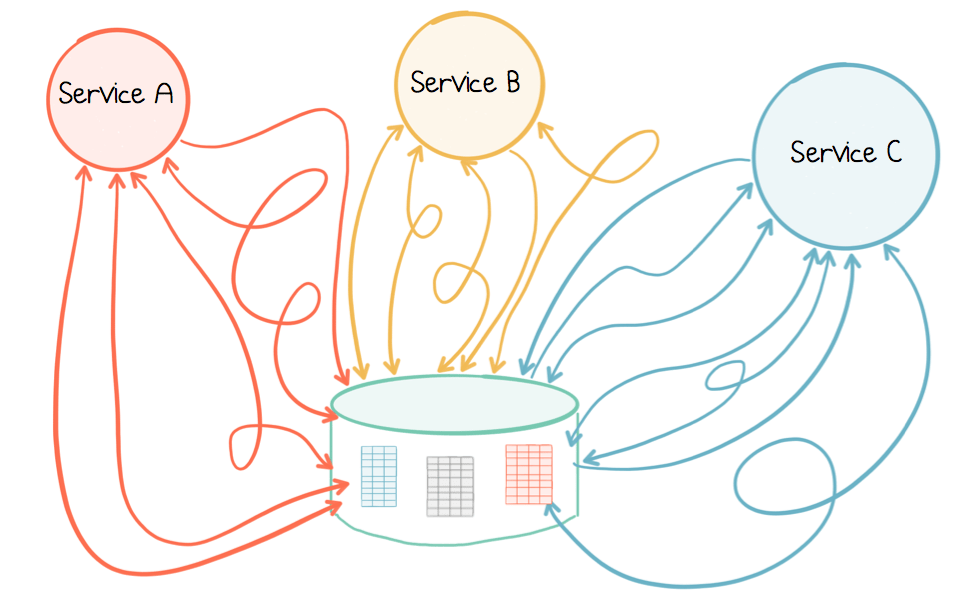

Building a Microservices Ecosystem with Kafka Streams and KSQL

Today, we invariably operate in ecosystems: groups of applications and services which together work towards some higher level business goal. When we make these systems event-driven they come with a […]

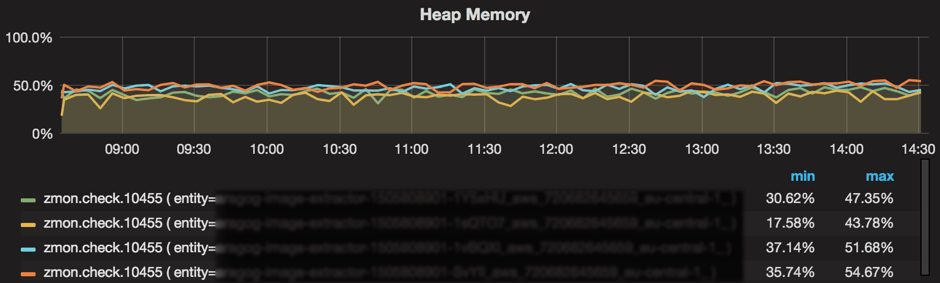

Running Kafka Streams Applications in AWS

This guest blog post is the second in a series about the use of Apache Kafka’s Streams API by Zalando, Europe’s largest online fashion retailer. See Ranking Websites in Real-time […]

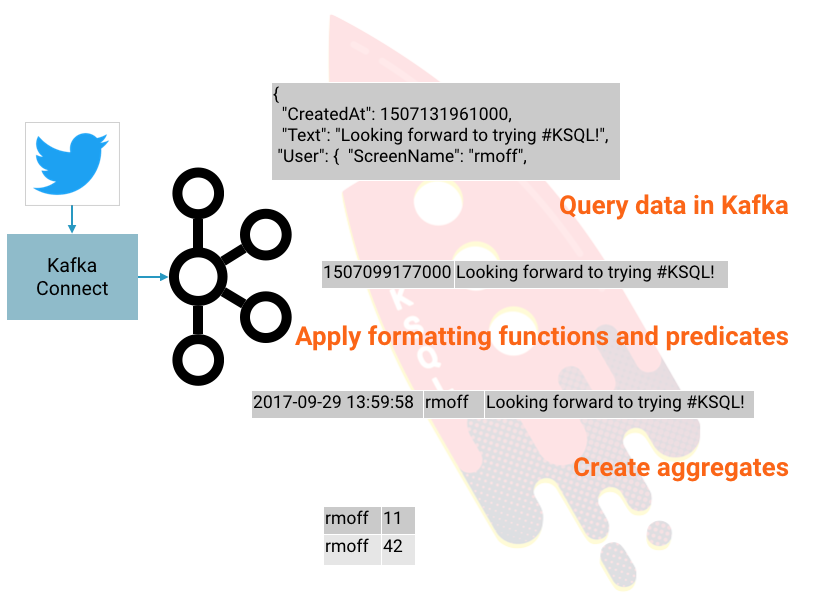

Getting Started Analyzing Twitter Data in Apache Kafka through KSQL

KSQL is the streaming SQL engine for Apache Kafka®. It lets you do sophisticated stream processing on Kafka topics, easily, using a simple and interactive SQL interface. In this short […]

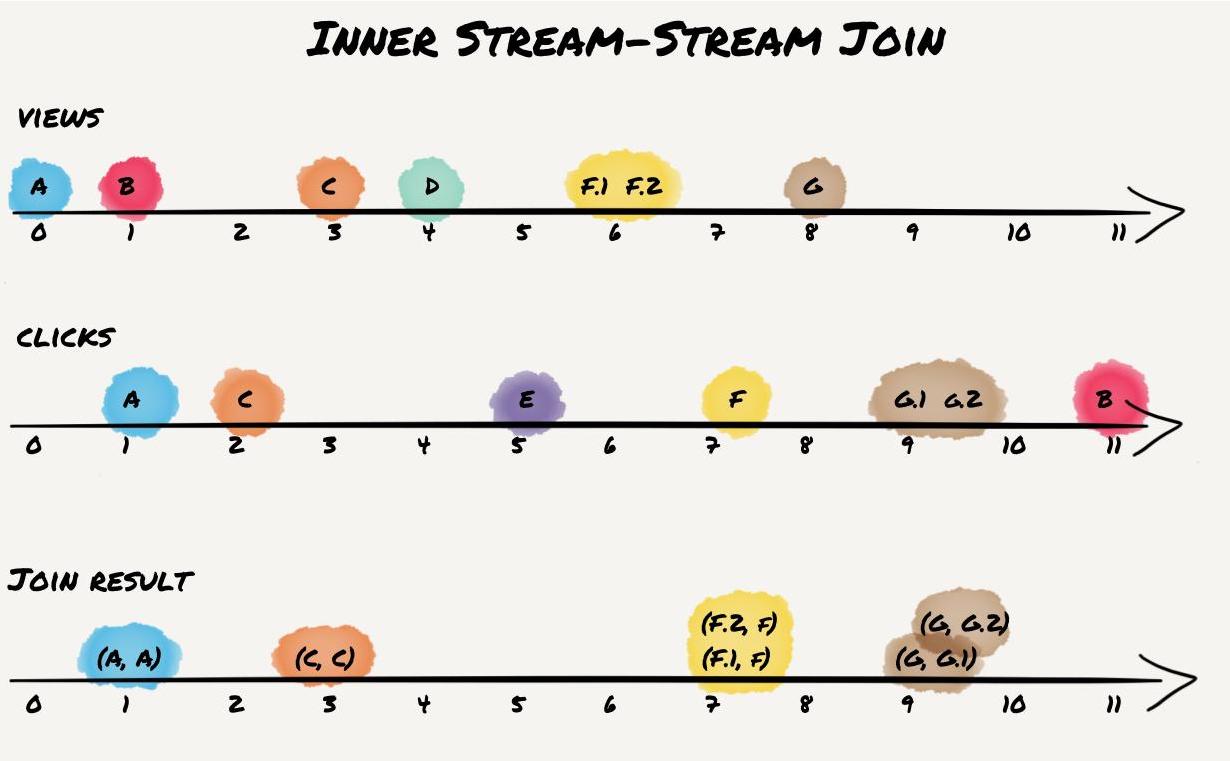

Crossing the Streams – Joins in Apache Kafka

This post was originally published at the Codecentric blog with a focus on “old” join semantics in Apache Kafka versions 0.10.0 and 0.10.1. Version 0.10.0 of the popular distributed streaming […]

Introducing KSQL: Streaming SQL for Apache Kafka

Note: ksqlDB is the successor to KSQL. Read the announcement to learn more. To get started with ksqlDB in Confluent Cloud, you can sign up for fully managed Apache Kafka […]

Leveraging the Power of a Database ‘Unbundled’

When you build microservices using Apache Kafka®, the log can be used as more than just a communication protocol. It can be used to store events: messaging that remembers. This […]

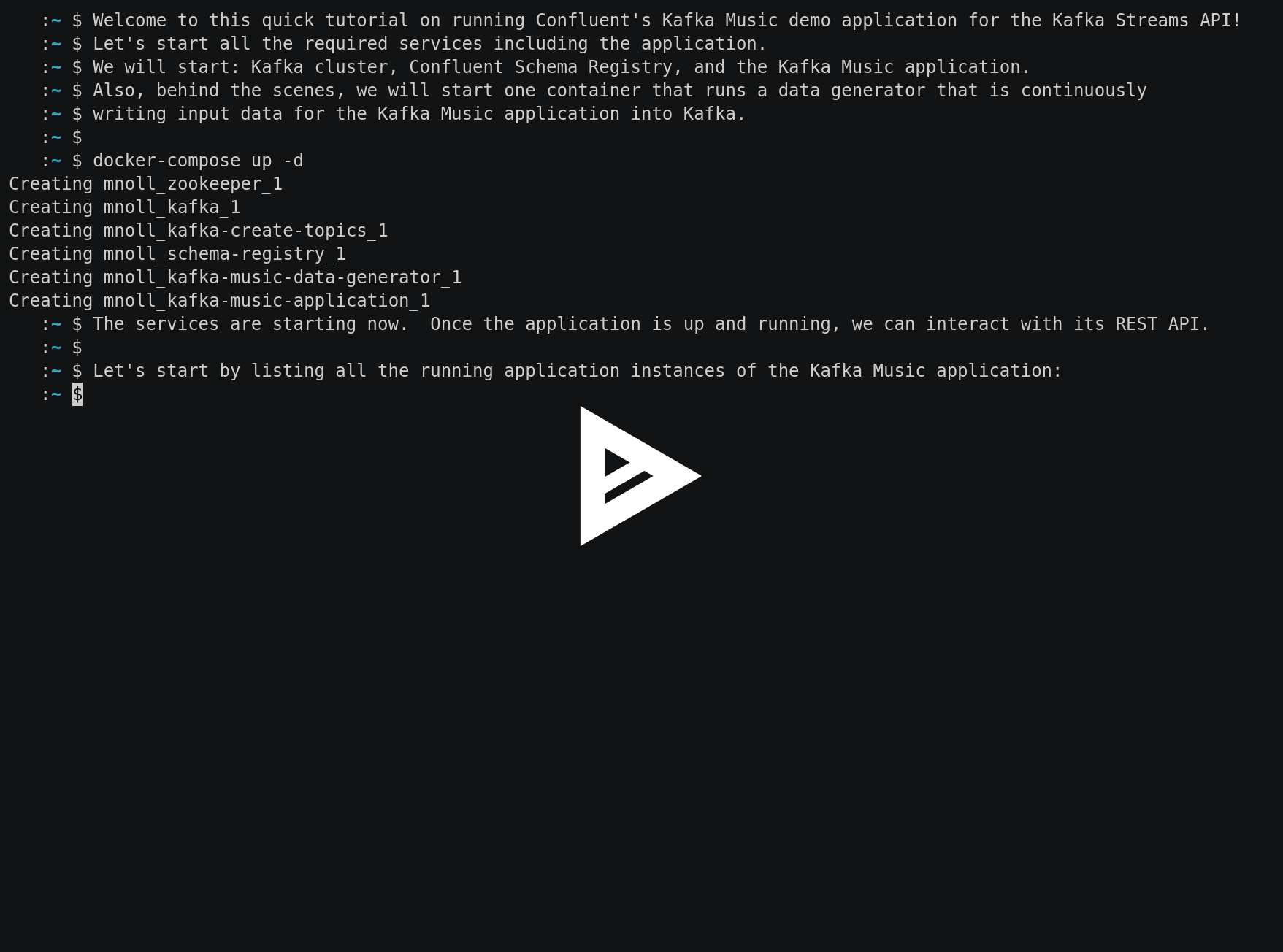

Getting Started with the Kafka Streams API using Confluent Docker Images

Introduction: What’s great about the Kafka Streams API is not just how fast your application can process data with it, but also how fast you can get up and running […]

Watermarks, Tables, Event Time, and the Dataflow Model

The Google Dataflow team has done a fantastic job in evangelizing their model of handling time for stream processing. Their key observation is that in most cases you can’t globally […]