Stream Processing

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method for connecting database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Contributing to Apache Kafka®: How to Write a KIP

Learn how to contribute to open source Apache Kafka by writing Kafka Improvement Proposals (KIPs) that solve problems and add features! Read on for real examples.

Stop Treating Your LLM Like a Database

GenAI thrives on real-time contextual data: In a modern system, LLMs should be designed to engage, synthesize, and contribute, rather than to simply serve as queryable data stores.

Generative AI Meets Data Streaming (Part III) – Scaling AI in Real Time: Data Streaming and Event-Driven Architecture

In this final part of the blog series, we bring it all together by exploring data streaming platforms (DSPs), event-driven architecture (EDA), and real-time data processing to scale AI-powered solutions across your organization.

Generative AI Meets Data Streaming (Part II) – Enhancing Generative AI: Adding Context with RAG and VectorDBs

In Part 2 of the series, we take things a step further by enhancing GenAI with the tools it needs to deliver smarter, more relevant responses. We introduce retrieval-augmented generation (RAG) and vector databases (VectorDBs), key technologies that provide LLMs with the context they need.

Generative AI Meets Data Streaming (Part I) – Data as the Engine: Building the AI Fundamentals

This blog series explores how technologies like generative AI, RAG, VectorDBs, and DSPs can work together to provide the freshest and most actionable data. Part 1 lays the foundation for understanding how data fuels AI, and why having the right data at the right time is essential for success.

Unify Streaming and Analytical Data with Apache Iceberg®, Confluent Tableflow, and Amazon SageMaker® Lakehouse

Tableflow can seamlessly make your Kafka operational data available to your AWS analytics ecosystem with minimal effort, leveraging the capabilities of Confluent Tableflow and Amazon SageMaker Lakehouse.

Shift Left: Headless Data Architecture, Part 2

Building a headless data architecture requires us to identify the work we’re already doing deep inside our data analytics plane, and shift it to the left. Learn the specifics in this blog.

Shift Left: Headless Data Architecture, Part 1

A headless data architecture means no longer having to coordinate multiple copies of data, and being free to use whatever processing or query engine is most suitable for the job. This blog details how it works.

Shift Left: Bad Data in Event Streams, Part 2

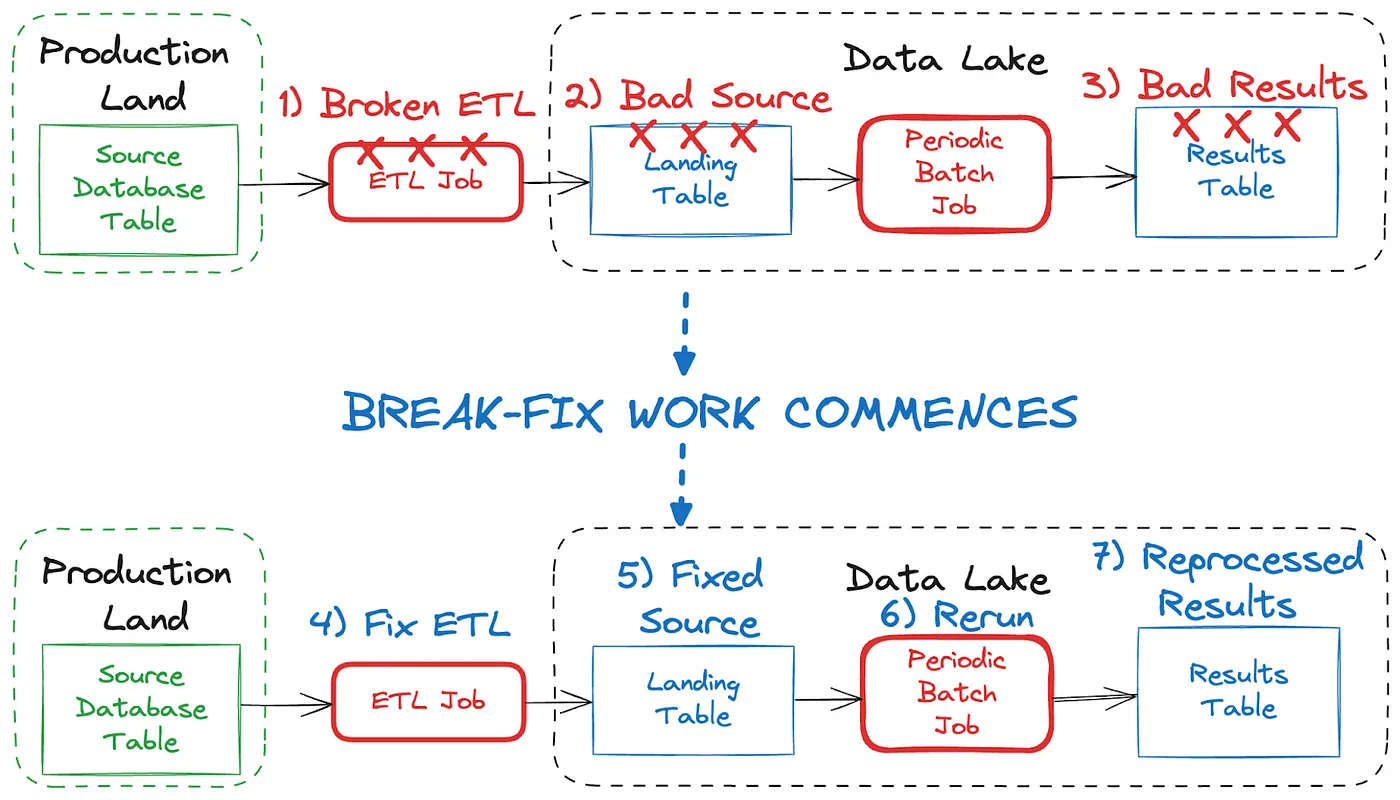

Event design plays a big role in your ability to fix bad data in your streams. But if you’ve wrecked a stream with bad data (i.e., it’s unavoidably contaminated), you'll need to employ a "rewind, rebuild, and retry" strategy.

Shift Left: Bad Data in Event Streams, Part 1

At a high level, bad data is data that doesn’t conform to what is expected, and it can cause serious issues and outages for all downstream data users. This blog looks at how bad data may come to be, and how we can deal with it when it comes to event streams.

Introducing Versioned State Store in Kafka Streams

Versioned key-value state stores, introduced to Kafka Streams in 3.5, enhance stateful processing capabilities by allowing users to store multiple record versions per key, rather than only the single latest version per key as is the case for existing key-value stores today...
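
To make the idea of "multiple record versions per key" concrete, here is a minimal in-memory sketch. It is not the Kafka Streams API; the class name `VersionedStore` and its `put`/`get` methods are hypothetical, and it assumes each record carries a numeric timestamp.

```python
import bisect
from collections import defaultdict


class VersionedStore:
    """Toy in-memory store keeping every (timestamp, value) version per key.

    Illustrative only: names and behavior are assumptions, not Kafka Streams.
    """

    def __init__(self):
        # key -> parallel lists, both kept sorted by timestamp
        self._timestamps = defaultdict(list)
        self._values = defaultdict(list)

    def put(self, key, value, timestamp):
        """Insert a version; out-of-order timestamps land in sorted position."""
        idx = bisect.bisect_right(self._timestamps[key], timestamp)
        self._timestamps[key].insert(idx, timestamp)
        self._values[key].insert(idx, value)

    def get(self, key, as_of=None):
        """Return the latest value, or the value valid at `as_of` if given."""
        timestamps = self._timestamps.get(key)
        if not timestamps:
            return None
        if as_of is None:
            return self._values[key][-1]
        idx = bisect.bisect_right(timestamps, as_of)
        return self._values[key][idx - 1] if idx > 0 else None


store = VersionedStore()
store.put("a", "v1", 10)
store.put("a", "v3", 30)
store.put("a", "v2", 20)  # arrives late, still slots in by timestamp
```

A plain key-value store would only answer `store.get("a")`; the versioned variant can also answer a timestamped lookup such as `store.get("a", as_of=25)`, which is what makes out-of-order records and point-in-time joins easier to handle.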

Delivery Guarantees and the Ethics of Teleportation

This blog post discusses the Two Generals' Problem, how it impacts message delivery guarantees, and how those guarantees would affect a futuristic technology such as teleportation.

Stream Processing in Your Native Tongue

Stream processing has long forced an uncomfortable trade-off: choose a framework for its power, or choose one that works in your preferred programming language. GraalVM may offer a way to avoid having to choose.

Combining CDC Transactional Messages Using Kafka Streams

An approach to combining Change Data Capture (CDC) messages from a relational database into transactional messages using Kafka Streams.
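
As a rough illustration of the grouping idea, the sketch below combines row-level change messages into one message per source transaction. It is plain Python rather than Kafka Streams, and the field names (`txn_id`, `txn_total`, `table`, `op`, `row`) are assumptions made for the example, not the format used in the post.

```python
from collections import defaultdict


def combine_by_transaction(cdc_messages):
    """Group row-level CDC messages into one message per completed transaction.

    Each input is a dict with hypothetical fields:
      txn_id    - identifier of the source database transaction
      txn_total - number of row changes in that transaction
      table, op, row - the change itself
    Yields a combined message once all changes of a transaction have arrived.
    """
    pending = defaultdict(list)  # txn_id -> changes seen so far
    for msg in cdc_messages:
        changes = pending[msg["txn_id"]]
        changes.append({"table": msg["table"], "op": msg["op"], "row": msg["row"]})
        if len(changes) == msg["txn_total"]:
            # Transaction is complete: emit it and drop the buffered state.
            yield {"txn_id": msg["txn_id"], "changes": pending.pop(msg["txn_id"])}


messages = [
    {"txn_id": 1, "txn_total": 2, "table": "orders", "op": "insert", "row": {"id": 7}},
    {"txn_id": 2, "txn_total": 1, "table": "users", "op": "update", "row": {"id": 3}},
    {"txn_id": 1, "txn_total": 2, "table": "order_items", "op": "insert", "row": {"order_id": 7}},
]
combined = list(combine_by_transaction(messages))
```

The sketch assumes every message reports how many changes its transaction contains; without that count (or an explicit end-of-transaction marker), a consumer cannot tell when a transaction is complete.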

Succeeding with Change Data Capture

Change data capture (CDC) converts all the changes that occur inside your database into events and publishes them to an event stream. You can then use these events to power analytics, drive operational use cases, hydrate databases, and more. The pattern is enjoying wider adoption than ever before.