Technology

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method to connect database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Contributing to Apache Kafka®: How to Write a KIP

Learn how to contribute to open source Apache Kafka by writing Kafka Improvement Proposals (KIPs) that solve problems and add features! Read on for real examples.

Confluent Cloud Is Now 100% KRaft and You Should Be Too

Confluent has helped thousands migrate to KRaft, Kafka’s new consensus protocol that replaces ZooKeeper for metadata management. Kafka users can migrate to KRaft quickly and with ease by using automated tools like Confluent for Kubernetes (CFK) and Ansible Playbooks.

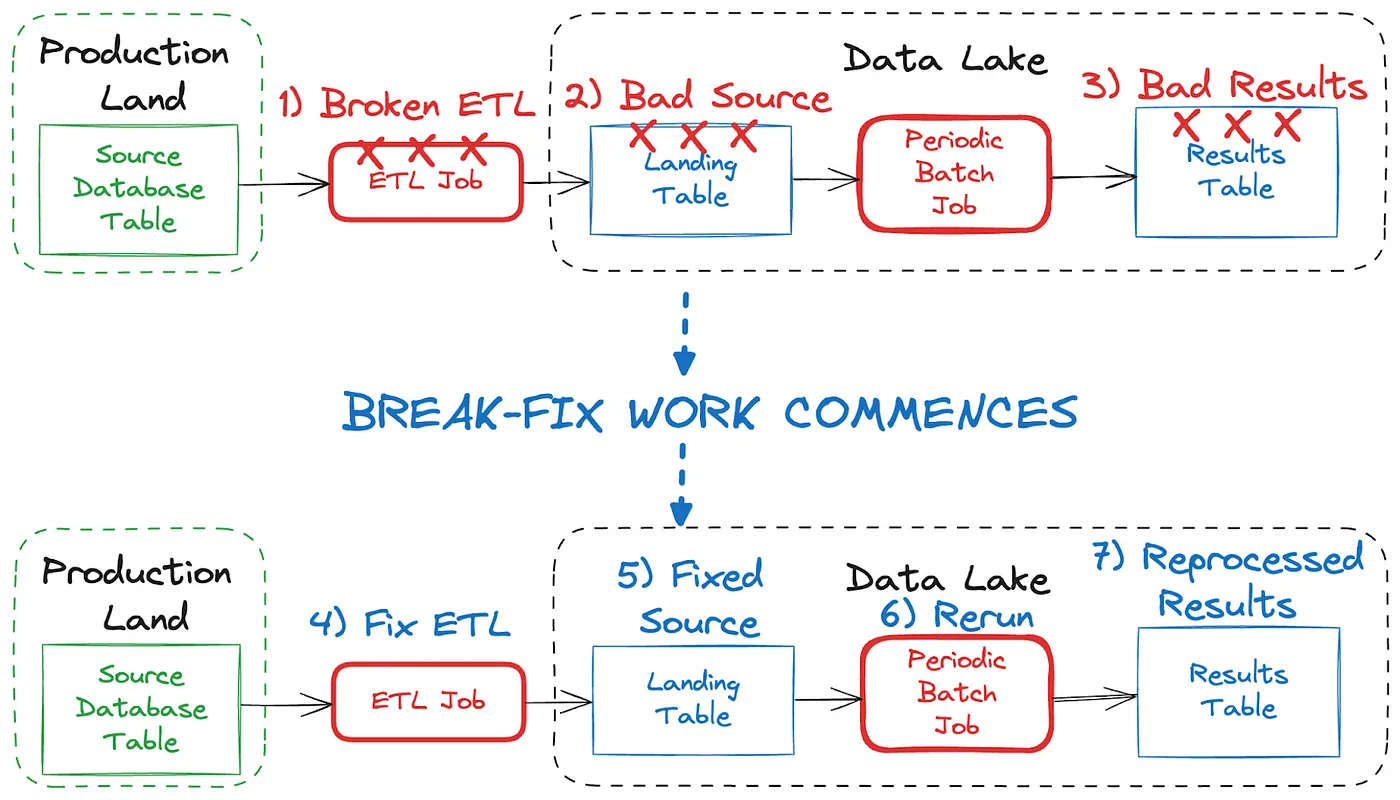

Shift Left: Bad Data in Event Streams, Part 2

Event design plays a big role in your ability to fix bad data in your streams. But if you’ve wrecked a stream with bad data (i.e., it’s unavoidably contaminated), you’ll need to employ a "rewind, rebuild, and retry" strategy.

Spring Into Confluent Cloud with Kotlin – Part 2: Kafka Streams

In this edition, we’ll have a look at creating Kafka Streams topologies—exploring the dependency injection and design principles with Spring Framework, while also highlighting some syntactic sugar of Kotlin that makes for more concise and legible topologies.

Enhancing Security with IAM Roles in Confluent Managed Connectors

This blog post covers Confluent’s newest enhancement to its fully managed connectors: the ability to assume IAM roles.

Shift Left: Bad Data in Event Streams, Part 1

At a high level, bad data is data that doesn’t conform to what is expected, and it can cause serious issues and outages for all downstream data users. This blog looks at how bad data may come to be, and how to deal with it in event streams.

Your Guide to the Apache Flink® Table API: An In-Depth Exploration

The blog post provides a comprehensive overview of the Flink Table API, demonstrating how it enables developers to express complex data processing logic using Java or Python in a user-friendly manner. It also includes practical examples and guidance, making it a valuable resource for anyone...

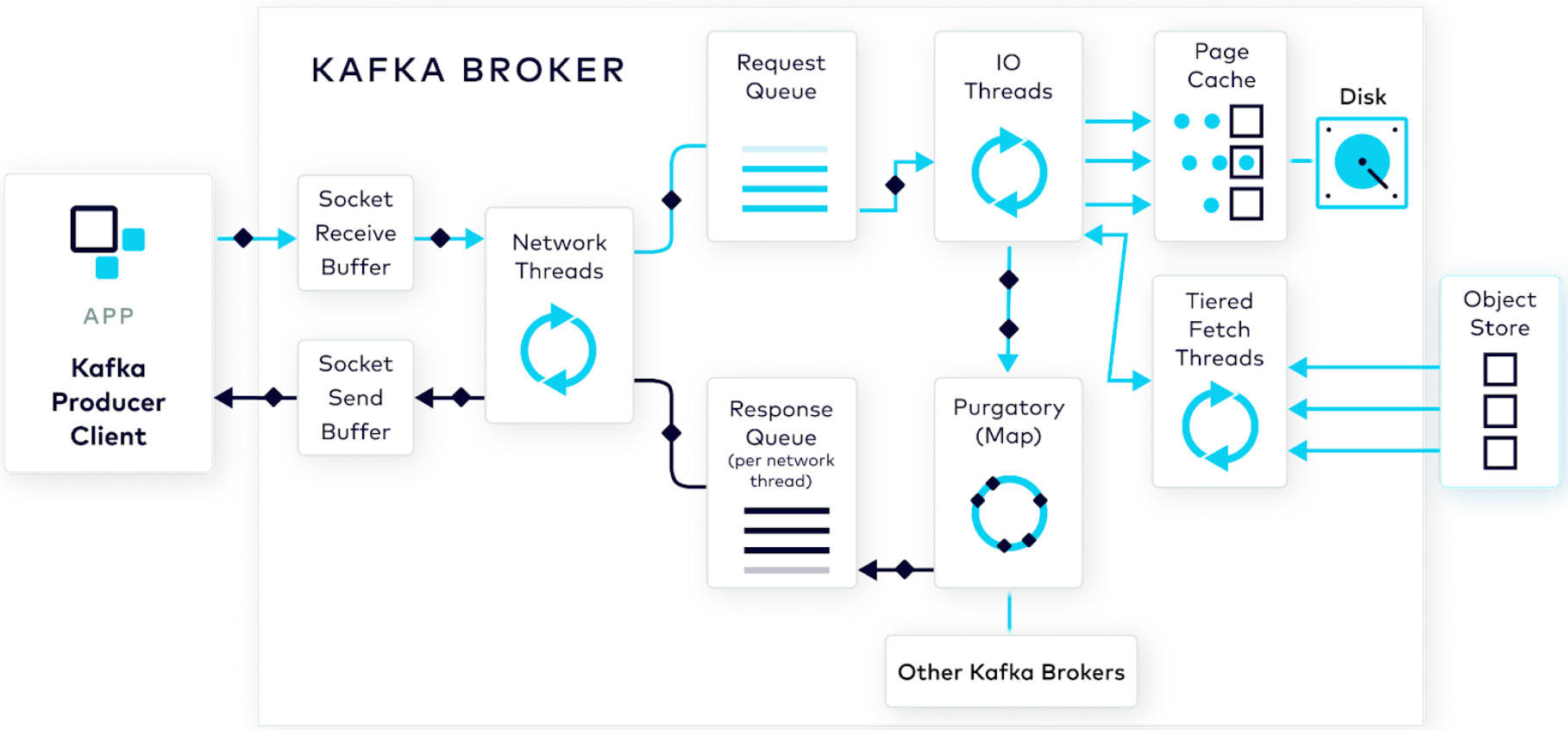

Handling the Producer Request: Kafka Producer and Consumer Internals, Part 2

In this post, the second in the Kafka Producer and Consumer Internals series, we follow our brave hero, a well-formed produce request, on its way to being processed by the broker and having its data stored on the cluster.

Convening With Data Streaming Engineers at Current 2024

We covered so much at Current 2024, from the 138 breakout sessions, lightning talks, and meetups on the expo floor to what happened on the main stage. If you heard any snippets or saw quotes from the Day 2 keynote, then you already know what I told the room: We are all data streaming engineers now.

Build, Manage, and Monitor Data Streaming Applications, All Within Your Favorite IDE

We’re excited to announce Early Access for Confluent for VS Code. This Visual Studio Code integration streamlines workflows, accelerates development, and enhances real-time data processing, all in a unified environment. This post shows how to get started, and also lists opportunities to get involved.

New in Confluent Cloud: Making Serverless Flink a Developer's Best Friend, Protecting Sensitive Data, and More

The Q3 Cloud Bundle Launch comes to you from Current 2024, where data streaming industry experts have come together to show you why data streaming is critical today, especially in the age of AI, and how it will become even more important in shaping tomorrow’s businesses...

Producing Messages With a Schema in Confluent Cloud Console

If you are a developer looking for an easier way to test your apps on topics with schemas, this is for you! Now you can easily create a message with a topic schema directly from the Confluent Cloud Console, with built-in validation and error checking.

How Producers Work: Kafka Producer and Consumer Internals, Part 1

The beauty of Kafka as a technology is that it can do a lot with little effort on your part. In effect, it’s a black box. But what if you need to see into the black box to debug something? This post shows what the producer does behind the scenes to help prepare your raw event data for the broker.

Let Flink Cook: Mastering Real-Time Retrieval-Augmented Generation (RAG) with Flink

With AI model inference in Flink SQL, Confluent allows you to simplify the development and deployment of RAG-enabled GenAI applications by providing a unified platform for both data processing and AI tasks. Learn how you can use it to build a RAG-enabled Q&A chatbot using real-time airline data.

Unlock Real-Time Value from DynamoDB Data with Confluent's CDC Source Connector

62% of Confluent Cloud clusters run on AWS. Meanwhile, hundreds of thousands of customers are using DynamoDB. This blog explains how the connector helps customers integrate the two platforms.