Confluent Blog

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method to connect database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Contributing to Apache Kafka®: How to Write a KIP

Learn how to contribute to open source Apache Kafka by writing Kafka Improvement Proposals (KIPs) that solve problems and add features! Read on for real examples.

Mock APIs vs. Real Backends – Getting the Best of Both Worlds

When building API-driven web applications, there is one key metric that engineering teams should minimize: the blocked factor. The blocked factor measures how much time developers spend in the following […]

Introducing Confluent Developer

Today, I am pleased to announce the launch of Confluent Developer, the one and only portal for everything you need to get started with Apache Kafka®, Confluent Platform, and Confluent […]

99th Percentile Latency at Scale with Apache Kafka

Fraud detection, payment systems, and stock trading platforms are only a few of many Apache Kafka® use cases that require both fast and predictable delivery of data. For example, detecting […]

Turning Data at REST into Data in Motion with Kafka Streams

The world is changing fast, and keeping up can be hard. Companies must evolve their IT to stay modern, providing services that are more and more sophisticated to their customers. […]

Celebrating Over 100 Supported Apache Kafka Connectors

We just released Confluent Platform 5.4, which is one of our most important releases to date in terms of the features we’ve delivered to help enterprises take Apache Kafka® and […]

Apache Kafka as a Service with Confluent Cloud Now Available on Azure Marketplace

Less than six months ago, we announced support for Microsoft Azure in Confluent Cloud, which allows developers using Azure as a public cloud to build event streaming applications with Apache […]

Announcing ksqlDB 0.7.0

We are pleased to announce the release of ksqlDB 0.7.0. This release features highly available state, security enhancements for queries, a broadened range of language/data expressions, performance improvements, bug fixes, […]

Seamless SIEM – Part 2: Anomaly Detection with Machine Learning and ksqlDB

We talked about how easy it is to send osquery logs to the Confluent Platform in part 1. Now, we’ll consume streams of osquery logs, detect anomalous behavior using machine […]

Integrating Elasticsearch and ksqlDB for Powerful Data Enrichment and Analytics

Apache Kafka® is often deployed alongside Elasticsearch to perform log exploration, metrics monitoring and alerting, data visualisation, and analytics. It is complementary to Elasticsearch but also overlaps in some ways, […]

Seamless SIEM – Part 1: Osquery Event Log Aggregation and Confluent Platform

Osquery (developed by Facebook) is an open source tool used to gather audit log events from an operating system (OS). What’s unique about osquery is that it uses basic SQL […]

Building a Materialized Cache with ksqlDB

When a company becomes overreliant on a centralized database, a world of bad things starts to happen. Queries become slow, taxing an overburdened execution engine. Engineering decisions come to a […]

Kafka Summit London 2020 Agenda, Keynotes, and Other News

Do you make New Year’s resolutions? The most I personally hear about them is people making a big show about how they don’t do them. And sure enough, I don’t […]

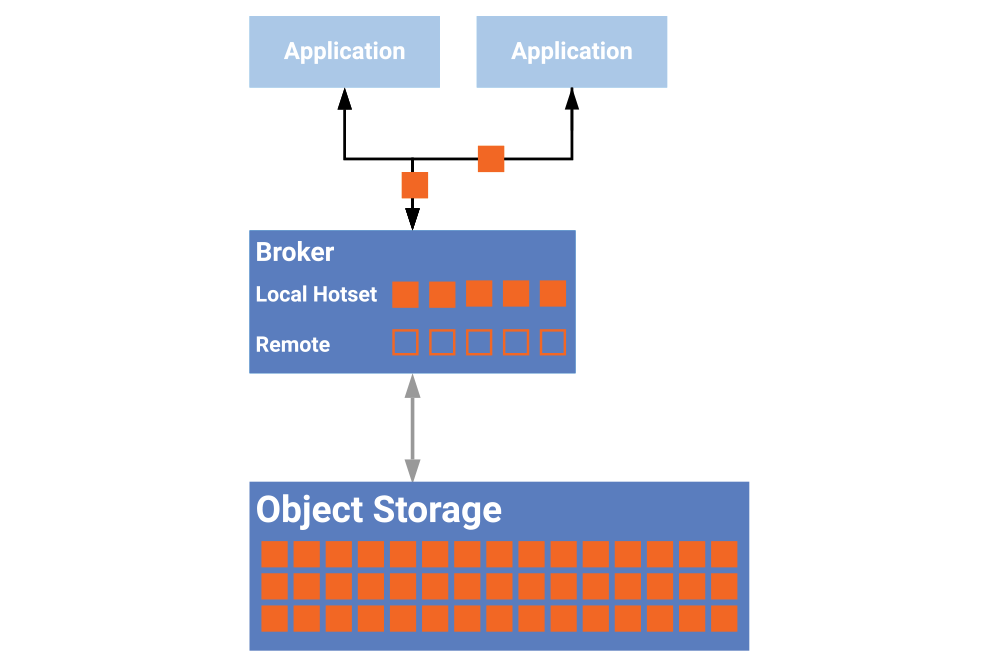

Streaming Machine Learning with Tiered Storage and Without a Data Lake

The combination of streaming machine learning (ML) and Confluent Tiered Storage enables you to build one scalable, reliable, but also simple infrastructure for all machine learning tasks using the Apache […]

Infinite Storage in Confluent Platform

A preview of Confluent Tiered Storage is now available in Confluent Platform 5.4, enabling operators to add an additional storage tier for data in Confluent Platform. If you’re curious about […]