Confluent Blog

Data Products, Data Contracts, and Change Data Capture

Change data capture is a popular method for connecting database tables to data streams, but it comes with drawbacks. The next evolution of the CDC pattern, first-class data products, provides resilient pipelines that support both real-time and batch processing while isolating upstream systems...

Unlock Cost Savings with Freight Clusters–Now in General Availability

Confluent Cloud Freight clusters are now Generally Available on AWS. In this blog, learn how Freight clusters can save you up to 90% at GBps+ scale.

Unleash Real-Time Agentic AI: Introducing Streaming Agents on Confluent Cloud

Build event-driven agents on Apache Flink® with Streaming Agents on Confluent Cloud: fresh context, MCP tool calling, real-time embeddings, and enterprise governance.

Disaster Recovery in 60 Seconds: A POC for Seamless Client Failover on Confluent Cloud

Kafka client failover is hard. This post proposes a gateway‑orchestrated pattern: use Confluent Cloud Gateway plus Cluster Linking to reroute traffic, reverse replication, and enable one‑click failover/failback with minimal RTO.

Focal Systems: Boosting Store Performance with an AI Retail Operating System and Real-Time Data

Discover how Focal Systems uses computer vision, AI, and data streaming to improve retail store performance, shelf availability, and real-time inventory accuracy.

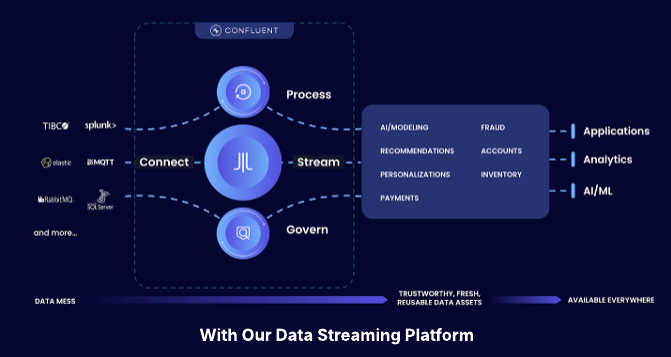

Streaming Data Integration with Apache Kafka®

Streaming data integration supports enriched, reusable, canonical streams that can be transformed, shared, or replicated to multiple destinations, not just one.

Cloud API Keys vs Resource-Specific API Keys in Confluent Cloud

Learn the difference between cloud API keys and resource-specific API keys in Confluent Cloud, plus best practices for service accounts and production security.

Empowering Customers: The Role of Confluent’s Trust Center

Learn how the Confluent Trust Center helps security and compliance teams accelerate due diligence, simplify audits, and gain confidence through transparency.

How a Tier‑1 Bank Tuned Apache Kafka® for Ultra‑Low‑Latency Trading

A global investment bank and Confluent used Apache Kafka to deliver sub-5ms p99 end-to-end latency with strict durability. Through disciplined architecture, monitoring, and tuning, they scaled from 100k to 1.6M msgs/s (messages under 5KB) while preserving ordering and keeping tail latency transparent.

Thunai Automates Customer Support with AI Agents and Data Streaming

Learn how Thunai uses real-time data streaming to power agentic AI, achieving 70–80% L1 support deflection and cutting resolution time from hours to minutes.

Starting With Purpose: In-Person Onboarding in a Remote-First World

How Confluent blends remote-first flexibility with in-person onboarding to build connection, purpose, and long-term employee success from day one.

Confluent Cloud Is Your Life (K)Raft Away From Hosted Apache Kafka®

ZooKeeper is going away. Learn why hosted Kafka migrations are accelerating—and how Confluent Cloud simplifies your move with KRaft and KCP.

The Current Status of Current

Find out what’s next for Current 2026—including the role of the event in the growing data streaming community and updates to plans for Asia-Pacific and United States events.

How to Build a Custom Kafka Connector – A Comprehensive Guide

Learn how to build a custom Kafka connector, an essential skill for anyone working with Apache Kafka® in real-time data streaming environments that span a wide variety of data sources and sinks.

Why Managing Your Apache Kafka® Schemas Is Costing You More Than You Think

Manual schema management in Apache Kafka® leads to rising costs, compatibility risks, and engineering overhead. See how Confluent lowers your total cost of ownership for Kafka with Schema Registry and more.

Confluent Recognized in 2025 Gartner® Magic Quadrant™ for Data Integration Tools

Confluent is recognized in the 2025 Gartner Data Integration Tools MQ. While we are valued for our execution, we are running a different race. Learn how we are defining the data streaming platform category with our Apache Flink® service and Tableflow to power the modern real-time enterprise.

Why Cluster Rebalancing Counts More Than You Think in Your Apache Kafka® Costs

Apache Kafka® cluster rebalancing seems routine, but it drives hidden costs in time, resources, and cloud spend. Learn how Confluent helps reduce your Kafka total cost of ownership.